12 minute read · December 15, 2023

Using Dremio to Reduce Your Snowflake Data Warehouse Costs

· Senior Tech Evangelist, Dremio

DOWNLOAD WHITEPAPER: Reduce Analytics TCO by 50% with a Data Lakehouse

Snowflake has emerged as a powerful and flexible solution for organizations seeking to manage and analyze their data efficiently. However, as the volume and complexity of data grow, so do the associated costs. Snowflake's pay-as-you-go pricing model is flexible, but it can also lead to unforeseen spending spikes, leaving organizations struggling to keep their data warehousing budgets in check.

Understanding and managing these costs is paramount. This is where Dremio, a data lakehouse platform designed for high-performance data access and query acceleration, comes into play. In this guide, we will examine what drives Snowflake warehouse costs and how Dremio can help bring them under control.

Cost control isn't just about keeping expenses down; it's about ensuring that your organization's data infrastructure fits your budget without compromising performance or scalability. As we explore how Snowflake and Dremio work together, you will see how this combination can help tame runaway data warehouse costs while maintaining the standards your business demands.

In the sections that follow, we will break down the main components of Snowflake warehouse costs, introduce Dremio and the capabilities that make it cost-effective, and walk through practical approaches for using the two together to reduce and manage Snowflake spending.

So, if you've ever found yourself grappling with escalating Snowflake expenses or seeking a more efficient and cost-conscious way to leverage your data warehouse, read on. This guide is your roadmap to achieving cost control and performance optimization in the world of Snowflake data warehousing with the assistance of Dremio.

Understanding Snowflake Warehouse Costs

When organizations adopt Snowflake as their data warehousing solution, they often do so to leverage its scalability, performance, and flexibility. However, the pay-as-you-go pricing model of Snowflake, while advantageous in many ways, can also introduce cost challenges that need to be carefully managed. In this section, we'll dissect the factors that contribute to escalating costs in a Snowflake warehouse and explore how these costs can impact an organization's budget.

Data Storage Costs

One of the fundamental elements of a Snowflake data warehouse is data storage. Snowflake allows you to store vast amounts of data in a highly structured and organized manner. While this is an asset for data management, it can quickly become a cost driver as data volumes grow. Snowflake charges organizations based on the amount of data stored, which means that storing larger datasets or retaining historical data can lead to substantial storage costs over time.
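
To make the storage driver concrete, here is a minimal Python sketch of a monthly storage estimate. It models storage the way Snowflake bills it, on the average amount of compressed data stored each day over the month (Time Travel and Fail-safe data count toward that total). The per-TB rate is a hypothetical placeholder, since real rates depend on your cloud, region, and contract.

```python
# A minimal sketch of a monthly Snowflake storage estimate.
# ASSUMPTION: the rate below is a hypothetical placeholder, not a quoted price.
HYPOTHETICAL_RATE_PER_TB_MONTH = 23.0  # USD per TB per month (illustrative)


def monthly_storage_cost(daily_tb_stored: list[float],
                         rate_per_tb: float = HYPOTHETICAL_RATE_PER_TB_MONTH) -> float:
    """Estimate a month's storage bill from daily snapshots of compressed TB stored.

    The bill is modeled as the daily average of stored TB times a flat per-TB rate.
    """
    if not daily_tb_stored:
        return 0.0
    average_tb = sum(daily_tb_stored) / len(daily_tb_stored)
    return average_tb * rate_per_tb


# Usage: a table that starts at 10 TB and grows by 1 TB per day for 30 days,
# for example because historical data is never pruned.
growing = [10.0 + day for day in range(30)]
print(f"Estimated storage cost: ${monthly_storage_cost(growing):,.2f}")
```

Because the estimate tracks the daily average, data that is retained indefinitely keeps raising the bill month after month.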

Query Execution Costs

Snowflake's ability to process complex queries quickly is one of its key strengths. However, executing queries, especially those involving large datasets or complex operations, can be resource-intensive. Snowflake bills compute in credits for the time a virtual warehouse is running: billing is per second, with a 60-second minimum each time a warehouse starts or resumes, and each step up in warehouse size doubles the credits consumed per hour. As queries become more frequent or more demanding, requiring larger warehouses or longer run times, costs can escalate rapidly. This often surprises organizations, particularly when they experience unexpected spikes in query activity.
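
Snowflake bills warehouse compute per second, with a 60-second minimum each time a warehouse starts or resumes, and each larger standard warehouse size doubles the credits consumed per hour. A minimal Python sketch of that arithmetic follows. The function names are illustrative, and the model deliberately ignores idle time before auto-suspend and serverless features, both of which also consume credits.

```python
# Credits consumed per hour by standard Snowflake warehouses; each size doubles the rate.
CREDITS_PER_HOUR = {"XS": 1, "S": 2, "M": 4, "L": 8, "XL": 16,
                    "2XL": 32, "3XL": 64, "4XL": 128}


def credits_for_run(size: str, seconds_running: float) -> float:
    """Credits for one warehouse run: per-second billing with a 60-second minimum."""
    billable_seconds = max(60.0, seconds_running)
    return CREDITS_PER_HOUR[size] * billable_seconds / 3600.0


def total_credits(size: str, run_durations_seconds: list[float]) -> float:
    """Sum credits over separate runs, each of which resumes the warehouse."""
    return sum(credits_for_run(size, s) for s in run_durations_seconds)


# Ten 30-second dashboard refreshes that each wake a Medium warehouse are billed as
# ten 60-second minimums: twice the credits of the same work run back-to-back.
bursty = total_credits("M", [30.0] * 10)
batched = credits_for_run("M", 300.0)
print(f"bursty: {bursty:.3f} credits, batched: {batched:.3f} credits")
```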

Data Transfer Costs

Data transfer costs arise when data crosses network boundaries. Snowflake charges when data leaves an account's region or cloud, for example when unloading data to another region or replicating it between Snowflake regions and accounts, and cloud providers charge egress fees when data leaves the region where it is stored. These costs can accumulate when organizations regularly ingest data from external sources, perform ETL (extract, transform, and load) processes, or replicate data across different Snowflake environments. They often take the form of cloud-provider egress fees when you ETL data from the data lake into the data warehouse, particularly when the two are in different regions or clouds. Left unmanaged, data transfers can lead to significant expenses that impact the overall budget.
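
A rough transfer estimate follows simple arithmetic: gigabytes moved times a per-GB rate for the network path. In this Python sketch, the rate table and path names are hypothetical placeholders; actual egress pricing differs by provider, region pair, and volume tier.

```python
# HYPOTHETICAL per-GB egress rates (USD) by network path; replace with your provider's pricing.
HYPOTHETICAL_EGRESS_PER_GB = {
    "same_region": 0.00,
    "cross_region": 0.02,
    "cross_cloud": 0.09,
}


def monthly_transfer_cost(daily_gb: float, path: str, days: int = 30) -> float:
    """Estimate a month of recurring ETL transfer cost for one network path."""
    return daily_gb * days * HYPOTHETICAL_EGRESS_PER_GB[path]


# A nightly 500 GB load from the lake into the warehouse, priced for each path.
for path in HYPOTHETICAL_EGRESS_PER_GB:
    print(f"{path}: ${monthly_transfer_cost(500.0, path):,.2f} per month")
```

Whatever the real rates are, the cost scales with every recurring copy, so avoiding the copy avoids the charge entirely.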

Concurrency Costs

Concurrency refers to the number of queries running simultaneously in a Snowflake warehouse. Snowflake lets organizations handle higher concurrency by sizing warehouses up or by configuring multi-cluster warehouses (an Enterprise Edition feature) that start additional clusters when queries begin to queue. Each additional running cluster consumes credits, so higher concurrency comes with higher costs. Managing concurrency efficiently is crucial, as bursts of concurrent queries can drive up expenses, especially during peak usage times.
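
The cost effect of a multi-cluster warehouse scaling out can be approximated with a simplified Python model. It assumes a fixed number of queries each cluster serves before queries start to queue (the hypothetical `queries_per_cluster` parameter; in Snowflake, actual scaling depends on the warehouse's scaling policy and workload), and it treats the warehouse as running for every hour in the load profile.

```python
import math


def clusters_needed(concurrent_queries: int, queries_per_cluster: int,
                    min_clusters: int = 1, max_clusters: int = 1) -> int:
    """Clusters a multi-cluster warehouse would run for a given concurrency level.

    Demand beyond max_clusters is not served by more clusters; those queries queue.
    """
    wanted = math.ceil(concurrent_queries / queries_per_cluster)
    return max(min_clusters, min(wanted, max_clusters))


def credits_for_load(hourly_concurrency: list[int], credits_per_cluster_hour: float,
                     queries_per_cluster: int, max_clusters: int) -> float:
    """Total credits across hours, charging each running cluster for the full hour."""
    return sum(
        clusters_needed(q, queries_per_cluster, 1, max_clusters) * credits_per_cluster_hour
        for q in hourly_concurrency
    )


# A Medium warehouse (4 credits per cluster-hour) over four hours, peaking at 40 queries.
profile = [4, 20, 40, 8]
print(credits_for_load(profile, 4.0, queries_per_cluster=8, max_clusters=1))  # 16.0
print(credits_for_load(profile, 4.0, queries_per_cluster=8, max_clusters=3))  # 32.0
```

The two results show the trade-off: the single-cluster warehouse costs less but queues queries at peak, while scaling out serves them at the price of extra cluster-hours.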

Impact on an Organization's Budget

The impact of these cost factors on an organization's budget can be substantial. Uncontrolled data storage can lead to unexpected overages, query execution costs can result in budget overruns during intense analytical workloads, data transfer costs can eat into financial resources, and concurrency costs can strain the budget when not carefully managed.

Moreover, when Snowflake's costs spiral out of control, they can divert financial resources from other critical projects and initiatives. This can hinder an organization's ability to invest in innovation, research, and development, ultimately affecting its competitive edge.

In the following sections, we will explore how Dremio can play a pivotal role in mitigating these escalating Snowflake warehouse costs and provide strategies to help organizations regain control over their data warehousing budgets.

What Is Dremio

As organizations grapple with the complexities of managing and extracting insights from vast and diverse datasets, the need for a high-performance data platform becomes increasingly vital. Dremio, a data lakehouse platform, is designed to change how that data is accessed and analyzed.

Dremio's Role as a Data Lakehouse Platform

Dremio is more than just a query engine. It acts as an intermediary layer between your data sources and the tools and applications that need access to that data, and it is designed to make data access and query acceleration as seamless and efficient as possible.

How Dremio Enables Data Acceleration and Simplifies Data Access

Apache Arrow-based query engine: At the heart of Dremio is a query engine built on Apache Arrow, an in-memory columnar data format. Processing data column by column in memory lets the engine operate on batches of values at once, which speeds up query execution on large datasets.

Data reflections: Dremio introduces a concept known as data reflections. These are optimized physical representations of your data, such as pre-aggregated summaries or sorted and partitioned copies, which can be created automatically or on demand and which Dremio's query optimizer uses in place of the underlying source data when they can answer a query. Data reflections can dramatically accelerate query performance and enable sub-second response times, which is particularly important for real-time business intelligence dashboards.
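
The idea behind an aggregate reflection can be illustrated in plain Python: summarize the raw rows once, then answer coarser GROUP BY questions from the much smaller summary. This is a conceptual sketch only. The function names are hypothetical, and in Dremio the reflection is defined, maintained, and substituted into queries by the engine rather than by user code.

```python
from collections import defaultdict


def build_aggregate(rows, dimensions, measure):
    """Pre-aggregate raw rows once: SUM and COUNT of `measure` per dimension combination."""
    summary = defaultdict(lambda: [0.0, 0])
    for row in rows:
        key = tuple(row[d] for d in dimensions)
        summary[key][0] += row[measure]
        summary[key][1] += 1  # COUNT is kept so averages can also be rolled up
    return dict(summary)


def rollup_sum(summary, dimensions, group_by):
    """Answer SUM(measure) GROUP BY `group_by` from the summary, without rescanning raw rows."""
    position = dimensions.index(group_by)
    totals = defaultdict(float)
    for key, (total, _count) in summary.items():
        totals[key[position]] += total
    return dict(totals)


raw = [
    {"region": "EU", "product": "a", "amount": 10.0},
    {"region": "EU", "product": "b", "amount": 5.0},
    {"region": "US", "product": "a", "amount": 7.0},
    {"region": "EU", "product": "a", "amount": 1.0},
]
dims = ["region", "product"]
summary = build_aggregate(raw, dims, "amount")  # 3 summary rows instead of 4 raw rows
print(rollup_sum(summary, dims, "region"))      # {'EU': 16.0, 'US': 7.0}
```

With millions of raw rows and only a few thousand dimension combinations, the roll-up touches only the summary, which is where the speedup comes from.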

Columnar Cloud Cache (C3): Dremio employs a columnar cloud cache that keeps frequently accessed data from cloud object storage on the local storage of its compute nodes. Serving repeat reads from this cache reduces the number of network requests to object storage, which lowers data access costs and improves query speed.

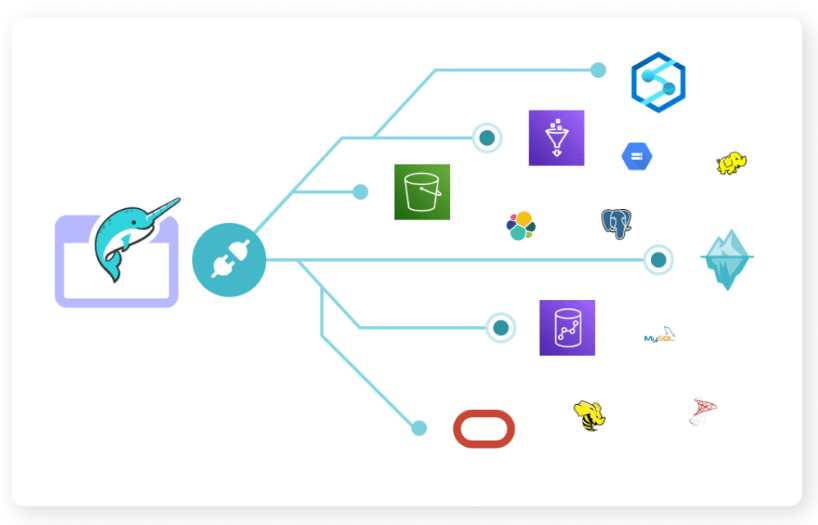
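
A local cache of column chunks is the general technique behind this kind of caching. The toy LRU cache below, written in Python, counts how many reads actually reach object storage. The class, the chunk key of (file, column, row group), and the fetch callback are illustrative assumptions, not Dremio's C3 implementation.

```python
from collections import OrderedDict
from typing import Callable


class ColumnChunkCache:
    """Toy LRU cache for column chunks fetched from cloud object storage."""

    def __init__(self, capacity: int, fetch: Callable[[tuple], bytes]):
        self.capacity = capacity        # maximum number of chunks kept locally
        self.fetch = fetch              # called on a miss to read the chunk remotely
        self.remote_reads = 0           # each miss costs one object-storage request
        self._entries = OrderedDict()   # key -> bytes, least to most recently used

    def get(self, file: str, column: str, row_group: int) -> bytes:
        key = (file, column, row_group)
        if key in self._entries:
            self._entries.move_to_end(key)      # cache hit: mark as most recently used
            return self._entries[key]
        self.remote_reads += 1                  # cache miss: go to object storage
        data = self.fetch(key)
        self._entries[key] = data
        if len(self._entries) > self.capacity:
            self._entries.popitem(last=False)   # evict the least recently used chunk
        return data


# Usage: a dashboard that reads the same two columns on every refresh.
cache = ColumnChunkCache(capacity=4, fetch=lambda key: b"column bytes")
for _refresh in range(10):
    cache.get("sales.parquet", "region", 0)
    cache.get("sales.parquet", "amount", 0)
print(cache.remote_reads)  # 2
```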

Versatile data source connectivity: Dremio is not confined to a single data source; it's designed to connect to a wide array of data sources. Whether you're dealing with databases, data lakes, data warehouses like Snowflake, or even semi-structured data formats, Dremio can seamlessly connect to them. This versatility ensures that your organization can leverage all its data assets without the hassle of complex integrations.

In essence, Dremio acts as a unified data access layer, making data from various sources readily available, optimizing queries for exceptional speed, and simplifying the process of accessing and analyzing data. Its ability to work with Apache Arrow, create data reflections, and manage data caches sets it apart as a powerful tool for organizations looking to maximize the value of their data while keeping costs in check.

How Dremio Helps Control Snowflake Costs

Snowflake's flexible pricing comes with the risk of unexpected bills. Fortunately, Dremio offers several ways to help organizations take control of their Snowflake expenses while improving data access and performance.

Approaches to Reducing Snowflake Costs

Reducing data movement: One of the primary cost drivers in Snowflake is data movement. Each time data is loaded into Snowflake, the organization pays to store another copy of it, pays for the compute that runs the load, and may incur data transfer fees. Dremio offers an alternative by acting as a data virtualization layer: instead of moving data into Snowflake, you connect Snowflake and your other data sources directly to Dremio. You can then combine your Snowflake data with data from other sources without physically copying that data into Snowflake. Reducing data movement minimizes storage costs and eliminates unnecessary data transfer expenses.
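
The effect of querying data where it lives, rather than copying it first, can be imitated with Python's built-in `sqlite3` module. Here, two separate in-memory databases stand in for two sources (a warehouse and a lake), and one SQL query joins them without moving rows from one into the other. This is only an analogy: Dremio federates across real systems such as Snowflake and object storage, and the table names here are hypothetical.

```python
import sqlite3


def revenue_by_region() -> list[tuple[str, float]]:
    """Join tables living in two separate databases with one query, copying nothing."""
    conn = sqlite3.connect(":memory:")                  # stands in for the warehouse
    conn.execute("ATTACH DATABASE ':memory:' AS lake")  # stands in for the data lake
    conn.execute("CREATE TABLE main.customers (id INTEGER PRIMARY KEY, region TEXT)")
    conn.execute("CREATE TABLE lake.orders (customer_id INTEGER, amount REAL)")
    conn.executemany("INSERT INTO main.customers VALUES (?, ?)", [(1, "EU"), (2, "US")])
    conn.executemany("INSERT INTO lake.orders VALUES (?, ?)",
                     [(1, 10.0), (1, 5.0), (2, 7.0)])
    rows = conn.execute(
        """
        SELECT c.region, SUM(o.amount)
        FROM main.customers AS c
        JOIN lake.orders AS o ON o.customer_id = c.id
        GROUP BY c.region
        ORDER BY c.region
        """
    ).fetchall()
    conn.close()
    return rows


print(revenue_by_region())  # [('EU', 15.0), ('US', 7.0)]
```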

Offloading workloads: Snowflake's compute costs can be a significant portion of the budget. Dremio can help by taking over some workloads that would typically run on Snowflake and running them directly against the data lake. These workloads then use Dremio's compute in the data lake environment instead of Snowflake's more expensive warehouse compute. Because the data stays in the lake, offloaded workloads also avoid the storage and data transfer costs of loading that data into Snowflake.
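
A simple way to reason about offloading is to compare the monthly bill under two routing policies. The Python sketch below rests on simplifying assumptions that are labeled in the code: hypothetical hourly rates, and the same number of compute hours for a workload on either engine, which real workloads will not match exactly.

```python
from dataclasses import dataclass

# HYPOTHETICAL hourly rates (USD) for illustration only; substitute your own figures.
HOURLY_RATE = {"snowflake": 12.0, "lake_engine": 5.0}


@dataclass
class Workload:
    name: str
    data_location: str    # "warehouse" or "lake"
    compute_hours: float  # ASSUMPTION: the same on either engine


def all_in_snowflake(workload: Workload) -> str:
    return "snowflake"


def offload_lake_workloads(workload: Workload) -> str:
    """Run lake-resident workloads on the lake engine; keep warehouse-resident ones in Snowflake."""
    return "lake_engine" if workload.data_location == "lake" else "snowflake"


def monthly_cost(workloads: list[Workload], router) -> float:
    return sum(w.compute_hours * HOURLY_RATE[router(w)] for w in workloads)


workloads = [
    Workload("bi_dashboards", "lake", 100.0),
    Workload("finance_reporting", "warehouse", 20.0),
]
print(monthly_cost(workloads, all_in_snowflake))        # 1440.0
print(monthly_cost(workloads, offload_lake_workloads))  # 740.0
```

The all-in-Snowflake figure also leaves out the cost of loading the lake data into Snowflake in the first place, so the model understates the gap.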

Working with Apache Iceberg tables: Snowflake now supports externally managed Apache Iceberg tables, and Dremio supports Apache Iceberg tables as well. Because both engines can work with the same Iceberg tables, you can move some workloads on a given dataset to Dremio without copying the data, reducing the costs associated with Snowflake compute and storage. Sharing Iceberg tables between the two lets you choose where each workload on that data is processed.
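
Sharing one Iceberg table between engines is safe because a catalog tracks each table's current snapshot and accepts a new snapshot only if the writer started from the latest version. The toy Python model below illustrates that compare-and-swap idea. It is a simplification for intuition, not the Iceberg specification or either vendor's catalog API, and all names and paths are hypothetical.

```python
import threading
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Snapshot:
    snapshot_id: int
    data_files: tuple  # paths of the immutable data files in this version


class ToyCatalog:
    """Maps each table name to its current snapshot; commits use compare-and-swap."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._current: dict = {}

    def load(self, table: str) -> Snapshot:
        return self._current[table]

    def commit(self, table: str, expected: Optional[Snapshot], new: Snapshot) -> bool:
        with self._lock:
            if self._current.get(table) != expected:
                return False  # another writer committed first; this writer must retry
            self._current[table] = new
            return True


catalog = ToyCatalog()
v1 = Snapshot(1, ("s3://bucket/sales/part-0.parquet",))
catalog.commit("sales", None, v1)

# Two engines (hypothetical readers) load the same snapshot, so they read the same files.
seen_by_snowflake = catalog.load("sales")
seen_by_dremio = catalog.load("sales")

v2 = Snapshot(2, v1.data_files + ("s3://bucket/sales/part-1.parquet",))
print(catalog.commit("sales", v1, v2))              # True
print(catalog.commit("sales", v1, Snapshot(3, ())))  # False: based on a stale snapshot
```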

Additional Benefits

Beyond cost reduction, Dremio brings additional benefits to the table. Dremio's data virtualization capabilities enable organizations to federate data sources. You can easily leverage multiple data marketplaces with Dremio, such as AWS Data Exchange and the Snowflake Marketplace. By connecting and querying data from various marketplaces through Dremio, you gain a unified view of your data landscape without needing complex and costly data movement or integration projects.

Conclusion

Dremio, as a versatile data lakehouse platform, offers a path to cost control and performance optimization. By unifying data sources, using its Apache Arrow-based query engine, accelerating queries with data reflections, employing columnar cloud caching, and supporting a variety of data formats, Dremio streamlines data access and analysis while reducing Snowflake's cost complexities.

Through the approaches discussed (reducing data movement, offloading workloads, and utilizing Apache Iceberg tables), organizations can strategically manage Snowflake costs, strengthen budgetary control, and free up resources for innovation and growth. Additionally, Dremio's data virtualization capabilities extend your data reach to multiple marketplaces, giving you a unified view of your data landscape.

As the data-driven era evolves, cost-effective and performance-oriented solutions like Dremio become indispensable. Whether you've encountered escalating Snowflake expenses or aspire to make your data infrastructure align with budget constraints without compromising on quality, Dremio offers a roadmap to cost control and data-driven success in Snowflake data warehousing. Embrace this powerful combination and navigate the dynamic data landscape with confidence.
