
Starting Apache Arrow

Our CTO Jacques Nadeau sat down for a fireside chat with Wes McKinney, discussing the past, present, and future of Apache Arrow.

Apache Arrow combines the benefits of columnar data structures with in-memory computing. It delivers the performance benefits of these modern techniques while also providing the flexibility of complex data and dynamic schemas. And it does all of this in an open source and standardized way.
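To make "columnar data structures" concrete, here is a minimal sketch in plain Python. It does not use Arrow's API; the standard-library `array` module stands in for a typed, contiguous column, and the names `rows`, `ids`, `scores`, and `mean` are hypothetical and chosen only for illustration.

```python
from array import array

# Row-oriented layout: one Python object (here a tuple) per record.
rows = [(1, 9.5), (2, 7.25), (3, 8.0)]

# Column-oriented layout: one contiguous, typed buffer per field.
ids = array("q", (r[0] for r in rows))      # 64-bit signed integers
scores = array("d", (r[1] for r in rows))   # 64-bit floats


def mean(column):
    """Scan a single contiguous column; other fields are never touched."""
    return sum(column) / len(column)


mean_score = mean(scores)
print(mean_score)
```

An aggregate over `scores` reads only that column's buffer, which is the access pattern a columnar in-memory format is built around.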

Over the past few decades, databases and data analysis have changed dramatically.

With these trends in mind, a clear opportunity emerged for a standard in-memory representation that every engine can use—one that’s modern, takes advantage of all the new performance strategies that are available, and makes sharing of data across platforms seamless and efficient. This is the goal of Apache Arrow.

To use an analogy, consider traveling to Europe for vacation before the European Union (EU) was established. To visit five countries in seven days, you could count on spending a few hours at each border for passport control and losing some of your money’s value at every currency exchange. Working with in-memory data without Arrow is similar: serializing and deserializing data structures is enormously inefficient, and a copy is made in the process, wasting precious memory and CPU resources. In contrast, Arrow is like visiting Europe after the EU and the adoption of the euro as a common currency: you don’t wait at the border, and one currency is used everywhere.

Arrow itself is not a storage or execution engine. It is designed to serve as a shared foundation for the following types of systems:

Arrow consists of a number of connected technologies designed to be integrated into storage and execution engines. The key components of Arrow include:

As such, Arrow doesn’t compete with any of these projects. Its core goal is to work within each of them to provide increased performance and stronger interoperability. In fact, Arrow is built by the lead developers of many of these projects.

The faster a user can get to the answer, the faster they can ask other questions. High performance results in more analysis, more use cases and further innovation. As CPUs become faster and more sophisticated, one of the key challenges is making sure processing technology uses CPUs efficiently.

Arrow is specifically designed to maximize:

Cache locality, pipelining and superscalar operations frequently provide 10-100x faster execution performance. Since many analytical workloads are CPU-bound, these benefits translate into dramatic end-user performance gains. Here are some examples:

Arrow also promotes zero-copy data sharing. As Arrow is adopted as the internal representation in each system, one system can hand data directly to the other system for consumption. And when these systems are located on the same node, the copy described above can also be avoided through the use of shared memory. This means that in many cases, moving data between two systems will have no overhead.
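The idea of handing data over without copying can be sketched with Python's built-in `memoryview`. This is only an illustration of zero-copy sharing within one process, under the assumption that a typed `array` stands in for a column; it is not Arrow's API or its shared-memory mechanism, and all variable names are hypothetical.

```python
from array import array

# A "producer" owns a contiguous column of 64-bit integers.
column = array("q", [10, 20, 30, 40])

# A "consumer" receives a view of the same memory, not a copy.
view = memoryview(column)

# Slicing a memoryview is also zero-copy: it references the same buffer.
tail = view[2:]

# By contrast, constructing a new array makes an independent copy.
copied = array("q", column)

# Writes by the producer are visible through the views, which shows that
# they refer to the same underlying memory. The copy is unaffected.
column[3] = 99
column[0] = -1
shared_value = tail[1]

print(shared_value, view[0], copied[0])
```

The views observe the producer's writes while the copy keeps the old values, which is the difference between sharing a buffer and duplicating it.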

In-memory performance is great, but memory can be scarce. Arrow is designed to work even if the data doesn’t fit entirely in memory. The core data structure includes vectors of data and collections of these vectors (also called record batches). Record batches are typically 64KB-1MB, depending on the workload, and are usually bounded at 2^16 records. This not only improves cache locality, but also makes in-memory computing possible even in low-memory situations.
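Processing data in bounded batches can be sketched as follows. This is a simplified illustration in plain Python rather than Arrow's record batch implementation; the 2^16 bound comes from the paragraph above, while `iter_batches`, `MAX_BATCH_ROWS`, and the sample columns are hypothetical names introduced for the example.

```python
from array import array

MAX_BATCH_ROWS = 2 ** 16  # upper bound on records per batch, per the text


def iter_batches(columns, max_rows=MAX_BATCH_ROWS):
    """Yield dicts of column slices, each holding at most max_rows records."""
    n_rows = len(next(iter(columns.values())))
    for start in range(0, n_rows, max_rows):
        stop = min(start + max_rows, n_rows)
        # memoryview slices reference the original buffers without copying.
        yield {name: memoryview(col)[start:stop] for name, col in columns.items()}


columns = {
    "id": array("q", range(150_000)),
    "value": array("d", (i * 0.5 for i in range(150_000))),
}

batch_sizes = []
running_total = 0.0
for batch in iter_batches(columns):
    # Only one batch is "in flight" at a time.
    batch_sizes.append(len(batch["id"]))
    running_total += sum(batch["value"])

print(batch_sizes, running_total)
```

Because the aggregate is accumulated one batch at a time, the working set stays bounded by the batch size. In a real system the batches could be streamed from disk or the network instead of sliced from columns that are already in memory.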

With many big data clusters ranging from hundreds to thousands of servers, systems must be able to take advantage of the aggregate memory of a cluster. Arrow is designed to minimize the cost of moving data on the network. It utilizes scatter/gather reads and writes and features a zero serialization/deserialization design, allowing low-cost data movement between nodes. Arrow also works directly with RDMA-capable interconnects to provide a single memory grid for larger in-memory workloads.
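Scatter/gather I/O can be illustrated with the operating system's vectored read and write calls. The sketch below assumes a Unix-like platform, since `os.writev` and `os.readv` are not available on Windows, and uses a local pipe in place of a network connection. It shows the general technique only, not Arrow's transport layer, and the column names are hypothetical.

```python
import os
from array import array

ids = array("q", [1, 2, 3])
scores = array("d", [9.5, 7.25, 8.0])

read_fd, write_fd = os.pipe()
try:
    # Gather write: the kernel reads from both buffers in order; the
    # application never concatenates them into a temporary message.
    sent = os.writev(write_fd, [ids, scores])
finally:
    os.close(write_fd)

# Scatter read: fill two preallocated buffers directly from the stream.
recv_ids = array("q", [0] * 3)
recv_scores = array("d", [0.0] * 3)
try:
    received = os.readv(read_fd, [recv_ids, recv_scores])
finally:
    os.close(read_fd)

print(sent, received, list(recv_ids), list(recv_scores))
```

The bytes travel in the same layout the columns have in memory, so the receiving side needs no parsing step before it can use the values.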

Another major benefit of adopting Arrow, besides stronger performance and interoperability, is a level playing field among different programming languages. Traditional data sharing is based on IPC and API-level integrations. While this is often simple, it hurts performance when the user’s language is different from the underlying system’s language. Depending on the language and the set of algorithms implemented, translating data between languages can account for the majority of the processing time.

Arrow currently supports C, C++, Java, JavaScript, Go, Python, Rust and Ruby.

Lead developers from 14 major open source projects are involved in Arrow:

Many projects include Arrow in order to improve performance and take advantage of the latest optimizations. These projects include Drill, Impala, Kudu, Ibis, Spark and many others. Arrow improves performance for data consumers and data engineers — which means less time spent gathering and processing data, and more time spent analyzing it and coming to useful conclusions.

Dremio is a data lake engine that uses end-to-end Apache Arrow to dramatically increase query performance. Users simplify and accelerate access to their data in any of their sources, making it easy for teams to find datasets, curate data, track data lineage and more. Dremio helps companies get more value from their data, faster. Try Dremio today!


Apache Parquet is a columnar file format. Learn more about the Parquet data format and its advantages & considerations over when to use other formats.

read more

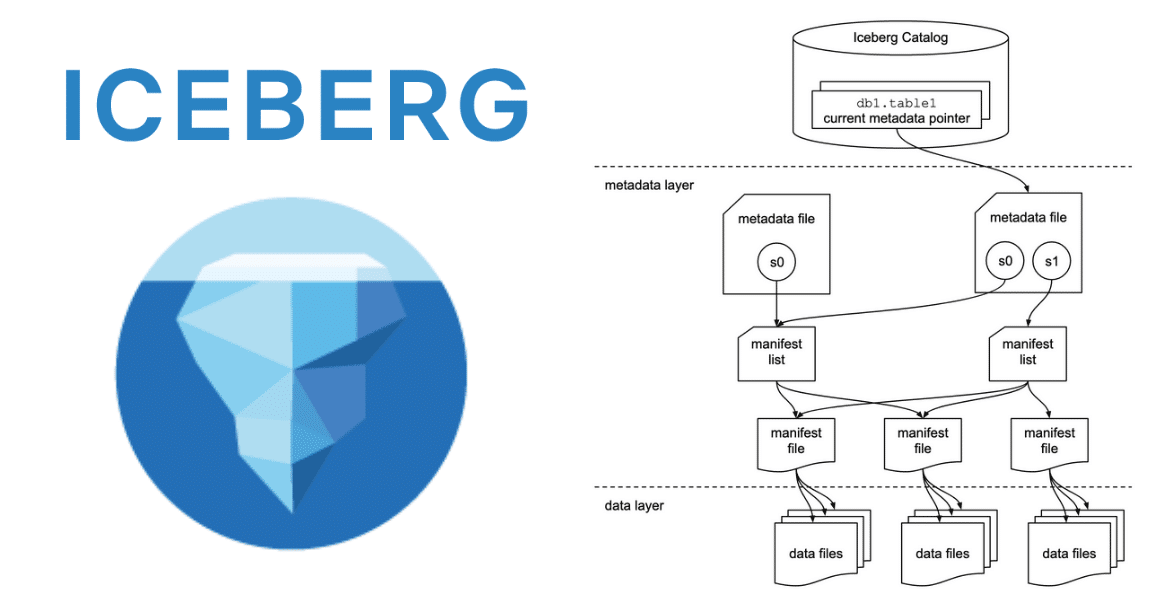

This article is an in-depth look at the problems Iceberg set out to solve and how Iceberg solves them under the covers.

read more