Featured Articles

Popular Articles

Product Insights from the Dremio Blog

One Click with Dremio’s Claude Connector Using MCP

Dremio Blog: Various Insights

Claude + Dremio: Instantly Connect and Start Getting Insights

Dremio Blog: Various Insights

Transform Financial Services Analytics: From Data Chaos to Autonomous Intelligence

Product Insights from the Dremio Blog

Optimize Supply Chain Analytics on Dremio Cloud

Browse All Blog Articles


Product Insights from the Dremio Blog

One Click with Dremio’s Claude Connector Using MCP

If your team manages both a warehouse and a lake, give Claude the context it needs to actually help you. Using a dedicated MCP server bridges the gap between powerful language models and your complex data architecture.

Dremio Blog: Various Insights

Claude + Dremio: Instantly Connect and Start Getting Insights

Today we're making it super simple to use Claude, Claude Cowork, and Claude Code with Dremio. The new Claude connector for Dremio Cloud lets anyone instantly connect Anthropic's Claude to their lakehouse.

Dremio Blog: Various Insights

Transform Financial Services Analytics: From Data Chaos to Autonomous Intelligence

The Data Crisis Crippling Financial Services: Most financial institutions today face the same bottleneck: fragmented, slow, and costly data systems that cripple the adoption of AI. Analysts wait hours for risk reports. Data engineers spend most of their time tuning queries instead of innovating. And autonomous AI agents hit performance walls and data inconsistencies that […]

Product Insights from the Dremio Blog

Optimize Supply Chain Analytics on Dremio Cloud

This tutorial shows you how to build a supply chain analytics pipeline on Dremio Cloud that unifies procurement, warehouse, and sensor data. You'll seed sample datasets, model them through Bronze, Silver, and Gold views, and use the AI Agent to evaluate supplier performance and inventory risk through natural language questions.

Product Insights from the Dremio Blog

Build Healthcare Analytics with Dremio Cloud

This tutorial shows you how to build a healthcare analytics pipeline on Dremio Cloud that unifies patient, claims, and prescription data in real time. You'll create sample datasets, model them into Bronze, Silver, and Gold views, and use the AI Agent to analyze readmission risk and cost patterns through natural language questions.

Product Insights from the Dremio Blog

Analyze Financial Services Data with Dremio Cloud

This tutorial shows you how to build a financial analytics pipeline on Dremio Cloud in 30 minutes. You'll seed sample banking, market, and compliance data, model it into a medallion architecture, and use the AI Agent to detect transaction anomalies and assess account risk through natural language questions.

Product Insights from the Dremio Blog

Build a Customer 360 View on Dremio Cloud

This tutorial walks you through building a complete Customer 360 view on Dremio Cloud, from signup to asking natural language questions about your customers. You'll seed sample data, model it through Bronze, Silver, and Gold views, enable AI-generated documentation, and use Dremio's built-in AI Agent to generate insights and charts. The entire process takes about 30 minutes with a free trial account.

Dremio Blog: Various Insights

STACKIT Dremio: A sovereign, open lakehouse service for Europe

Across Europe, organizations are modernizing analytics and AI while tightening requirements around data control, jurisdiction, and operational accountability. What customers are increasingly seeking is straightforward: the flexibility of open architectures, the speed needed for modern workloads, and the confidence that their service operations, support, and contracting align with European expectations. Today, I’m excited to share […]

Dremio Blog: Various Insights

Federated Semantics: The Foundation Enterprise Agentic AI Actually Needs

Most enterprise AI projects fail at the same place. Not the model. Not the infrastructure. The data. Specifically: the AI doesn't understand the data well enough to act on it accurately, and it can't reach most of the data anyway. These two problems compound each other. A model that can only see 30% of your […]

Dremio Blog: Open Data Insights

The best analytics platforms with native AI integrations in 2026

Discover leading AI-powered data analytics solutions and see how they enhance insights, automation, and enterprise decision-making.

Dremio Blog: Open Data Insights

Apache Iceberg vs Delta Lake: Which is right for your lakehouse?

Explore the key differences between Delta Lake and Iceberg, and learn how open data formats enable scalable, AI-ready lakehouse architectures.

Dremio Blog: Open Data Insights

Complete guide on semantic layer: Tools, benefits, and more

Explore how a universal semantic layer can unify your data sources, simplify analytics, and help you achieve better insights.

Dremio Blog: Open Data Insights

13 best unified data management solutions: Guide with comparisons

Explore how data unification works, discover leading solutions, and learn how Dremio can help your enterprise drive better business insights.

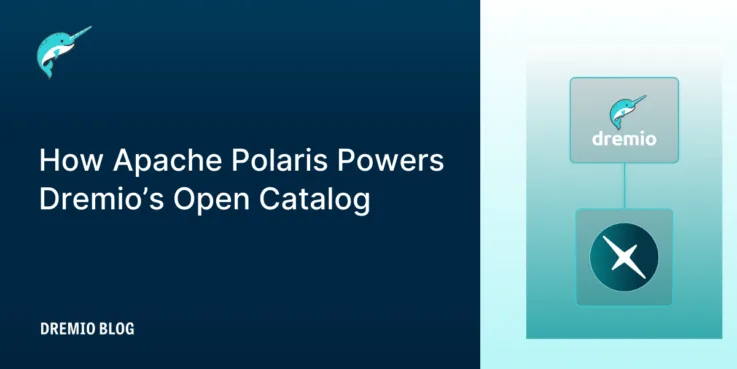

Product Insights from the Dremio Blog

How Apache Polaris Powers Dremio’s Open Catalog

Apache Polaris provides the open foundation, but enterprises running enterprise-wide, AI-driven platforms need more than a standards-compliant catalog service. They need enterprise governance, automation, and business context that AI systems can reason over. As original co-creator of Apache Polaris and one of the project’s largest contributors, Dremio uses Polaris as the foundation of Dremio’s Open […]

Dremio Blog: News Highlights

Apache Polaris Graduates to a Top-Level Apache Project

Apache Polaris is now a Top-Level Project at the Apache Software Foundation. For anyone building on Apache Iceberg, this is one of the most important catalog milestones since the REST Catalog spec itself.
