13 minute read · July 6, 2022

How We Got to Open Lakehouse

Senior Product Marketing Manager, Dremio

Teams from small startups to large enterprises rely on data to make strategic decisions, improve core product offerings, and enhance the customer experience. Becoming a data-driven organization is difficult because you have to create a data infrastructure that will handle your use cases over time.

If you have been around the analytics world, the terms “data warehouse”, “data lake”, and “lakehouse” may sound familiar. Each has been used over the years as a solution for data management.

While many data professionals have built their entire careers during the data warehousing era, others have started their careers in the cloud with data lakes as the foundation. As this landscape evolves over time, it is important to understand why the data warehouse was created and how we got to the open lakehouse.

What is a Data Warehouse?

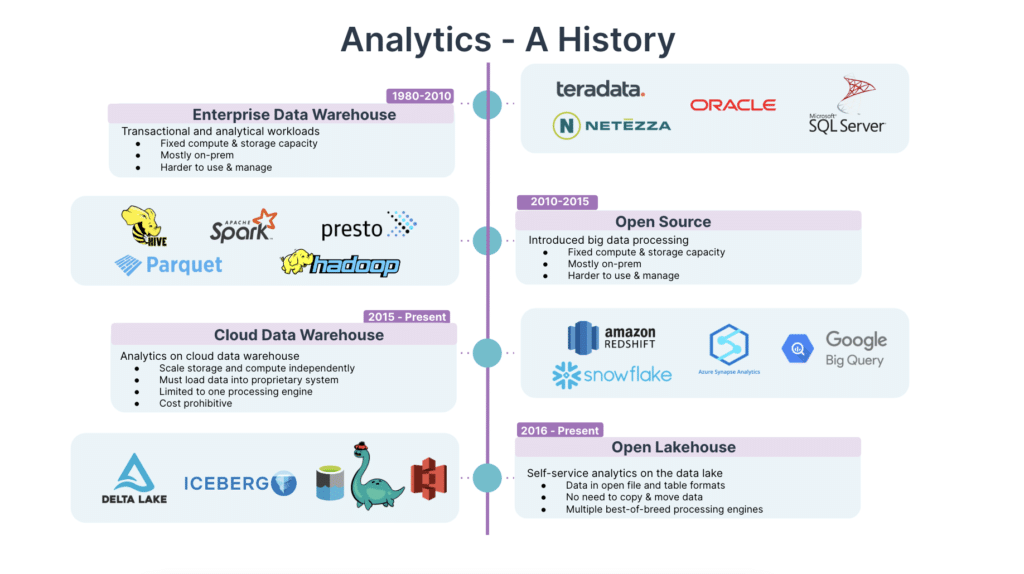

The enterprise data warehouse era started in the 1980s as a relational store for structured data. The data warehouse concept was the first in the market to help integrate disparate corporate data silos into one platform as the single source of truth. Enterprises used data warehouses to stitch together historical information from transactions, records, operations, and other applications to make data consumable for business users' analytics.

Multiple views of the data are needed.

As data warehouses gained adoption, organizations realized that each team needed its own customized view of the data, and this gave life to data marts. Sales, marketing, finance, and engineering each had their own way of looking at the data. Multiple copies of the data ended up in BI cubes and reporting tools for different lines of business.

Eventually, organizations ran into infrastructure inefficiencies with the data warehouse. On-premise data warehouses had fixed compute and storage capacity, so scaling required additional hardware. Once the licensing costs of the data warehouse and the additional software for reporting requirements were added on top, managing infrastructure became a nightmare. Getting data out of the data warehouse was difficult without interrupting production workloads, and companies were locked in to a specific proprietary vendor.

Another drawback of the data warehouse was that it was limited to structured, transactional data. As technology advanced, organizations found themselves unable to capture and analyze real-time data from smart devices and social media. They needed insights from semi-structured and unstructured data.

This created an opportunity for the data lake as a storage solution.

What is a Data Lake?

The early days of data lakes came around 2010, almost exclusively on Hadoop. Data lake deployment started on-prem as an alternative cost-effective storage solution to the data warehouse for sources that had large volumes, velocity, and variety of data.

Data lakes opened up more use cases.

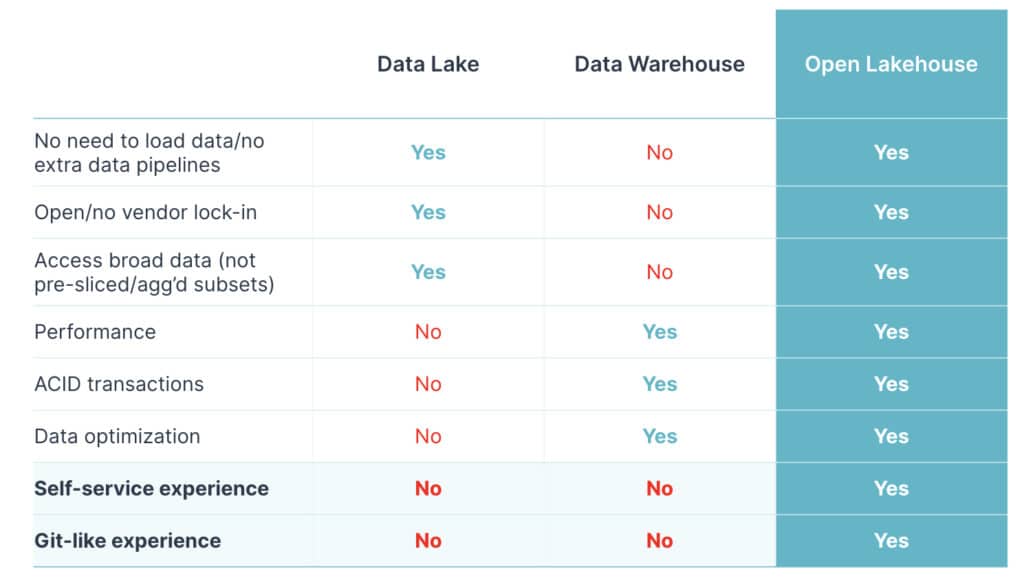

The data lake became a repository where all types of data could land. Access to a wider scope of data opened up use cases for data exploration that had not been possible in the data warehouse. Data scientists and analysts used the data lake for statistics and machine learning workloads.

But eventually, data lakes ran into infrastructure shortcomings similar to those of the data warehouse. As more data was loaded into the lake, organizations had to buy more hardware to keep up with the velocity. On top of the hardware costs, end users were not able to make decisions with data found in the lake because its performance was lackluster compared to the data warehouse. There was still a need for the SQL, relational functionality, and ACID transactions of the data warehouse.

What about cloud data lake + data warehouse?

In 2015, we saw a shift to the cloud data lake and data warehouse. Enterprises were modernizing on-premise data warehouses and data lakes to the cloud.

There were many benefits to adopting a cloud data lake and data warehouse infrastructure. Because the infrastructure was managed, teams could take advantage of cheap storage and the flexibility to handle massive volumes of data as they scaled. The raw processing power of the data warehouse helped meet reporting requirements.

With workloads in the cloud, it was hard to name a better duo than the cloud data lake and warehouse. But like its predecessor, the cloud data lake still did not provide a performant vehicle for analytics, and the data warehouse bill was starting to accumulate at an unmanageable rate. This modern analytics approach presented several challenges.

Complex data architecture: For many use cases, a cloud data lake and warehouse solution can create a very complex architecture to implement and maintain. Typically, an ETL process will move your source data into the lake, where it is stored and curated before it’s copied into a data warehouse. We know different teams will want their own view of the data, so additional ETL pipelines are created to move that data from the data warehouse to separate data marts and BI cubes. This is non-stop data copying just to provide a semantic layer.

Decreased Productivity: With data being more available than ever, data consumers want faster access. At the pace that data is growing, data requests from end-users are exceeding the available capacity for data delivery from IT. Data engineers are asked to maintain complex ETL processes and juggle competing data requests in the queue. This can end in burnout for both IT and business teams.

Vendor lock-in: Unlike data in the cloud data lake, any data stored in the data warehouse is locked into a proprietary format, meaning customers are tied to a specific vendor. What happens when organizations have multiple data warehouses across different cloud vendors? The warehouses don’t talk to each other, and the only way to share data is to copy it back into a data lake.

There are pros and cons to the cloud data lake and data warehouse approach. While it works for a lot of organizations, many find themselves repeating history from the on-premise days with a complex architecture and data lock-in from proprietary data warehouses. ETL processes are also becoming more difficult and brittle, and the only thing scaling is the cost of managed cloud services.

A simple architecture with the benefits of both a data lake and data warehouse is needed. In the next section, we will examine the “Open Lakehouse” concept.

What is an Open Lakehouse?

In 2016, the adoption of open-source software increased as it became an alternative to proprietary data warehouses. A popular and efficient way to address the challenges faced by cloud data warehouses and data lakes is to use open table formats.

What is an open table format? The biggest challenge with the data lake is that it lacks full data warehouse capabilities. The data is not optimized for analytics, so IT teams have to copy it into a data warehouse, which puts it in a proprietary format. More copies are later created in aggregate cubes and BI extracts. The open table format solves this problem.

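An open table format such as Apache Iceberg removes the additional step of copying your data into a warehouse. Your data may land in files like CSV, and you want to turn it into something more performant for analytics. Think of a table format as a layer on top of data files such as Parquet rather than a file format itself: it adds metadata that tracks which files make up a table, so engines can treat those files as a single table. All your data is owned by you in the data lake and stays there. There are many benefits, both tangible and intangible.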

Data warehouse in your data lake: Bring data warehouse features to your data lake, with capabilities and functionality similar to SQL tables in traditional databases.

- Transactional consistency between applications where files can be added, removed, or modified atomically, with full read isolation.

- Full schema evolution to track table changes over time.

- Time travel to query historical data.

- Support for data-as-code. Roll back to prior versions of your data to quickly correct issues and return tables to a previous state.
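To make the four capabilities above more concrete, here is a minimal sketch of the general idea behind them: a table is a log of immutable snapshots, and a commit publishes a new snapshot in one step. This is a toy in-memory model, not Apache Iceberg's implementation or API. The names `ToyTable`, `Snapshot`, `commit`, `as_of`, and `rollback`, as well as the file names, are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Iterable, List, Tuple


@dataclass(frozen=True)
class Snapshot:
    """Immutable description of the table at one point in time."""
    snapshot_id: int
    files: Tuple[str, ...]     # data files that make up the table
    columns: Tuple[str, ...]   # schema as a list of column names


class ToyTable:
    """Hypothetical stand-in for a table-format metadata log (illustration only)."""

    def __init__(self, columns: Iterable[str]) -> None:
        # Snapshot 0 is the empty table with its initial schema.
        self._history: List[Snapshot] = [Snapshot(0, (), tuple(columns))]
        self._current_id = 0

    def current(self) -> Snapshot:
        return self._history[self._current_id]

    def commit(self, add=(), remove=(), add_columns=()) -> int:
        """Add/remove files and evolve the schema as one all-or-nothing change."""
        base = self.current()
        to_remove = set(remove)
        missing = to_remove - set(base.files)
        if missing:
            # Fail before anything is published: readers never see a partial change.
            raise ValueError(f"cannot remove unknown files: {sorted(missing)}")
        files = tuple(f for f in base.files if f not in to_remove) + tuple(add)
        columns = base.columns + tuple(c for c in add_columns if c not in base.columns)
        snap = Snapshot(len(self._history), files, columns)
        self._history.append(snap)
        self._current_id = snap.snapshot_id  # single pointer swap publishes the commit
        return snap.snapshot_id

    def as_of(self, snapshot_id: int) -> Snapshot:
        """Time travel: read the table as it was at an earlier snapshot."""
        if not 0 <= snapshot_id < len(self._history):
            raise KeyError(f"no such snapshot: {snapshot_id}")
        return self._history[snapshot_id]

    def rollback(self, snapshot_id: int) -> None:
        """Point the table back at an earlier snapshot; history is kept."""
        self.as_of(snapshot_id)  # validates the id
        self._current_id = snapshot_id


table = ToyTable(["order_id", "amount"])
s1 = table.commit(add=["part-000.parquet"])
s2 = table.commit(add=["part-001.parquet"], remove=["part-000.parquet"],
                  add_columns=["region"])
print(table.current().files)    # ('part-001.parquet',)
print(table.as_of(s1).files)    # ('part-000.parquet',)
table.rollback(s1)
print(table.current().columns)  # ('order_id', 'amount')
```

Because snapshots are never modified after they are written, readers that hold an older snapshot are unaffected by later commits, which is the intuition behind read isolation in this sketch. Real table formats persist this metadata alongside the data files in the lake and handle concurrent writers, which this toy model does not attempt.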

No vendor lock-in: Your data doesn’t have to live in a proprietary data warehouse format anymore. Previously, only one engine could access your data. Now, your data is in an open table format and you can bring the engine of your choice for different use cases. Use the best engines available today and new engines that have yet to come.

- SQL for BI

- Spark for data science and machine learning

- Flink for real-time processing

Simplified architecture: As your organization grows, so does your data volume. Teams will have expanded use cases and will often need to bring together structured and unstructured data. The open lakehouse simplifies data architectures: data lands in your data lake and stays there.

- Get rid of unnecessary data movement and copies

- Prioritize self-service data governance, meet GDPR and compliance requirements

- Query your data where it lives

- Reduce infrastructure management costs

Increased productivity: With fewer data copies circulating around, data engineers will have less ETL pipeline maintenance work. They can focus on driving business value and bringing in critical data sets for their business counterparts. For data consumers, this creates a single source of governed metrics and business data, simplifying self-service BI.

By now, I hope you have a better understanding of how we went from the data warehouse to the open lakehouse.

There is no one-size-fits-all solution for data architectures. An open lakehouse architecture is the future of analytics, but it would be a stretch to tell teams to do away with a recently modernized data warehouse overnight.

These things take time to adopt. For now, the open lakehouse will live side-by-side with the data warehouse. Your use cases will determine which architecture to adopt.

Get Started with an Easy and Open Lakehouse Platform

Dremio’s open lakehouse platform is available as a fully managed cloud service with a forever-free tier. Dremio Cloud makes deploying an open data lakehouse architecture as easy as a cloud data warehouse. You can spin up Dremio Cloud in your AWS account and explore your data lake in minutes. Get started today.

If you are new to the open lakehouse, check out this video that breaks down what a lakehouse is and why you need one.