16 minute read · February 24, 2023

Dealing with Data Incidents Using the Rollback Feature in Apache Iceberg

· Developer Advocate, Dremio

Imagine you are a data engineer working for the platform engineering team of your company’s analytics team. Your responsibilities include building data pipelines and infrastructure to make data available and support analytical workflows such as business intelligence (BI) and machine learning (ML) across your organization. In the past, your analytical workloads used to run on top of a cloud data warehouse that presented limitations in running advanced analytics such as machine learning, and the cost factor associated with such a platform was super high. Not to mention, your data was locked into the specific warehouse’s storage, which the warehouse’s processing engine could only access.

Having dealt with these challenges, your team decides to adopt a new form of data architecture that can specifically target the shortfalls of your existing platform. Cloud data lakes are a usual first choice as they can store any type of data, i.e., structured and unstructured. However, the problem with data lakes is that there is no support for ACID transactions, and if the lake isn’t managed properly, it can become a data swamp, which would make it extremely difficult to derive insights. Data lakehouse is a new form of data architecture that caters to these caveats by combining the best of a data warehouse with a data lake.

In short, a lakehouse brings the following benefits to your organization:

- Stores data in open formats so it can be used by multiple engines

- Uses cost-effective cloud object stores for storing data

- Delivers transactional support with atomicity guarantees and consistency

- Support multiple analytical workloads such as BI and machine learning

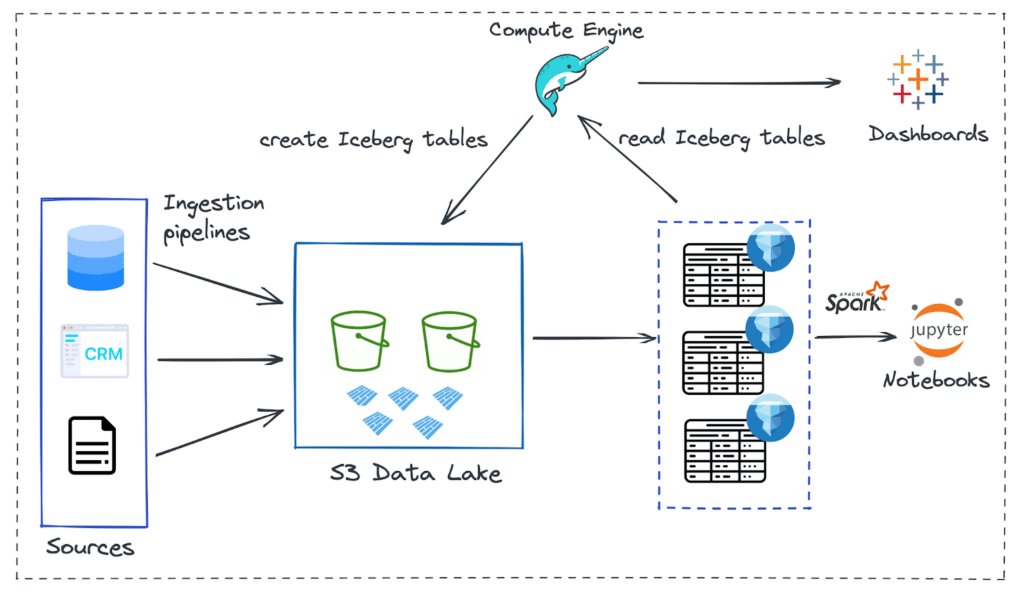

As of today, let’s say your architecture looks something like this at a high level:

And, the usual way of making data available across the organization for various analytical purposes is captured in these steps:

- First, the data engineering team ingests data from a multitude of sources via different ETL pipelines into a cloud data lake. In this case, it is Amazon S3 buckets.

- Then the raw data files, i.e., Parquet files, are converted into a specific data lake table format. Table formats help abstract the physical complexity of the data structure and bring in the ability to perform data warehouse-level transactions. Apache Iceberg is the suitable table format used in this architecture.

- To deal with reading and writing Iceberg tables on the data lake and to run ad hoc queries, there is the need for a compute engine. Dremio, an end-to-end lakehouse platform, is used for this specific purpose. It brings in capabilities such as a compute engine, metastore, and semantic layer and, most importantly, provides native connectivity with BI tools. The data engineering team usually manages the preparation of Iceberg tables and supplies them to data analysts/scientists.

- Finally, the Iceberg tables (datasets) are made available for consumption by applications for various analytical purposes. In this workflow, data analysts develop Tableau dashboards directly on the data lake via Dremio’s platform without the need for data copies. For ML workloads, data scientists can use other engines (such as Apache Spark) directly on the Iceberg datasets.

An important thing to note in this lakehouse architecture is the data lake table format used, i.e., Apache Iceberg. Iceberg is a high-performance, open table format for large-scale analytics. It brings capabilities such as ACID compliance, full schema evolution (using SQL), partition evolution, time travel, etc., that address some of the critical problems within data lakes and allow you to do all the things you would typically expect from a data warehouse.

One of the best things about the Iceberg table format is that it enables you to work simultaneously with various compute engines (such as Spark, Dremio, Flink, etc.) on the same data. So, depending on the use case, you can decide which engine to use. For the following demo, we will use Dremio Sonar as the query engine to deal with Iceberg tables.

Data Request for Building Dashboard

Now imagine a data analyst in your organization wants to build a new dashboard to do some customer churn analysis. To start their analysis, they need access to the relevant datasets. So, they log a new ticket for your data engineering team to get the data.

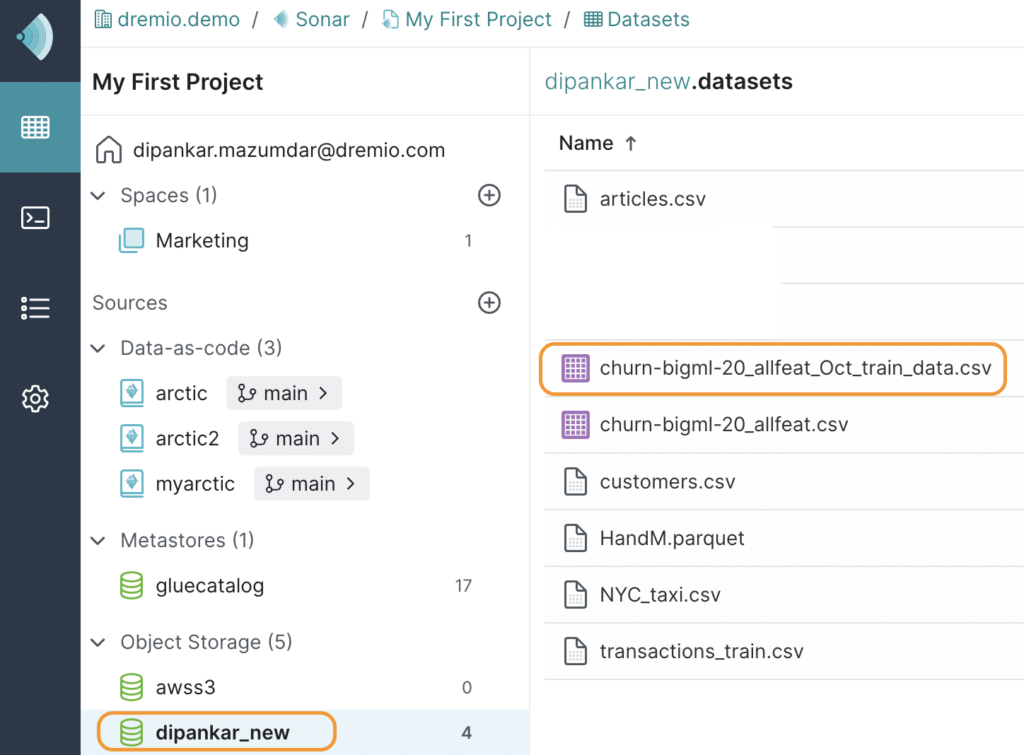

For this demonstration, assume that the relevant data files are already ETL'd into a data lake called dipankar_new and ready to be converted into Apache Iceberg tables. Upon accessing the dataset from Dremio UI, this is how it looks.

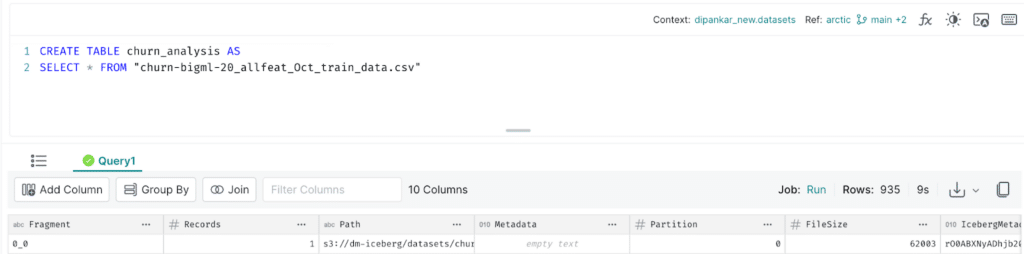

Now, to convert the churn-bigml-20-allfeat_Oct_train_data.csv dataset into an Iceberg table, you write a CTAS statement as shown below in Dremio:

CREATE TABLE churn_analysis AS SELECT * FROM "churn-bigml-20_allfeat_Oct_train_data.csv"

After the statement executes, you will have successfully created the first Iceberg table named churn_analysis.

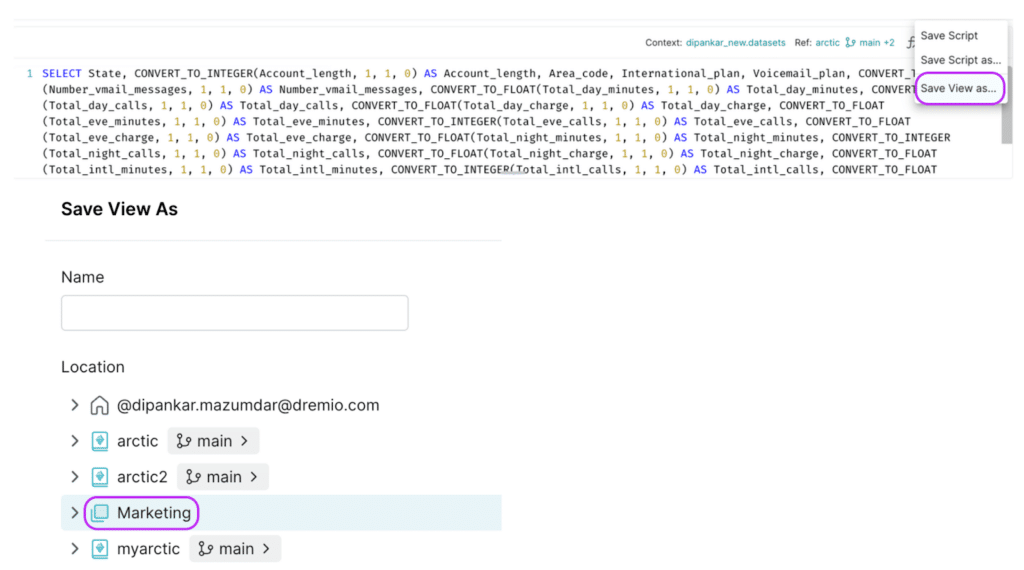

Next, you need to share this dataset with the analyst. Dremio allows you to quickly share datasets in a number of ways. However, the best approach is to create a virtual dataset (aka VDS) and save it to a specific space, i.e., a semantic layer that the analyst has access to. This is also when you can apply any transformation on the dataset. For this demo, we will just change the datatypes of a few columns and save it to a space called Marketing as shown below.

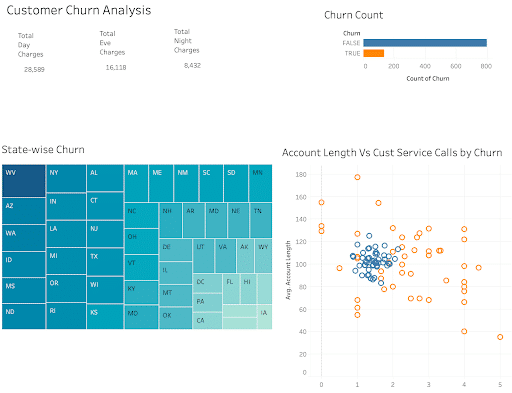

Finally, the analyst starts working on the dashboard and publishes it for their stakeholder’s analysis. This is what the dashboard looks like currently.

Data Write Mistakes

Now, let’s say you are working on a different ticket the next day, and it involves ingesting some new data to a couple of Iceberg tables in your data lake. You write the code and run a job. The job runs successfully, but upon verifying the job details, you realize that you have made a mistake and updated tables in the production environment instead of staging — that’s a horror story!

To add to that, another thing that you observe is that you have also updated the churn_analysis table based on this SQL statement.

INSERT INTO churn_analysis SELECT * FROM "churn-bigml-20_allfeat.csv"

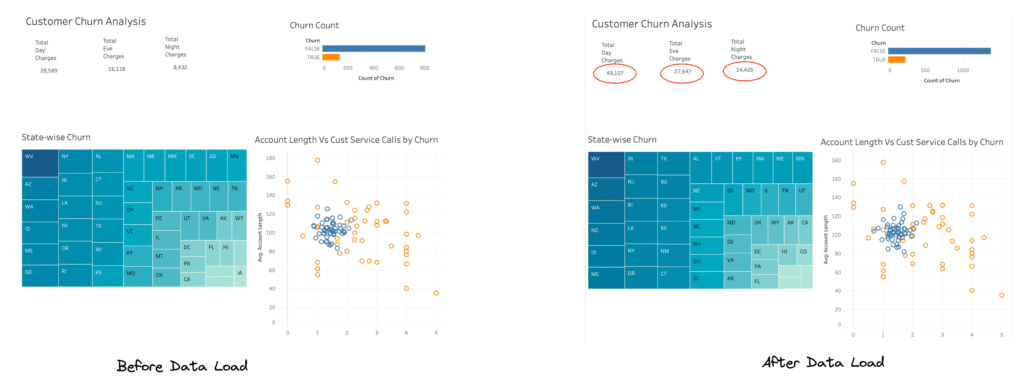

This is the same table the analyst used to develop the “Churn analysis” dashboard. Although it is not an uncommon scenario, the impact of this mistake is huge, especially for the stakeholders who make decisions based on this data. While you dig more into how this happened and what to do, you get a Severity-1 ticket from the same analyst who says the obvious: “The dashboard has completely different data now. Can you please investigate before I can present this to stakeholders?”. They also attached a screenshot of the dashboard with the unexpected numbers, as shown below.

On the brighter side, you know this was a mistake on your part, and exactly what tables were impacted in the data lake because of the job that you ran. This saves a lot of time in terms of debugging and figuring out the root cause. However, you are worried about the next steps to fix this situation. In your previous architecture, which was based on a cloud data warehouse, you could simply roll back the tables. So, the question now is, do you have similar capabilities with a data lakehouse?

The good news is that Apache Iceberg specifically brings these features to a lakehouse architecture and allows you to roll back tables to quickly correct problems by resetting tables to a previous good state. Note that Iceberg as a table format provides APIs to achieve this functionality; however, the responsibility of actually rolling back the tables lies in the hands of the compute engine. Therefore, the choice of engine is critical. In your case, Dremio is the engine, and it supports the operation of rolling back tables. There are precisely two ways to roll back tables: 1. Using the timestamp, or 2. Using the snapshot ID.

To start this operation, you need to first understand the history of the table churn_analysis and look at what happened. Iceberg provides access to these system tables for metadata querying and is super helpful in these types of scenarios. To do so, you run the following query in Dremio UI.

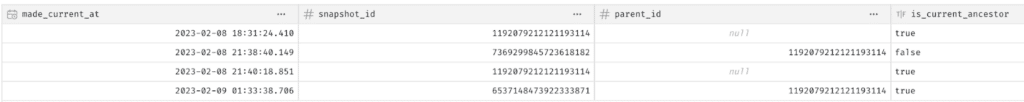

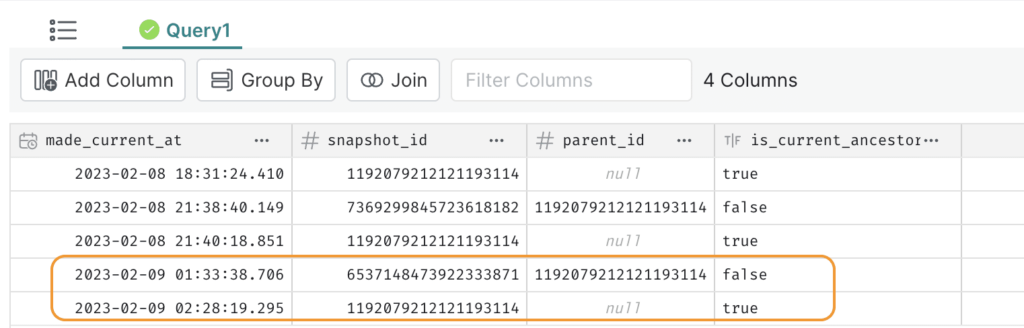

SELECT * FROM TABLE (table_history('churn_analysis'))This is what you see.

Based on the timestamp 2023-02-09 01:33:38.706, you identify that the last row led to the unintended INSERT operation in this production table. And the one above that with the timestamp 2023-02-08 21:40:18.851 is the correct state during which the analyst developed the dashboard.

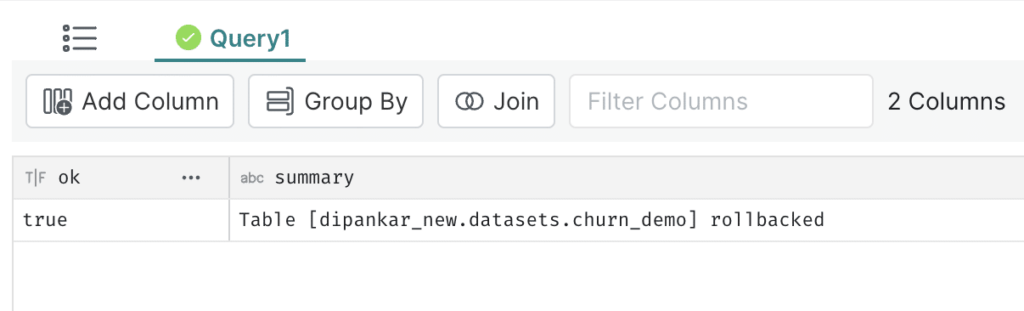

You now run the following command in Dremio to roll back the Iceberg table to its previous state.

ROLLBACK TABLE churn_analysis TO TIMESTAMP '2023-02-08 21:40:18.851'

The message shows that the table was rolled back. Upon running the table_history command again, you now see the following.

- The

is_current_ancestorcolumn is set to false for the snapshot that was created after the specified timestamp for rollback, i.e., 2023-02-08 21:40:18.851. - A new snapshot is created that makes it an ancestor.

You also run some count checks to verify the number of records. Finally, you ask the analyst to refresh the data source in Tableau to validate things on their end. The analyst confirms that the dashboard is now in a previously expected state and has no impact.

Conclusion

Data incidents like the one described in this blog are inevitable when multiple pipelines run in a data platform to make data available to the stakeholders. Table formats like Apache Iceberg deliver capabilities such as version rollback and time travel to efficiently handle these situations, ensuring no downstream impact. Ultimately, a data lakehouse brings all the advantages of open data architecture, allowing multiple analytical workloads to run in conjunction with significantly reduced costs. Check out these resources to learn more about the architecture of Apache Iceberg and its various capabilities:

- Apache Iceberg: An Architectural Look Under the Covers

- A Hands-On Look at the Structure of an Apache Iceberg Table

- Apache Iceberg – Top 5 Features

Get a Free Early Release Copy of "Apache Iceberg: The Definitive Guide".

Sign up for AI Ready Data content