Amazon Accelerates Supply Chain Decision Making by Implementing an Innovative Analytics Architecture using Dremio

- 10x faster query performance, from 60 seconds to 4-6 seconds

- 90% reduction in setup time

- 60 hours of work eliminated per project

NEW Introducing Dremio's Next Generation Cloud

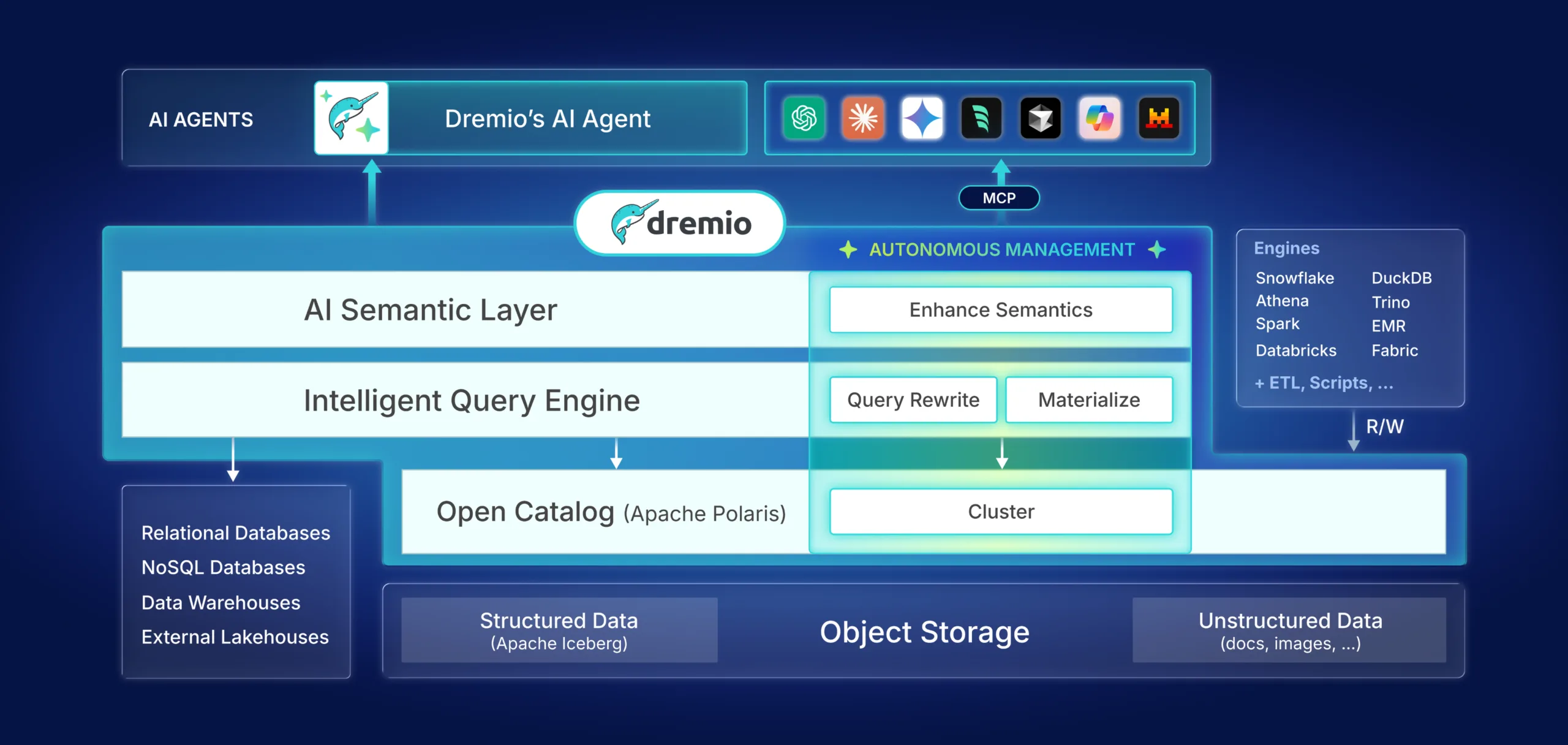

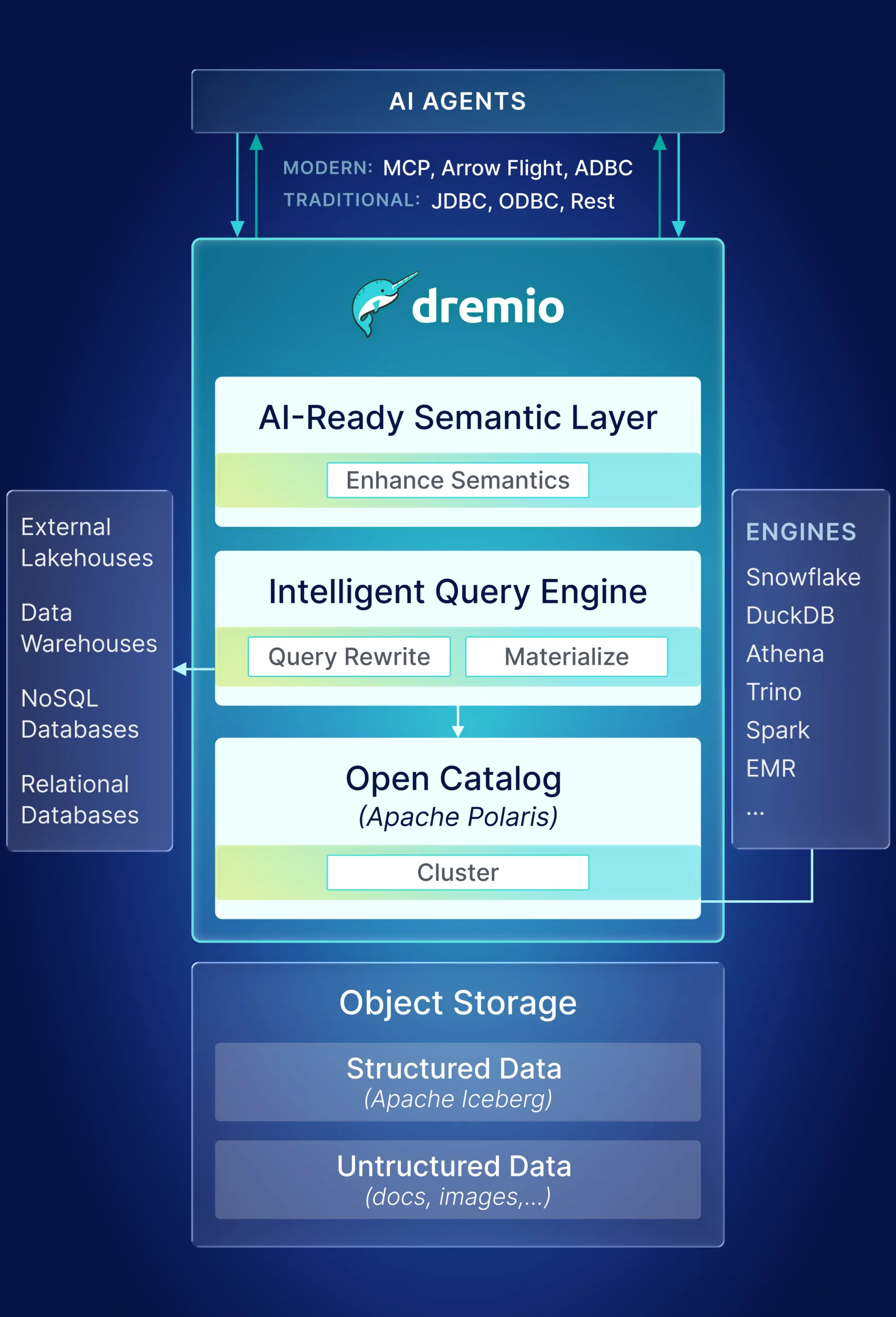

The only lakehouse built for agents, managed by agents

Fastest path to deliver

Easiest way to Operate your

20x Performance at the

Agentic

Designed for an agentic-first experience

Complete

The full, integrated picture of all your data

Contextual

Provide AI the context it requires

Governed

Access control enforced end-to-end

Autonomous

Keeps performance on autopilot

CASE STUDIES

Thousands of companies across all industries trust Dremio.

USE CASES

See real-world use cases of companies preparing their data for AI and innovation.

Agentic Analytics

Enable AI agents with direct access to enterprise data through natural language. MCP Server provides zero-integration connectivity to LLMs and AI frameworks.

Unify Data & Analytics

Eliminate data silos with a unified semantic layer and query federation that provides consistent definitions, metrics, and business logic across all your data sources.

Warehouse to Lakehouse

Get faster performance, more flexibility, and lower management overhead than traditional warehouses. The best warehouse is an Iceberg Lakehouse.

Lake to Iceberg Lakehouse

Connect on-premises and cloud data lakes into a unified lakehouse architecture through advanced query federation and virtual data integration - no ETL required.

Hybrid Lakehouse

Connect on-premises and cloud data lakes into a unified lakehouse architecture through advanced query federation and virtual data integration - no ETL required.

Data Fabric

Connect disparate data sources across hybrid and multi-cloud environments with a unified architecture that enables consistent governance, discovery, and access controls.

RESOURCES

Overview: Dremio provides out-of-the-box methods for handling complex data types in, for example, JSON and Parquet datasets. Common characteristics are embedded “columns within columns” and “rows within columns”. ...
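As a rough illustration of those two shapes (not Dremio's own implementation), the Python sketch below treats a nested object as “columns within columns” and a list as “rows within columns”. The record layout and the helper names `flatten_struct` and `explode_list` are hypothetical, chosen only for this example.

```python
from typing import Any


def flatten_struct(record: dict[str, Any], sep: str = ".") -> dict[str, Any]:
    """Promote nested dict fields ("columns within columns") to top-level columns."""
    flat: dict[str, Any] = {}
    for key, value in record.items():
        if isinstance(value, dict):
            # Recurse so that deeper levels also become dotted column names.
            for sub_key, sub_value in flatten_struct(value, sep).items():
                flat[f"{key}{sep}{sub_key}"] = sub_value
        else:
            flat[key] = value
    return flat


def explode_list(record: dict[str, Any], column: str) -> list[dict[str, Any]]:
    """Emit one output row per element of a list column ("rows within columns")."""
    return [{**record, column: item} for item in record[column]]


# Hypothetical order record, as it might appear in a JSON dataset.
order = {
    "order_id": 1,
    "customer": {"name": "Ada", "address": {"city": "London"}},
    "items": ["keyboard", "mouse"],
}

# One flat row per item, with nested customer fields as dotted columns.
rows = [flatten_struct(row) for row in explode_list(order, "items")]
```

In Dremio SQL, comparable reshaping is usually expressed with dot notation for nested fields and the `FLATTEN` function for lists, rather than with client-side code like this.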

FAQs

Find quick answers to common questions about Dremio's platform and its features.

Dremio continuously analyzes query patterns and automatically creates Autonomous Reflections (smart materializations), applies Iceberg Clustering (intelligent data layout), and optimizes tables—all without human intervention. The system learns and adapts as your workload changes.

The MCP Server enables AI agents to discover and use data tools like RunSqlQuery and GetSchemaOfTable automatically. AI-enabled semantic search lets you find data using plain language. No custom integrations required—just natural language access to your enterprise data.

No data movement required. Dremio queries data where it lives using advanced federation. Your existing SQL queries work unchanged; they are automatically optimized at runtime to take advantage of Reflections and intelligent caching. For Iceberg datasets, Reflection-based acceleration is managed autonomously.

Yes, Dremio can be used with Python. Thanks to Dremio’s support for REST, ODBC, JDBC, and Apache Arrow Flight interfaces, you can connect to Dremio from virtually any programming language, including Python. Dremio also offers Python libraries such as dremio-simple-query and pyDremio, which make it easy to query and analyze data directly from your Python environment.
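A minimal sketch of the REST route, using only the Python standard library. It assumes the SQL endpoint `/api/v3/sql` and bearer-token authentication; the host `https://dremio.example.com`, the token value, and the helper name `build_sql_request` are placeholders, so check your deployment's API documentation before relying on them. The sketch only builds the request; sending it requires a reachable Dremio instance.

```python
import json
import urllib.request


def build_sql_request(base_url: str, token: str, sql: str) -> urllib.request.Request:
    """Build (but do not send) a POST request that submits a SQL statement."""
    body = json.dumps({"sql": sql}).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/api/v3/sql",  # assumed endpoint path
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",  # assumed auth scheme
            "Content-Type": "application/json",
        },
    )


request = build_sql_request(
    "https://dremio.example.com",  # placeholder host
    "YOUR_TOKEN",                  # placeholder token
    "SELECT 1",
)

# To actually submit the query against a live instance:
# with urllib.request.urlopen(request) as response:
#     print(json.load(response))
```

For larger result sets, the Arrow Flight interface mentioned above is likely the better fit, since it streams columnar data instead of JSON.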

Yes. Dremio provides an ARP (Advanced Relational Pushdown) framework that allows you to build custom connectors. The community has already created connectors for many data sources using this framework. However, when possible, we recommend ingesting data into Apache Iceberg tables instead. This allows you to take full advantage of Dremio’s Autonomous Performance features, including acceleration, optimization, and caching.

Traditional systems require manual partitioning schemes that become maintenance nightmares. Dremio’s Iceberg Clustering automatically organizes data for optimal performance without any manual partition management. You get better performance with zero partitioning overhead.

All your current tools work exactly the same. Dremio provides one-click integrations with Power BI, Tableau, and other BI platforms. Users see faster dashboards and queries without changing anything in their workflow.

Yes. The Dremio Catalog is built on Apache Polaris, which implements the Apache Iceberg REST Catalog specification. This means any tool that supports the Iceberg REST catalog—such as Spark, Flink, and others—can read from and write to tables managed by the Dremio Catalog. Likewise, Dremio can connect to external Iceberg catalogs using its REST Catalog connector.

Yes. Dremio’s OPTIMIZE and VACUUM commands can be used on any Iceberg table that Dremio has write access to, regardless of which catalog it resides in. However, automatic optimization features are currently only available for Iceberg tables managed within the Dremio Catalog.
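As a rough sketch, the maintenance statements can be generated from Python and then submitted through any of Dremio's SQL interfaces. The table path `catalog.sales.orders` and the helper `maintenance_statements` are hypothetical, and the `EXPIRE SNAPSHOTS retain_last` clause is an assumption about the exact syntax; verify it against the SQL reference for your Dremio version.

```python
def maintenance_statements(table: str, retain_last: int = 5) -> list[str]:
    """Return SQL strings for compacting a table and expiring old snapshots."""
    if retain_last < 1:
        raise ValueError("retain_last must be at least 1")
    return [
        f"OPTIMIZE TABLE {table}",
        # Assumed clause syntax; confirm against your Dremio version.
        f"VACUUM TABLE {table} EXPIRE SNAPSHOTS retain_last = {retain_last}",
    ]


statements = maintenance_statements("catalog.sales.orders")  # hypothetical table
for statement in statements:
    print(statement)
```

The table name is interpolated directly into the SQL text, so this helper should only be given trusted identifiers.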