14 minute read · May 31, 2023

How to Convert JSON Files Into an Apache Iceberg Table with Dremio

· Head of DevRel, Dremio

Apache Iceberg is an open table format that enables robust, affordable, and quick analytics on the data lakehouse and is poised to change the data industry in ways we can only begin to imagine. Check out our Apache Iceberg 101 course to learn all the nuts and bolts about Iceberg. By storing your data in Apache Iceberg tables, you can run your workloads on the data without needing to duplicate it in a data warehouse while also keeping the data accessible so a variety of tools can utilize it.

This article demonstrates how Dremio Cloud can be employed to transform JSON files into an Iceberg table quickly. This enables faster query execution, running of DML transactions, and time-traveling of the dataset straight from your data lakehouse storage.

This article uses a single JSON file for simplicity’s sake, but you can follow the same steps for a dataset consisting of multiple JSON files.

Summary of the Steps

Dremio Cloud is an ideal solution for this task because it provides an access layer that is compatible with most sources, enabling you to access data stored in object storage (CSV, JSON, Parquet, etc.), relational databases, or a metastore for Apache Iceberg tables. Furthermore, Dremio allows for DML operations to be run on Iceberg tables, making it easy to convert data from any source into an Iceberg table.

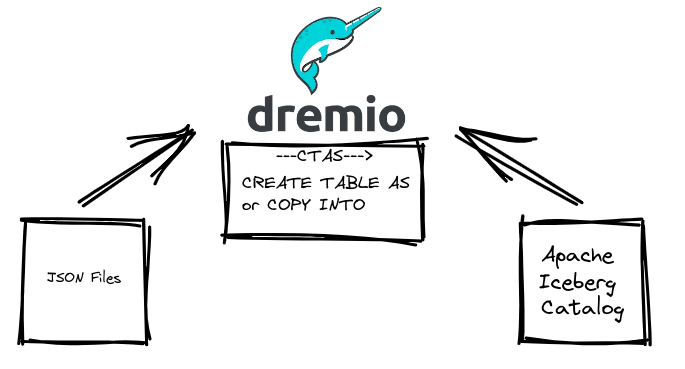

You simply need to:

- Connect your Iceberg catalog.

- Upload your JSON file or connect a source with a JSON file (like S3).

CTAS the JSON file into a new Iceberg table in your catalog

(or use COPY INTO to directly copy the file into existing Iceberg table).

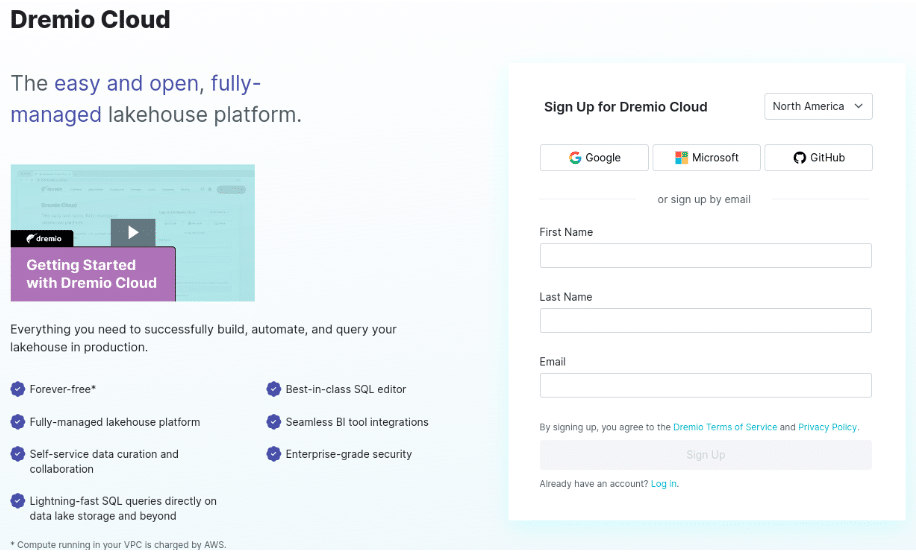

Step 1 – Get a Dremio Account

The first step is to get a Dremio Cloud account which offers a standard tier free of software and licensing costs (so the only cost would be AWS costs for any clusters you use to run your queries). You can get started with Dremio Cloud in minutes by following the steps highlighted in the video on the getting started page.

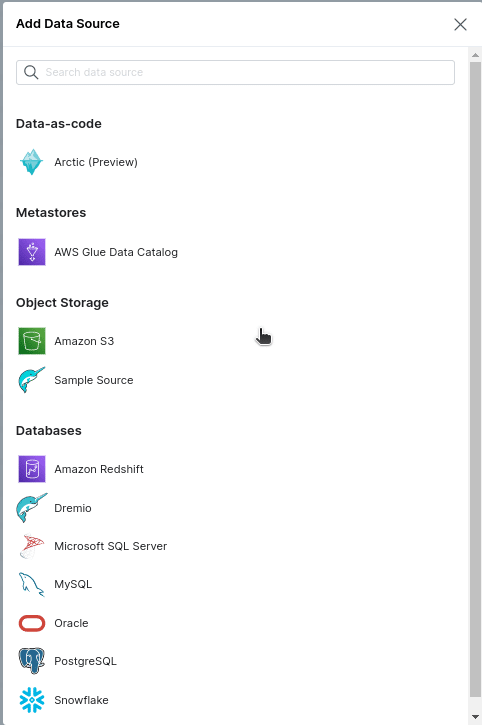

Step 2 – Connect Your Iceberg Catalog

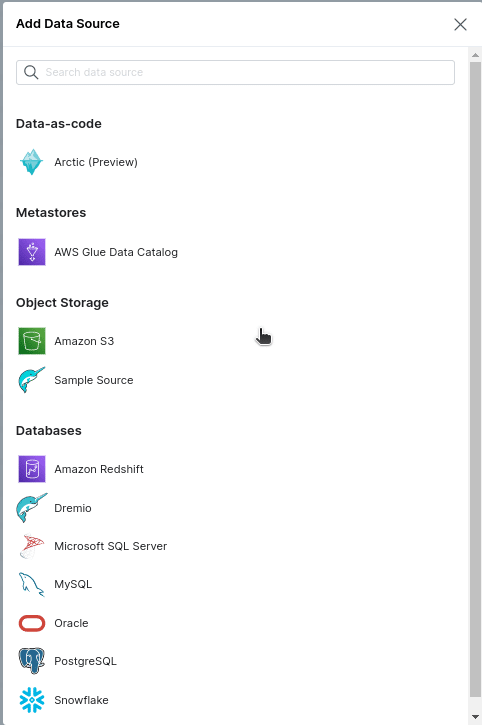

You currently have three choices for your Iceberg catalog when using Dremio Cloud to write to Iceberg tables: AWS Glue, AWS S3, and Project Nessie. These can all be connected by clicking the “Add Source” button in the bottom left corner of the Dremio UI and selecting the source you want to connect.

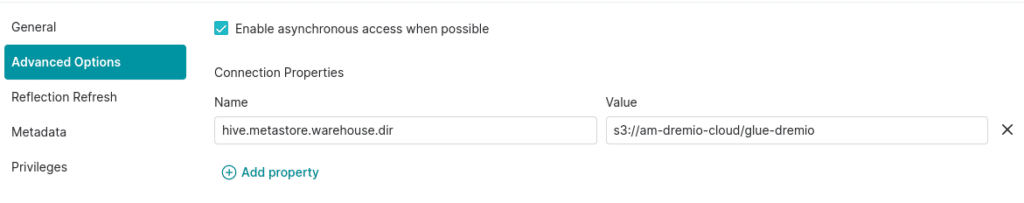

If using an AWS Glue source:

In the source settings under “Advanced Options” add a property called “hive.metastore.warehouse.dir”. This will determine the S3 folder any Iceberg tables you create in the catalog will be written to.

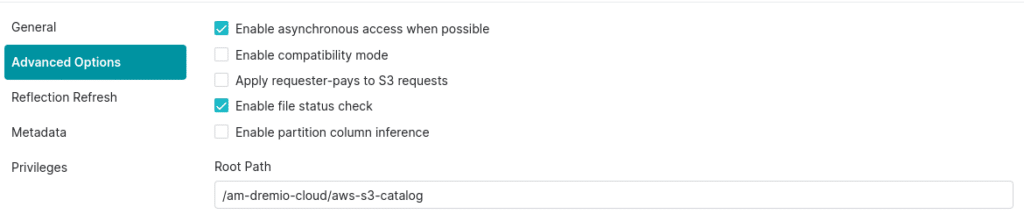

If using an S3 source:

In the source settings under “Advanced Options” make sure to set the root path to the S3 directory you want the source to default to, this will be where Iceberg tables will be created in this catalog.

If using a Dremio Arctic source:

If using Dremio’s Arctic catalog as your Apache Iceberg catalog a bucket associated with your Dremio Sonar’s project-associated cloud will be assigned automatically (this can be changed in the settings of the source if you want).

Now you have two approaches you can use going forward to load your JSON data into an Apache Iceberg table:

- CTAS – The JSON must be a dataset in your Dremio Account can then create a new table via a “CREATE TABLE AS” statement from that JSON.

- COPY INTO – You tell Dremio to copy the contents of JSON files into an existing Apache Iceberg table. A big benefit of this approach is the data from the JSON file will coerced into the Iceberg tables schema.

Step 3 – Load Your JSON Data

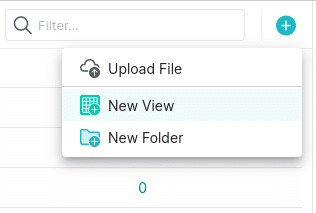

There are two ways to bring a JSON file into Dremio. You can either connect a cloud object storage source like S3 that has JSON files in it or you can upload the file directly into your Dremio account.

To keep it simple, if you click on “add source” you’ll see a “Sample Source” under the object storage category that has all sorts of data you can use for demonstration.

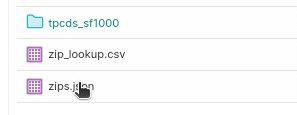

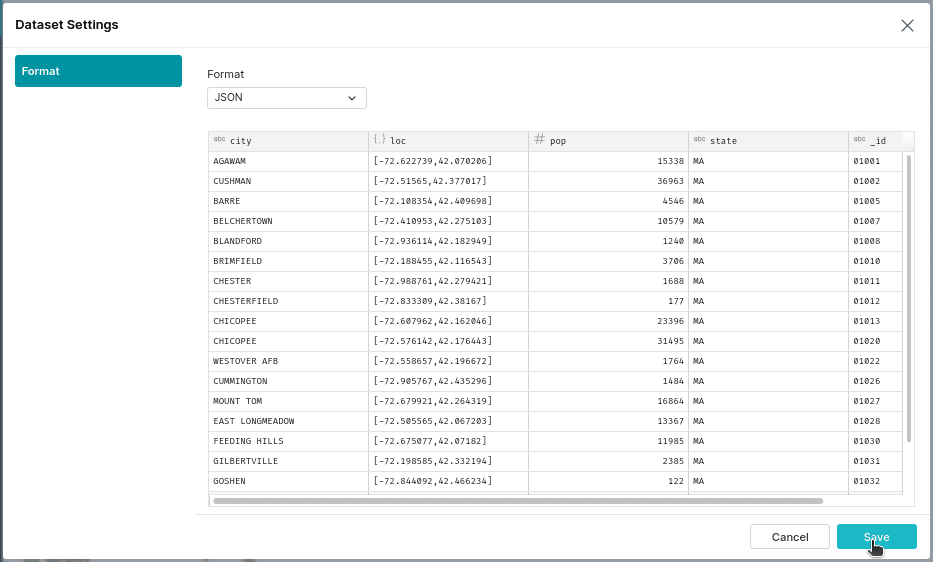

You should now have a source called “Samples”. There is a JSON “zips.json” in this sample source that you will want to promote to a dataset. To do so click on the “Format File” button.

Make sure “JSON” is selected for Format and click “Save”.

Step 4 – Option 1 – Converting a JSON File Into an Iceberg Table

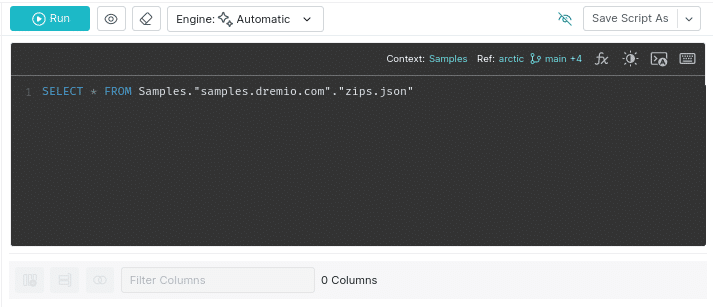

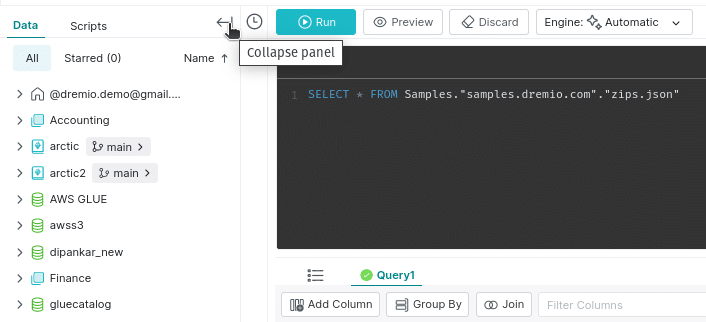

After formatting the JSON file into a dataset, it should automatically bring you to this dataset in the UI. When viewing the dataset, you’ll see the SQL Editor for editing and running SQL statements, along with the following options:

- Data – This is the current screen with the SQL Editor

- Details – Here you can write and view documentation on the datasets wiki and other information

- Graph – Here you can see a datasets lineage graph for where that dataset comes from within Dremio

- Reflections – Here you can enable data reflections to accelerate queries on a particular dataset further

Click the “Run” button to run the “Select *” query and to see that your data was promoted correctly (all the column headings should be there).

Off to the right, you’ll see an icon you can click to expand the panel that allows you to easily drag and drop your data into your queries, saving you time from having to type long namespaces.

Then you can use a CREATE TABLE AS statement to take the data from the JSON file and write it to a new table in your desired Iceberg catalog.

CREATE TABLE awss3.zips_from_json AS SELECT * FROM Samples."samples.dremio.com"."zips.json"

The image above uses an S3 source but when using any of the three catalogs, the namespace for your new table should look like the following:

- S3:

name_of_s3_source.new_table_name - AWS Glue:

name_of_glue_source.existing_glue_database.new_table_name - Project Nessie:

name_of_nessie_source.folder_name.new_table_name

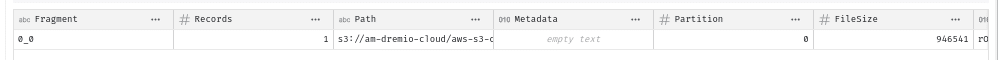

Once you run the query you’ll get a confirmation message that tells you where the table was written:

Step 4 – Option 2 – Using the COPY INTO Approach

The COPY INTO command allows you to copy the contents of CSV, JSON, and other files into an Apache Iceberg table. This can be from a single file or a directory of files. This is particularly useful when adding data from multiple files or making multiple additions at different times since it does not create a new table like the CTAS statement. COPY INTO will also take the values from JSON data and coerce them into the schema of the target table, saving you from doing type conversions manually.

Let’s make an empty table with our desired schema.

CREATE TABLE awss3.zip_from_json_2 (city VARCHAR, loc ARRAY(DOUBLE), pop INT, state VARCHAR, _id VARCHAR);

Then you can use the COPY INTO commands to copy the contents of the JSON file into the existing table (make sure to specify the delimiter for this particular file).

COPY INTO awss3.zips_from_json2 FROM '@Samples/samples.dremio.com/zips.json' FILE_FORMAT 'json';

A couple of benefits of the COPY INTO approach:

- It will use the schema of the destination table, whereas CTAS won’t know the desired schema and will coerce the fields based standard JSON datatype

- You can add data from a single file as well as from a directory of files

The Iceberg Advantage

Now that your data is in an Iceberg table you can take advantage of full DML from Dremio to run updates, deletes, and upserts on your data.

UPDATE awss3."zips_from_json" SET pop=11111 WHERE city='AGAWAM';

DELETE FROM awss3."zips_from_json" WHERE city='AGAWAM';

MERGE INTO awss3."zips_from_json" z USING (SELECT * FROM zips_staging) s ON n.id = s.id WHEN MATCH THEN UPDATE SET city = s.city, loc = s.loc, pop = s.pop, state = s.state, _id = s._id WHEN NOT MATCHED THEN INSERT (city, loc, pop, state, _id) VALUES (s.city, s.loc, s.pop, s.state, s_id);

Conclusion

Not only can you query this new Iceberg table using your Dremio account but you can also work with this table using other tools like Apache Spark, Apache Flink, and any other tool that supports Apache Iceberg and connects to your catalog, giving you open access to your data with metadata that enables smarter query planning to speed up performance and lower your cloud compute bill.

Sign up for AI Ready Data content