9 minute read · August 18, 2022

Future-Proof Partitioning and Fewer Table Rewrites with Apache Iceberg

· Head of DevRel, Dremio

One of the biggest headaches with data lake tables is changing a table’s layout after the fact. Too often, when your partitioning needs change, the only option is to rewrite the entire table, which can be very expensive at scale. The alternative is to live with the existing partitioning scheme and all the issues it causes.

Fortunately, if you build your data lakehouse based on Apache Iceberg tables, you can avoid these unnecessary and expensive rewrites with Iceberg’s partition evolution feature.

How Does It Work?

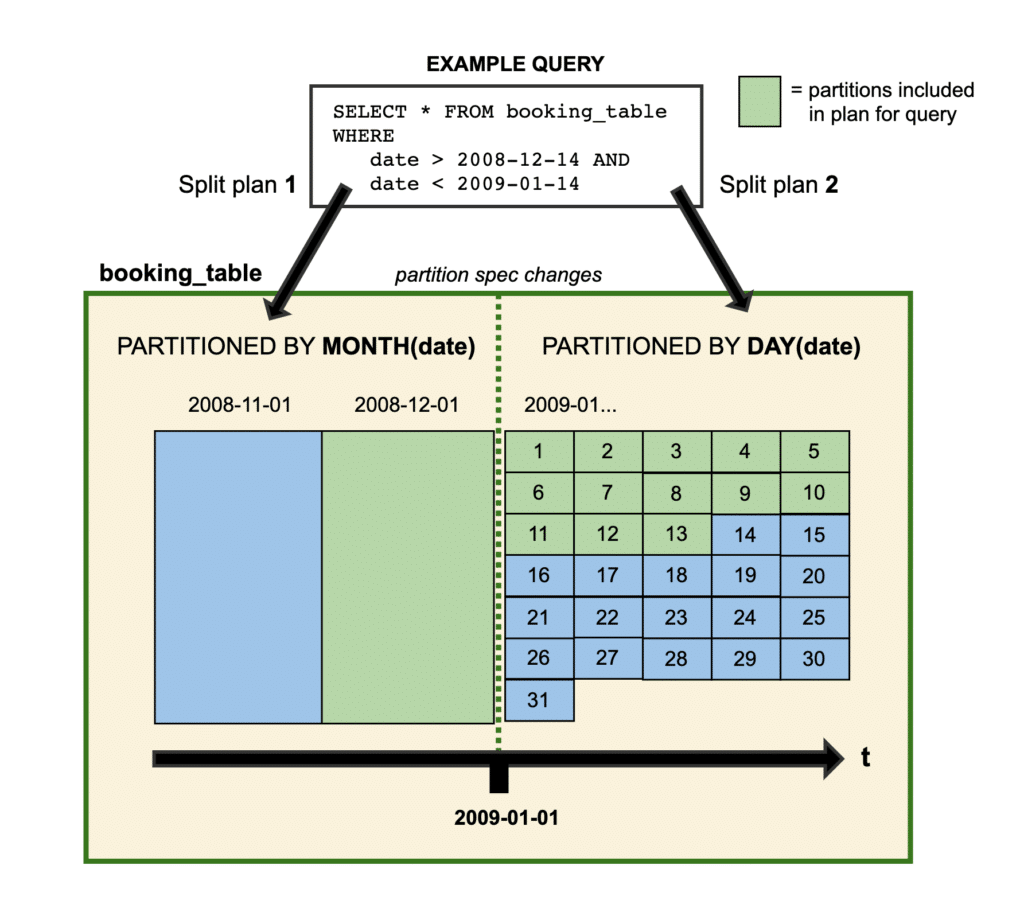

Usually, you can only define a table's partitioning scheme at its creation, but with Iceberg you can change the definition of how the table is partitioned at any time. The new partition specification applies to all new data written to the table while all prior data still has the previous partition specification.
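To make the idea concrete, here is a minimal sketch in plain Java of a table that keeps every partition spec it has ever had and stamps each new file with the spec that was current when it was written. ToyTable and all of its methods are hypothetical names invented for illustration; this is a toy model under those assumptions, not Iceberg's actual classes.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Toy model only: ToyTable is hypothetical and is not part of Iceberg.
final class ToyTable {
    // Every spec the table has ever had; the list index serves as the spec ID.
    private final List<List<String>> specs = new ArrayList<>();
    // Spec ID stamped on each written file, in write order.
    private final List<Integer> fileSpecIds = new ArrayList<>();

    ToyTable(String... partitionFields) {
        specs.add(Arrays.asList(partitionFields));
    }

    // The most recently added spec is the one used for new writes.
    int currentSpecId() {
        return specs.size() - 1;
    }

    // Evolving the spec appends a new spec; nothing already written is touched.
    void evolveSpec(String... partitionFields) {
        specs.add(Arrays.asList(partitionFields));
    }

    // A new file is tagged with whichever spec is current at write time.
    void writeFile() {
        fileSpecIds.add(currentSpecId());
    }

    // Returns a copy of the spec IDs recorded for the files written so far.
    List<Integer> fileSpecIds() {
        return new ArrayList<>(fileSpecIds);
    }
}
```

In this sketch, writing two files, evolving the spec, and writing a third file leaves the first two files tagged with spec 0 and the third with spec 1, which mirrors how older data keeps its previous partitioning.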

Updating a partition spec is purely a metadata operation: prior data isn’t rewritten, so the change is quick and inexpensive. When a query is planned, the engine splits the work and creates a separate plan for the data written under each partition spec. Because planning can be split this way, older data never has to be rewritten, which makes incremental changes to the table’s partitioning practical. You can still rewrite older data whenever you want, for compaction or sorting, using the rewriteDataFiles action (exposed in Spark SQL as the rewrite_data_files procedure).
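The per-spec planning described above can be sketched roughly as follows. This is a simplified illustration, not how any real engine is implemented: SpecAwarePlanner, DataFile, and planBySpec are hypothetical names, and the filter is limited to a single equality predicate.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Toy model only: DataFile and planBySpec are hypothetical, not Iceberg classes.
final class SpecAwarePlanner {

    // A data file records the ID of the partition spec it was written under
    // and its partition values under that spec (empty if unpartitioned).
    static final class DataFile {
        final String path;
        final int specId;
        final Map<String, String> partition;

        DataFile(String path, int specId, Map<String, String> partition) {
            this.path = path;
            this.specId = specId;
            this.partition = partition;
        }
    }

    // Plans the filter `column = value`, grouping the files to scan by spec ID.
    // A file with a partition value for `column` is kept only if that value matches;
    // a file with no partition value for `column` cannot be pruned, so it is kept.
    static Map<Integer, List<String>> planBySpec(List<DataFile> files, String column, String value) {
        Map<Integer, List<String>> plan = new TreeMap<>();
        for (DataFile file : files) {
            String partitionValue = file.partition.get(column);
            boolean mayMatch = partitionValue == null || partitionValue.equals(value);
            if (mayMatch) {
                plan.computeIfAbsent(file.specId, id -> new ArrayList<>()).add(file.path);
            }
        }
        return plan;
    }
}
```

With one unpartitioned file under spec 0 and two files under spec 1 partitioned by color (red and blue), a filter on color = red scans the old file in full but only the red file from the newer spec. Older data stays queryable without a rewrite, and newer data benefits from pruning.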

How to Update the Partition Scheme

You can update the partition scheme of an Iceberg table with SQL. In Spark, for example, these statements require the Iceberg SQL extensions to be enabled.

Adding a partition field takes a single ALTER TABLE statement:

ALTER TABLE catalog.db.table ADD PARTITION FIELD color

You can also take advantage of Apache Iceberg’s partition transforms when altering the partition spec:

-- Partition subsequently written data by id into 8 buckets
ALTER TABLE catalog.db.table ADD PARTITION FIELD bucket(8, id)

-- Partition subsequently written data by the first letter of last_name
ALTER TABLE catalog.db.table ADD PARTITION FIELD truncate(last_name, 1)

-- Partition subsequently written data by year of a timestamp field
ALTER TABLE catalog.db.table ADD PARTITION FIELD year(timestamp_field)
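To show what two of these transforms compute for a single value, here is a small re-implementation in plain Java. It is an illustrative sketch based on the behavior described in the Iceberg table spec (string truncation to a number of characters, and year counted from 1970), not Iceberg's own code. TransformSketch and its method names are hypothetical. The bucket transform is left out because it depends on a specific Murmur3 hash.

```java
import java.time.Instant;
import java.time.ZoneOffset;

// Illustrative re-implementation only; this is not Iceberg's own code.
final class TransformSketch {

    // truncate: keep at most the first `width` code points of the string.
    static String truncate(String value, int width) {
        int codePoints = value.codePointCount(0, value.length());
        int end = value.offsetByCodePoints(0, Math.min(width, codePoints));
        return value.substring(0, end);
    }

    // year: calendar year of the timestamp in UTC, counted from 1970.
    static int year(Instant timestamp) {
        return timestamp.atZone(ZoneOffset.UTC).getYear() - 1970;
    }
}
```

Under these assumptions, truncate("Smith", 1) yields "S", so every last name starting with S lands in the same partition, and a timestamp of 2022-08-18T00:00:00Z maps to the year value 52.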

Just as easily, you can drop a field that you’ve been partitioning and not partition it going forward:

-- Stop partitioning the table by year of a timestamp field
ALTER TABLE catalog.db.table DROP PARTITION FIELD year(timestamp_field)

You can also use Iceberg’s Java API to update the partitioning:

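import org.apache.iceberg.Table;
import static org.apache.iceberg.expressions.Expressions.bucket;

Table nfl_players = ...;

// Add an 8-bucket partition on id and stop partitioning by team
nfl_players.updateSpec()
    .addField(bucket("id", 8))
    .removeField("team")
    .commit();

Conclusion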

At the time of writing, Apache Iceberg is the only data lakehouse table format that lets you incrementally update your partitioning specification, and it lets you do so with plain SQL. Data lakehouses need to be open, flexible, and easy to use to support your ever-evolving data needs, and Apache Iceberg, with its evolution features and intuitive SQL syntax, provides the backbone to make your data lakehouse productive.
