15 minute read · June 22, 2022

Table Format Partitioning Comparison: Apache Iceberg, Apache Hudi, and Delta Lake

· Senior Tech Evangelist, Dremio

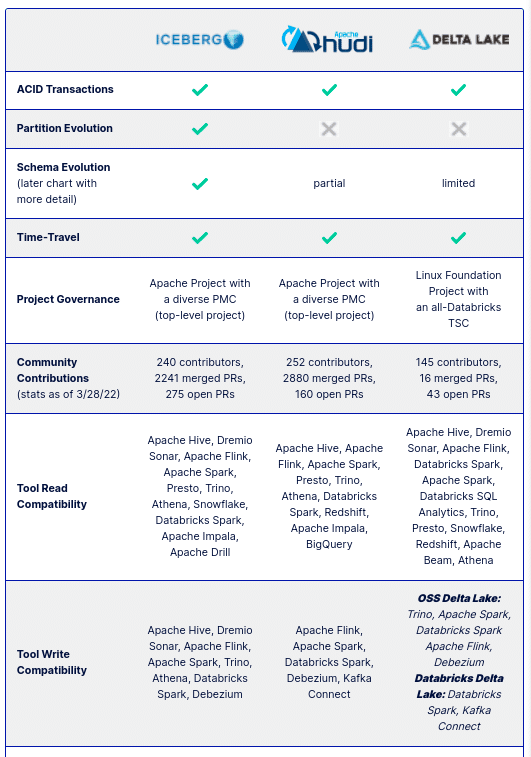

In this article, we compared several features between the three major data lake table formats: Apache Iceberg, Apache Hudi, and Delta Lake. Below is a summary of the findings of that article:

One of the areas we compared was partitioning features. In this article, we will dive deeper into the details of partitioning for each table format.

What Is Partitioning?

Partitioning is a strategy for optimizing how you store your data by subdividing the data by the values in one or more fields. For example, for sales data where queries often rely on when the sale occurred, you may partition the data by day, month, or year of the sale. Or, if you are looking at voter registration data, you may want to partition by party registration or precinct.

The benefit of partitioning occurs when a query filters the data by a partitioned column – the query engine can limit its scan to only subdivisions/partitions that apply to the query, resulting in much faster query performance.

How to Partition a Table

In most situations, the partitioning scheme is determined when the table is created by specifying columns whose values you want to partition the data by. The following shows what this would look like in each of the table formats.

Apache Iceberg

In Apache Iceberg you can partition the table in your CREATE TABLE statements, as shown.

CREATE TABLE IF NOT EXISTS catalog.db.players (

id int,

name string,

team string

) PARTITIONED BY (team) USING icebergBeyond selecting a particular column to partition by, you can select a “transform” and partition the table by the transformed value of the column.

Transforms available in Iceberg include:

- Day

- Month

- Year

- Hour

- Bucket (partitions data into a specified number of buckets using a hash function)

- Truncate (partitions the table based on the truncated value of the field, and can specify the width of truncated value)

Some examples:

-- partitioned by first letter of the team field CREATE TABLE catalog.db.players ( id int, name string, team string ) PARTITIONED BY (truncate(1, team)) USING iceberg

-- partitioned by day, month, year of a timestamp CREATE TABLE catalog.db.players ( id int, name string, team string, ts timestamp ) PARTITIONED BY (year(ts), month(ts), day(ts)) USING iceberg

-- partitioned into 8 buckets of equal sized ranges CREATE TABLE catalog.db.players ( id int, name string, team string ) PARTITIONED BY (bucket(8, team)) USING iceberg

Apache Hudi

In Apache Hudi you also specify your partitions in your CREATE TABLE statements. In Hudi you can’t specify transforms – just a column whose values you want to be used for partitioning.

CREATE TABLE IF NOT EXISTS default.players ( id int, name string, team string ) USING hudi PARTITIONED BY (team);

Delta Lake

In Delta Lake, you also declare how the table is partitioned when the table is created, for example:

CREATE TABLE default.players ( id int, name STRING, team STRING, ) USING DELTA PARTITIONED BY (team)

While you can’t specify a transform in your partitions like Apache Iceberg, you can create “generated columns” which are columns whose values are calculated based on another field. You can then partition based on that field. The example below will partition based on the first letter of the team name like the truncate() example from Iceberg. This feature creates a new column so that the generated value is stored.

(Note: The generated columns feature is fully functional only in Databricks Delta Lake and can use any Spark-supported SQL function aside from user-defined functions, window functions, aggregation functions, and those that return multiple rows. For the full functionality to exist in Delta Lake OSS some updates to Spark OSS are required which are expected in the Spark 3.4 release according to this GitHub issue. Keep in mind that this may cause issues when running other engines that may not support these Spark functions, making it difficult to get the open value of lakehouses.)

CREATE TABLE default.players ( id INT, name STRING, team STRING, team_first_letter STRING GENERATED ALWAYS AS (SUBSTRING(name, 1, 1)) ) USING DELTA PARTITIONED BY (team_first_letter)

How to Maximize the Benefits of Partitioning

In the Hive world, partitioning by a particular column or columns worked well to improve queries in many situations but had some areas of difficulty which modern table formats try to improve upon in different ways.

While you may have the table partitioned, query engines may not automatically know to take advantage of partitioning. In Hive tables, this required explicit filtering using the partition column (which were often derived from other columns and resulted in long-winded queries). An example of how verbose queries in Hive would be like, examine the following query:

SELECT * FROM orders WHERE order_time BETWEEN '2022-06-01 10:00:00' AND '2022-07-15 10:00:00' AND order_year = 2022 AND order_month BETWEEN 6 AND 7;

As you can see, querying by the timestamp field isn’t enough to accelerate the query with partitioning, so you have to add filters on the derived month and year columns, which is assumed to be the partition columns for the table. Not having the filters on the order_month and order_year columns would result in a full table scan. Data consumers may not know enough about the engineering of a table to add these extra filters, resulting in slower queries. Plus, you really shouldn’t have to know the physical layout of the table to have a good experience with it.

Let’s look at how the different table formats try to improve upon this situation.

Apache Iceberg

In Apache Iceberg there is a feature called “hidden partitioning” that makes getting the maximum benefit of partitioning quite easy. When you partition the data, you specify a column and transform like day(ts), so any query on the transformed column will automatically benefit from the partitioning and avoid a full table scan where logically possible.

So instead of this:

SELECT * FROM orders WHERE order_time BETWEEN '2022-06-01 10:00:00' AND '2022-07-15 10:00:00' AND order_year = 2022 AND order_month BETWEEN 6 AND 7;

Iceberg simplifies it to this:

SELECT * FROM orders WHERE order_time BETWEEN '2022-06-01 10:00:00' AND '2022-07-15 10:00:00';

Iceberg does even more to reduce the amount of data an engine needs to read. Within the metadata files that Iceberg uses to track tables are all sorts of column metadata tracking counts, minimum values, maximum values, and ranges at the manifest and data file level for further pruning based on any filtered column.

Apache Hudi

Using partitions in Hudi works like traditional Hive partitioning – there are no transforms at partition declaration like in Iceberg so any partition columns must be explicitly stated in your query like in Hive.

To compensate for this, Hudi stores column metadata in a column stats index in the metadata table that optimizes file pruning. Using this index, it can prune files based on their metadata and avoid the need for additional column partitioning such as day, month, or year columns (referred to as data skipping). So if data skipping and the metadata table are enabled on your Hudi table, a query filter on a timestamp field as shown below can be optimized using transforms in your filter.

SELECT * FROM orders WHERE date_format( order_time, "YYYY-MM-DD" ) BETWEEN '2022-06-01' AND '2022-07-15';

The query engine will then use the column stats index to skip data files that don’t include relevant data to improve scan times. However, this requires you to know how to write a query like this, which isn’t the typical ANSI SQL way to write it.

Delta Lake

In Delta Lake, if you have added generated columns to your table, it will automatically add filter predicates on those tables when you filter by the source column. A query in Delta Lake would look like the following:

SELECT * FROM orders WHERE order_time BETWEEN '2022-06-01' AND '2022-07-15';

If you have generated columns order_year, order_month, and order_day and those are specified as partition columns, then Delta Lake would generate the predicates to take advantage of the partition columns.

Like Iceberg and Hudi, Delta Lake will also attempt further file pruning using metadata. In Delta Lake’s case, it will maintain indexes on the first 32 columns in your table (this can be reduced or increased) which will be used to prune when those columns are filtered.

How to Evolve the Partition Scheme

As your data and use cases grow and evolve, you’ll sometimes find that your current partitioning scheme needs to change. Oftentimes to change the partitioning scheme, you have to run the time-consuming, expensive, and disruptive operation of re-writing the entire table.

Apache Iceberg

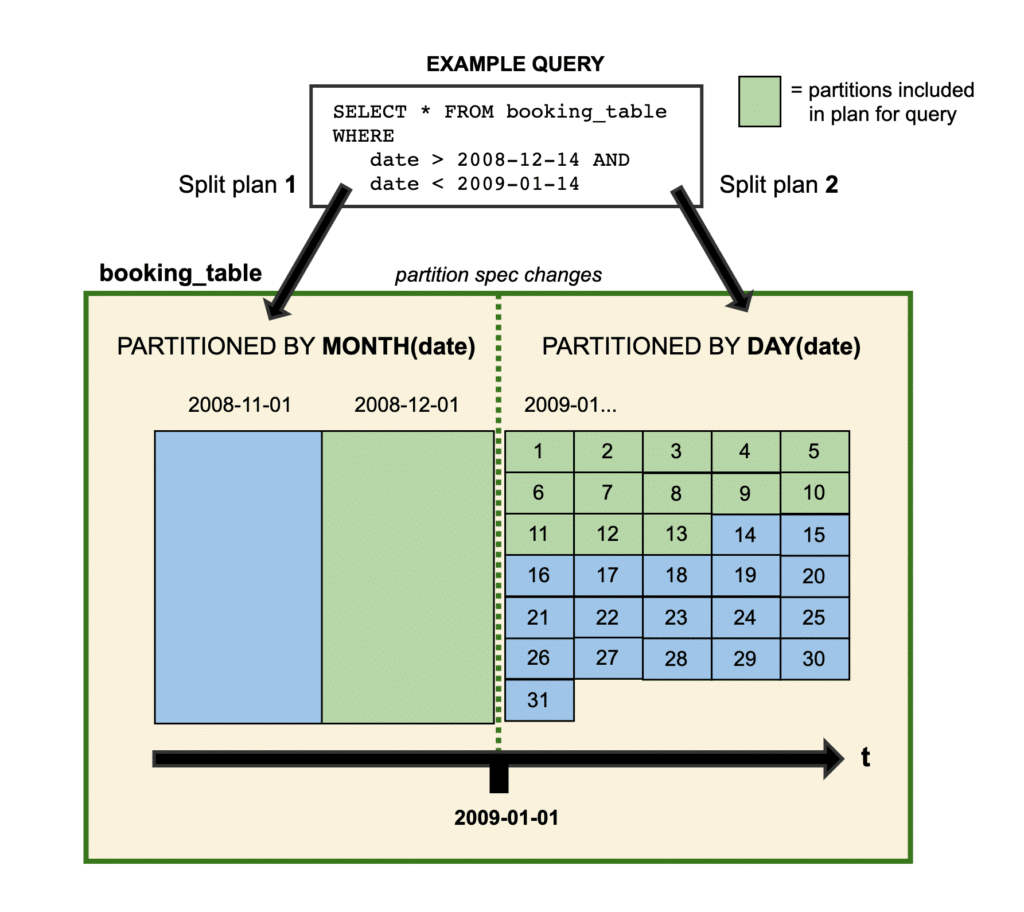

In Apache Iceberg, all the data on how files are partitioned are handled in the metadata file. Each of the three tiers of metadata files (metadata files, manifest lists, and manifests) has information on the partitioning of the table. This enables the ability to change how the table is partitioned going forward, in order to evolve the partitioning scheme (referred to as partition evolution).

The image above was originally partitioned by month, then the partition scheme was evolved to partition by day beginning January 2009. This new scheme applies to all new data added to the table after the change is made. If by chance new 2008 data is added after the change in the partition spec, the new data will have the new spec applied, and during any query planning it will be lumped with any data that shares the same partition spec.

When planning a query, a separate plan will be made for data in each partition scheme so each segment is optimized for the best query performance.

Updating the partition scheme is as simple as running an ALTER TABLE statement.

-- Removing old partition field ALTER TABLE prod.db.booking_table DROP PARTITION FIELD month(date); -- Adding a new partition field ALTER TABLE prod.db.booking_table ADD PARTITION FIELD day(date);

Apache Hudi

You cannot evolve the partition scheme in Apache Hudi without rewriting the table.

Delta Lake

You cannot evolve the partition scheme in Delta Lake without rewriting the table.

Conclusion

Partitioning is important to delivering performant queries on large data sets. All three of the main data lake table formats have different approaches to the role of partitioning in how they optimize file pruning for performant queries. When deciding on which format best suits your needs, be sure to ask and answer the following questions:

- What are my current partitioning practices?

- Will my partitions evolve in the future?

- Which format will be most ergonomic and easy to use for my data consumers?