7 minute read · July 30, 2025

Realising the Self-Service Dream with Dremio & MCP

· Technical Evangelist

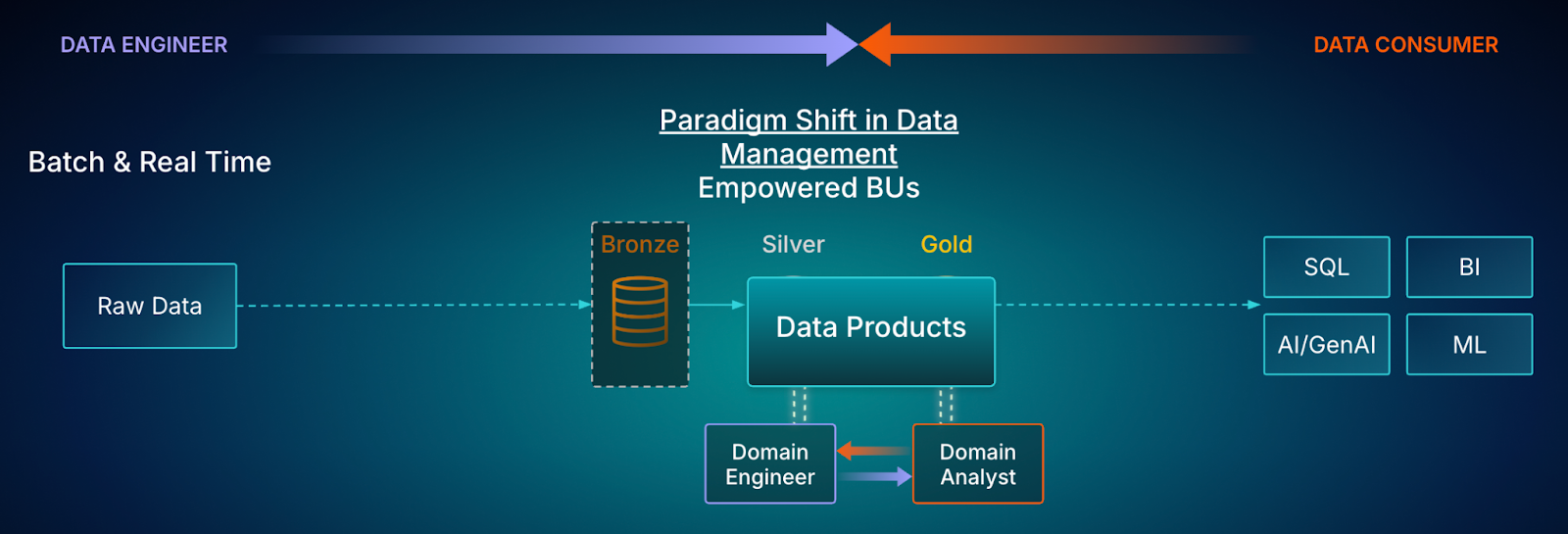

One promise of self-service data platforms, such as the Data Lakehouse, is to democratise data: to empower business users (BUs), those with little or no technical expertise, to access, prepare, and analyse data for themselves. With the right platform and tools, your subject matter experts can take work off your data engineers’ plates and produce curated, reliable Data Products themselves.

However, data preparation and refinement is only one part of the data engineer’s responsibilities. There is also the matter of maintenance work, such as performance optimisation and managing data storage. This work is too technical for the average BU and it’s hardly fair to make your data engineers maintain code and datasets they didn’t create. So who do these tasks fall to?

What if I told you there was a third option: no one does these tasks? Read on to learn how Dremio can make these maintenance tasks take care of themselves.

Dremio MCP Server

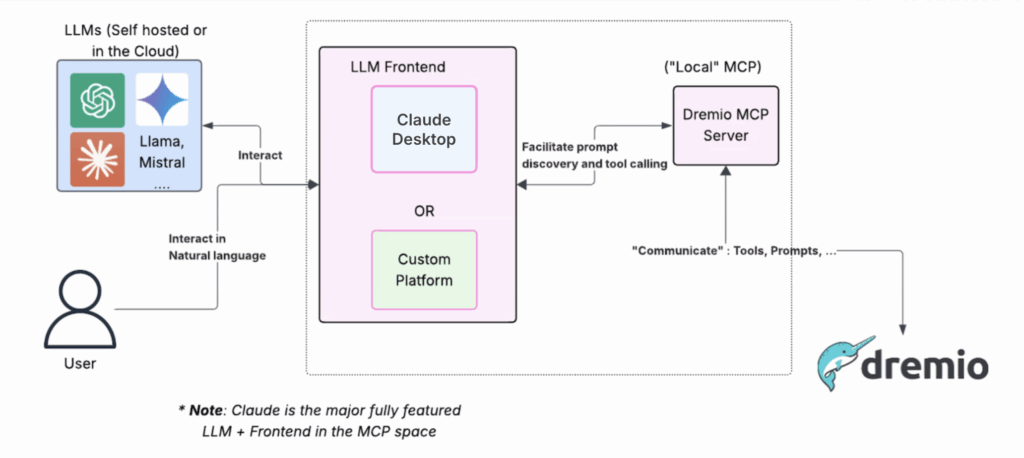

Getting BUs to write SQL is a reasonable ask (however much some people may balk at the idea). SQL has been around since the 1970s, so there are plenty of resources to learn from. And if you want more hands-on help with your education, you can have an LLM show you how.

With the Dremio MCP (Model Context Protocol) Server, the LLM of your choice knows how to interact with Dremio. The server handles authentication, executes requests against the Dremio environment, and returns the results to the LLM. This makes natural language the interface to your enterprise data, granting access to real-time insights, metadata exploration, and context-aware queries.
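Under the hood, MCP clients and servers exchange JSON-RPC 2.0 messages. An LLM asks a server to act by sending a `tools/call` request. The minimal sketch below builds such a request and unpacks the text content of a result.

The following are hypothetical placeholders, not the Dremio MCP Server's documented interface:
- the tool name `run_sql_query`
- its `query` argument
- the table `sales.orders`
- the sample response

A real client would also send the message over the server's transport rather than print it.

```python
import json
from itertools import count

# Monotonically increasing JSON-RPC request ids.
_request_ids = count(1)


def build_tool_call(tool_name: str, arguments: dict) -> str:
    """Serialise an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    message = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)


def extract_text(response_json: str) -> str:
    """Join the text items of an MCP tool result, raising on protocol or tool errors."""
    response = json.loads(response_json)
    if "error" in response:  # JSON-RPC level failure
        raise RuntimeError(response["error"].get("message", "unknown JSON-RPC error"))
    result = response["result"]
    text = "\n".join(
        item["text"] for item in result.get("content", []) if item.get("type") == "text"
    )
    if result.get("isError"):  # the tool itself reported a failure
        raise RuntimeError(text)
    return text


# HYPOTHETICAL tool name, argument and table: check the server's actual tool list.
request = build_tool_call("run_sql_query", {"query": "SELECT COUNT(*) AS n FROM sales.orders"})
print(request)

# Hand-written response in the MCP result shape (not real server output).
sample_response = json.dumps({
    "jsonrpc": "2.0",
    "id": 1,
    "result": {"content": [{"type": "text", "text": "n\n42"}], "isError": False},
})
print(extract_text(sample_response))
```

Keeping the SQL as a plain tool argument is what gives users the transparency described below: the exact statement the LLM sends is visible in the request.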

Based on analytical questions and data engineering requests, the LLM will generate and execute SQL queries to deliver BUs the results they’re looking for. What makes this a powerful educational tool is that the LLM provides full transparency into the SQL it executes, which users can read and learn from. It can also suggest improvements to existing SQL code, so users can understand how to enhance or optimise their queries.

With this AI tool, BUs can learn to write SQL while actively producing code in whichever working style best fits their SQL understanding: from writing raw SQL, to no-code natural language interactions, and anywhere in between.

Table Maintenance

Dremio simplifies the maintenance of Apache Iceberg tables within your lakehouse through automated processes that keep data storage efficient and access speeds optimised for analytics. Dremio’s Enterprise Data Catalog can automate:

- Optimisation: Compacting small files into larger ones. By reducing the number of files your query engine has to read, you keep your queries performant.

- Cleanup: Deleting expired snapshots and orphaned metadata files. This minimises your storage costs and keeps your data catalog easy to manage and explore.
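To make the two tasks concrete, here is a toy planner for each:
- Compaction is sketched as first-fit-decreasing bin packing of small files into groups no larger than a target size.
- Cleanup is sketched as selecting snapshots older than a retention window while always keeping the newest few.

The following are illustrative assumptions, not Dremio’s algorithm or defaults:
- the `DataFile` and `Snapshot` types
- the 64 MB and 256 MB thresholds
- the 5-day retention window

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class DataFile:
    path: str
    size_mb: float


@dataclass(frozen=True)
class Snapshot:
    snapshot_id: int
    committed_at: datetime


def plan_compaction(files, small_file_mb=64.0, target_mb=256.0):
    """First-fit-decreasing bin packing of small files into groups of at most target_mb.

    Each returned group would be rewritten as one larger file.
    """
    small = sorted(
        (f for f in files if f.size_mb < small_file_mb),
        key=lambda f: f.size_mb,
        reverse=True,
    )
    groups: list[list[DataFile]] = []
    totals: list[float] = []
    for f in small:
        for i, total in enumerate(totals):
            if total + f.size_mb <= target_mb:  # first group with room
                groups[i].append(f)
                totals[i] += f.size_mb
                break
        else:  # no group had room: open a new one
            groups.append([f])
            totals.append(f.size_mb)
    # Rewriting a lone file gains nothing, so only multi-file groups are returned.
    return [g for g in groups if len(g) > 1]


def snapshots_to_expire(snapshots, now, max_age=timedelta(days=5), retain_last=1):
    """Ids of snapshots older than max_age, never touching the newest retain_last."""
    newest_first = sorted(snapshots, key=lambda s: s.committed_at, reverse=True)
    candidates = newest_first[retain_last:]
    return [s.snapshot_id for s in candidates if now - s.committed_at > max_age]


files = [DataFile(f"part-{i}.parquet", mb) for i, mb in enumerate([10, 20, 30, 300, 40, 5])]
print([[f.path for f in g] for g in plan_compaction(files)])

now = datetime(2025, 7, 30)
snaps = [Snapshot(i, now - timedelta(days=d)) for i, d in [(1, 10), (2, 7), (3, 3), (4, 1)]]
print(snapshots_to_expire(snaps, now))
```

The 300 MB file is left alone because it is already large. The five small files collapse into one rewrite, so a query scans one file where it used to scan five.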


Iceberg Clustering

Data partitioning is hard. You need upfront planning to get the right partitioning scheme for your workloads. Get it wrong? Your data distribution is skewed and performance suffers. Get it right? That good performance will degrade unless you constantly tune your partitioning.

Iceberg Clustering, however, is a much easier alternative. It doesn’t matter how your data is physically partitioned in storage; all you need to know is how it’s queried. That makes it perfect for BUs, who understand how your data is consumed rather than how it’s stored.

With Iceberg Clustering, Dremio automatically:

- Reorganises data within partitions,

- Sorts files for faster queries,

- Compacts small files,

- Optimises metadata.

The result? Efficient and predictable read/write performance that doesn’t degrade over time.
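Clustering on how data is queried usually means sorting rows along a space-filling curve, so that rows with similar values in several columns land in the same files. A common choice is the Z-order (Morton) curve. Whether Dremio’s Iceberg Clustering uses exactly this curve is an assumption here.

The toy below does three things:
- It sorts an 8×8 grid of `(x, y)` rows either linearly or by Z-order.
- It cuts the sorted rows into 16-row "files".
- For a range filter on each column, it counts how many files min/max statistics fail to prune.

```python
from itertools import product


def z_order_key(x: int, y: int, bits: int = 16) -> int:
    """Morton code: bit i of x goes to position 2i, bit i of y to position 2i + 1."""
    z = 0
    for i in range(bits):
        z |= ((x >> i) & 1) << (2 * i)
        z |= ((y >> i) & 1) << (2 * i + 1)
    return z


def files_scanned(rows, sort_key, rows_per_file, column, lo, hi):
    """Sort rows, cut them into fixed-size files, and count the files whose
    min/max statistics for `column` overlap the filter range [lo, hi]."""
    ordered = sorted(rows, key=sort_key)
    scanned = 0
    for start in range(0, len(ordered), rows_per_file):
        values = [row[column] for row in ordered[start:start + rows_per_file]]
        if min(values) <= hi and max(values) >= lo:  # file cannot be pruned
            scanned += 1
    return scanned


rows = list(product(range(8), range(8)))  # 64 (x, y) rows
for name, key in [("linear (x, y)", lambda r: r), ("z-order", lambda r: z_order_key(*r))]:
    x_files = files_scanned(rows, key, 16, column=0, lo=0, hi=3)
    y_files = files_scanned(rows, key, 16, column=1, lo=0, hi=3)
    print(f"{name}: x<=3 scans {x_files}/4 files, y<=3 scans {y_files}/4 files")
```

With a linear sort, the filter on `y` cannot skip any file. Z-order keeps both filters down to half the files. That balance across columns is why such curves suit tables that are filtered on several columns.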

Autonomous Reflections

Dremio’s powerful query acceleration feature, Reflections, is now fully autonomous. As of v26.0, Dremio can create and manage reflections based on query patterns and user workloads, without human intervention.

What’s more, these new reflections will not become another maintenance headache. Multiple guardrails ensure that any reflections Dremio creates are:

- valuable in terms of query performance improvements,

- autonomously deleted when they stop demonstrating value,

- not consuming excessive resources.
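The three guardrails can be read as a keep-or-drop rule evaluated per reflection. The sketch below is purely illustrative: the `ReflectionStats` fields, the measurement window, the thresholds and the reflection names are all hypothetical. Dremio’s actual scoring is not described in this post.

```python
from dataclasses import dataclass

# HYPOTHETICAL thresholds over a hypothetical measurement window; not Dremio's values.
MIN_QUERIES = 10
MAX_REFRESH_COST_S = 3600.0


@dataclass(frozen=True)
class ReflectionStats:
    name: str
    queries_accelerated: int   # queries served by the reflection in the window
    avg_time_saved_s: float    # mean runtime saved per accelerated query
    refresh_cost_s: float      # compute time spent refreshing it in the window


def decide(stats: ReflectionStats) -> tuple[str, str]:
    """Return (decision, reason) from illustrative value and cost checks."""
    if stats.queries_accelerated < MIN_QUERIES:
        return "drop", "too few queries accelerated"
    if stats.refresh_cost_s > MAX_REFRESH_COST_S:
        return "drop", "refresh cost over budget"
    if stats.queries_accelerated * stats.avg_time_saved_s <= stats.refresh_cost_s:
        return "drop", "saves less time than it costs to maintain"
    return "keep", "net time saved"


for s in [
    ReflectionStats("daily_sales_agg", 500, 2.0, 600.0),
    ReflectionStats("old_raw_copy", 3, 40.0, 120.0),
    ReflectionStats("huge_join", 200, 30.0, 5000.0),
    ReflectionStats("marginal_agg", 50, 1.0, 100.0),
]:
    print(s.name, *decide(s))
```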

In practice, with Autonomous Reflections, BUs will see their SQL query performance improve dramatically without changing their code in any way.
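No code changes are needed because a query planner can answer a query from a reflection whenever the reflection’s result can be rolled up to the query’s. The toy below does this in three steps:
- It pre-aggregates `SUM(amount)` by `(region, month)`.
- It answers a `GROUP BY region` query from that pre-aggregate.
- It checks that the answer matches the one computed from the raw rows.

The data and helper names are illustrative and do not represent Dremio’s planner.

```python
from collections import defaultdict


def sum_by(rows, key_fn, value_fn):
    """SUM(value_fn(row)) GROUP BY key_fn(row)."""
    totals = defaultdict(float)
    for row in rows:
        totals[key_fn(row)] += value_fn(row)
    return dict(totals)


# Illustrative fact rows: (region, month, amount).
orders = [
    ("EMEA", "2025-06", 120.0), ("EMEA", "2025-06", 30.0), ("EMEA", "2025-07", 45.0),
    ("APAC", "2025-06", 80.0), ("APAC", "2025-07", 20.0), ("APAC", "2025-07", 5.0),
    ("AMER", "2025-07", 50.0),
]

# Toy aggregation reflection: SUM(amount) pre-grouped by (region, month).
reflection = sum_by(orders, key_fn=lambda r: (r[0], r[1]), value_fn=lambda r: r[2])

# The user's unchanged query, SUM(amount) GROUP BY region, answered two ways.
from_raw = sum_by(orders, key_fn=lambda r: r[0], value_fn=lambda r: r[2])
from_reflection = sum_by(
    reflection.items(),  # ((region, month), total) pairs
    key_fn=lambda kv: kv[0][0],
    value_fn=lambda kv: kv[1],
)

print(f"rows read: raw={len(orders)}, reflection={len(reflection)}")
print(from_raw == from_reflection)
```

SUM rolls up safely from a finer grouping. An average could not be rolled up this way unless the reflection also carried a count.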

Summary

The dream of self-service analytics has always been at odds with the reality of ETL pipelines. Data engineers do not just write SQL code; they also maintain and optimise query performance. These coding and maintenance tasks are too technically demanding to reasonably expect the average BU to take ownership of them. However, with the advent of Dremio’s MCP Server and Autonomous Performance features, BUs can focus on producing quality data products and analytics insights, whilst the tedious maintenance work takes care of itself.