Whitepaper

Warehouse to Lakehouse Migration Playbook

A guide to modernizing your cloud data warehouse into an open data lakehouse with Dremio

A data lakehouse is an architectural approach that combines the performance, functionality, and governance of a data warehouse with the scalability, flexibility, and cost advantages of a data lake.

With open table formats like Apache Iceberg, you can operate directly over your data in the data lakehouse as you would with SQL tables. Open formats let you future-proof your data architecture without locking your data into proprietary warehouse formats or requiring endless data copies to support complex ETL processes.

Choosing a data lakehouse platform isn't just about storage; it's about accelerating access, reducing cost, and supporting AI-driven decisions. Dremio is built for this next chapter. Here’s what makes it different.

A single universal access layer for data consumers to build business metrics and virtual data marts with zero ETL.

Best price-performance data lakehouse engine, delivering up to 45% faster performance than the leading cloud data warehouse.

Complete data lakehouse management with an intelligent data catalog for Apache Iceberg, automatic data optimization, and a Git-for-data experience.

Dremio is the only data lakehouse platform that meets technology leaders at all stages of their data maturity journey. We help enterprises deliver faster access to their data and reduce the cost of cloud data warehouses.

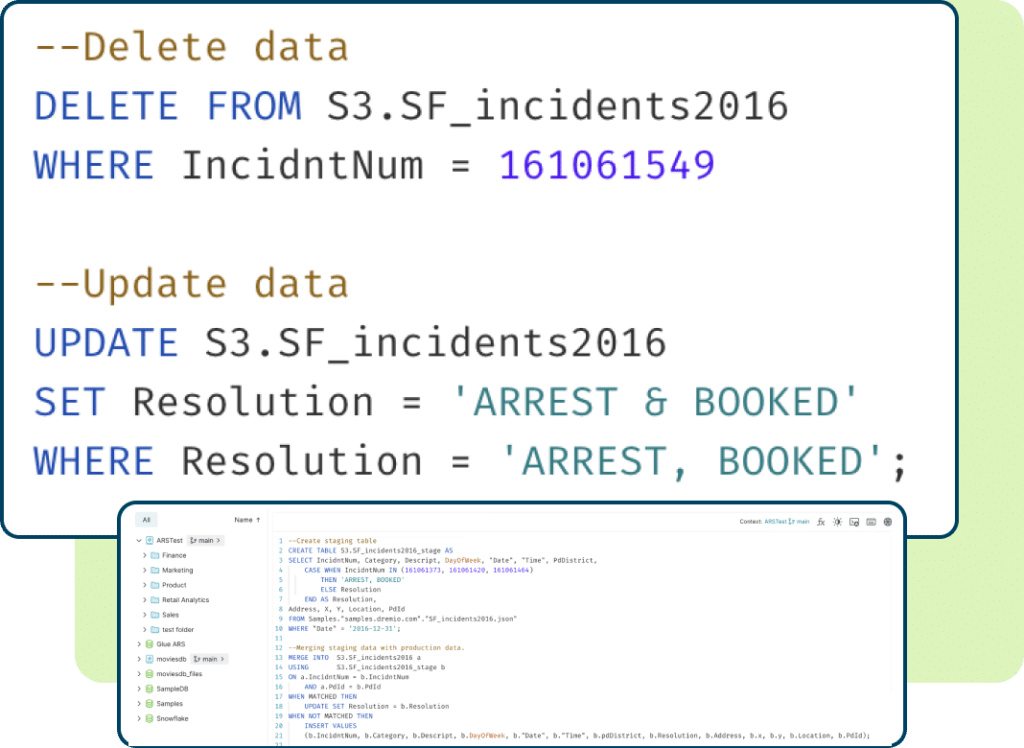

Bring your existing SQL data warehouse skill sets to the data lakehouse. Dremio’s SQL lakehouse engine supports DML, DDL, schema and partition evolution, time travel, and more.

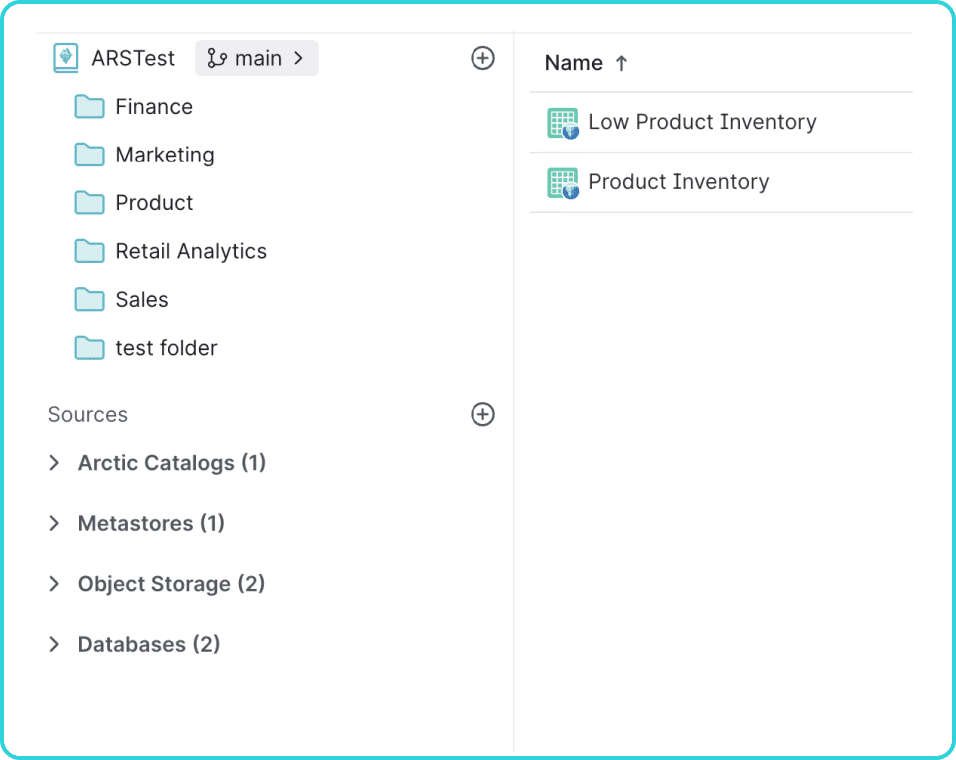

A single access layer to govern and make decisions about how enterprise data is used for self-service. Dremio’s universal semantic layer allows teams to build and share data products from one place.

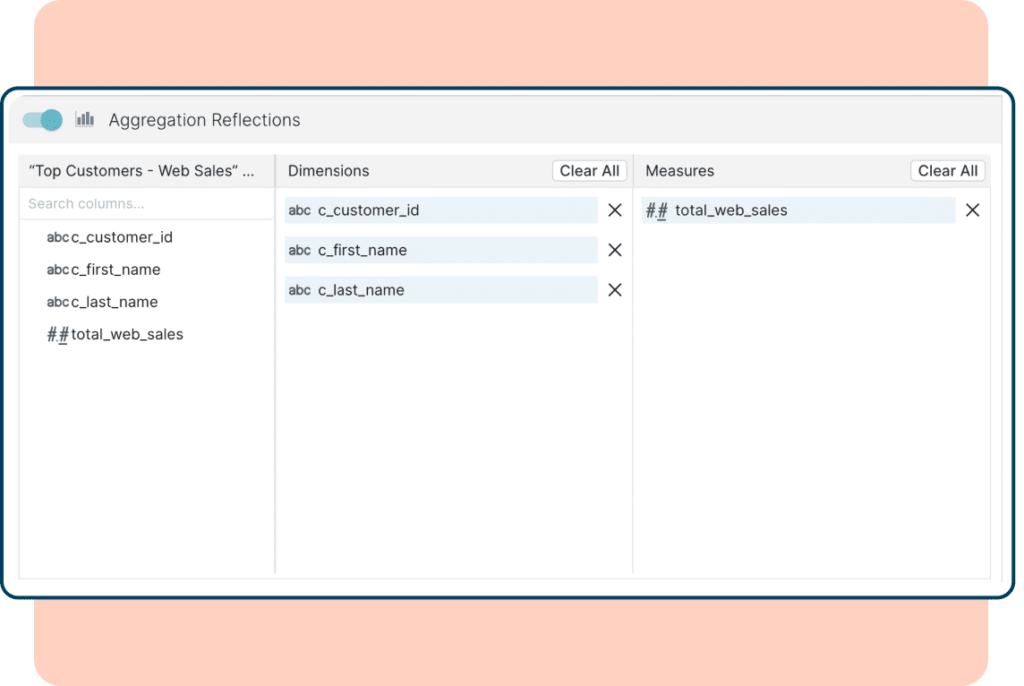

Supercharge your business intelligence dashboards without creating materialized views, data marts, or copying into expensive BI extracts. Powered by Reflections, accelerate analytics insight across the data lakehouse and relational databases while reducing your total cost of ownership.

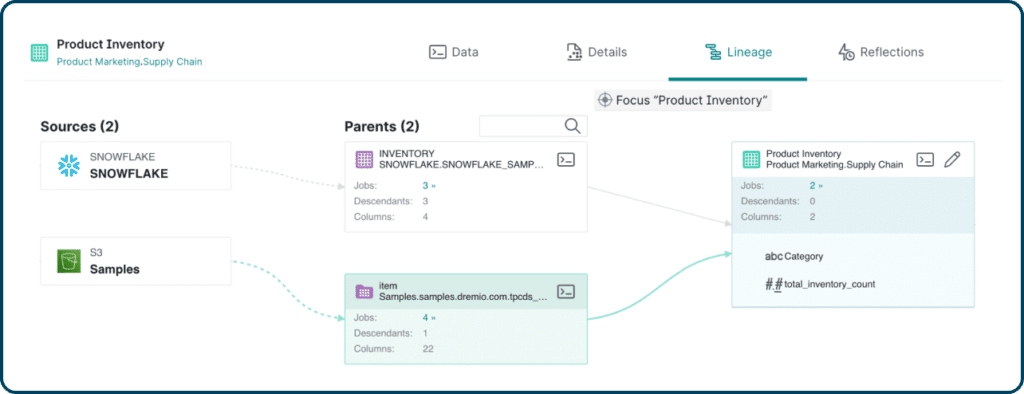

Manage all of your data sources in the cloud and on-premises, including full data lineage. Empower data consumers to discover, access, and leverage data products on their own with self-service capabilities like search, tags, and wikis.

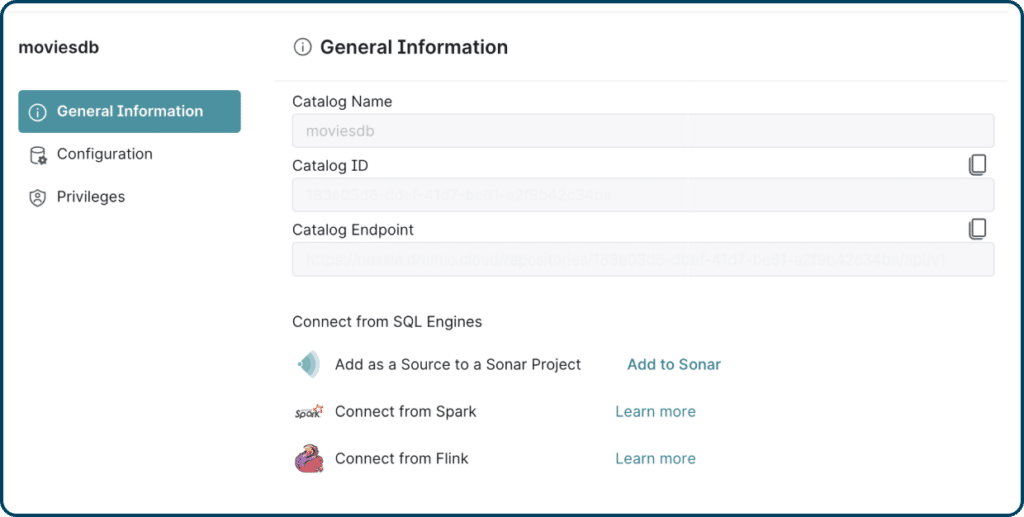

Automate tedious data management tasks in the data lakehouse. Dremio’s lakehouse catalog manages your Apache Iceberg metadata, and automatically optimizes and cleans up your files to ensure high-performance queries and reduced storage costs.
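One widely used optimization for Iceberg tables is compaction: rewriting many small data files into fewer, larger ones so queries open fewer files. Apache Iceberg's own `rewrite_data_files` action offers a bin-pack strategy for this. The sketch below is a toy planner in plain Python that shows the bin-packing idea only; it is not Dremio's implementation, and the `plan_compaction` function, the 128 MiB target, and the small-file threshold are hypothetical choices for illustration.

```python
from dataclasses import dataclass

# Hypothetical tuning values, chosen only for illustration.
TARGET_FILE_BYTES = 128 * 1024 * 1024          # desired size of a rewritten file
SMALL_FILE_THRESHOLD = TARGET_FILE_BYTES // 4  # files below this are candidates


@dataclass(frozen=True)
class DataFile:
    path: str
    size_bytes: int


def plan_compaction(files: list[DataFile],
                    target: int = TARGET_FILE_BYTES,
                    threshold: int = SMALL_FILE_THRESHOLD) -> list[list[DataFile]]:
    """Group small files into bins whose combined size stays at or under `target`.

    Uses first-fit decreasing: sort candidates largest first, then place each
    file in the first bin with room, opening a new bin when none fits.
    """
    candidates = sorted((f for f in files if f.size_bytes < threshold),
                        key=lambda f: f.size_bytes, reverse=True)
    bins: list[list[DataFile]] = []
    bin_sizes: list[int] = []
    for f in candidates:
        for i, used in enumerate(bin_sizes):
            if used + f.size_bytes <= target:
                bins[i].append(f)
                bin_sizes[i] += f.size_bytes
                break
        else:
            # No existing bin has room, so start a new one.
            bins.append([f])
            bin_sizes.append(f.size_bytes)
    # A bin holding a single file gains nothing from a rewrite, so skip it.
    return [b for b in bins if len(b) > 1]


if __name__ == "__main__":
    MiB = 1024 * 1024
    sizes = [10, 20, 30, 5, 200, 100, 40]  # sizes in MiB
    files = [DataFile(f"part-{i}.parquet", s * MiB) for i, s in enumerate(sizes)]
    for group in plan_compaction(files):
        print([f.path for f in group])
```

With the sample sizes, only the four files under 32 MiB are candidates, and they fit together in one rewrite group; the 40, 100, and 200 MiB files are left alone.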

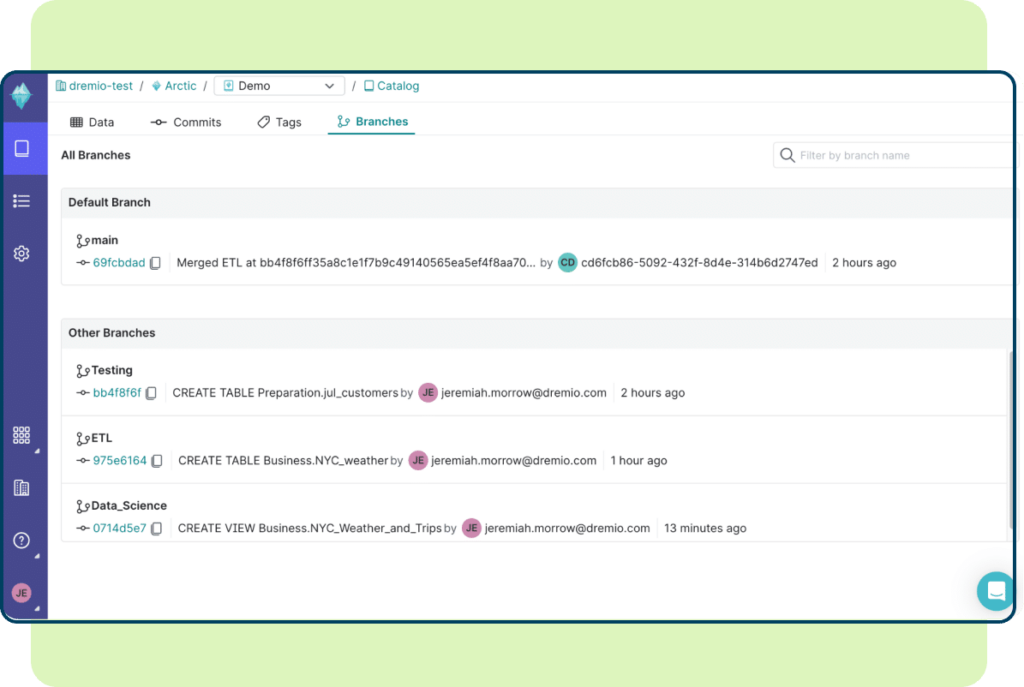

Use Git-inspired versioning to deliver a consistent and accurate view of your data. Spin up isolated, zero-copy clones of production data in seconds for development, testing, data science, experimentation, and more. Drop or merge changes atomically to ensure consistency for production users, and recover from mistakes with a simple rollback.
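The branching workflow described above can be illustrated with a toy in-memory model. The key idea is that a branch is only a named pointer to an immutable snapshot, so creating one copies no data files, and merging or rolling back is a single pointer update. This is a hypothetical sketch: the `Catalog` class and its methods are invented for illustration and do not reflect the API of Dremio or any real catalog, and the merge shown is a simplified fast-forward-only merge.

```python
from __future__ import annotations

from collections.abc import Iterable
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Snapshot:
    """Immutable table state: references to data files plus its parent snapshot."""
    snapshot_id: int
    files: frozenset[str]
    parent_id: Optional[int]


class Catalog:
    """Hypothetical catalog where branches are named pointers to snapshots."""

    def __init__(self) -> None:
        self._snapshots: dict[int, Snapshot] = {0: Snapshot(0, frozenset(), None)}
        self._branches: dict[str, int] = {"main": 0}
        self._next_id = 1

    def head(self, branch: str) -> Snapshot:
        return self._snapshots[self._branches[branch]]

    def create_branch(self, name: str, source: str = "main") -> None:
        # Zero-copy: the new branch points at the same snapshot; no files move.
        if name in self._branches:
            raise ValueError(f"branch {name!r} already exists")
        self._branches[name] = self._branches[source]

    def commit(self, branch: str, add: Iterable[str] = (),
               remove: Iterable[str] = ()) -> int:
        # A commit creates a new snapshot; earlier snapshots stay untouched.
        parent = self.head(branch)
        files = (parent.files - frozenset(remove)) | frozenset(add)
        snap = Snapshot(self._next_id, files, parent.snapshot_id)
        self._snapshots[snap.snapshot_id] = snap
        self._branches[branch] = snap.snapshot_id
        self._next_id += 1
        return snap.snapshot_id

    def _is_ancestor(self, ancestor_id: int, snapshot_id: Optional[int]) -> bool:
        # Walk parent links from snapshot_id back to the root.
        while snapshot_id is not None:
            if snapshot_id == ancestor_id:
                return True
            snapshot_id = self._snapshots[snapshot_id].parent_id
        return False

    def merge(self, source: str, target: str = "main") -> None:
        # Fast-forward only: allowed when target's head is an ancestor of source's.
        src_id, tgt_id = self._branches[source], self._branches[target]
        if not self._is_ancestor(tgt_id, src_id):
            raise RuntimeError("target has diverged; a real system needs a three-way merge")
        # One pointer update makes every commit on the branch visible at once.
        self._branches[target] = src_id

    def rollback(self, branch: str, snapshot_id: int) -> None:
        # Reset the branch pointer to an earlier snapshot in its own history.
        if not self._is_ancestor(snapshot_id, self._branches[branch]):
            raise ValueError("snapshot is not in this branch's history")
        self._branches[branch] = snapshot_id


if __name__ == "__main__":
    cat = Catalog()
    cat.commit("main", add={"orders-1.parquet"})   # snapshot 1
    cat.create_branch("etl")                       # no data copied
    cat.commit("etl", add={"orders-2.parquet"})    # snapshot 2, not yet on main
    print(sorted(cat.head("main").files))          # ['orders-1.parquet']
    cat.merge("etl")                               # main now points at snapshot 2
    print(sorted(cat.head("main").files))          # ['orders-1.parquet', 'orders-2.parquet']
    cat.rollback("main", 1)
    print(sorted(cat.head("main").files))          # ['orders-1.parquet']
```

Because snapshots are never modified in place, production readers on `main` keep seeing a consistent state while work happens on another branch.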


Global enterprises across industries trust Dremio to deliver fast, governed access to their most critical data. Explore how customers like NCR and AP Intego modernized their data infrastructure with an intelligent lakehouse.

Looking to deepen your understanding of open data architectures and modern data strategies? These resources cover lakehouse adoption, migration best practices, and the role of semantics in self-service and AI.



This 2-hour virtual event is designed to provide data leaders with market data and expert insights to help them benchmark their organization against peers and determine the 2024 initiatives that are likely to drive successful outcomes for the business. Learn about the state of the lakehouse, table format, data mesh, and AI adoption.


In this blog, learn how Dremio's universal semantic layer makes self-service over your data lakehouse easy.

FAQs

A lakehouse platform combines the scalability of data lakes with the performance and structure of data warehouses. Unlike traditional systems that require data to be copied and moved between tools, a lakehouse provides a single, open environment for querying, analyzing, and managing data.

Dremio is the intelligent lakehouse platform built for the AI era. It unifies data across all sources without ETL, accelerates query performance automatically, and provides rich semantic context, allowing humans and AI agents to work from the same governed foundation.

Dremio goes further. It’s not just a lakehouse—it’s an intelligent lakehouse. With autonomous optimization, a unified semantic layer, and zero-ETL data federation, Dremio meets the needs of both data teams and AI agents.

A lakehouse helps organizations:

With Dremio, you get more: faster performance without manual tuning, semantic search across all sources, and real-time access for AI-driven applications. Teams can explore live data, build trusted datasets, and deliver governed access from one platform.

Most lakehouses rely on open file formats, table formats like Apache Iceberg, and distributed query engines. They provide ACID transactions, schema evolution, and support for multiple compute engines.

Dremio is built natively on Apache Iceberg, Apache Polaris, and Apache Arrow. It offers an integrated catalog, automatic query acceleration (Reflections), and seamless access to all data sources through zero-ETL federation. These technologies work together to deliver fast, open, and intelligent data access.

Data architecture has come a long way—but the goal has always been the same: make data usable. Here’s how we got here:

From data warehouses: structure, but slow

In the 1990s and 2000s, data warehouses brought order and governance—but at a cost.

Then came data lakes: flexible, but chaotic

As data types expanded, organizations needed scalable storage. Data lakes delivered that—but lacked structure.

Enter the lakehouse: balance and unification

The lakehouse combined the flexibility of lakes with the structure of warehouses.

Now: the intelligent lakehouse

Today, traditional lakehouses fall short—especially for AI workloads. Dremio moves beyond the basics:

From warehouse rigidity to lake sprawl to the intelligence of today’s lakehouse, the direction is clear: data must be fast, open, and ready for every user and agent. Dremio completes that journey.

Start by eliminating silos and giving users and AI agents direct access to live data. A successful lakehouse should support:

Dremio delivers on all of this. It replaces brittle pipelines with virtualized access, layers business logic across all sources, and uses intelligent caching to boost performance. You get faster time to insight—and a platform ready for AI.

Common lakehouse platforms include Dremio, Databricks, and Snowflake. But not all are equally open or optimized for modern AI workloads.

Dremio stands out as the only lakehouse platform built natively on Apache Iceberg, Polaris, and Arrow. It offers unmatched openness, zero-ETL data federation, autonomous query performance, and full support for Agentic AI systems.

Choosing a lakehouse platform is a strategic decision. It shapes how your teams access, govern, and activate data—not just today, but long term. The right platform aligns with your architecture, supports AI and analytics, and works across every layer of your stack.

Here’s what to look for:

Dremio delivers on all of this.

It’s the only lakehouse platform built natively on Apache Iceberg, Arrow, and Polaris. Dremio gives you real-time, zero-ETL access, autonomous performance, and governed, AI-ready data—all through a shared semantic layer that works across your tools.

Choose the lakehouse that moves with your business—not against it.