Introduction

When using standard SQL, we can create a table by issuing the CREATE TABLE statement, however, this will create an empty table that we then have to insert data into. Dremio provides the formal ability to export virtual datasets (VDS) to their respective sources (S3, ADLS, HDFS, and NAS) using standard SQL-based commands. Create table as select (CTAS) will allow users to create and populate a virtual dataset and write it to specific sources that they have WRITE access to in a single SQL statement.

In this tutorial, we explore the use cases that this feature will help you with, then we explore how you can enable it in any of the data sources that support this ability today. Additionally, we will execute the CTAS command and check out the results.

Prerequisites

To get the most of this tutorial, we recommend that you first follow getting oriented to Dremio and working with your first dataset tutorials. Also, please keep in mind that at the moment of writing of this tutorial, the only data sources that support CTAS are: Amazon S3, ADLS and HDFS, and NAS.

The CTAS Use Case

The use of high performance parallel exports allow users to create, reorganize, download and export large (>1 million rows) or small datasets from any source of data into any of the CTAS-supporting data sources within Dremio. When users employ the CTAS statement, Dremio will then store the results of the query into one or many parquet files (depending on the size of the source) on which users have full control on the naming, destination path and security rules. Like any dataset created within Dremio, the results of the high performance parallel exports will be cataloged and made searchable so users can easily find, share, and collaborate.

Enabling CTAS on a Source

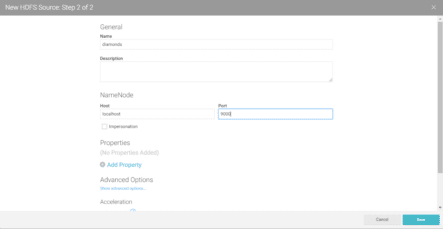

To enable CTAS on the supported sources, first add the CTAS-supported data source that you want to use. For purposes of this tutorial, we will work with Amazon S3.

Once we selected, click on “Advanced Options”, then select “Enable exports into the source (CTAS and DROP)” and click “SAVE”

The steps we have performed up to this point will enable the functionality on the source connector using impersonation for permissions to allow WRITE access to the source via SQL-based commands.

Once the datasource has been added, you will be able to see all the buckets available in your S3 environment.

Using CTAS on a Source

When creating a CTAS on a source, Dremio will generate a parquet file (or several parquet files, depending on the size of the datasource) that contains the results of the “SELECT” statement and will place this file in a directory inside the source that you will be working with. The steps to create this directory are somewhat different for each one of the supported data sources. In this tutorial, we will use an S3 bucket; please refer to your data source’s documentation to verify the steps that you need to follow to create and grant permissions to the new directory where the CTAS results will be stored. A great advantage of this, is that you can leverage the file system permissions to ensure secure access to the data.

In this tutorial, I will be working with the San Francisco Police Department incidents dataset that I already have available in one my S3 buckets.

After reviewing the data inside the VDS, I will go ahead and write the CTAS statement to create data export using the following syntax:

CREATE TABLE [S3 source].["bucket name"].[file_name] [HASH PARTITION BY (column, . .) ] [LOCALSORT BY (column) ] AS SELECT [statement]

In this case, I want to create a subset of 100 records from the Incidents dataset and store it into my “dremio.uploads” bucket using the following statement.

CREATE TABLE jeffs3."dremio-upload".incidents100_subset as SELECT * FROM "@lucio".incidents Limit 100

To do so, simply click on the “New Query” icon on the top of your working space

Then type the SQL statement in the SQL editor field and click “Run”.

Once the query runs, Dremio will provide a brief report of the results. Here we can see that the query provided 100 records and the results have been stored in a Parquet file in the path that was specified in the query.

Visualizing the results

Now, whether we want to verify or make use of the results that the query generated, we will need to head out to the datasource that we specified in the query. Select the datasource that you are working with and then from the list of available buckets, select the one that you specified in the query. Here first the results we will present as a Parquet file, click on it.

At this point you will be able to see a sample of the data that is contained in the file. Click the “Save” button.

Dremio will immediately execute a query to preview the results. Notice that at this point we are working directly on the parquet file that was created using the CTAS statement.

At this point we can curate the data, add catalog information, save the dataset somewhere in our local space and/or start analyzing the data using our BI tool of choice.

Joining Data from different sources

Using CTAS in Dremio still allows the flexibility of connecting to and joining data from multiple and different data sources. In this next use case, I’ve been presented with two different datasets, one flat CSV file that contains the user ID number and name for a list of employees, and another file stored on S3 that contains the ID and biographical information for these employees.

Normally, in a situation like this, I would have to figure out a way to join these two datasets using a data integration tool. However, let’s see how easy we can join these two sets into a single file that we will create into S3 using the CTAS feature in Dremio.

Uploading the CSV file

First, I will upload into Dremio the CSV file that was provided to me. To do this, simply click on the purple grid icon on the top of your working space.

Then navigate to the local directory where the file is located, or you can also drag and drop the file here. Click “Save”

In the next screen, from the drop-down menu select the appropriate format for your file. In my case, I will select “Text(delimited)”

Then, make the appropriate changes needed so the data is read correctly into Dremio from the file. I’ll change the line delimiter as well as select the “Extract field names” option. Click “Save”

The new dataset should now be listed on your working space.

Now for the rest of the data. I know that it is stored in a dataset called “pbsample” so I’m going to search for it in Dremio.

And here I can see that this dataset contains the missing fields that I need to complete my employees dataset.

I will save this dataset under the name of “id_noname” under my working Space.

Now, to complete the task, I want to join “ID_name” and “ID_noname” datasets and save them into a new table that I will create using Dremio’s CTAS feature. To do that, click on the “New Query” icon.

I will use the CTAS syntax and join the tables at the same time in the SELECT statement as follows:

CREATE TABLE jeffs3."dremio-upload".employees_list_final as SELECT nest.Gender AS Gender, nest.Age AS Age, nest."Height(in)" AS "Height(in)", nest."Weight(lbs)" AS "Weight(lbs)", nested.ID AS ID, join_ID_name.ID AS ID0, join_ID_name."""Name""" AS """Name""" FROM ( SELECT ID, Gender, Age, "Height(in)", "Weight(lbs)" FROM "@lucio".id_noname ) nest INNER JOIN "@lucio".ID_name AS join_ID_name ON nest.ID = join_ID_name.ID

Once the query is in, click “Run”

Once the query runs successfully, you will be able to see the location of the new file under the “Path” field in the results grid.

To see the results, navigate to the indicated path within Dremio and open the parquet file. Once you save it, Dremio will present you with the option to start working with the joined dataset.

To make this dataset more presentable and usable for my team, I will make some changes as well as adding the email addresses for this list of employees.

First, I will drop the extra ID field by selecting the dropdown menu and selection the “Drop” option.

Notice that in the results, there is a name that needs a bit of clean-up. I will go ahead and clean that fieldname and also the value ‘ “Alex ‘ so we don’t come across any string issues later.

From the dropdown menu for the “name” field select “Replace Text”. Here I will be cleaning only one value so I can get away with replacing anything that contains double quotes with the value that I want. However for more complex transformations you can use any supported SQL function in the SQL editor or you could also use regular expressions.

Once I’m satisfied with the results, I can click “Apply.” Notice that Dremio automatically created the query used to alter the value and shows it on the SQL Editor.

One last thing before this list is ready, we need to add email addresses to the names. This can simply be done by adding a new field. Click on the “add field” icon and then concatenate the “@email” string to the name field.

Before applying the changes make sure that you preview the results to make sure that they are what you are expecting.

Now we just need to save the dataset and it will be ready to be shared and used by other members of my team.

The same way we have joined data from a local CSV file and an S3 bucket and export it to a different S3 bucket using the CTAS statement, you can join and export data from any of the supported data sources in Dremio into parquet files in S3.

In this new scenario, I have a dataset that contains Yelp reviews stored in Elasticsearch and the rest of the information for the same Yelp reviews is stored in a MongoDB source. Following the previous steps I can use a CTAS statement to create a parquet file on S3 that contains the joined data from these two sources, as follows:

We can also verify the origin of the data using Dremio’s data lineage feature

Navigating to the datasource allows us to verify that the joined data has been placed in the indicated S3 bucket

If we have the proper access to our storage environment, we can also double check where the parquet files have been created.

Last but not least, we can query the newly created dataset to explore its contents.

Dropping a table

If you recall, at the beginning of the tutorial when we enabled the CTAS feature, enabling this feature also enabled the option to DROP the tables that were created in that source.

Once you are done using the table that have been created using the CTAS statement, you can drop it by simply issuing the DROP TABLE statement against the same path where the table is located, as follows:

Notice that I’m giving Dremio the path of the table that I originally created using the CTAS statement, and I’m not providing the path to the parquet file inside the bucket.

To verify that the table is dropped, we can navigate to the data source path given in the DROP statement and make sure the parquet files have been removed.

In addition, if you have access to your AWS console, you can check that the bucket has been removed from your environment as well.

Conclusion

In this tutorial we walked through the steps of using Dremio’s new High Performance Parallel Export functionality. Users can use this feature to write back to sources where they have “Write” access to. In this case we used Amazon S3 and we learned how Dremio stored the results of the CTAS statement as a parquet file on the S3 bucket of our choice. Additionally, we were able to use the create table statement along with a Join statement to create a dataset composed by two different data sources and save the results directly into an S3 bucket for later use.

We hope that you enjoyed this tutorial, stay tuned to learn more about how you can use Dremio to gain more value from your data, faster.

Sign up for AI Ready Data content