30 minute read · October 29, 2019

Cluster Analysis The Cloud Data Lake with Dremio and Python

· Dremio Team

Introduction

Today’s modern world is filled with a myriad of different devices, gadgets, and systems equipped with GPS modules. The main function of these modules is to locate the positions of the moving objects and record them to a file called a GPS track. The services for accounting and processing such files, which are generally called GPS data, are becoming more and more popular. They enable the collection and analysis of data over a long-term time period, which makes it possible to use this data to solve different tasks such as logistics, optimization, and analytics problems, as well as systems monitoring and controlling (depending on the intensity of the objects’ movement).

Usually, the GPS data processing algorithm includes the following steps:

- Recording GPS data for certain time periods, usually a few hours per day.

- Saving data in GPS tracks and storing them on special services, e.g., Strava, GPS-series, etc.

- When enough data is collected, analyzing it and making recommendations.

While these technologies and services have become more and more popular, the following problems appear in GPS analysis processes:

- Data redundancy – usually data is stored at a high frequency (every second).

- Different types of GPS tracks – data without marking can be recorded when walking or playing sports, driving a car, etc.

- Large volumes of GPS data – more and more people or systems use these technologies.

To solve these types of problems, data is split into groups according to attributes. This approach makes it possible to apply the analytics methods to a certain data group and as a result, to simplify data processing. The methods and algorithms that use these approaches are called clustering methods. They are usually used for unsupervised machine learning algorithms to split data into clusters.

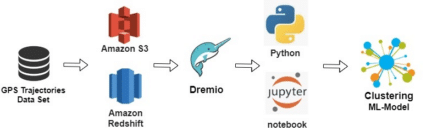

In this tutorial, we will show you how to cluster data from the GPS Trajectories Data Set. The general data processing algorithm is shown below:

Assumptions

We assume that you have the following items already installed and setup:

Data overview

The data is presented as two CSV files:

- go_track_trackspoints – GPS data by geographical coordinates (latitude and longitude), geographical location, time for each point, and track ID

- go_track_tracks – tracks with additional parameters: speed, distance, weather

Let’s analyze the data structure for further data processing with Pandas.

import pandas as pd

# path to data

def path_to_csv(file):

return os.path.join(os.path.abspath(os.curdir), file)

# CSV-files

csv_tracks_points = path_to_csv('go_track_points.csv')

csv_tracks = path_to_csv('go_track_tracks.csv')

# load CSV to DataFrame

df_points = pd.read_csv(csv_tracks_points)

df_tracks = pd.read_csv(csv_tracks)

# data shape

df_points.info()

df_tracks.info()

# DataFrame

df_points.head(5)

Df_tracks.loc[0]

The data presented in the form of geographical coordinates more fully describes all GPS tracks, so we will use it for clustering. In general, all other data that describes GPS tracks will be used for the analysis of the clustered data.

AWS operations

We will use AWS Redshift high-performance databases to access and store GPS data. To upload data, follow the next steps:

- Create a bucket in AWS S3 storage and upload CSV files to it.

- Create AWS Redshift cluster and database (or use an existing one).

- Create two datatables with matching CSV files fields.

- Use the COPY command to copy data from CSV files to the Redshift datatables.

- Configure access to data.

The first and second steps were described in previous articles. so we won’t focus on them in detail; just note the important issues and results.

There are two possible ways to create an AWS bucket and upload files to it: 1) using the AWS interface, and 2) AWS SDK code. After that, you will get an AWS bucket and two uploaded CSV files. You can optionally configure access to it.

At this step, it is important to store the path to the AWS bucket and CSV files, and also to acquire credentials in the AWS Identity and Access Management (IAM) settings.

You will need a master username and password to access the cluster and database. We’ll use the previously created AWS cluster and database.

Optionally, you will need a JDBC URL to access the database with the SQL client (SQL-workbench). But for our task, we will use the new AWS console interface (Query Editor).

To use the AWS Query Editor functionality, you need to:

- Select the Query Editor in the AWS console menu

- Select the proper cluster

- Enter your credentials.

Next, let’s upload our CSV files. Select a new Query tab and create a SQL query that will generate a datatable with the columns from the CSV file.

CREATE TABLE gps_points ( id varchar(255), latitude varchar(255), longitude varchar(255), track_id varchar(255), time varchar(255) ); copy gps_points from 's3://gps-data-tracks/go_track_points.csv' credentials 'aws_access_key_id=XXXXX;aws_secret_access_key=XXXXX' DELIMITER ',' IGNOREHEADER 1 SELECT * FROM gps_points

Click the Run button and open Query results.

As a result, we will get a datatable in the AWS Redshift database with data from the CSV file. Note that this procedure must be performed twice for each CSV file separately, with references to file paths and column names in the datatables.

Data processing in Dremio

There are some difficulties in data processing when using different data sources or adding data according to some attributes (as in our case). For example, there might be troubles with data features such as merging, data aggregation, conversion to the required format, or generating new data features based on existing attributes. Luckily, Dremio can help with this.

In the Dremio GUI, click on the button to add a new source. Then choose Amazon Redshift. The following window should appear:

Enter the database name for Dremio and the JDBC URL that defines the required AWS Cluster and database. Fill in the credentials of the AWS Redshift database and click Save. After that, we will receive a new data source that contains datatables with GPS data.

Let’s review the datatables and check what data operations they require.

First, we need to transform the data formats. For the specific data column, choose the required data format in the submenu. Optionally, create a new column based on the new data format or simply replace it. Click the Apply button.

However, while changing the type of the TIME column, we got NULL.

This can be explained by the fact that TIME data is enclosed in “ “ symbols. Let’s use the Extract function and separate time data.

Finally, save the processed data as a virtual dataset in Dremio space.

After repeating similar transformations for the second dataset, we will receive two virtual datasets ready for analysis with clustering algorithms.

Clustering algorithms

Machine learning models are based on algorithms that use statistical data correlations and help to solve problems that have no direct solution or are too complex.In our case, the data describes a huge range of GPS points that require analysis. With standard analysis tools, it would be difficult to get an accurate result since there is a lot of similar data. Therefore, we will apply clustering algorithms that split the data into clusters, making data analysis quite a simple procedure.

In this tutorial, we will focus on the two different clustering algorithms, K-means and DBSCAN.

We will use K-means (a simpler algorithm) to analyze the main big clusters of GPS points, and the DBSCAN algorithm for a more precise analysis of the GPS points for the most important cluster (the largest one). After that, we will analyze the GPS data from the resulting clusters.

Connecting Dremio to Jupyter Notebook

In the script below, we define the connection format and credentials for the Dremio platform, form a SQL type query for the Dremio space, and upload data to the Pandas DataFrame.

import pyodbc

import pandas as pd

# Credentail query

credentails = f'DSN=Dremio Connector;UID={user_id};PWD={user_password}'

# ODBC connection to the Dremio platform

cnxn = pyodbc.connect(credentails, autocommit=True)

# SQL type query to retrive the Dremio's space data

sql_points = r'SELECT * FROM "GPS Data"."gps-pont"'

sql_tracks = r'SELECT * FROM "GPS Data"."gps-tracks"'

# Load data

df_points = pd.read_sql(sql_points, cnxn)

df_tracks = pd.read_sql(sql_tracks, cnxn)Note that ‘user_id’ and ‘user_password’ are the credentials for the Dremio platform. So we have two DataFrames with GPS data.

The K-means algorithm must have a pre-defined number of clusters. This requires a pre-analysis of the GPS data. So we’ll perform this procedure by creating GPS data visualizations using the gmplot and geopandas libraries.

First, create GPS data in the form of geopandas DataFrame:

import geopandas as gpd

from shapely.geometry import Point, Polygon

# coordinate systems

crs = {'init':'epsg:4326'}

# create GPS points for graphics

geometry = [Point(xy) for xy in zip(df_points['longitude'],

df_points['latitude'])]

# create GeoDataFrame with GPS data points

geo_df = gpd.GeoDataFrame(df_points, crs=crs, geometry=geometry)

# reduce data to every 10 sec

df = df_points.iloc[::2, :]

df.head(5)

Note that we have reduced GPS data redundancy for more accurate analysis. So the GPS data on the map looks like this.

# define the map's center and map zoomed in

import gmplot

gmap = gmplot.GoogleMapPlotter(df.latitude[0], df.longitude[0], 15)

gmap.plot(df.latitude, df.longitude)

# html generating

gmap.draw("map.html")Now we can see that GPS data is located in Brazil and has at least two different clusters. Let’s see how the GPS tracks are placed on this map with SHP data for the Brazilian region.

import matplotlib.pyplot as plt

fix, ax = plt.subplots()

# shape data

bra_map = gpd.read_file('BRA_adm0.shp')

bra_map.plot(ax=ax, color="grey")

# gps points data

for track in geo_df['track_id'].unique():

geo_df[geo_df['track_id'] == track].plot(ax=ax,

markersize=50,

color="red")

plt.title('GPS points on Brazilian region')

plt.xlabel("Longitude")

plt.ylabel("Latitude")

plt.grid(True)

plt.show()

We can conclude that GPS data is clearly split into two clusters, and we can also implicitly split them into two more. Let’s make sure of this and build a K-means model.

from sklearn.cluster import KMeans

from sklearn.metrics import silhouette_score

# define clusters

num_clusters = 3

# KMean model

features = df_min[['latitude', 'longitude']].to_numpy()

cluster_labels = KMeans(n_clusters=num_clusters,

random_state=0).fit_predict(features)

# result

print('Unique clusters: ', np.unique(cluster_labels))

print('Clustering accuracy: ', silhouette_score(features,

labels=cluster_labels))

As a result, we have clustered the GPS data into three clusters with high accuracy. The accuracy of clustering is defined by the Silhouette score metrics.

We store GPS data to the proper clusters and define the volume for each cluster.

# turn the clusters in to a pandas series

clusters = pd.Series([coords[cluster_labels == cluster].to_numpy()

for cluster in range(num_clusters)])

print('Clusters: ', clusters)

# clusters length

clusters_length = [len(cluster) for cluster in clusters]

print('Clusters length: ', clusters_length)

The clusters’ volumes are quite different. This can be explained by the fact that GPS data is concentrated around one city. Let’s visualize these clusters.

The visualization of the scatter plot and annotation cluster looks like this:

def get_center_point (cluster):

centroid = (MultiPoint(cluster).centroid.x,

MultiPoint(cluster).centroid.y)

Center_point = min(cluster,

key=lambda point: great_circle(point, centroid).m)

return tuple(center_point)

# get the center for each cluster

center_points = clusters.map(get_center_point)

lats, lons = zip(* center_points)

center_clusters = pd.DataFrame({'lon':lons, 'lat':lats})

# centroids

for ind, (_ , center) in enumerate(center_clusters.iterrows()):

ax.scatter(center['lon'],

center['lat'],

s=clusters_length[ind],

alpha=0.7)

# data

df_scatter = ax.scatter(df['longitude'],

df['latitude'],

c='k',

alpha=0.9,

s=3)

ax.set_title('GPS Data and KMean clusters')

ax.set_xlabel('Longitude')

ax.set_ylabel('Latitude')

plt.grid(True)

plt.show()

Note that clusters are displayed in different colors, and the centroid diameter shows the size of the clusters.

Let’s build the same chart using Basemap library and geomap binding. We’ll show only the part of the code that differs from the previous one.

from mpl_toolkits.basemap import Basemap

# basemap

m = Basemap(projection='lcc',

resolution='c',

width=3000000,

height=3000000,

lat_0=-20,

lon_0=-43)

m.shadedrelief()

m.drawcoastlines(color='gray')

m.drawcountries(color='gray')

m.drawstates(color='gray')

# Lines

parallels = np.arange(-28.,-10., 4)

# labels = [left,right,top,bottom]

m.drawparallels(parallels,labels=[False,True,True,False])

meridians = np.arange(-50,-34, 5)

m.drawmeridians(meridians,labels=[True,False,False,True])

# City

x, y = m(-37, -10.9)

plt.plot(x, y, 'ok', markersize=5)

plt.text(x, y, 'Aracaju')

ax.set_title('GPS Data and KMean clusters')

ax.set_xlabel('Latitude')

ax.set_ylabel('Longitude')

plt.show()

We can conclude from the graphics that most of the GPS data (70%) is concentrated in the Aracaju city area. In order to show the visuals more effectively, let’s present the clusters with TSNE decomposition.

from sklearn.manifold import TSNE

# model TSNE

tsne = TSNE(n_components=2, init='random', random_state=0)

features_tsne = tsne.fit_transform(features)

# plot

plt.scatter(features_tsne[:, 0], features_tsne[:, 1], c=cluster_labels)

plt.title('Clusters for GPS data by TSNE')

plt.grid(True)

plt.show()

We can confirm that the main GPS data is concentrated in the first cluster. So let’s focus on its analysis and build a similar K-means clustering model.

# reduce data for the first cluster # df = df[(df['longitude'] > -37.4) & (df['longitude'] < -36.9)]

The code for the K-means model and the related graphics matches the previous ones, so we won’t show it and will present only the result. The only thing to note is that for the K-means model, we will pre-define four clusters. Here is the result:

The scatter plot function shows the following:

Let’s generate the same data with TSNE decomposition.

We’ll demonstrate the overlapping of cluster centers and their volumes on the map using the gmplot library.

So the charts show that GPS data is clustered with sufficient accuracy into four different clusters.

Clustering GPS data with DBScan

The DBSCAN algorithm defines the number of clusters by itself. At the same time, we need to define the eps parameter - the maximum distance between two samples. In our case, we’ll define this parameter as 100 m per radians value. The DBSCAN clustering model is shown below.

from sklearn.cluster import DBSCAN

# reduce data

sample_rate = 22

df = df.iloc[range(0, len(df), sample_rate)]

# GPS points as features

features = df.as_matrix(columns=['latitude', 'longitude'])

# earth's radius in km

kms_per_radian = 6371.0088

# define epsilon as 0.1 kilometers

epsilon = 0.1 / kms_per_radian

# DBSCAN model

db = DBSCAN(eps=epsilon,

min_samples=1,

algorithm='ball_tree',

metric='haversine').fit(np.radians(features))

# define cluster lables

cluster_labels = db.labels_

# the number of clusters

num_clusters = len(set(cluster_labels))

print('Clustered'+str(len(df))+'points to'+str(num_clusters) + 'clusters')

print('Silhouette score:',

silhouette_score(features, labels=cluster_labels))

Note that epsilon (for GPS data) is the distance (100 m - the average distance between GPS points) weighted by the Earth’s radius. This model is performed with high accuracy and defines a wide range of clusters. Let’s demonstrate the clusters’ distribution with scatter plot and TSNE decomposition. The code for the next graphics matches the previous ones for the K-means model, so we won’t show it.

We can see that the data is distributed more locally.

Next, let’s see how the cluster centers match the GPS data. Another thing we want to show is the overlapping of cluster centers on the map.

Analysis of the DBSCAN model and the charts show that the accuracy of clustering is high and correlates with the real GPS data distribution. Next, on the basis of the result clusters, we’ll perform GPS data analysis.

Analyzing the GPS data with K-means clusters

The first step is to determine the value of the resulting cluster to the GPS data.

df_list = []

for ind, cluster in enumerate(clusters):

df_cluster = df[df['longitude'].isin(cluster.T[1]) &

df['latitude'].isin(cluster.T[0])]

df_cluster['cluster'] = ind

df_list.append(df_cluster)

df = pd.concat(df_list)

df.head(5)

Let’s estimate the time spent in each cluster and plot the corresponding diagrams.

# time estimation

time_in_cluster = []

for cluster in df.cluster.unique():

time_in_cluster.append(df[df['cluster'] == cluster]['date_time']

.apply(lambda x: x.hour))

# plot graphics

fig, axs = plt.subplots(2, 2)

fig.suptitle('Time in Clusters by GPS data')

axs[0, 0].hist(time_in_cluster[0])

axs[0, 1].hist(time_in_cluster[1])

axs[1, 0].hist(time_in_cluster[2])

axs[1, 1].hist(time_in_cluster[3])

for ax in axs.flat:

ax.set(xlabel='Day Hour', ylabel='Time in Cluster')

for ax in fig.get_axes():

ax.label_outer()

plt.show()

We see that the time allocation for each cluster is different. This allows the prediction of traffic volume in a specific cluster. The next step is to merge the clustered GPS data with GPS tracks. In order to do this, we should define ‘track_id’ for each cluster.

# track ID by clusters

cluster_id = [df[df['cluster'] == cluster]['track_id'].unique()

for cluster in df.cluster.unique()]

print('Tracks ID for first cluster: ', cluster_id[0])

Then, we’ll merge clustered GPS data with GPS tracks by the ‘track_id’ parameter.

df_list = [] for ind, cluster in enumerate(cluster_id): df_cluster = df_tracks[df_tracks['id'].isin(cluster)] df_cluster['cluster'] = ind df_list.append(df_cluster) df_track_cluster = pd.concat(df_list) df_track_cluster.head(5)

So, we can analyze the traffic speed in different clusters.

# speed by clusters

speed_cluster = []

for cluster in df.cluster.unique():

speed_cluster.append(df_track_cluster[df_track_cluster['cluster'] ==

cluster]['speed'])

fig, axs = plt.subplots(2, 2,)

fig.suptitle('Speed in Clusters by GPS data')

axs[0, 0].hist(speed_in_cluster[0])

axs[0, 1].hist(speed_in_cluster[1])

axs[1, 0].hist(speed_in_cluster[2])

axs[1, 1].hist(speed_in_cluster[3])

for ax in axs.flat:

ax.set(xlabel='Speed', ylabel='')

for ax in fig.get_axes():

ax.label_outer()

plt.show()

By analyzing the diagrams, we can find patterns of traffic speed distribution in different clusters.

Conclusion

In this tutorial, we performed the clustering of GPS data using Amazon Web Services, Dremio, and Python. We stored data in AWS and connected it to the Dremio platform. We then executed data operations in the Dremio platform, connected Dremio to the Jupyter Notebook, and performed GPS data clustering and analysis with ML models.

Sign up for AI Ready Data content