32 minute read · April 18, 2019

Creating a Classification ML model using data stored in ADLS

· Dremio Team

Intro

Azure Data Lake Store (ADLS) is one of the several offerings of Microsoft’s Azure cloud platform. Its primary function is to store the enterprise data, mainly big data. It is a highly scalable and secure system. Besides data storing, the Azure Data Lake has other interesting features. Some of them include:

- Convenient integration with various 3rd party services

- Data analytics with the Azure Data Lake Analytics service

- Simple big data applications development, refactoring, and optimization

- HDInsight service for Apache Spark and Hadoop.

In this tutorial, we will show you how to build a machine learning classifier using the connection to Azure Data Lake Store (ADLS) through Dremio. In other words, we will load data into Azure Data Lake, use Scikit-learn Python package to build the model. Part of data preprocessing will be also done with the help of the Dremio.

Assumptions

We assume that you have Dremio and ODBC driver installed; if not, go to Dremio’s deployments page, pick the correct deployment option (including local), and visit Dremio Docs to read about installations and deployments. You should also have an Azure Account. We will use Python 3.6, and scikit-learn, Pandas, NumPy, and Matplotlib packages.

Preparation of Azure Data Lake

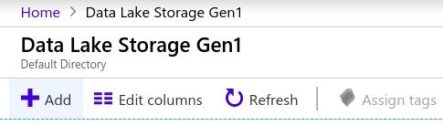

Go to the Azure Portal in your web browser and log in. After that, you will see the menu with different Azure services on the left. Click All services, then pick Storage and choose Data Lake Storage Gen1.

This will lead you to the default directory of Azure Data Lake Storage. Now, create a new instance by clicking Add in the top line menu:

You should specify the name of the new instance, pick subscription, resource group, location, pricing package, and encryption. For this tutorial, we will name our instance “bankfull”. We have also created a “datalake1” resource group for demonstration purposes.

After you click Create, Azure Data Lake Storage should be launched. It will be displayed in the default folder:

Now we can start uploading data into ADLS.

Uploading data into Azure Data Lake Store

In order to build a machine learning classification model, we will use the Bank Marketing Dataset. This is the data about the marketing campaigns of a Portuguese bank. The marketing campaigns were based on direct phone calls to potential clients. The dataset contains many independent variables, which represent information about a particular client, history of communication with prospects, marketing campaign, some macroeconomic indicators at the time of the contact. For example, there are such features as job, age, marital status of the contacted person, month and day of the week when the phone call was made, employment variation rate, consumer price index, etc. The target variable we want to predict is binary (includes only 2 values - “yes” or ”no”). It shows whether the person subscribed as a result of the call.

So, we have our dataset in the CSV format. The file is called bank-additional-full.csv. Once you download it to your local machine you are ready to upload it to Azure Data Lake Storage using Azure web portal.

First, click on the name of the instance (bankfull) in the default Data Lake Storage folder. Then, click on the Data Explorer button:

Then, click Upload.

Select the needed file from your local machine, confirm uploading and wait for completion. After the successful load, you should see your file in the data explorer window:

Connecting to ADLS from Dremio

Open Dremio in your web browser (the default link is http://localhost:9047), log in, choose to add a new data source. In the opened window find and select Azure Data Lake Store:

Fill in some parameters required by Dremio to connect to Azure Data Lake:

First, specify Name. It doesn’t have to correspond with the names you created in Azure.

The next block of parameters is authentication. Start from the simplest - Data Lake Store Resource Name. This is the name of the instance you created in Azure Data Lake. In our case, this name was “bankfull”.

The next is the Application ID. To get the app ID, you should create an application first. So, in the left-side menu of Azure Portal, go to Azure Active Directory, then choose App registrations and click on the New application registration button:

Fill in the form by specifying the name of the application, type, and website (URL is not very important here):

Wait until the creation of the app will be completed. Then, you will be able to see the application details, including the Application ID.

The next parameter you need is the OAuth 2.0 Token Endpoint. You can find it in the Endpoints tab of the App registrations section:

The last thing to specify is the Access key value. Go to the created application details. Click Settings, then choose Keys. In the opened window add the description for the key, pick the duration and save it. This will generate the key. Save it somewhere, because the next time you will go to this section, the key will not be displayed. This is the value that you need to enter in the corresponding field in the source creation form in Dremio.

This is all the information you need to provide to Dremio for creation the connection with Azure Data Lake Store. However, you have to change some access settings directly in Azure in order to give Dremio permissions to communicate with Azure Data Lake.

Go to your Azure Data Lake instance. Click on the Access control (IAM) tab. Then click Add → Add role assignment. In the opened window set Role as Owner and find the application that you created earlier (in our case, it is called dremio). Click Save.

Now, go to Data explorer of the Azure Data Lake instance you want to connect Dremio to (in our case, it is “bankfull” instance) and click on the Access button:

Click Add and find the created app (in our case, it is called dremio) in the Select user or group section:

Make sure that you enabled Read, Write, and Execute permissions for your application.

Finally, you can go back to the Dremio page and click the Save button in order to connect to the data source. Wait until the connection will be completed.

You can make sure that everything works fine by accessing your dataset in the Dremio window. Our CSV file had the “;” signs as delimiters. So, change the delimiter in the corresponding field and save the changes to make the dataset displayed correctly:

The last thing that is recommended to do is to add a connection property called fs.adl.impl.disable.cache to the data source settings and set its value to false. Open the Edit Source window:

Then, go to the Advanced Settings → Connection Properties section and add a new property. Save changes and restart your Dremio cluster:

Data curation in Dremio

Machine learning models use clean data, but real data is usually far from clean. We should preprocess it in order to prepare for use in the training process. We are going to split the data preparation into two steps. On the first step, we will use Dremio to curate our data. On the second step, we will send the data from Dremio to Python and perform some final preparations there.

First, we should create a space in Dremio. The reason why we need a space is that when modifying a dataset, Dremio doesn’t do it in-place. Instead, it uses the concept of virtual datasets. A space is an area where the manipulations with virtual datasets take place. For this tutorial, we want to create a space called “azuredl_sklearn_space”. The spaces are created from the Dremio main page.

After that, click on the source in the list of sources and then click on the name of the dataset (bank-additional-full.csv). We can see, that the names of the columns aren’t displayed correctly and we want to use the first line of the dataset as the column names. So, we go to the dataset settings and check the point Extract Field Names in the checkbox:

Next, we want to delete the “duration” column from our dataset. The reason is that the usage of this column while training can disrupt the results. This variable can be known only after the phone call will be finished. So, for prediction purposes it is senseless. In addition, it highly correlates with the target variable, and just by using this feature we can achieve good results.

Find the column with this feature and select Drop in the drop-down menu:

Also, we will rename our target column. It is called “y” and we want to call it “target”. To do this, you should pick _Rename _ from the drop-down menu and specify the new name:

Changing data types is a very common task in data preprocessing. Dremio can help you to deal with this easily. Choose the column, click on the symbols that show the current data type and pick the type you want to convert to. In our case, we pick “Integer”:

Specify several parameters for type conversion and click the Apply button:

You should convert all integer values to an integer type and all float values to float type.

Note that each step you perform is displayed as a point from the right side of your window. If you hover on a point, the pop-up window will say what this particular step was:

Another important note is that Dremio generates SQL statement for performing all actions that you did:

We have also renamed all column names that had a dot in the name as it may cause an error when importing the data into Python.

To save all performed changes click Save as and choose the previously created space:

Now, after some basic data curation, we can load the data from Dremio into Python for further machine learning model creation.

Use scikit-learn to build a machine learning classifier with data from Dremio

To use data from Dremio in Python, you should install the ODBC driver and perform several preparation steps.

Go to the Download page and get ODBC driver for your operating system. In this tutorial, we use Ubuntu 16.04, so the installation instructions below is true only for this system.

After you have downloaded the driver, you should convert it from rpm format to deb. To do this, you need to install and use the alien tool:

sudo apt-get install alien sudo alien dremio-odbc-1.3.22.1055-1.x86_64.rpm

Next, install the unixodbc tool, which is the prerequisite to use odbc drivers:

sudo apt-get install unixodbc-dev

Also, install python package for working with odbc:

pip install pyodbc

Finally, install the Dremio ODBC driver:

sudo dpkg -i dremio-odbc_1.3.22.1055-2_amd64.deb

Now, we can start working directly with Python. Let’s import the libraries we will need:

import pandas as pd import numpy as np import itertools import pyodbc from sklearn.utils import resample from sklearn.metrics import accuracy_score from sklearn.metrics import f1_score from sklearn.metrics import confusion_matrix from sklearn.model_selection import train_test_split from sklearn.ensemble import RandomForestClassifier import matplotlib.pyplot as plt %matplotlib inline

Use pyodbc package to connect to Dremio:

host='localhost'

port=31010

uid ='username'

pwd = 'password'

driver = '/opt/dremio-odbc/lib64/libdrillodbc_sb64.so'

cnxn = pyodbc.connect("Driver={};ConnectionType=Direct;HOST={};PORT={};AuthenticationType=Plain;UID={};PWD={}".format(driver,host,port,uid,pwd),autocommit=True)

Use sql to fetch the dataset:

sql = "SELECT * FROM azuredl_sklearn_space.bankfull"

df = pd.read_sql(sql,cnxn)Now, we can start to work on the machine learning classifier creation directly in Python.

If we check the shape of the dataframe, we will see that it has 41188 rows and 21 columns. Our target variable (“target”) has values “yes” and “no”. “Yes” means that the contact was successful and the client subscribed the term deposit in the bank. “No” means that the client refused to subscribe to a deposit. We need to convert these values into numbers. So, the code below will replace “yes” with “1” and “no” with “0”.

def f(row):

if row['target'] == 'no':

val = 0

elif row['target']== 'yes':

val = 1

return val

df['target'] = df.apply(f, axis=1)It is useful to perform exploratory data analysis (EDA) before the machine learning model creation. Basic EDA can include, for example, getting information about the number and type of the variables in the dataset, target value distribution, as well as some numeric values statistics (like min and max values, standard deviation, average, etc.).

Below, you can see images where we use simple pandas methods to get some information about our dataset.

Note, that describe() method works only with numeric columns:

To get similar information about the categorical variables, we need to set the parameter include to [‘O’] value:

We know that our task is a binary classification: we have just two possible outcomes, “1” or “0”. But what about the distribution of the target variable? Below, you can find a code which generates the Matplotlib histogram of the target variable distribution.

x = df['target']

bins = np.arange(0, x.max() + 1.5) - 0.5

fig, ax = plt.subplots()

N, bins, patches = ax.hist(x, bins, edgecolor='white')

ax.set_xticks(bins + 0.5)

patches[0].set_facecolor('b')

patches[1].set_facecolor('y')

As we can see, the dataset is highly unbalanced. There are 7 times more instances of one class than another. We will try to use this insight for improving our model later.

Now, we have to convert to numbers not only the target variable but also other variables. The method we will use the one-hot encoding method. Each unique categorical variable in the column will be replaced with the separate column. This column has value “1” for the examples when that categorical value was present and “0” when wasn’t. There is a more detailed explanation of the technique here.

The problem with this method is that it explodes the number of columns. For example, if we have the column where there are 5 unique categorical values, after performing one-hot encoding we will have 5 different columns each responsible for its own unique value representation. That’s why we want to avoid using this approach where possible. In our case, we don’t have to use one-hot encoding for the “contact” column, because it has only 2 unique values and we can simply replace them with “1” and “0”. For other variables, we will use Pandas method “get_dummies”. You can see the code below.

df['contact_new'] = df.apply(f, axis=1)

df = df.drop(['contact'], axis=1)

df = df.rename(columns={'contact_new': 'contact'})

df_ohe = pd.get_dummies(df, columns=['job', 'marital', 'education', 'default', 'housing', 'loan', 'month', 'day_of_week', 'poutcome', ] , prefix='dummy')We had 20 columns before performing one-hot encoding and 62 columns after! Here is how it looks:

Nevertheless, now we can go straight to machine learning models creation.

The following code shows how to split the data into the set of the independent variables and the target variable and then into the train and test.

X = df_ohe.drop(['target'], axis=1) y = df_ohe['target'] X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.33, random_state=42)

Now, the random forest model will be used for training:

rf = RandomForestClassifier(n_estimators=100, random_state=42) rf.fit(X_train, y_train) predictions = rf.predict(X_test)

Next, we should measure the quality of the trained model. We will use an accuracy score and F1-score. Accuracy is probably the simplest metric used in the classification tasks, though it has several downsides. First of all, accuracy shows the share of the correct predictions among all predictions. This is a good metric if the classes in the dataset are balanced. If they are unbalanced, the accuracy could be distorted. Say, we have a model which gives prediction “0” in all cases. We want to test it on the dataset where there are 950 examples with “0” answer and 50 examples with “1” answer. In this case, our model will have very high accuracy - 95%. But this model is useless because it is not able to predict “1” label, which may be very important depending on the particular task. F1-score is a metric which combines precision and recall. Precision shows how many selected examples are true positives. Recall shows how many true items the model selects.

Our dataset is highly unbalanced. So, we want to test our model using both accuracy measure and f1_score. You can see on the image below, that accuracy is pretty good - around 89%. But the F1-score is low - only around 38%.

There is another method to understand the performance of the trained model. It is called the confusion matrix. Below, you can see the code we used to generate the confusion matrix.

def plot_confusion_matrix(cm, classes, normalize=False, title='Confusion matrix', cmap=plt.cm.Blues):

"""

This function prints and plots the confusion matrix.

Normalization can be applied by setting `normalize=True`.

"""

if normalize:

cm = cm.astype('float') / cm.sum(axis=1)[:, np.newaxis]

print("Normalized confusion matrix")

else:

print('Confusion matrix, without normalization')

print(cm)

plt.imshow(cm, interpolation='nearest', cmap=cmap)

plt.title(title)

plt.colorbar()

tick_marks = np.arange(len(classes))

plt.xticks(tick_marks, classes, rotation=45)

plt.yticks(tick_marks, classes)

fmt = '.2f' if normalize else 'd'

thresh = cm.max() / 2.

for i, j in itertools.product(range(cm.shape[0]), range(cm.shape[1])):

plt.text(j, i, format(cm[i, j], fmt),

horizontalalignment="center",

color="white" if cm[i, j] > thresh else "black")

plt.tight_layout()

plt.ylabel('True label')

plt.xlabel('Predicted label')

cnf_matrix = confusion_matrix(y_test, predictions)

plt.figure()

plot_confusion_matrix(cnf_matrix, classes=[0,1], title='Without normalization')

plt.figure()

plot_confusion_matrix(cnf_matrix, normalize=True, classes=[0,1], title='With normalization')This code generates two graphs which you can find below. The first graph is the confusion matrix without the normalization while the second graph is the confusion matrix with normalization. We can see that our model classifies as “0” too many examples of the “1” class. This is due to the highly unbalanced dataset. It is more profitable for the model to make fewer mistakes on the major class than on the minor because in this case, the cumulative error will be smaller. In our case, this led to the situation, where there are 71% misclassified examples of the “1” class.

In order to improve the model, we need to perform dataset balancing. There are several methods and some Python specialized libraries for accomplishing this task, but we will use the simple sklearn’s resample() function. The method we want to use is called oversampling. It states that we should duplicate the examples of the minor class by the needed number of times to get the approximately equal proportion between classes. Find the code we used below:

df_majority = df[df.target==0] df_minority = df[df.target==1] df_minority_oversampled = resample(df_minority, replace=True, n_samples=20000, random_state=42) df_oversampled = pd.concat([df_majority, df_minority_oversampled]) df_oversampled.target.value_counts()

After executing this code we have 36548 examples of the class “0” and 20000 examples of the class “1”. The new histogram reflecting the distribution of the target variable:

The dataset is still unbalanced, but we duplicated the minority class by approximately the factor 4, and we hope that this should be enough to improve the model quality.

Now we can retrain the random forest model. One-hot encoding and train/test splitting are pretty same as before balancing, except they are applied to the df_oversampled dataframe. All model parameters are also the same as previously. However, the accuracy and F1-score are different:

F1-score increased dramatically. Let’s look at the confusion matrices now:

It is obvious that the model’s quality is substantially better this time. It is able to predict both classes correctly.

Conclusion

In this tutorial, we used Dremio to connect to a dataset stored in Azure Data Lake Store. After performing basic data curation directly in Dremio, we accessed the data from Python and built a classification machine learning model. With the help of the Scikit-learn and Pandas Python packages, we demonstrated how to prepare training data, build a simple model, perform class balancing, improve the model and estimate the results.

Some of the next things you can try to improve the model are to perform some feature engineering, other models utilization, data normalization and scaling, etc.

We hope you enjoyed this tutorial, checkout the rest of our tutorials and resources page to learn more about how you can gaining insights from your Azure data, faster, using Dremio.

Sign up for AI Ready Data content