Introduction

Apache Hive is a modern and convenient instrument built on top of Apache Hadoop. It is used for processing large amounts of data, stored in a distributed file system, using SQL. Sometimes there are situations when we may need to get data from sources like Hive and perform analysis with the help of different tools like Python or Spark. In such cases, integration problems may show up. However, one of the great advantages of Dremio is that it gives us the ability to integrate different data sources and instruments for analysis in a simple way.

In this tutorial, we will walk through the steps of using Dremio to connect to data that has been stored in Hive. The data will be read by Dremio’s SQL engine based on Apache Arrow. Then, we will perform a data curation process prior to analyzing the dataset using Python inside a Jupyter notebook.

Assumptions

This tutorial has been developed on Ubuntu and assumes that you have covered the “Getting Oriented on Dremio” tutorial and you have the following requirements:

- Python

- Pyodbc

- Apache Hadoop

- Apache Hive

- Dremio ODBC Driver.

Loading data into Hive

For this tutorial we will work with the Metropolitan Transportation Authority Turnstile Usage Overview dataset. It consists of ‘entries’ and ‘exits’ audit data which are generated from Control Areas in the city of New York. Originally this data will be stored in Hive, our goal is to connect to it from Dremio, perform data curation, and then make visualizations in Python in the form of two plots. These plots will be the time dependences of the entries and exits for the defined day, station, and scp (Subunit/Channel/position which represents a specific address for a given device).

To follow this tutorial, you should download the above-mentioned dataset (‘turnstile-usage-data-2018.csv’) and save it to HIVE_HOME directory. Then, navigate to your HIVE_HOME directory and run the Hive console with the following command:

sudo ./hive

Now, we will create a new “input_data” table with predefined column names by typing in the Hive console:

CREATE TABLE

input_data

('C/A' string, 'Unit' string, 'SCP' string, 'Station' string,

'Line_Name' string,'Division' string, 'Date' string, 'Time' string,

'Description' string, 'Entries' int, 'Exits' int)

row format delimited fields terminated by ',';After that, you can load the data:

LOAD DATA LOCAL INPATH 'turnstile-usage-data-2018.csv' INTO TABLE input_data;

To double check that the data has been loaded correctly, you can perform the following query:

SELECT * FROM input_data LIMIT 5;

The results should look like the following:

Now, we have our dataset stored in Hive, at this point we can connect to Dremio and perform data curation as needed.

Connecting to data in Dremio

To make a connection between Hive and Dremio, you should first run the Hive metastore service. You can do this by running the following command in the main console:

sudo ./hive --service metastore

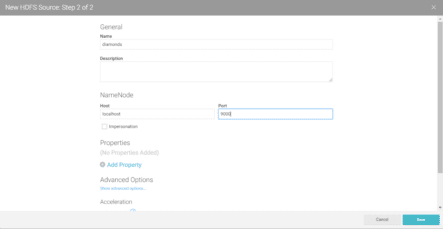

Then, go to the Dremio UI at http://localhost:9047. After login, we will need to add a new data source. To do this, press “+” button near Sources menu.

Choose ‘Hive’ from the list and enter “hive_test” (as the name), “127.0.0.1” (as a Hive Metastore host), and “9083” (default port). And then click “Save”.

After that, you will be able to see “hive_test” source in the sources list.

Now, we can open this source by clicking on it and perform data curation as needed.

Data curation in Dremio

First, we will change the datatypes of several columns, such as “date” and “time”. To change the “date” column type, click on the icon near the column name and choose the necessary data type, “Date” in our case.

Then, choose the options suggested on the image below. You will see the preview of the conversion in your dataset as an additional column. Once ready, click “Apply”.

A similar operation will be performed with the “time” column.

Note that when you perform these data curation operations, Dremio won’t change the integrity or structure of the physical dataset. Instead, it will create a Virtual dataset (VDS), and apply all the changes to it. Now, let’s save our virtual dataset. Before this, we have to create a new “Turnstiles” working space (for more information about spaces, see Getting Oriented to Dremio). To save the Virtual dataset click the “Save As” button at the top right corner.

Enter the Name of the dataset (“turnstile_usage”), choose the space (“Turnstiles”), and click “Save”.

Now, you can find this dataset under the Turnstiles space.

To make visualizations, we should obtain necessary data from our dataset. For this purpose, we need to extract rows with the defined ‘date’, ‘scp’, and ‘station’. We can extract this data by executing the following SQL query:

SELECT "date", "time", entries, exits FROM Turnstiles.turnstile_usage WHERE "date" = '2018-04-21' AND scp = '04-03-03' AND station = 'TIMES SQ-42 ST'

As a result, you will see the table below. Let’s save it as a separate VDS called “usage_by_time”.

Data analysis and visualization using Python

To connect to Dremio from Python, we will need to download the Dremio ODBC Driver for your operating system from the Drivers page:

We will download the ODBC Driver for Linux and install it according to the instructions.If you use a Debian-based Linux distribution you should previously convert the downloaded package from .rpm to .deb format with the help of Alien:

sudo apt-get install alien sudo alien dremio-odbc-1.3.19.1052-1.x86_64.rpm

Before installing the ODBC Driver, we should install the additional package:

sudo apt-get install unixodbc-dev

Finally, install the ODBC Driver:

sudo apt-get dremio-odbc_1.3.19.1052-2_amd64.deb

Also, we have to install the ‘pyodbc’ package with the following command:

pip install pyodbc

Now, let’s begin to write our Python script. Let’s start by importing all necessary libraries:

import pyodbc import numpy import pandas

To connect to Dremio from Python, we need to configure connection parameters (it is possible that the host and driver vary depending on your environment):

host = "localhost" port = 31010 uid = 'username' pwd = 'password' driver = "/home/base/dremio-odbc-1.3.19.1052/debian/dremio-odbc/opt/dremio-odbc/lib64/libdrillodbc_sb64.so"

Initialize connector:

cnxn = pyodbc.connect("Driver={};ConnectionType=Direct;HOST={};PORT={};AuthenticationType=Plain;UID={};PWD={}".format(driver, host,port,uid,pwd),autocommit=True)Now we are ready to fetch data from Dremio:

sql = "SELECT * FROM Turnstiles.usage_by_time" dataframe = pandas.read_sql(sql,cnxn)

To see the obtained dataframe, add the following line:

dataframe.head(6)

The output should look like below:

date time entries exits _____________________________________________________ 0 2018-04-21 00:00:00 4879070 2645139 1 2018-04-21 04:00:00 4879282 2645193 2 2018-04-21 08:00:00 4879324 2645306 3 2018-04-21 12:00:00 4879639 2645601 4 2018-04-21 16:00:00 4880086 2646104 5 2018-04-21 20:00:00 4880838 2646555

To visualize this data, you should first import the library for visualization:

import matplotlib.pyplot as plt

Now, let’s define axes and plots parameters:

x_axis = dataframe.time y1_axis = dataframe.entries y2_axis = dataframe.exits

Make plot with 2 subplots

plt.subplot(1, 2, 1)

plt.plot(x_axis, y1_axis, 'bo-')

plt.ylabel('Entries')

plt.xlabel('Time')

plt.grid(color = '#D3D3D3', linestyle = 'solid')

plt.xticks(rotation = 70)

plt.subplot(1, 2, 2)

plt.plot(x_axis, y1_axis, 'go-')

plt.ylabel('Exits')

plt.xlabel('Time')

plt.grid(color = '#D3D3D3', linestyle = 'solid')

plt.xticks(rotation = 70)

plt.subplots_adjust(left = 0.1, right = 2, bottom = 0.1, top = 1, wspace = 0.3)

plt.show()And this is the result we got:

As you can see from the figures above, both the number of entries and exits increase towards the evening.

Summary

In this tutorial we showed that the integration of Hive with Python using Dremio is rather simple and convenient process. Also, it gives us the possibility to transform, analyze, and visualize data effectively with the help of user-friendly interface. We hope that you enjoyed and found this tutorial useful. Stay tuned to learn more about how Dremio can help you get more value from your data, faster.

Sign up for AI Ready Data content