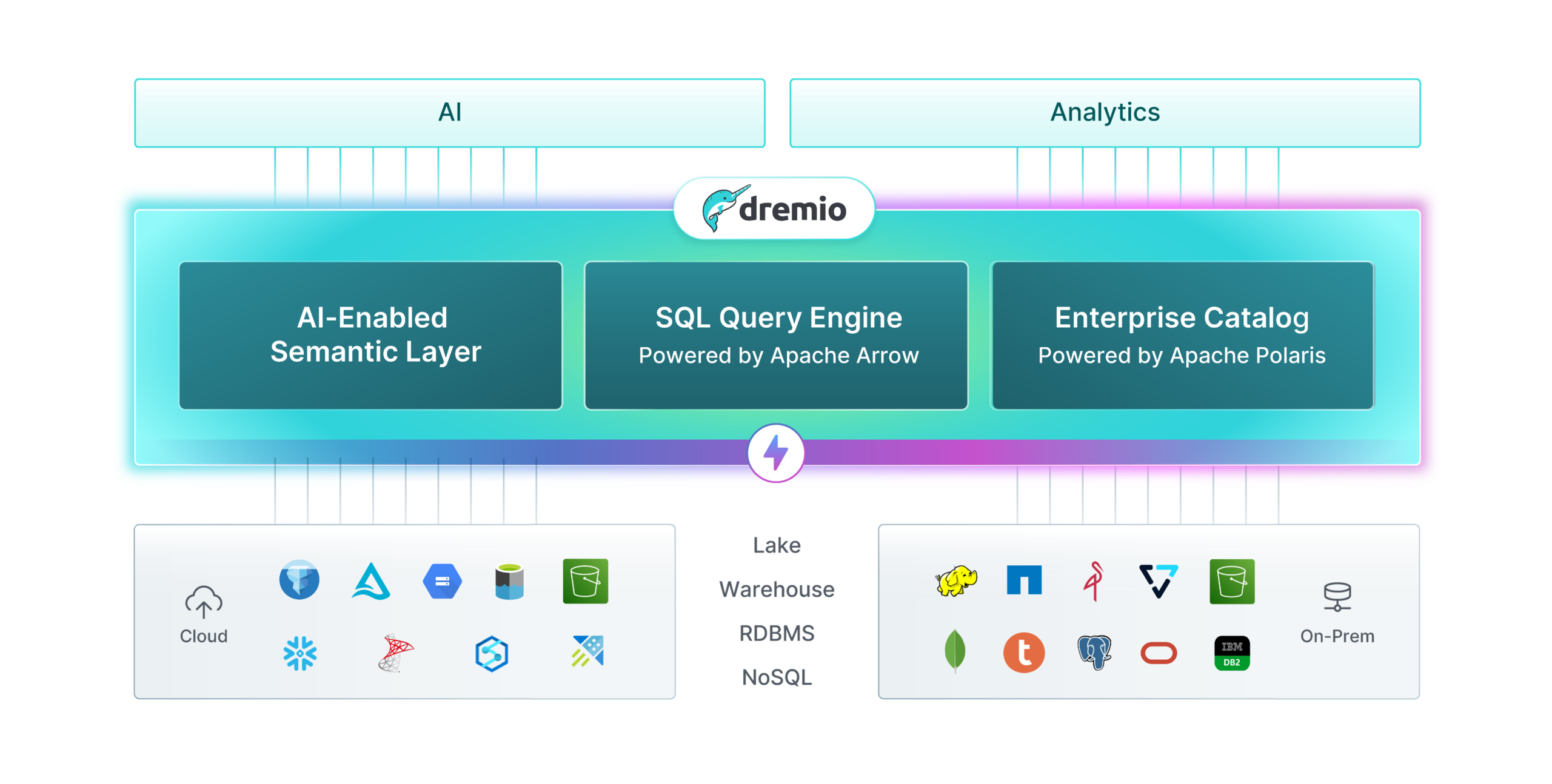

The Intelligent Lakehouse Platform

Accelerate AI and analytics with AI-ready data products - driven by unified data and autonomous performance.

Dremio is the only lakehouse built natively on Apache Iceberg, Polaris, and Arrow - providing flexibility, preventing lock-in, and enabling community-driven innovation.

SEMANTIC LAYER

Easily Find and Understand Data

Dremio's semantic layer is a business-friendly representation of data sources that translates technical structures into business terms and concepts, creating a unified view with consistent definitions, metrics, and business logic—all accessible through a single access point without data movement.

- Organize data through spaces, folders, and layered views

- Find data assets using AI-Enabled Semantic Search with plain language

- Enable rich data discovery with tags, wiki content, and lineage tracking

- Implement role-based access control for appropriate visibility
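As a rough illustration of what "layered views" means, here is a minimal sketch that uses Python's built-in SQLite as a stand-in engine. It is not Dremio's API or syntax; the table, view, and column names (`raw_orders`, `orders_clean`, `revenue_by_customer`) are hypothetical. The first view translates technical column names into business terms, and the second defines a shared metric on top of it, with no data copied.

```python
import sqlite3

# In-memory SQLite stands in for a query engine; all names are hypothetical.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE raw_orders (ord_id INTEGER, cust_cd TEXT, amt_cents INTEGER, sts TEXT);
    INSERT INTO raw_orders VALUES
        (1, 'C1', 1250, 'OK'),
        (2, 'C1',  800, 'CANCELLED'),
        (3, 'C2', 4000, 'OK');

    -- Layer 1: rename technical columns into business terms.
    CREATE VIEW orders_clean AS
        SELECT ord_id            AS order_id,
               cust_cd           AS customer,
               amt_cents / 100.0 AS amount,
               sts               AS status
        FROM raw_orders;

    -- Layer 2: one shared metric definition built on the cleaned view.
    CREATE VIEW revenue_by_customer AS
        SELECT customer, SUM(amount) AS revenue
        FROM orders_clean
        WHERE status = 'OK'
        GROUP BY customer;
""")

# Consumers query the business-level view, not the raw table.
revenue = dict(conn.execute("SELECT customer, revenue FROM revenue_by_customer"))
print(revenue)
```

Because both layers are views, a change to the metric definition is made in one place and every downstream query picks it up.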

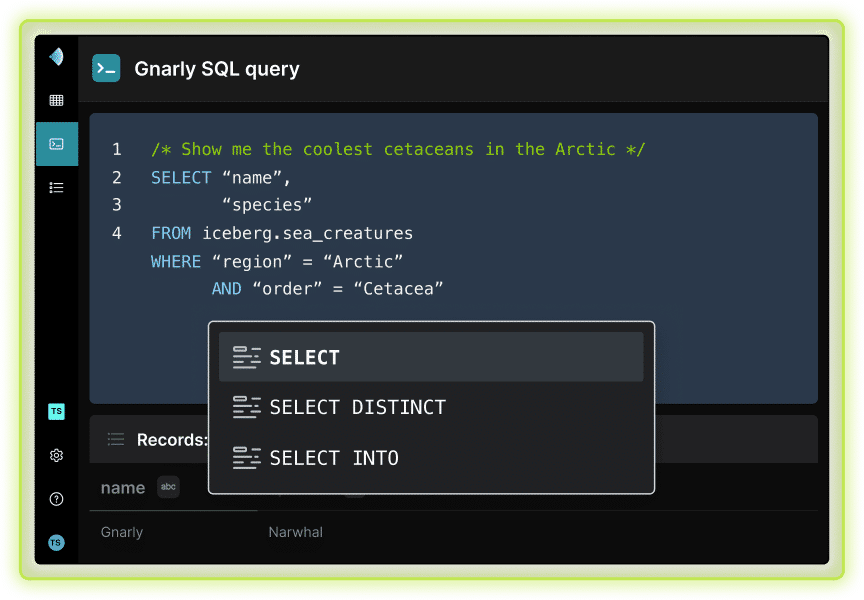

SQL QUERY ENGINE

High-performance analytics with built-in intelligence

Dremio's SQL Query Engine delivers lightning-fast performance through Apache Arrow-based columnar processing, vectorized computation, cost-based optimization, and a massively parallel architecture, enabling analytical insights at scale while reducing total cost of ownership (TCO).

- Autonomous Reflections automatically optimizes performance while ensuring queries run on live, up-to-date data

- SQL Engine built on Apache Arrow leverages in-memory columnar data structures for high-speed analytics

- Text-to-SQL enables non-technical users to generate insights without knowing SQL

- Columnar Cloud Cache (C3) speeds queries by caching frequently used data on local SSDs

- AI-Enabled Wiki Generation automatically creates clear documentation for datasets and columns
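To make the idea of in-memory columnar data concrete, the sketch below contrasts a row-oriented layout with a column-oriented one using only the Python standard library. It is a simplified illustration, not Apache Arrow or Dremio code, and the sample data and function names are invented for the example. The point is that an aggregate over one field only needs to scan that field's contiguous buffer.

```python
from array import array

# Row-oriented: each record carries every field together.
rows = [("north", 120.0, 3), ("south", 75.5, 1), ("north", 42.25, 2)]

# Column-oriented: one buffer per field; numeric fields are typed and contiguous.
columns = {
    "region": [r[0] for r in rows],
    "amount": array("d", (r[1] for r in rows)),    # 64-bit floats
    "quantity": array("q", (r[2] for r in rows)),  # 64-bit signed integers
}


def total_amount_rowwise(records):
    """Walk every record and pick out the amount field."""
    return sum(record[1] for record in records)


def total_amount_columnar(cols):
    """Scan only the amount buffer; other fields are never touched."""
    return sum(cols["amount"])


print(total_amount_rowwise(rows), total_amount_columnar(columns))
```

Both functions return the same total; the columnar version simply reads less data, which is the property that vectorized engines build on.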

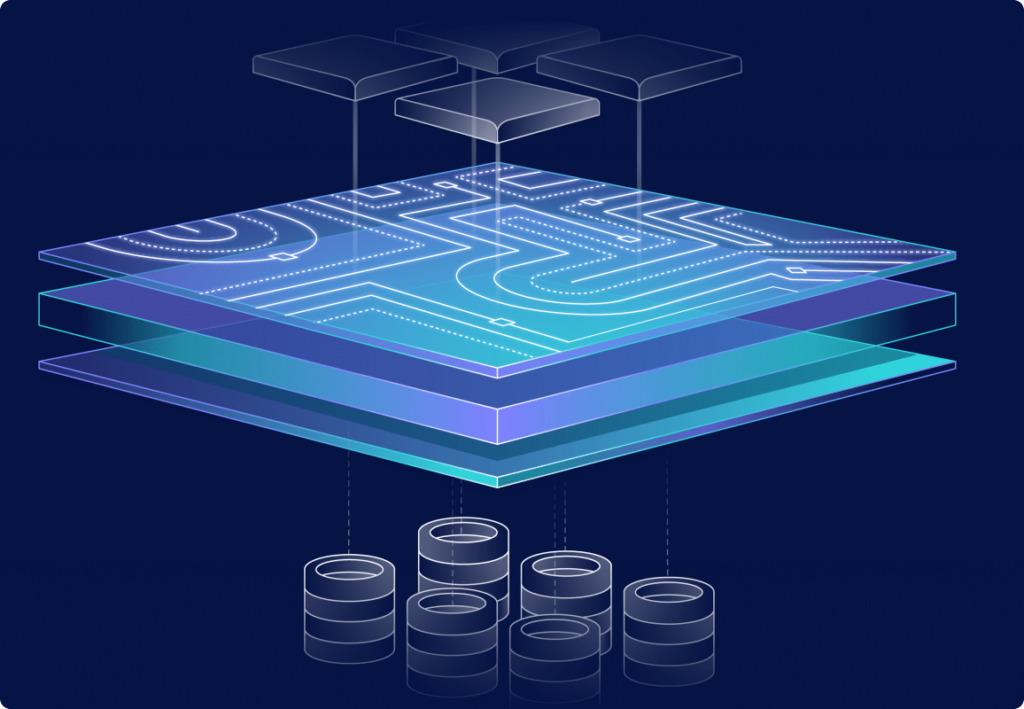

ENTERPRISE CATALOG

One metastore for all your tables

The Enterprise Catalog, powered by Apache Polaris, is an Iceberg-native catalog that provides unified metadata management, fine-grained access controls, and data lineage tracking, enabling a fully governed, high-performance Iceberg lakehouse that is deployable anywhere.

- Iceberg Clustering for intelligent data layout optimization and enhanced query performance

- Automatic Table Optimization for continuous maintenance-free performance tuning

- Fine-Grained Access Control with row and column level security capabilities

- Role-Based Access Control for organization-wide permission management

- Unified Catalog Access to external systems including AWS Glue and Hive Metastore

- Support for Snowflake Open Catalog and Databricks Unity Catalog

- Data lineage tracking for comprehensive governance and auditability
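Row- and column-level security can be pictured as a per-role policy that filters which rows are returned and masks selected columns. The following is a minimal sketch under that assumption; the `Policy` class, role names, and `apply_policy` function are hypothetical and do not represent how the Enterprise Catalog or Apache Polaris implements access control.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Policy:
    """Hypothetical policy: which rows a role may see and which columns are masked."""
    row_filter: Callable[[dict], bool]
    masked_columns: frozenset = frozenset()


# Illustrative roles only.
POLICIES = {
    "analyst_emea": Policy(lambda row: row["region"] == "EMEA", frozenset({"email"})),
    "admin": Policy(lambda row: True),
}


def apply_policy(rows, role):
    """Return the rows visible to the role, with masked columns redacted."""
    policy = POLICIES[role]
    return [
        {k: ("***" if k in policy.masked_columns else v) for k, v in row.items()}
        for row in rows
        if policy.row_filter(row)
    ]


customers = [
    {"region": "EMEA", "email": "a@example.com", "amount": 10},
    {"region": "APAC", "email": "b@example.com", "amount": 20},
]

print(apply_policy(customers, "analyst_emea"))
```

In this sketch the analyst role sees only EMEA rows with the email column redacted, while the admin role sees the data unchanged.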

CONNECTORS & INTEGRATIONS

Frictionless connectors and integrations across all your data, in the cloud and on-premises

BI TOOL PARTNERS

Discover how Dremio seamlessly integrates with leading BI platforms

Dremio and Power BI accelerate self-service business intelligence

Achieving business-led analytics is crucial for enabling data-driven decision-making across the organization. The Dremio one-click integration with Power BI delivers lightning-fast queries, direct data access, and simplified governance that's intuitive and easy to implement, regardless of your data architecture.

Dremio and Tableau transform data visualization capabilities

Achieving deep data insights is crucial for enabling visual analytics on large and complex datasets. The Dremio integration with Tableau delivers one-click access with sub-second dashboard performance, live query capabilities, and seamless data access that's powerful and easy to visualize, regardless of data location.

Dremio and Looker enhance enterprise analytics adoption

Achieving organization-wide data fluency is crucial for enabling a true data culture within businesses. The Dremio connection for Looker delivers consistent data governance, accelerated semantic modeling, and unified metrics that are collaborative and easy to scale, regardless of user technical expertise.

Dremio and Qlik streamline associative data discovery

Achieving comprehensive data exploration is crucial for enabling users to uncover hidden insights and relationships. The Dremio integration with Qlik delivers in-memory performance, interactive visualizations, and associative analytics that's intuitive and easy to navigate, regardless of data complexity or volume.

STORAGE PARTNERS

Discover how storage partners power the Dremio Intelligent Lakehouse Platform

Dremio and NetApp enable enterprise-grade data management

Achieving seamless data integration is crucial for enabling analytics across hybrid environments. The NetApp platform for Dremio, built on NetApp StorageGRID, delivers enterprise-grade scale, consistent performance, and simplified data management, making it efficient and easy to deliver analytics from edge to core to cloud, regardless of workload complexity.

Dremio and Pure Storage deliver high-performance analytics

Achieving sub-second query performance is crucial for enabling real-time analytics on large datasets. The Pure Storage FlashBlade platform for Dremio delivers accelerated insights, reduced infrastructure costs, and simplified data access that's powerful and easy to optimize, regardless of data volume.

Dremio and MinIO provide cloud-native object storage

Achieving high-throughput analytics is crucial for enabling modern data workloads on S3-compatible storage. MinIO for Dremio delivers consistent performance, unlimited scalability, and industry-leading security that's flexible and easy to deploy, regardless of environment.

Dremio and VAST deliver the next-generation Hybrid Iceberg Lakehouse

Achieving scalable performance is crucial for enabling real-time queries for both on-premises and cloud-based data. The VAST Data Platform for Dremio delivers a cost-effective, enterprise-grade, all-flash solution that is robust and easy to manage, regardless of scale.