Experimentation in Machine Learning

Unlike software engineering, which is usually backed by established theoretical concepts, machine learning (ML) takes a slightly different approach when it comes to productionizing a data product (model). Like any new scientific discipline, machine learning leans more toward empirical evidence to determine the success or failure of a project. So what exactly does that mean? It means that machine learning models rely heavily on a series of experiments to achieve promising results. It is also very likely that some experiments will have to be discarded before the model finally generalizes well to unseen data.

Whether or not a model makes it into production, data scientists need to spend considerable time in experimentation. Typically, an experiment involves the process of changing the values of one or more variables and observing the effect on the outcome (e.g., a classification model on a different training set can yield different levels of accuracy). In machine learning experimentation, these variables can be:

- Different types of models

- Different segments of training and test sets

- Various hyperparameters of a model

- Different features

So, giving data scientists and machine learning engineers the flexibility to run various experiments in changing environments is critical. A core part of providing this flexibility is keeping track of all the changing variables and the resulting artifacts (data + model), which becomes extremely difficult as the number of experiments grows. In fact, most machine learning projects without proper tracking mechanisms share a common problem: figuring out which variables (datasets, features, environments) produced the desired accuracy (or any other chosen evaluation metric).
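To make the tracking idea concrete, here is a minimal sketch of an experiment log in plain Python. The `ExperimentRecord` class, its field names, the feature names, and the metric values are all hypothetical and only serve as an illustration; a real project would more likely rely on a dedicated tracking tool.

```python
import hashlib
import json
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class ExperimentRecord:
    """One experiment run: the variables that changed and the resulting metric."""
    model_type: str
    hyperparameters: dict
    features: tuple
    data_ref: str        # hypothetical: a branch or tag name identifying the dataset version
    metric_name: str
    metric_value: float

    def config_id(self) -> str:
        """Short, stable hash of everything except the metric, so reruns can be matched."""
        config = asdict(self)
        config.pop("metric_name")
        config.pop("metric_value")
        payload = json.dumps(config, sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()[:12]


def best_run(records, metric_name):
    """Return the record with the highest value for the given metric."""
    candidates = [r for r in records if r.metric_name == metric_name]
    return max(candidates, key=lambda r: r.metric_value)


# Illustrative values only
runs = [
    ExperimentRecord("random_forest", {"n_estimators": 600}, ("f1", "f2"), "main", "accuracy", 0.92),
    ExperimentRecord("random_forest", {"n_estimators": 600}, ("f1", "f2"), "main", "accuracy", 0.91),
    ExperimentRecord("random_forest", {"n_estimators": 600}, ("f1",), "ML_exp_new", "accuracy", 0.85),
]
winner = best_run(runs, "accuracy")
```

Because `config_id()` ignores the metric, the first two runs share an ID (same model, features, and data reference), while the third gets a different one. That is the kind of link between variables and results that becomes hard to reconstruct once the number of experiments grows.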

Reproducibility in Machine Learning

Another critical aspect of machine learning is building reproducible models, i.e., achieving results similar to those of the original model on specific datasets. Reproducibility is essential in both research and industry-specific projects. One of the main problems with scientific research, and specifically machine learning research, is that it is tough to verify the empirical results and claims made by a specific research paper and to reproduce the experiments carried out in it. Also, for machine learning models to be put into production in an enterprise, it is crucial to be able to replicate the results based on standard evaluation metrics. In general, an ML model’s reproducibility will depend on the dataset and the environment it is trained on.
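One simple way to notice that the training data behind a model has changed is to fingerprint it. The sketch below is only an illustration in plain Python: the `dataset_fingerprint` helper and the sample rows are hypothetical, and it hashes in-memory rows rather than real table files.

```python
import hashlib


def dataset_fingerprint(rows):
    """Order-independent SHA-256 fingerprint of an iterable of rows."""
    row_hashes = sorted(
        hashlib.sha256(repr(tuple(row)).encode("utf-8")).hexdigest() for row in rows
    )
    digest = hashlib.sha256()
    for row_hash in row_hashes:
        digest.update(row_hash.encode("ascii"))
    return digest.hexdigest()


# Hypothetical rows: (customer id, total charge, churned)
october = [("cust-1", 120.5, False), ("cust-2", 80.0, True)]
november = october + [("cust-3", 45.2, False)]

same_data = dataset_fingerprint(october) == dataset_fingerprint(list(reversed(october)))
changed_data = dataset_fingerprint(october) != dataset_fingerprint(november)
```

Storing such a fingerprint next to a model's metrics tells us later whether a rerun saw the same rows. A catalog with data versioning gives us this kind of guarantee without hashing anything ourselves.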

In this blog, we will focus on one of the core elements of a model, the dataset, and support ML experimentation and reproducibility use cases in a data lakehouse architecture using Dremio Arctic. Typically, machine learning-based workloads are run on the data in a data lake. However, a common problem with a data lake is that it gets extremely difficult to keep track of the data files stored in the cloud object stores (such as Amazon S3). This can lead to data quality issues, and identifying the right set of data for training the models can be tricky. Table formats such as Apache Iceberg efficiently deal with this problem by acting as the metadata layer and managing all the datasets in a lakehouse.

What is Dremio Arctic?

Dremio Arctic is a data lakehouse management service that makes it easy to manage all the tables in a data lakehouse. Arctic automatically handles the various data management activities in a lakehouse, such as compacting files, repartitioning, indexing, etc., to abstract these tedious tasks from the data consumers. Most importantly, since it is built on top of the open source Project Nessie, Arctic enables the use of Git-like branching to perform various data-specific tasks in isolation without impacting any production workloads.

Dremio Arctic introduces a new paradigm to manage data as code by facilitating the following:

- Isolation – Data experiments

- Version control – ML models/dashboard reproducibility, mistake recovery

- Governance – Changes to data trackable, fine-grained privileges

In the following sections, we will explore how Dremio Arctic enables data versioning and branch isolation capabilities to support experimentation and reproducibility use cases for machine learning.

Hands-on Exercise Setup

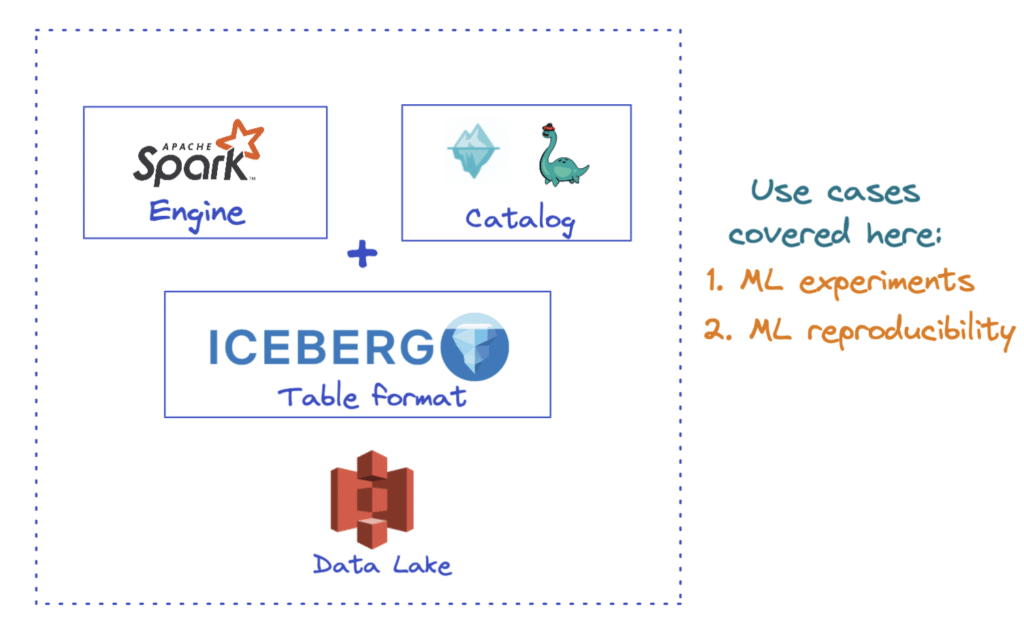

Our setup is illustrated in the image below. The goal is to have all the configurations for this setup in a Jupyter Notebook so we can quickly play around with things and work on the two use cases.

Please note that the notebooks for the below two use cases can be found here.

- Dremio Cloud – The data lakehouse platform

- Catalog – Dremio Arctic

- Query engine for initial load – Dremio Sonar

- Table format – Apache Iceberg

- Processing engine – Spark

- Data lake – Amazon S3

- Authentication – AWS with Bearer token

- Dataset – Telecom churn dataset loaded into a S3 bucket

Step 1: Dremio Cloud Sign-up

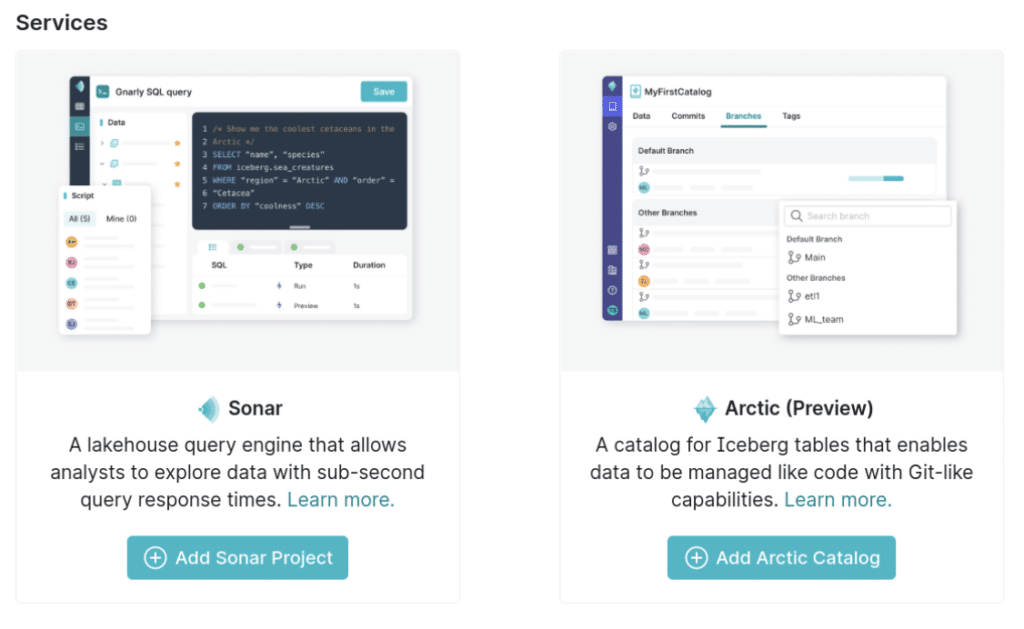

The first step is to sign up for a free Dremio Cloud account to take advantage of the lakehouse platform and leverage Arctic as the catalog. Refer to this guide for detailed instructions on signing up. Once you have the Dremio Cloud account ready, you should be able to create a Sonar project and add an Arctic catalog, as shown below. You can also watch this video to learn how to set up an Arctic catalog.

Step 2: Connecting to the Data Lake

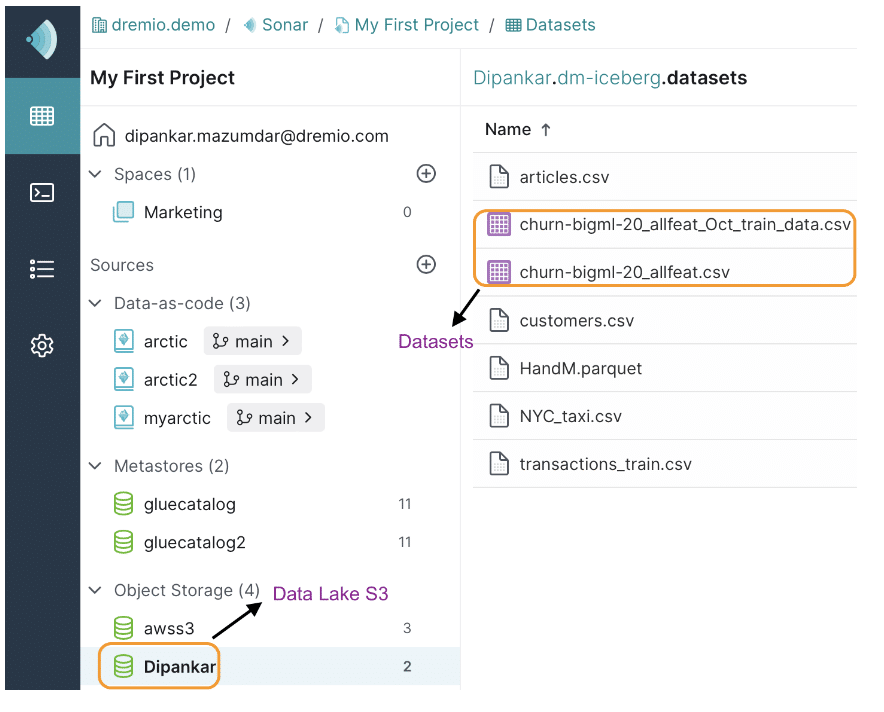

The next step is to connect to the data lake (Amazon S3), access the data, and create Iceberg tables to work on the two use cases. You can use any processing engine to do so. For this case, we will use Dremio’s query engine Sonar, which provides an intuitive UI to create Iceberg tables and run DML operations directly on the data lake.

- Go to the Dremio UI and add a new S3 data source.

- After successfully adding the data source, you should see it under the “Object Storage” section of Dremio.

- The dataset files named churn-bigml-20_allfeat.csv and churn-bigml-20-allfeat_Oct_train_data.csv should be accessible now, as shown below. These are the two datasets that we are going to use for the ML workloads.

The datasets can be downloaded from here.

Now let’s create the two Iceberg tables by clicking on these dataset files and running the following statements:

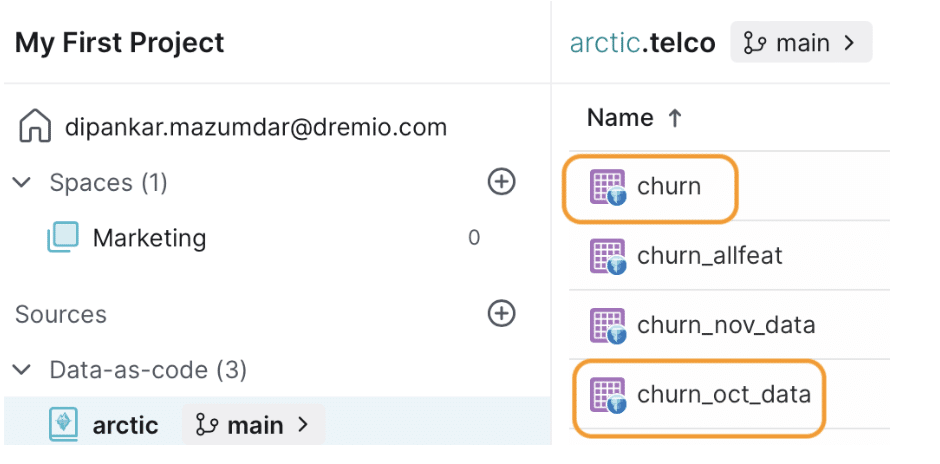

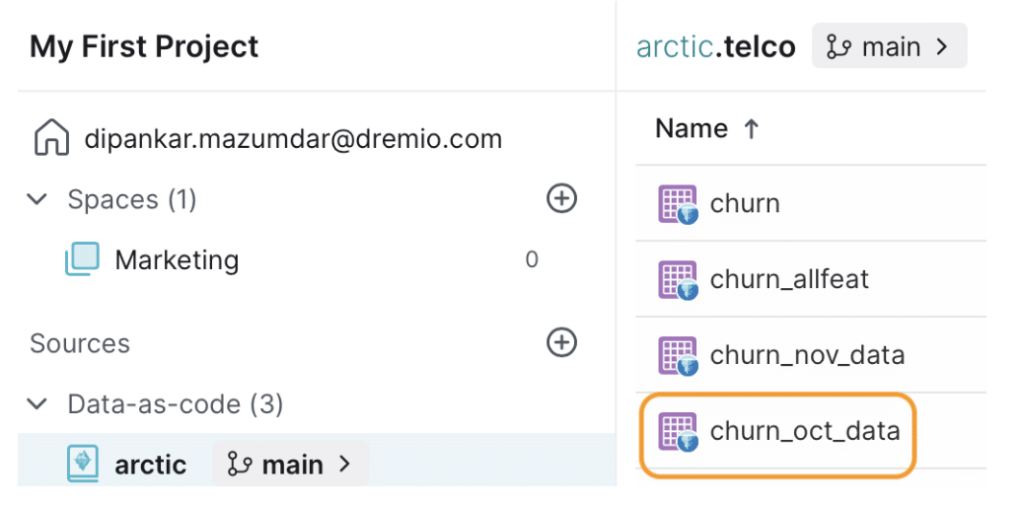

CREATE TABLE arctic.telco.churn AS SELECT * FROM "@username"."churn-bigml-20_allfeat"

CREATE TABLE arctic.telco.churn_oct_data AS SELECT * FROM "@username"."churn-bigml-20_allfeat_Oct_train_data"

This will convert the CSV files into two Iceberg tables in the Arctic catalog named “arctic.” If you go to the “arctic” catalog => main branch, you should now see the two tables under the “telco” folder.

Please note that in a real-world setup, data engineers would typically be responsible for creating Iceberg tables and making a dataset available. However, Dremio’s lakehouse platform provides a self-serve experience for data consumers to do this themselves as well.

Step 3: Jupyter Notebook setup

First, let’s install the two libraries for setting up PySpark as shown below:

pip install pyspark

pip install findspark

Finally, we will define the PySpark + Arctic + Iceberg configuration in our notebook. Here are a few of them:

# we need iceberg spark runtime libraries & nessie spark extensions

conf.set(

"spark.jars.packages",

f"org.apache.iceberg:iceberg-spark-runtime-3.3_2.12:1.0.0,org.projectnessie:nessie-spark-extensions-3.3_2.12:0.44.0,software.amazon.awssdk:bundle:2.17.178,software.amazon.awssdk:url-connection-client:2.17.178"

)

# create catalog named 'arctic' as an iceberg catalog

conf.set("spark.sql.catalog.arctic", "org.apache.iceberg.spark.SparkCatalog")

# enable the extensions for both Nessie and Iceberg

conf.set(

"spark.sql.extensions", "org.apache.iceberg.spark.extensions.IcebergSparkSessionExtensions,org.projectnessie.spark.extensions.NessieSparkSessionExtensions"

)

Please note that this is not the complete list of configurations. For a detailed description and complete set of parameters, refer to this blog.
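As a rough sketch of what the remaining catalog settings might look like, the helper below collects them in a dictionary. The property names follow the Nessie and Iceberg Spark integration as we understand it and should be checked against the referenced blog and the official documentation. The `arctic_catalog_settings` function, the environment variable names, and the placeholder values are hypothetical.

```python
import os


def arctic_catalog_settings(uri, token, warehouse, catalog="arctic", ref="main"):
    """Build Spark properties for a Nessie-backed Iceberg catalog (assumed property names)."""
    prefix = f"spark.sql.catalog.{catalog}"
    return {
        prefix: "org.apache.iceberg.spark.SparkCatalog",
        f"{prefix}.catalog-impl": "org.apache.iceberg.nessie.NessieCatalog",
        f"{prefix}.uri": uri,                      # catalog endpoint
        f"{prefix}.ref": ref,                      # default branch for the session
        f"{prefix}.authentication.type": "BEARER",
        f"{prefix}.authentication.token": token,   # personal access token
        f"{prefix}.warehouse": warehouse,          # S3 location for table data
        f"{prefix}.io-impl": "org.apache.iceberg.aws.s3.S3FileIO",
    }


# Hypothetical environment variable names and placeholder defaults
settings = arctic_catalog_settings(
    uri=os.environ.get("ARCTIC_URI", "https://example.invalid/arctic"),
    token=os.environ.get("ARCTIC_TOKEN", "<personal-access-token>"),
    warehouse=os.environ.get("ARCTIC_WAREHOUSE", "s3://<bucket>/<path>"),
)

# With PySpark available, the settings could then be applied to the SparkConf:
#   for key, value in settings.items():
#       conf.set(key, value)
```

Keeping the token in an environment variable rather than in the notebook avoids committing credentials along with the code.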

Once we have all the configuration ready, we should be good to proceed with our first use case.

Use Case 1: Machine Learning Experimentation

Goal: Our first use case aims to train a churn classification model on a dataset with reduced features (compared to the original) and compare its accuracy with our baseline model (hypothetically 92%). If we gain improvements on this dataset, we will make the dataset available in the “main” branch for usage by other data scientists and roll out the model to different environments. Alternatively, if we see no significant improvements, we will discard the experiment.

Create an Isolated Branch 'ML_exp_new'

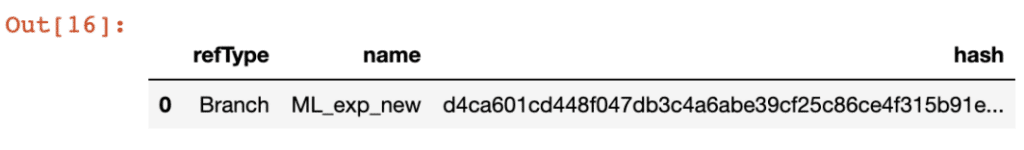

spark.sql("CREATE BRANCH ML_exp_new IN arctic").toPandas()

Once we execute this statement, we should see the following output:

This confirms that we now have a new branch “ML_exp_new” and it currently points to a hash value (Nessie). It is worth noting that we don’t have to explicitly create a “main” branch since it is the default one that we set when configuring.

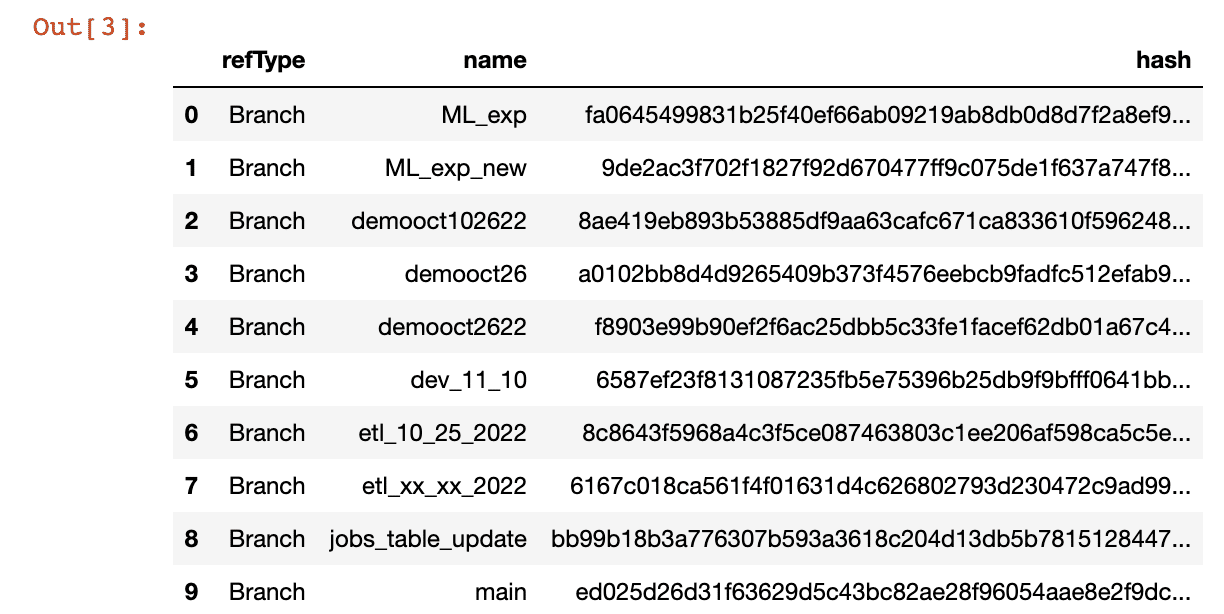

We can also list out all the branches in our catalog “arctic” using the command below:

spark.sql("LIST REFERENCES IN arctic").toPandas()

And the output shows all of them.

Use the New branch for Experiment

At this point, we are in the default branch “main.” So, let’s switch to the new branch to proceed with our experiment using the following command:

spark.sql("USE REFERENCE ML_exp_new IN arctic")

Dataset for Experimentation

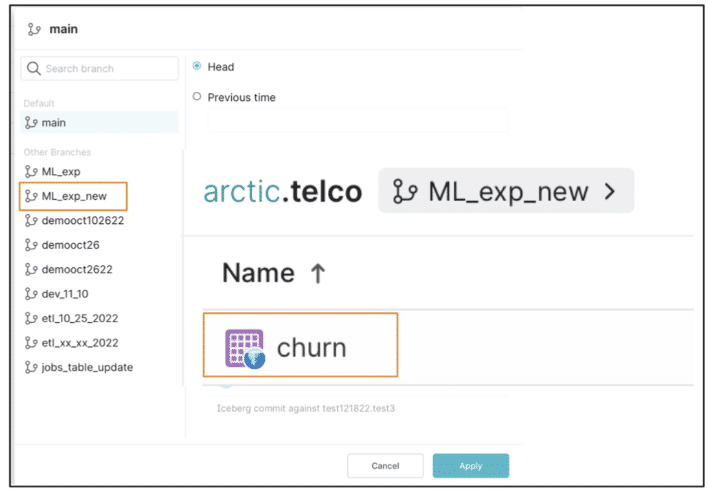

Please note that when we created the new branch “ML_exp_new,” all the datasets present in the “main” branch are now available in the new one. We can verify that by going to the Dremio Sonar UI and selecting the new branch from the catalog “arctic,” as shown below.

Based on our exploratory data analysis and feature selection work, we discovered that some features are highly correlated and some are not important. So, let’s drop the nonessential features from our dataset.

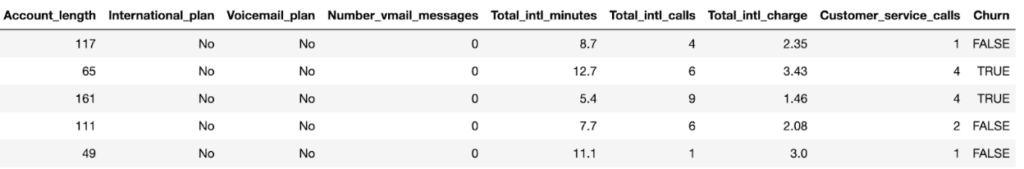

spark.sql( "ALTER TABLE arctic.telco.churn_allfeat DROP COLUMN State, Area_code, Total_day_calls, Total_day_minutes, Total_day_charge, Total_eve_calls, Total_eve_minutes, Total_eve_charge, Total_night_calls, Total_night_minutes, Total_night_charge" ).toPandas()

If we check the dataset now, the data should only have the features that we want to use with our classification model.

The one important thing to note here is that the dataset telco.churn_allfeat with fewer features is available only in the “ML_exp_new” branch and has no impact on the original dataset on the “main” branch.

This level of flexibility is critical in conducting experiments with different datasets without impacting production workloads. Another advantage of this approach is that we didn’t have to create additional dataset copies, which are usually hard to manage in a data science workflow and can lead to issues such as data and model drift. Once we are satisfied with our experiment on this dataset, we can merge the branch to make it available on the “main” branch.
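The branch lifecycle described above (create, switch, then merge or drop) can be wrapped in a small helper. This is only a sketch: `experiment_branch` and `finish_experiment` are hypothetical names, the SQL strings follow the Nessie Spark SQL extension syntax as we understand it, and the statements are recorded in a list here instead of being sent to Spark.

```python
from contextlib import contextmanager


@contextmanager
def experiment_branch(run_sql, branch, catalog="arctic", base="main"):
    """Create a branch, switch to it, and always switch back to the base branch afterwards."""
    run_sql(f"CREATE BRANCH IF NOT EXISTS {branch} IN {catalog} FROM {base}")
    run_sql(f"USE REFERENCE {branch} IN {catalog}")
    try:
        yield branch
    finally:
        run_sql(f"USE REFERENCE {base} IN {catalog}")


def finish_experiment(run_sql, branch, keep, catalog="arctic", base="main"):
    """Merge the branch into the base branch if the experiment succeeded, otherwise drop it."""
    if keep:
        run_sql(f"MERGE BRANCH {branch} INTO {base} IN {catalog}")
    else:
        run_sql(f"DROP BRANCH {branch} IN {catalog}")


# Record the statements instead of executing them
issued = []
with experiment_branch(issued.append, "ML_exp_new"):
    pass  # feature changes and model training would happen here
finish_experiment(issued.append, "ML_exp_new", keep=False)
```

In the notebook, `spark.sql` would be passed as `run_sql` in place of `issued.append`. The `finally` clause means the session returns to “main” even if the experiment code raises an error.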

Build the Churn Classification Model

Now, let’s train our model on this dataset.

df_telco_new = spark.read.table("arctic.telco.churn_allfeat").toPandas()

#prepare data

target = df_telco_new.iloc[: , -1].values

features = df_telco_new.iloc[: , : -1].values

target.reshape(-1,1)

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(features, target, test_size=0.20,random_state=101)

# train the model

from sklearn.ensemble import RandomForestClassifier

rfc = RandomForestClassifier(n_estimators=600)

rfc.fit(X_train,y_train)

predictions = rfc.predict(X_test)

# evaluate the model

from sklearn.model_selection import cross_val_score

from sklearn.metrics import accuracy_score, classification_report

from sklearn.metrics import confusion_matrix

acc = accuracy_score(y_test, predictions)

classReport = classification_report(y_test, predictions)

confMatrix = confusion_matrix(y_test, predictions)

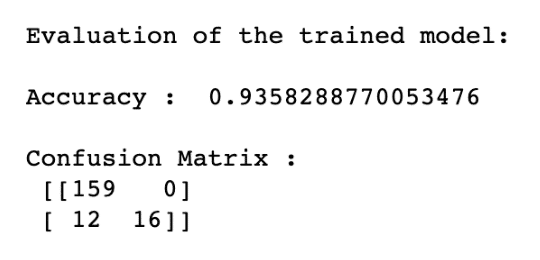

print(); print('Evaluation of the trained model: ')

print(); print('Accuracy : ', acc)

print(); print('Confusion Matrix :\n', confMatrix)

print(); print('Classification Report :\n',classReport)

The output shows that the model performs poorly on the dataset with fewer features.
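To make the evaluation numbers easier to read, here is a small sketch of how accuracy and the confusion matrix counts relate to each other, written in plain Python. The labels are made up for illustration and are not taken from the churn dataset. The helper functions are hypothetical stand-ins for what scikit-learn computes for us.

```python
def confusion_counts(y_true, y_pred, positive=True):
    """Count true/false positives and negatives for a binary label."""
    counts = {"tp": 0, "fp": 0, "fn": 0, "tn": 0}
    for actual, predicted in zip(y_true, y_pred):
        if predicted == positive:
            counts["tp" if actual == positive else "fp"] += 1
        else:
            counts["fn" if actual == positive else "tn"] += 1
    return counts


def accuracy(counts):
    """Share of all predictions that were correct."""
    return (counts["tp"] + counts["tn"]) / sum(counts.values())


def recall(counts):
    """Share of actual positives (churners) that the model found."""
    positives = counts["tp"] + counts["fn"]
    return counts["tp"] / positives if positives else 0.0


# Made-up labels: 8 customers stayed, 2 churned; the model misses one churner
y_true = [False] * 8 + [True, True]
y_pred = [False] * 8 + [False, True]

counts = confusion_counts(y_true, y_pred)
print(counts)            # {'tp': 1, 'fp': 0, 'fn': 1, 'tn': 8}
print(accuracy(counts))  # 0.9
print(recall(counts))    # 0.5
```

In this toy example the accuracy is 90% even though only half of the churners are detected, which is why the classification report is printed alongside the accuracy.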

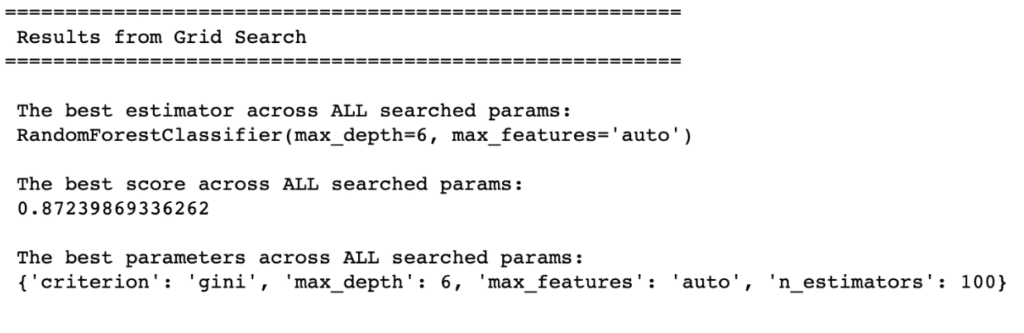

Find the Best Parameters

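from sklearn.ensemble import RandomForestClassifier

from sklearn.model_selection import GridSearchCV

parameters = {'max_depth' : [6,10],

'criterion' : ['gini', 'entropy'],

# None considers all features at each split

'max_features' : ['sqrt', 'log2', None],

'n_estimators' : [50,100]

}

# the estimator whose hyperparameters we want to tune

model = RandomForestClassifier()

grid = GridSearchCV(estimator=model, param_grid = parameters, cv = 2, verbose = 1, n_jobs = -1, refit = True)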

grid.fit(X_train, y_train)

# Results from Grid Search

print("\n The best estimator across ALL searched params:\n",

grid.best_estimator_)

print("\n The best score across ALL searched params:\n",

grid.best_score_)

print("\n The best parameters across ALL searched params:\n",

grid.best_params_)
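To get a feel for how much work a grid search does, the sketch below expands a parameter grid of the same shape (2 x 2 x 3 x 2 options) into its individual candidates using only the standard library. The `expand_grid` helper is hypothetical and simply mimics the exhaustive enumeration that a grid search performs.

```python
from itertools import product


def expand_grid(param_grid):
    """Return every combination of the listed parameter values as a dict."""
    names = sorted(param_grid)
    value_lists = [param_grid[name] for name in names]
    return [dict(zip(names, values)) for values in product(*value_lists)]


grid_shape = {
    "max_depth": [6, 10],
    "criterion": ["gini", "entropy"],
    "max_features": ["sqrt", "log2", None],
    "n_estimators": [50, 100],
}

candidates = expand_grid(grid_shape)
cv_folds = 2
total_fits = len(candidates) * cv_folds
print(len(candidates), total_fits)  # 24 48
```

With two cross-validation folds, the 24 candidates lead to 48 model fits, plus one final refit of the best candidate on the full training set when `refit` is enabled. Each extra value in the grid multiplies this number, so it is worth keeping grids small while experimenting.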

Drop the Branch

Since our experiment didn’t meet the set expectations, we will drop the branch “ML_exp_new.” To do so, we will use the below command:

spark.sql("DROP BRANCH ML_exp_new IN arctic")

Values gained

We gained several advantages from leveraging the Dremio Arctic catalog to conduct experiments with ML models in a data lakehouse, including:

- Greater flexibility in experimentation with the isolated branches feature.

- Ability to work with data without needing extra copies for each and every experiment.

- Capacity to establish the viability of the dataset (as part of the experiment) to ensure the data is good enough for the model.

Use Case 2: Machine Learning Reproducibility

Goal: We aim to reproduce a selected classification model (with an accuracy of 92.8%) on two different datasets to see if we can still achieve the desired results. As with any real-world scenario, datasets change with the addition of new training data, which presents challenges in reproducing models. Let’s see how Dremio Arctic addresses this issue.

Dataset

As described in step 2 of the exercise setup above, the dataset that we will use for this specific use case is the churn_oct_data Iceberg table as shown below:

Tag a Training Dataset

We can train a classification model on this dataset and continue with our analysis; however, the problem is that this source Iceberg table will change over time with new training data being added based on the scheduled ETL jobs.

So, even if we built a robust model that meets all our evaluation criteria, there is no tracking ability to trace the data the model was trained on. This presents significant challenges to the reproducibility of the model. To deal with this, Arctic allows us to create name tags to use for reference and offers data versioning abilities.

Since we are currently in the “main” branch, we will create a separate table to ensure we don’t impact the churn_oct_data table. As an alternative, we can create an isolated “work” branch for ourselves, as shown in the previous use case.

spark.sql( "CREATE TABLE arctic.telco.churn_oct_training AS SELECT * FROM arctic.telco.churn_oct_data" )

Now, let’s create a tag called “Oct_data” that only has the training data up to the month of October.

spark.sql("CREATE TAG Oct_data IN arctic")

As such, Dremio Arctic allows us to mark a point in time, so we can refer to it later for our analysis.

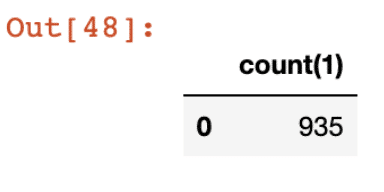

If we switch to this tag reference and count the records, we see that the table has 935 records. The commands to do so are below:

spark.sql("USE REFERENCE Oct_data IN arctic")

spark.sql("SELECT COUNT(*) FROM arctic.telco.churn_oct_training").toPandas()

New ETL Data and New Tag

For this exercise, we will insert some new data into the churn_oct_training table to simulate a real-world setting where the data engineering team runs ETL jobs to ingest new data.

The new dataset is a CSV file. We will first load it to a temporary VIEW and then insert the records into the table.

# switch back to the "main" branch, since new data is written to a branch rather than a tag

spark.sql("USE REFERENCE main IN arctic")

spark.sql(

"""CREATE OR REPLACE TEMPORARY VIEW new_train_data USING csv

OPTIONS (path "newtrainingdata.csv", header true)"""

)

spark.sql(

"INSERT INTO arctic.telco.churn_oct_training SELECT * FROM new_train_data"

)

Since our original table has now changed, let’s create a new tag so we can keep track of the new training data. We will tag it as “Nov_data,” which represents training data up to the month of November.

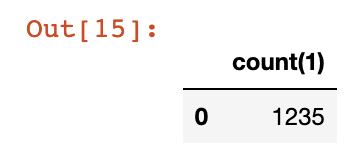

spark.sql("CREATE TAG Nov_data IN arctic")

Now, let’s check the record count using the new tag reference and validate if the new training data has been added.

spark.sql("USE REFERENCE Nov_data IN arctic")

spark.sql("SELECT COUNT(*) FROM arctic.telco.churn_oct_training").toPandas()

Great! We now have the updated training data and are able to tag it to refer to it later for the model reproducibility task.
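To build some intuition for why the two tags keep returning different record counts, here is a toy model of Git-like references in plain Python. It is not how Arctic or Nessie is implemented. The `ToyCatalog` class is hypothetical, each "commit" only stores a row count, and the 100 inserted rows are an assumed number. Only the 935 starting records come from the exercise.

```python
class ToyCatalog:
    """Toy model: commits are immutable snapshots, branches move, tags stay put."""

    def __init__(self, initial_rows):
        self.commits = [initial_rows]   # commit id = index into this list
        self.branches = {"main": 0}
        self.tags = {}

    def insert(self, branch, new_rows):
        """Add rows by creating a new commit and moving the branch to it."""
        head_rows = self.commits[self.branches[branch]]
        self.commits.append(head_rows + new_rows)
        self.branches[branch] = len(self.commits) - 1

    def create_tag(self, name, from_branch="main"):
        """Pin a name to the commit the branch points to right now."""
        self.tags[name] = self.branches[from_branch]

    def count(self, ref):
        """Row count as seen from a tag or branch."""
        commit_id = self.tags[ref] if ref in self.tags else self.branches[ref]
        return self.commits[commit_id]


catalog = ToyCatalog(initial_rows=935)
catalog.create_tag("Oct_data")
catalog.insert("main", new_rows=100)   # assumed size of the new ETL batch
catalog.create_tag("Nov_data")

print(catalog.count("Oct_data"))  # 935
print(catalog.count("Nov_data"))  # 1035
```

The insert moves the “main” branch to a new commit, while the Oct_data tag keeps pointing at the earlier one. This is what lets us come back to the October training data later.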

Reproducing the Selected Model on the Two Tagged Datasets

Finally, let’s use the tags to refer to the two checkpoints, Oct_data and Nov_data, and run the selected churn classification model on them.

We will start with the October training data, so let’s make sure we use that tag reference:

spark.sql("USE REFERENCE Oct_data IN arctic")

df_telco_new = spark.read.table("arctic.telco.churn_oct_training").toPandas()

target = df_telco_new.iloc[: , -1].values

features = df_telco_new.iloc[: , : -1].values

target.reshape(-1,1)

# split the tagged data so the model is trained and tested on this dataset

X_train, X_test, y_train, y_test = train_test_split(features, target, test_size=0.20,random_state=101)

rfc = RandomForestClassifier(n_estimators=600)

rfc.fit(X_train,y_train)

predictions = rfc.predict(X_test)

If we evaluate the model, we obtain the following result: The model’s accuracy is 93.5%.

This is pretty close to our selected model’s accuracy of 92.8%, and is in fact slightly higher.

Now, let’s use the Nov_data tag reference and see how the model does. The steps for building the model are exactly the same. We just need to reproduce the model on this new dataset.

spark.sql("USE REFERENCE Nov_data IN arctic")

After evaluating the model, we achieve the following result: The model’s accuracy is 92.7%.

Again, the model is very close to our selected model’s accuracy.
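What counts as "very close" is a judgment call, so it can help to write the check down explicitly. The sketch below compares the two accuracies reported above with the selected model's 92.8%. The `reproduces` helper and the tolerance of one percentage point are assumptions made for illustration.

```python
def reproduces(reference, observed, tolerance):
    """True if the observed metric is within the tolerance of the reference."""
    return abs(observed - reference) <= tolerance


reference_accuracy = 92.8                         # selected model, in percent
results = {"Oct_data": 93.5, "Nov_data": 92.7}    # accuracies per tagged dataset
tolerance = 1.0                                   # assumed: one percentage point

report = {
    tag: reproduces(reference_accuracy, accuracy, tolerance)
    for tag, accuracy in results.items()
}
print(report)  # {'Oct_data': True, 'Nov_data': True}
```

Agreeing on the tolerance before running the comparison keeps the decision about whether a model reproduces from being made after the fact.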

Values gained

- Reproducing the model on these two different datasets helps us understand the effectiveness of our model for further deployment in other environments.

- Dremio Arctic provides an easy way to create tagged datasets to reproduce results and keep track of data versions.

Conclusion

Model experimentation and reproducibility are two critical aspects in the machine learning world. With Dremio Arctic, we can now use features, such as isolated branching, to ensure no impact on the production data and workloads. Data versioning helps us keep track of all the commits to the data in the lakehouse and allows tracing back to them using named tags. Dremio Arctic can be accessed by multiple engines (Spark, Dremio Sonar, Flink, etc.), depending on the workload you want to run. If you want to learn how Dremio Arctic can support other tasks in a lakehouse, such as data quality, check out this blog post on managing data as code with Dremio Arctic.