15 minute read · May 26, 2022

Dremio 21.0: The Open Lakehouse Platform Just Got a Whole Lot Faster!

· Staff Product Manager, Dremio

· Senior Director, Dremio Sonar

· Senior Architect, Dremio

· Software Engineer, Dremio Sonar

Executive Summary

Dremio is the easy and open data lakehouse, providing an intuitive UI that enables data teams to get started with analytics in minutes, as well as the flexibility to use Dremio’s lightning-fast SQL query service and any other data processing engine.

Dremio Sonar is the SQL engine on the lakehouse platform that delivers best in class performance for the benefit of our customers. With key performance enhancements in the query engine, Dremio 21.0 has shown nearly 30% improvement in overall query latency in order to complete all 99 queries in TPC-DS benchmarks when compared to the prior releases.

This blog post gives a deep dive look into the key enhancements that we have implemented in Dremio 21.0 to achieve significant performance improvement on TPC-DS benchmarking, including:

- Common subexpression elimination

- Native hash-table and native copiers

- Switching to TSC Timer

- Reducing exchanges with UnionAll

Our Approach

To start, we began with the 99 queries in TPC-DS and analyzed the performance of Dremio on different AWS instance types (m5d.8xlarge, i3.4xlarge, etc.). We collected query profiles and looked at both query metrics and system metrics (i.e., CPU/memory/disk/network usage) to identify bottlenecks and potential areas for improvement.

When addressing performance issues, it’s possible that a solution that looks good on paper does not work optimally in practice. We mandated that performance enhancements must first be prototyped within a strict time frame and tested with TPC-DS before we went ahead with a full-fledged implementation. Our engineering team ensures that our enhancements are always built with the highest bar for quality and stability throughout the development process.

Key Performance Enhancements in Dremio 21.0

After thoroughly analyzing the major bottlenecks we identified earlier, we implemented the following key features to improve the overall performance of the query engine. We validated the percentage gain through our internal TPC-DS benchmarking.

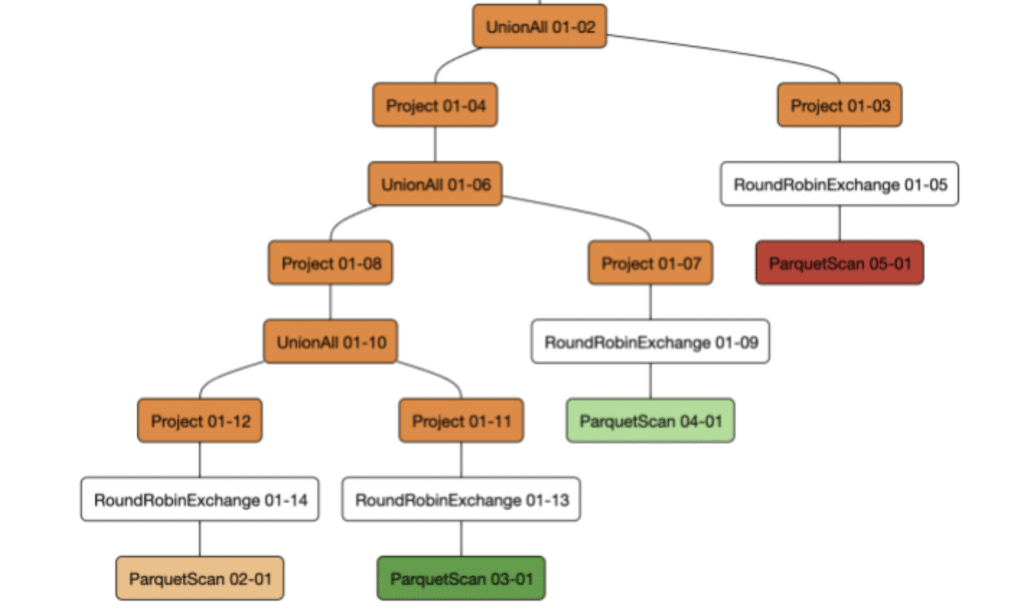

I. Common Subexpression Elimination

Multiple TPC-DS queries use a with clause, that results in a subexpression that is used in multiple parts of the query. The optimal solution to handle such a query is :

- for the planner to identify these identical/common subexpressions

- evaluate each common subexpression only once

- use the result of the common subexpression in the query multiple times.

The Dremio execution model did not fully optimize for this case, and the subexpression was evaluated multiple times during the query. If the subexpression was complex (e.g., if it had multiple aggregations and joins), the resulting duplicate work increased the resource usage.

There were two ways to address this :

- Break the single query to multiple standalone queries, and use the output of one query as input to subsequent queries.

- Enhance the query plan to support a directed acyclic graph (DAG) like structure and feed the output of one phase to multiple downstream phases.

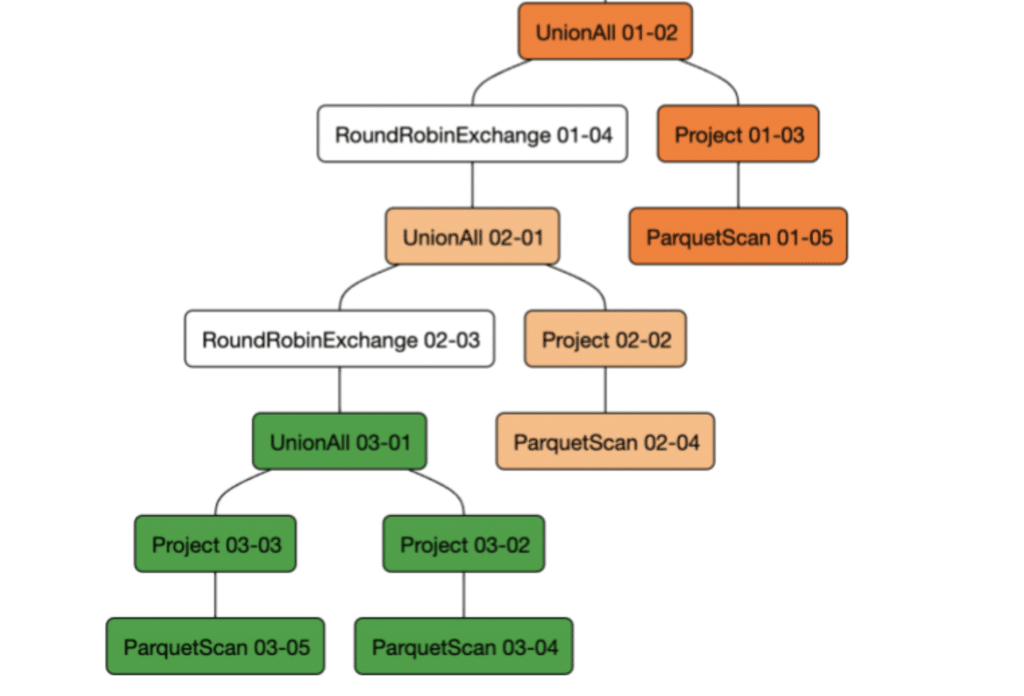

We chose the second approach primarily because it leads easily to early termination in a pipeline model. For example, if the query has a limit operator at the top of the graph and a common subexpression at the bottom, the subexpression evaluation can be terminated as soon as the limit condition is reached. In the first approach, this is not feasible since each of the constituent subqueries are run standalone.

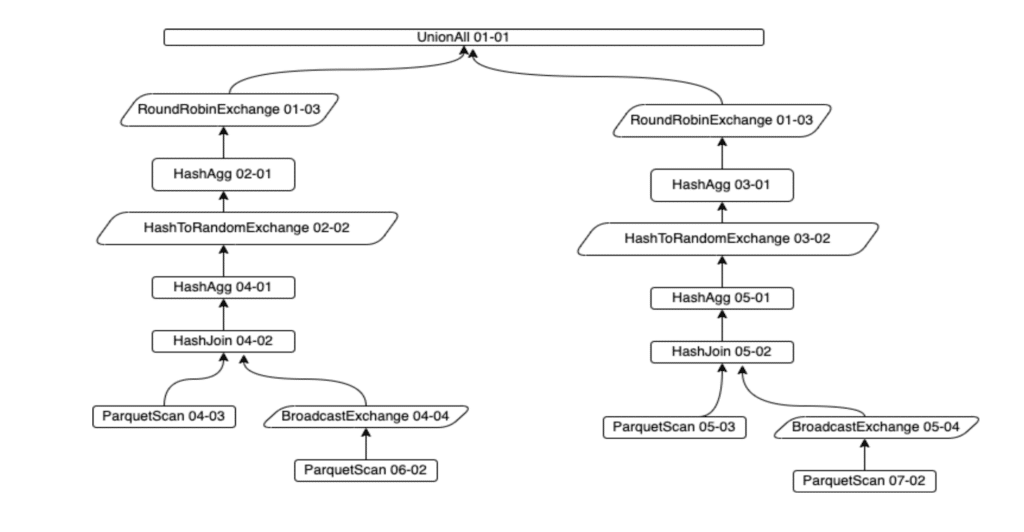

Fragment tree without optimization

Fragment tree after optimization

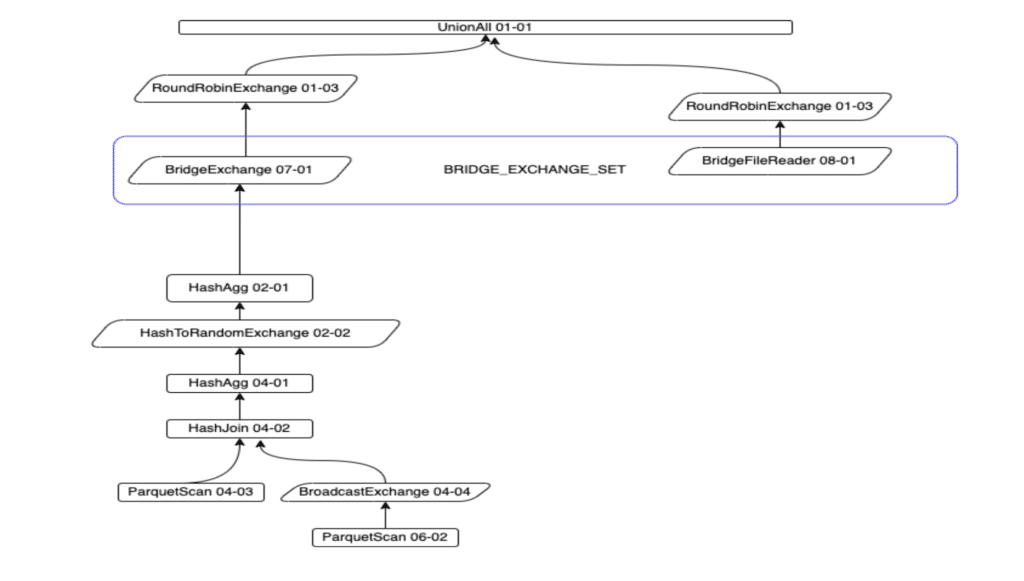

The chart below represents the query-level performance gain achieved through the implementation of this enhancement.

As expected, this optimization improved several of the TPC-DS queries by more than 50%. In this implementation, we handled several tricky issues where the query planner needed to optimally identify common subexpressions in the presence of runtime filters, partition pruning, and substitutions (reflections/materialized views).

II. Native Hash-Table and Native Copiers

The query profiles showed us that the query engine spent significant time in hash-join and hash-aggregation, and even a small improvement in that area would yield significant dividends. The Dremio execution engine was already heavily optimized in this area, with efficient columnar copiers custom-built for each field type, vectorized algorithms for hash computation, and custom hash-table implementation.

There was one case that we were not fully taking advantage of, however. At the start of the query, we knew the data-types of the key(s) in the hash-table and hence, the exact size of each entry in the table (at least for the fixed length types). We optimized for this case by having a different hash-table implementation for each specific key size (4 bytes, 8 bytes, 16 bytes, and so on). This reduces branches, improves pipelining, and gives better memory alignment.

The cleanest way to do this was by using templates in C++. Since Dremio uses Apache Arrow for all in-memory data representation, we are able to switch seamlessly between Java & C++ (similar to our strategy with Gandiva for expression evaluation in filters and projects). Along the way, we also evaluated newer libraries for hash computation and for handling hash collisions. However, our existing Java algorithm worked best, so we simply ported it to C++ and enhanced it with templates for each key size.

We also did similar optimizations for the data copiers. We use multiple variants of copiers extensively in filters, aggregates, joins, and exchanges. Again, pursuing a similar strategy of using efficient C++ code improved the copiers’ performance.

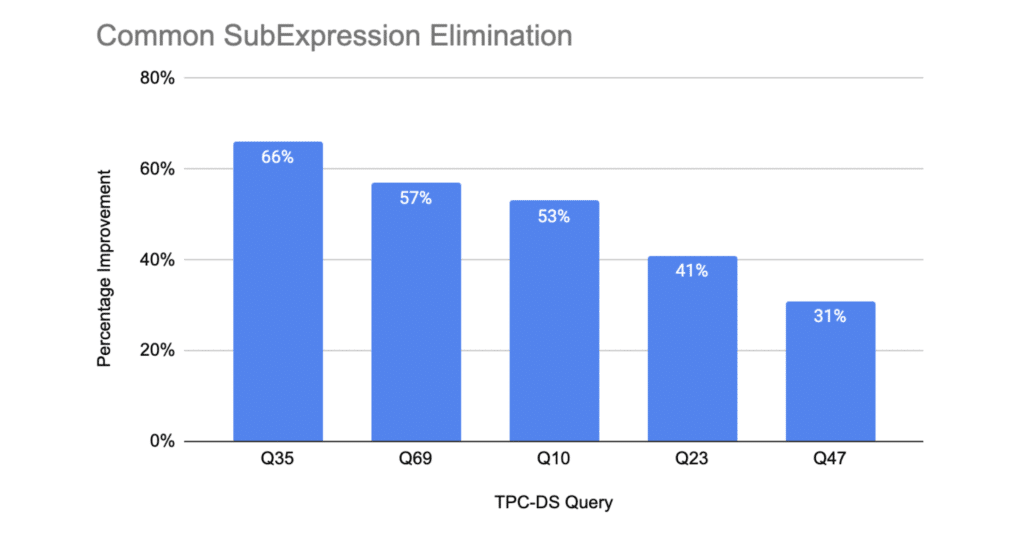

The chart below represents the query-level performance gain achieved through the implementation of this enhancement.

In addition to the above, almost all other queries improved between 5% to 10%.

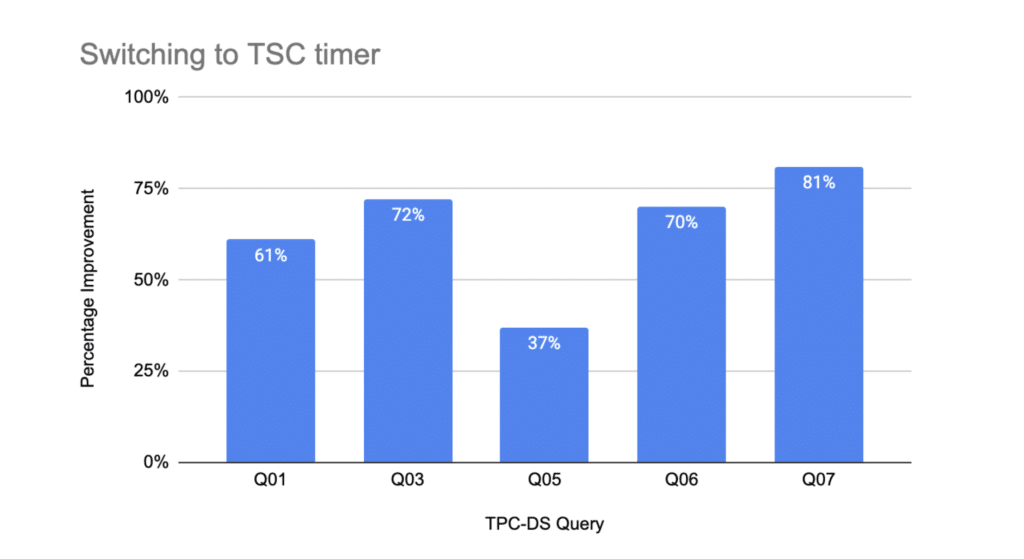

III. Switching to TSC Timer

We discovered that the query times on i3.4x instances were nearly five times higher than that on the m5d.8x instances, even though they have half the cores of the m5d.8x. This was surprising since we expected the query times to double (in proportion to cores). Getting good performance on i3 instances was important since they are cache-optimized and are specifically recommended by AWS for data-intensive applications.

Further debugging showed that the Dremio executor was making many system calls on the i3 instances, and the gettimeofday() calls were the trigger for this. We came across other databases that hit similar issues, and adopted the same solution: switching the clock source to TSC from XEN. This gave a boost to the performance on i3 instances and brought query latencies to expected values.

While experimenting with the timer, we realized that the executors were doing a lot of timer calls during query runtime. The overhead of these calls added up and caused a non-trivial overhead of the CPU usage. We eliminated these calls in the common paths for further improvement.

The chart below represents the query-level performance gain achieved through the implementation of this enhancement, on i3 instances.

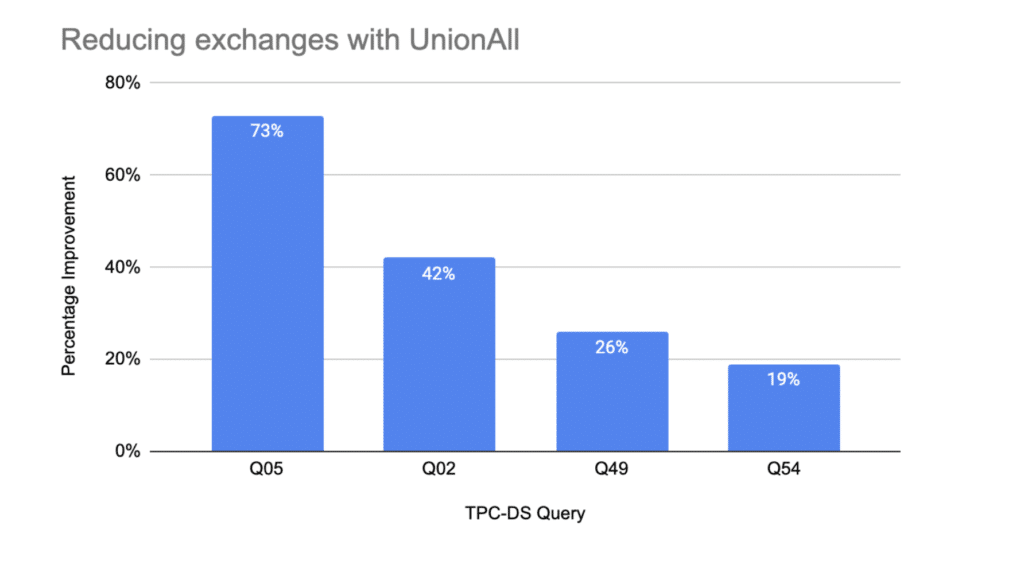

IV. Reducing Exchanges with UnionAll

We observed that queries that included a UnionAll were slower and showed a network bottleneck. The query plan for UnionAll included a mandatory round-robin exchange. Although this distributed the load uniformly, the network transfer overhead was significant. Also, the operator always processed the left-side input and then switched to the right side. However, the exchange was on the right side of the branch, and its batches would stay unprocessed till the left side was completed. This ended up causing excessive memory usage when the query had high parallelism.

The main reason for inserting exchanges under Union was to maximize parallelism. For example, if one branch of Union is simply reading a single file, parallelism of this branch becomes one. Without an additional exchange operator, parallelism of a phase that includes this branch is restricted by this branch. To overcome this parallelism limit, we insert an exchange to that branch so that Union can be executed without the limit imposed on a specific branch. However, forcing this in every case would cause the problem mentioned above.

We improved this parallelism logic by using a priority queue that keeps track of parallelism of each branch. We first get two branches with the lowest parallelism. If the difference in parallelism is small (under a certain threshold), we don't insert any exchanges on the children. Otherwise, we insert an exchange on the smaller side. In this way, binary union operators are built by checking and comparing parallelism in ascending order, which results in a left deep tree with exchanges inserted only when they are needed. This significantly reduced the network traffic and improved the latency for several queries.

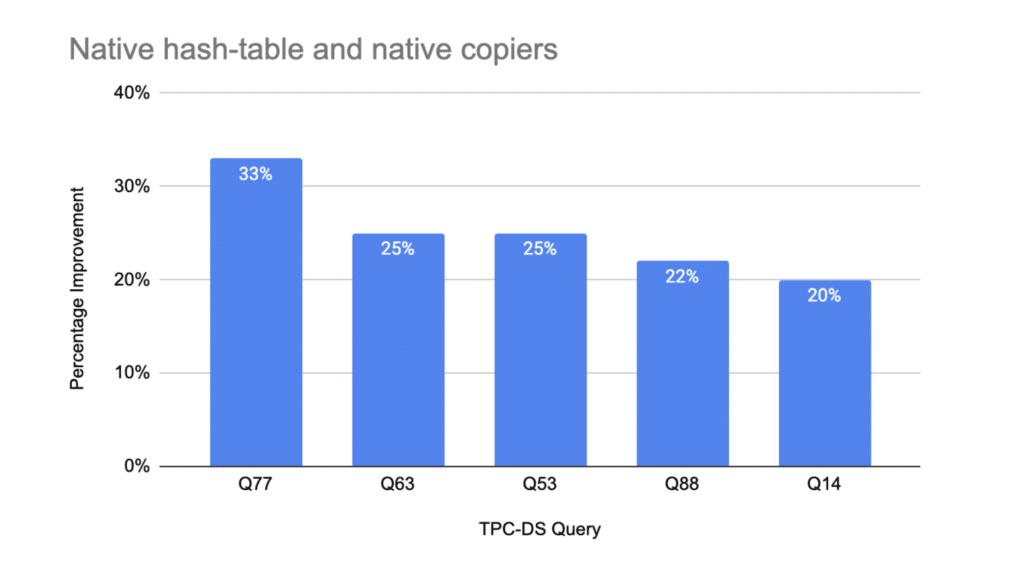

Query planning tree without the optimization

Query planning tree with the optimization

The chart below represents the query-level performance gain achieved through the implementation of this enhancement.

Conclusion

With all of the above enhancements, Dremio’s query engine got close to 30% improvement in query latency to complete all the 99 queries in the TPC-DS test suite. On some instance types (e.g., i3.4xlarge), the improvements shown by some queries were even more dramatic: we got close to 3x improvement in query latency. In addition, several of the enhancements resulted in more optimal resource usage on the executors – i.e., lesser memory envelopes and lesser network traffic – which improved the overall stability of the engine.

What’s Coming Next

As we mentioned at the beginning of the article, we are relentlessly looking for ways to improve the performance of the Dremio Query Engine. Some future areas for optimization include:

- Newer garbage collection algorithms to optimize heavy workloads.

- Adding spill support for more operators (join, windows, etc.)

- Dealing with skews in data, for example, uneven data files, hash-join when most of the records are skewed towards one or more “popular” keys.

- Improve support for wide tables

- Improve join-ordering to use primary key details

- Improve runtime filters for non-partitioned datasets

Get started with Dremio today!

Sign up for AI Ready Data content