12 minute read · October 25, 2024

Breaking Down the Benefits of Lakehouses, Apache Iceberg and Dremio

Head of DevRel, Dremio

Organizations are striving to build architectures that manage massive volumes of data and maximize the insights drawn from it. Traditional data architectures, however, often fail to handle the scale and complexity required. The modern answer lies in the data lakehouse, a hybrid approach combining the best aspects of data lakes and data warehouses.

This blog will delve into the core advantages of a data lakehouse, spotlighting the essential roles of Apache Iceberg and Dremio in making this architecture more accessible, flexible, and powerful. By exploring the unique capabilities of each component—how Iceberg transforms a data lake into a managed, structured environment and how Dremio simplifies data access and acceleration—you’ll gain a deeper understanding of why this pairing is pivotal to modern data strategy.

Key Takeaways: By the end of this blog, you’ll see how building an Iceberg-based data lakehouse with Dremio at the center can revolutionize data management, improve data accessibility, and reduce both storage and compute costs.

You can also try building a lakehouse on your laptop and get hands-on!

What is a Data Lakehouse?

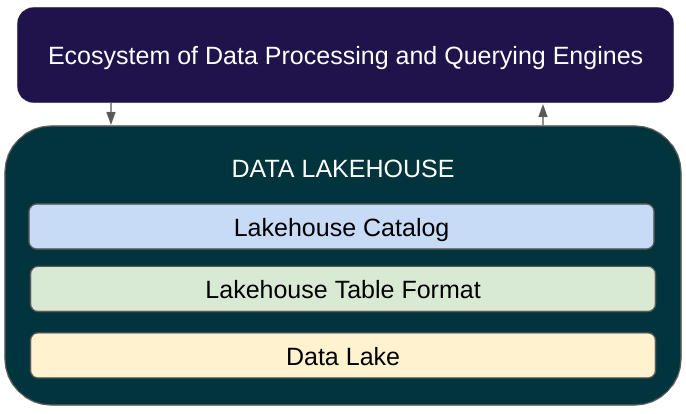

A data lakehouse is an architectural model that blends the flexibility of data lakes with the structured data management capabilities of data warehouses. By bringing these two worlds together, a lakehouse provides a single, unified platform where your data tools can work with datasets on the data lake as if they were tables in a data warehouse.

Key Benefits of a Data Lakehouse:

- Central Source of Truth: A lakehouse creates a centralized repository that becomes an organization's authoritative source of data. With your datasets primarily persisted in one place in an open format, it becomes much easier to ensure consistency, accuracy, and access to the most current information across all teams and applications.

- Reduced Storage and Compute Costs: Traditional data warehouses can become costly as data volumes grow. A lakehouse reduces costs by minimizing both the data you store in a warehouse and the ETL workloads needed to load it there. You work with the data directly on the lake using your favorite tools.

- Faster Data Delivery: A data lakehouse is optimized for performance, ensuring that end users can quickly access fresh and reliable data. Handling data transformations and governance directly within the lakehouse can streamline data flows, reduce processing time, and accelerate insights for data consumers across the organization.

In essence, the data lakehouse delivers a powerful blend of scalability, cost-effectiveness, and data accessibility, setting the foundation for efficient data operations and impactful business intelligence.

What is Apache Iceberg?

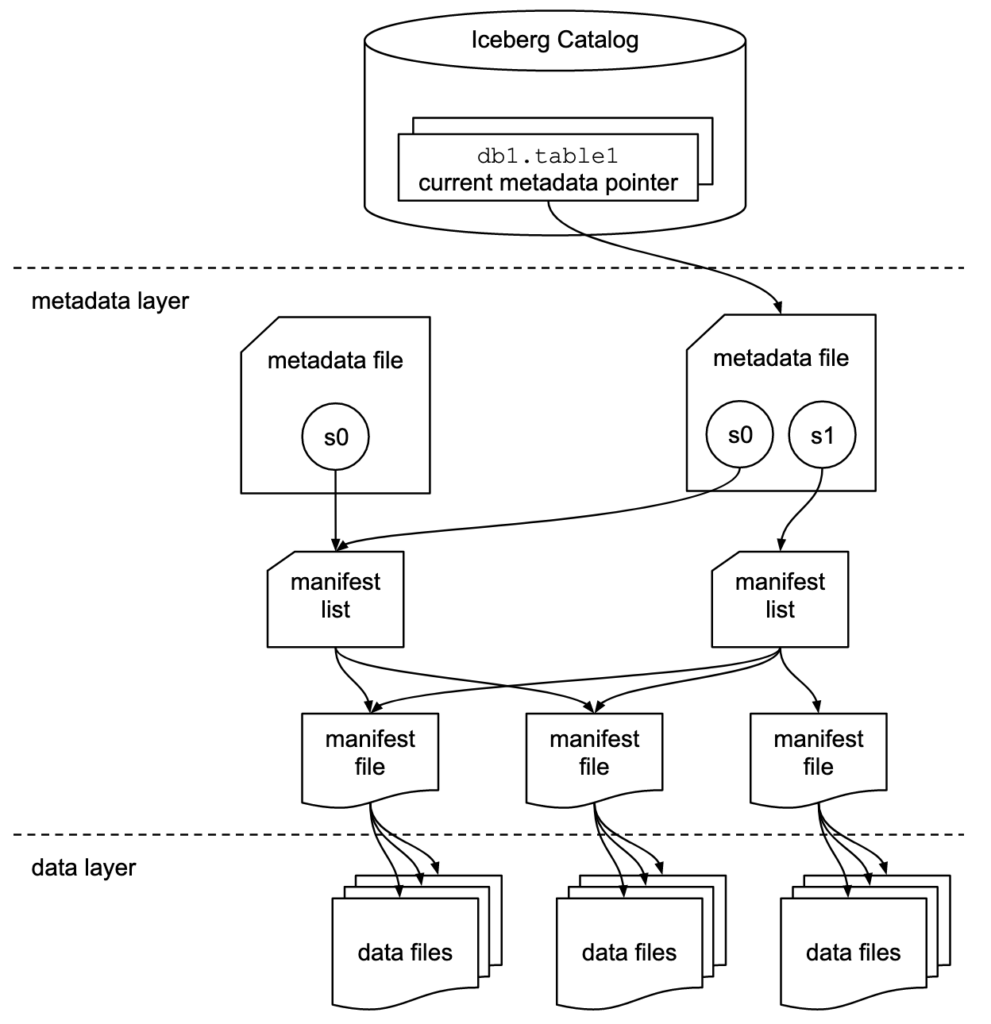

Apache Iceberg is an open-source table format designed to bring high performance and robust management to data lakes, effectively enabling them to function as structured, fully managed data lakehouses. By introducing features tailored for large-scale analytics, Iceberg addresses many of the challenges organizations face when trying to achieve efficient, reliable, and scalable data processing within a lakehouse.

Unique Features of Apache Iceberg:

- Partition Evolution & Hidden Partitioning: Traditionally, partitioning strategies require careful planning, and once set, they can be difficult to modify. Iceberg, however, enables partition evolution, allowing you to adjust partition schemes over time without re-partitioning or rewriting large datasets. Additionally, Iceberg’s hidden partitioning feature eliminates the need for manual partition management, letting users query data flexibly without knowing the partition layout—resulting in simpler, faster queries.

- Rich Ecosystem and Broad Support: Apache Iceberg has developed a strong ecosystem and is supported by many major data platforms, including Dremio, Snowflake, Upsolver, AWS, GCP, and others. This compatibility allows teams to use Iceberg seamlessly within various environments, enhancing data accessibility across their architecture.
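To make hidden partitioning and partition evolution more concrete, here is a minimal pure-Python sketch of the idea. It is a toy model, not Iceberg's implementation or API: the names `ToyPartitionedTable`, `DataFile`, `by_month`, `by_day`, and `evolve_partition_spec` are all hypothetical. It assumes each data file remembers the partition spec that wrote it, so a filter on the source column can prune files under any spec without the user naming a partition column.

```python
from dataclasses import dataclass
from datetime import date

# Hypothetical partition transforms: derive a partition value from a column value.
def by_month(d: date) -> str:
    return d.strftime("%Y-%m")

def by_day(d: date) -> str:
    return d.isoformat()

@dataclass
class DataFile:
    spec_id: int    # which partition spec was active when the file was written
    partition: str  # partition value derived by that spec's transform
    rows: list

class ToyPartitionedTable:
    def __init__(self, transform):
        self.specs = [transform]  # history of partition specs
        self.files = []

    def evolve_partition_spec(self, transform):
        # Only future writes use the new spec; existing files are not rewritten.
        self.specs.append(transform)

    def append(self, rows):
        spec_id = len(self.specs) - 1
        transform = self.specs[spec_id]
        groups = {}
        for row in rows:
            groups.setdefault(transform(row["event_date"]), []).append(row)
        for partition, grouped in groups.items():
            self.files.append(DataFile(spec_id, partition, grouped))

    def scan(self, event_date):
        # The caller filters on event_date only. Each file is pruned using
        # the transform of the spec that wrote it.
        matched, files_read = [], 0
        for f in self.files:
            if self.specs[f.spec_id](event_date) != f.partition:
                continue
            files_read += 1
            matched.extend(r for r in f.rows if r["event_date"] == event_date)
        return matched, files_read

table = ToyPartitionedTable(by_month)
table.append([
    {"id": 1, "event_date": date(2024, 1, 15)},
    {"id": 2, "event_date": date(2024, 2, 3)},
])
table.evolve_partition_spec(by_day)  # switch to daily partitions going forward
table.append([
    {"id": 3, "event_date": date(2024, 3, 1)},
    {"id": 4, "event_date": date(2024, 3, 2)},
])
rows, files_read = table.scan(date(2024, 3, 2))
```

In this sketch the monthly files written before the spec change stay exactly as they were, and the scan still opens only the one file that can contain the requested date.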

Benefits for Lakehouses:

Iceberg enables datasets on the data lake to be seen as tables, the core functionality of the data lakehouse paradigm. These capabilities allow organizations to maintain a clean, structured, and high-performance data environment while scaling effortlessly to meet growing data demands. As a result, Iceberg is a foundational element in building a reliable lakehouse, empowering teams to manage large datasets with the structure and efficiency of a traditional data warehouse—without sacrificing the flexibility of a data lake.

How Dremio Makes Lakehouses Easier to Use

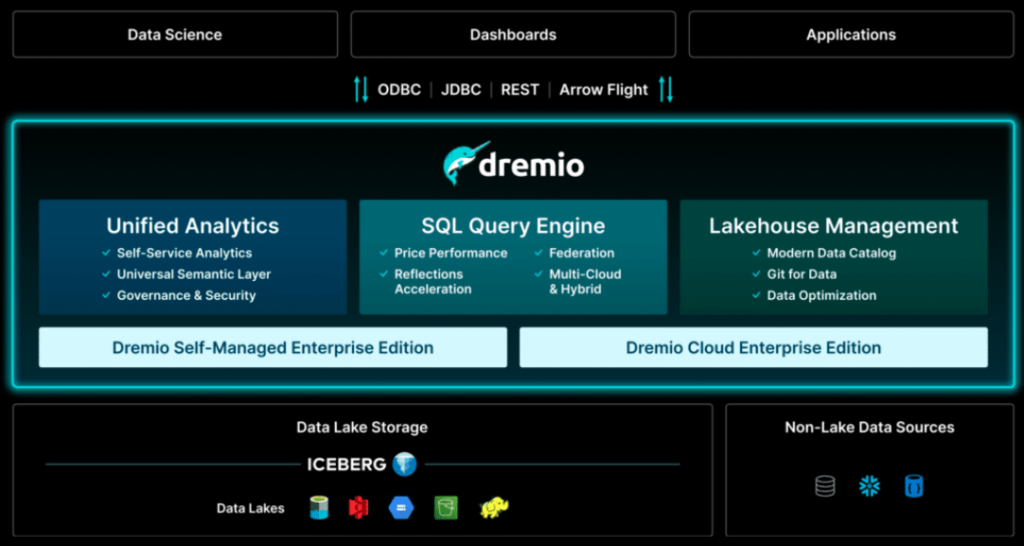

Dremio is a powerful data platform designed to simplify and accelerate data workflows. By providing seamless integration with various data sources and offering a user-friendly layer for accessing and managing data, Dremio enables data teams to unify and analyze data across cloud and on-prem environments efficiently.

Key Features of Dremio:

- Connectivity Across Sources: Dremio connects easily to a wide array of data sources, including:

  - Databases: Integrates with databases such as Postgres, MySQL, and MongoDB, allowing data from different operational systems to be accessed and analyzed in a single interface.

  - Data Lakes: Supports cloud and on-prem data lakes and object stores, such as HDFS, S3, ADLS, Google Cloud Storage, Vast Data, MinIO, Pure Storage, and NetApp StorageGRID, making it easy to centralize data scattered across various storage platforms.

  - Data Warehouses: Connects to data warehouses such as Snowflake, Redshift, and more, enabling data teams to access and blend data from both structured and semi-structured sources for a unified view.

- Semantic Layer for Consistent Metrics: Dremio provides a semantic layer that allows organizations to define consistent business metrics that can be used across BI tools and Python notebooks. By creating universal metric definitions within Dremio, teams ensure that every user accesses data aligned with the organization’s standardized definitions, improving consistency and clarity in reporting.

- Reflections for Data Acceleration: Dremio’s Reflections feature accelerates queries by precomputing complex aggregations and joins and storing the results as optimized Iceberg datasets on your data lake, reducing the time it takes to retrieve and process data for end users. Because Reflections can be created or adjusted as needed, datasets remain fast to query even as they scale.
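The idea behind Reflections, precomputing an aggregation once and answering matching queries from the stored result, can be sketched in a few lines of Python. This is a conceptual toy, not Dremio's API or query planner: `ToyReflection`, `aggregate_by`, and `total_by` are hypothetical names, and the matching rule (exact match on group key and summed column) is a deliberate simplification.

```python
from collections import defaultdict

def aggregate_by(rows, key, value):
    # Full scan: sum `value` per distinct `key` across all rows.
    totals = defaultdict(int)
    for row in rows:
        totals[row[key]] += row[value]
    return dict(totals)

class ToyReflection:
    # A precomputed aggregation, built once and reused by later queries.
    def __init__(self, rows, key, value):
        self.key = key
        self.value = value
        self.totals = aggregate_by(rows, key, value)

def total_by(rows, key, value, reflections):
    # Answer from a matching reflection if one exists, else scan the raw rows.
    for reflection in reflections:
        if (reflection.key, reflection.value) == (key, value):
            return reflection.totals, "reflection"
    return aggregate_by(rows, key, value), "raw scan"

orders = [
    {"region": "EU", "channel": "web", "amount": 120},
    {"region": "EU", "channel": "store", "amount": 80},
    {"region": "US", "channel": "web", "amount": 50},
]
reflections = [ToyReflection(orders, "region", "amount")]

by_region, region_source = total_by(orders, "region", "amount", reflections)
by_channel, channel_source = total_by(orders, "channel", "amount", reflections)
```

Here the per-region query is served from the precomputed totals, while the per-channel query falls back to scanning the raw rows because no reflection covers it. The caller issues the same query either way.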

Making Lakehouses More Accessible with Dremio:

Dremio’s user-friendly platform bridges the gap between raw data and meaningful insights. With its robust connectivity, powerful semantic layer, and efficient query acceleration, Dremio simplifies data access and unification across environments, helping teams leverage their lakehouse’s full potential to provide decision-makers faster, more reliable data.

The Power of Using Dremio and Apache Iceberg Together

When combined, Dremio and Apache Iceberg create a compelling data lakehouse ecosystem, enabling advanced performance and flexibility that streamline data workflows. Using Dremio’s query acceleration and Iceberg’s table format together brings additional capabilities that help teams achieve higher efficiency and responsiveness in their data operations.

Enhanced Reflection Capabilities with Iceberg:

- Live Reflections: When data is stored in Iceberg tables, Dremio can leverage live reflections, which automatically refresh whenever the underlying data changes. This feature ensures that end users always access the most current data without requiring extensive manual intervention, enhancing both accuracy and timeliness in data delivery.

- Incremental Reflections: Iceberg tables also enable incremental reflections, where a refresh processes only data that is new or has changed since the last refresh. This selective update mechanism saves both time and computational resources, allowing for faster, more cost-effective refresh cycles, which is particularly beneficial when working with large datasets that are frequently updated.
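An incremental refresh can be pictured as follows. This is a simplified sketch under stated assumptions, not how Dremio or Iceberg implement it: the table is append-only, each append produces one snapshot, and `ToyAppendOnlyTable` and `IncrementalTotals` are hypothetical names. The materialized totals remember how many snapshots they have already absorbed and read only the ones added since.

```python
class ToyAppendOnlyTable:
    # Each append is recorded as a separate snapshot (a list of rows).
    def __init__(self):
        self.snapshots = []

    def append(self, rows):
        self.snapshots.append(list(rows))

class IncrementalTotals:
    # Materialized sum of `value` per `key`, refreshed incrementally.
    def __init__(self, table, key, value):
        self.table = table
        self.key = key
        self.value = value
        self.totals = {}
        self.snapshots_seen = 0  # snapshots already folded into totals

    def refresh(self):
        # Process only snapshots added since the previous refresh.
        rows_processed = 0
        for snapshot in self.table.snapshots[self.snapshots_seen:]:
            for row in snapshot:
                k = row[self.key]
                self.totals[k] = self.totals.get(k, 0) + row[self.value]
                rows_processed += 1
        self.snapshots_seen = len(self.table.snapshots)
        return rows_processed

table = ToyAppendOnlyTable()
totals = IncrementalTotals(table, "region", "amount")

table.append([
    {"region": "EU", "amount": 120},
    {"region": "EU", "amount": 80},
    {"region": "US", "amount": 50},
])
first_refresh = totals.refresh()   # reads the initial snapshot

table.append([{"region": "US", "amount": 25}])
second_refresh = totals.refresh()  # reads only the newly appended snapshot
```

The second refresh touches a single row rather than the whole table, which is where the time and compute savings come from. Handling updates and deletes would need more bookkeeping than this sketch shows.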

Synergistic Efficiency for Scalable Data Operations:

By combining Dremio’s capabilities with the Iceberg table format, data teams can optimize their lakehouse in ways that wouldn’t be possible using either tool independently. Iceberg’s flexible partitioning and schema evolution features pair seamlessly with Dremio’s accelerated query capabilities, allowing organizations to scale their data operations without compromising on performance or increasing costs. This integration ultimately leads to faster, more responsive data processing, helping teams deliver insights in near real-time.

Together, Dremio and Iceberg enable a streamlined, highly optimized data lakehouse that not only enhances data accessibility but also maximizes efficiency and reduces operational complexity.

Conclusion: Building a Modern Data Platform with Iceberg and Dremio

In a landscape where data accessibility, speed, and cost-efficiency are essential, building a modern data platform with Apache Iceberg and Dremio at the center can provide a significant competitive advantage. By adopting a lakehouse architecture, organizations can unify their data into a single, reliable source of truth that balances the flexibility of data lakes with the structure of data warehouses.

Apache Iceberg brings crucial capabilities to the lakehouse, enabling advanced features like partition evolution, hidden partitioning, and time travel (querying a table as it existed at an earlier snapshot) that make data management more efficient and adaptable. With Dremio, data teams gain seamless connectivity across databases, data lakes, and warehouses, along with a powerful semantic layer for consistent metrics and reflections that accelerate query performance. Together, they offer a highly responsive, scalable lakehouse solution that reduces storage and compute costs, optimizes data workflows, and speeds up data access.

For organizations looking to modernize their data architecture, an Iceberg-based data lakehouse with Dremio provides a future-ready approach that ensures reliable, high-performance data management and analytics at scale.
