February 1, 2025

Building AI Agents with LangChain using Dremio, Iceberg, and Unified Data

· Head of DevRel, Dremio

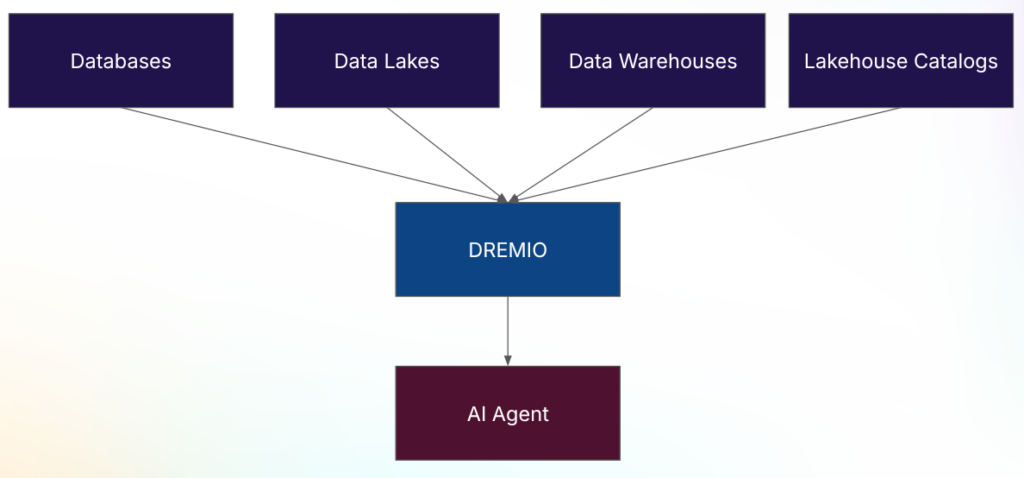

AI-driven applications have revolutionized the way businesses extract value from data. From virtual assistants to predictive analytics, AI models thrive on structured, well-organized data. However, one of the biggest challenges in building AI-powered solutions is efficiently accessing and integrating data from multiple sources: data lakes, data warehouses, relational databases, and streaming platforms.

This is where Dremio shines. As a self-service data platform, Dremio enables organizations to unify data across different sources, model it in a semantic layer, and accelerate queries using Reflections, making it an ideal foundation for AI applications. Moreover, Apache Iceberg, the next-generation table format, allows AI models to work with consistent, transactional data, ensuring accuracy and reliability.

In this blog, we’ll explore how to build AI agents using LangChain, a popular Python framework for developing applications powered by large language models (LLMs). We’ll demonstrate how AI agents can leverage Dremio to:

- Connect to data across multiple sources, including Iceberg, relational databases, and data lakes.

- Query and process structured datasets with minimal overhead.

- Enhance performance with Dremio Reflections, making AI-driven analytics faster.

- Seamlessly integrate an Iceberg lakehouse with other enterprise data sources.

To bring these concepts to life, we’ll walk through two real-world AI-powered applications:

- A finance-focused AI assistant that helps analysts retrieve portfolio insights and risk assessments.

- A healthcare AI tool that enables medical professionals to query patient records and lab results.

By the end of this blog, you’ll understand how to build AI-powered tools that leverage Dremio’s data unification, LangChain’s intelligent agents, and the scalability of Apache Iceberg to deliver advanced analytics and decision-making. Let’s dive in!

Benefits of Dremio for Integrating and Wrangling Data

In AI-driven applications, the quality and accessibility of data play a crucial role in model accuracy and performance. AI agents must process structured, reliable data from various sources—data lakes, data warehouses, and transactional databases—to provide meaningful insights. However, integrating and querying this data efficiently can be a major challenge.

This is where Dremio excels. By serving as a unified data access layer, Dremio enables organizations to break down data silos, optimize query performance, and provide real-time access to structured datasets. Below are the key benefits of using Dremio as the foundation for AI applications:

1. Seamless Data Unification Across Sources

Dremio connects directly to a wide range of data sources, including:

✅ Lakehouse Table Formats like Apache Iceberg (Storage, Nessie, Polaris, Unity, Glue, REST) and Delta Lake (Storage)

✅ Data Warehouses such as Redshift, Snowflake and more

✅ Cloud and on-prem object stores such as Amazon S3, Azure Data Lake, and Google Cloud Storage

✅ Traditional RDBMS like PostgreSQL, MySQL, and SQL Server

✅ File formats such as JSON, CSV, and Parquet

By providing a single SQL interface across these diverse data sources, Dremio eliminates the need for complex ETL pipelines, enabling AI applications to access data in its native location.
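To make the single-interface idea concrete, here is a minimal sketch of a federated query that joins an Iceberg table with a PostgreSQL table in one SQL statement. The source names (`lakehouse`, `postgres`), table paths, and column names are hypothetical placeholders, and `quote_path` is a small helper written for this illustration; it assumes path parts contain no double quotes.

```python
def quote_path(*parts: str) -> str:
    """Join path parts into a double-quoted, dot-separated identifier.

    Assumes the parts themselves contain no double quotes.
    """
    return ".".join(f'"{part}"' for part in parts)


# Hypothetical dataset paths: one Iceberg table, one PostgreSQL table.
ORDERS = quote_path("lakehouse", "sales", "orders")
CUSTOMERS = quote_path("postgres", "public", "customers")

# One SQL statement spanning both sources (column names are assumptions).
FEDERATED_QUERY = f"""
SELECT c.customer_name, SUM(o.amount) AS total_spent
FROM {ORDERS} AS o
JOIN {CUSTOMERS} AS c ON o.customer_id = c.customer_id
GROUP BY c.customer_name
ORDER BY total_spent DESC
LIMIT 10
"""

if __name__ == "__main__":
    print(FEDERATED_QUERY)
```

The resulting string could be sent to Dremio like any other query once a connection exists, so the application code does not need to know which system holds which table.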

2. Semantic Layer for AI-Ready Data

AI models work best when they operate on well-structured, high-quality datasets. Dremio’s semantic layer allows organizations to:

🔹 Define virtual datasets that present business-friendly views of raw data

🔹 Ensure consistent data definitions across different AI models and applications

🔹 Enable governance and security policies without duplicating data

By structuring raw datasets into curated, AI-ready datasets, data teams can enable faster, more reliable insights without the complexities of traditional data wrangling.
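As a rough illustration of a virtual dataset, the sketch below builds a `CREATE OR REPLACE VIEW` statement that exposes a curated slice of a raw table. The view path `finance.active_accounts` and the `status` column are assumptions made for this example, and `create_view_sql` is a hypothetical helper, not part of any library.

```python
def create_view_sql(view_path: str, select_sql: str) -> str:
    """Wrap a SELECT statement in a CREATE OR REPLACE VIEW statement."""
    body = select_sql.strip().rstrip(";")  # drop whitespace and a trailing semicolon
    return f"CREATE OR REPLACE VIEW {view_path} AS\n{body}"


# Hypothetical curated view over the raw accounts table.
ACTIVE_ACCOUNTS_VIEW = create_view_sql(
    "finance.active_accounts",
    """
    SELECT account_id, account_name
    FROM finance.accounts
    WHERE status = 'active';
    """,
)

if __name__ == "__main__":
    print(ACTIVE_ACCOUNTS_VIEW)
```

An AI tool would then query the view rather than the raw table, so the business rule (here, "only active accounts") lives in one place instead of being repeated in every prompt or tool.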

3. Query Acceleration with Dremio Reflections

AI applications often require real-time or near-real-time access to large volumes of data. Dremio’s Reflections feature optimizes query performance by maintaining precomputed, physically optimized copies of data that the query planner can substitute automatically, significantly speeding up analytics. Benefits include:

⚡ Faster queries for AI-driven decision-making

⚡ Reduced cloud compute costs by minimizing redundant queries

⚡ Optimized performance across federated datasets

By accelerating AI-driven queries, Reflections help LangChain-powered agents query large datasets with much lower latency, so the data layer is less likely to become the bottleneck in an interactive conversation.
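The idea behind this kind of acceleration can be shown with a small, purely conceptual sketch: compute an aggregate once, then answer repeated questions from the precomputed result instead of rescanning raw rows. This is only an analogy in plain Python, not how Dremio implements Reflections, and the sample rows are made up for illustration.

```python
from collections import defaultdict

# Made-up raw rows: (portfolio_id, trade_amount)
RAW_TRADES = [("1001", 250.0), ("1002", 100.0), ("1001", 50.0), ("1003", 75.0)]


def scan_total(rows, portfolio_id):
    """Answer a query by scanning every raw row (no acceleration)."""
    return sum(amount for pid, amount in rows if pid == portfolio_id)


def build_aggregate(rows):
    """Precompute the total per portfolio once, like a materialized aggregate."""
    totals = defaultdict(float)
    for pid, amount in rows:
        totals[pid] += amount
    return dict(totals)


AGGREGATE = build_aggregate(RAW_TRADES)


def accelerated_total(portfolio_id):
    """Answer the same query with a single lookup in the precomputed result."""
    return AGGREGATE.get(portfolio_id, 0.0)


if __name__ == "__main__":
    print(scan_total(RAW_TRADES, "1001"), accelerated_total("1001"))
```

Both functions return the same answer; the difference is that the second does no work proportional to the size of the raw data at query time.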

4. Apache Iceberg & Lakehouse Integration

Dremio is built for lakehouse architectures, providing native support for Apache Iceberg, an advanced table format designed for:

✔ ACID transactions ensuring data consistency

✔ Schema evolution without data migration

✔ Partition pruning & metadata indexing for faster queries

With Dremio, AI models can seamlessly integrate Iceberg tables with other structured and semi-structured datasets, unlocking the power of unified, transactional data for machine learning and AI applications.

This means that AI developers can fetch large datasets efficiently and process them using LangChain, with minimal effort.

The Basics of LangChain and Working with LangChain

Now that we’ve established how Dremio enables seamless data access and integration, let’s turn our attention to LangChain, a powerful framework for building AI-driven applications powered by Large Language Models (LLMs).

LangChain provides a structured way to develop AI agents that can reason, retrieve data, and interact dynamically with various tools—including databases, APIs, and structured data sources like Dremio. By combining LangChain’s AI capabilities with Dremio’s data access layer, we can create AI agents that query structured data, analyze results, and provide intelligent insights.

What is LangChain?

LangChain is a Python library designed to simplify the development of applications using LLMs. It provides a modular way to connect LLMs with external tools, memory, and data retrieval mechanisms, making building AI assistants, chatbots, and data-driven AI agents easier.

LangChain is built on four core components:

1️⃣ Models – The LLM that powers the AI agent (e.g., OpenAI's GPT-4, Anthropic's Claude).

2️⃣ Memory – A mechanism for tracking past interactions to create context-aware conversations.

3️⃣ Tools – Functions that extend AI capabilities, such as querying databases or calling APIs.

4️⃣ Agents – AI-driven decision-makers that use tools dynamically based on user input.

Using LangChain, we can build AI agents that query Dremio, retrieve structured data, and respond to user questions with data-backed insights.
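Before using the real library, it may help to see how the four components fit together. The sketch below is a toy, standard-library-only stand-in: `choose_tool` plays the role of the model (a keyword match instead of an LLM), a list plays the role of memory, and a dictionary holds the tools. None of these names are LangChain APIs; they are hypothetical and exist only to show the control flow.

```python
from typing import Callable, Dict, List, Optional, Tuple

# A tool here is (trigger keyword, function); real agents use an LLM to choose.
Tool = Tuple[str, Callable[[str], str]]


def list_accounts(_question: str) -> str:
    """Toy tool returning made-up data."""
    return "Financial Accounts: 101 Checking, 102 Savings"


TOOLS: Dict[str, Tool] = {"list_accounts": ("accounts", list_accounts)}


def choose_tool(question: str, tools: Dict[str, Tool]) -> Optional[str]:
    """Stand-in for the model: pick the first tool whose keyword appears."""
    lowered = question.lower()
    for name, (keyword, _func) in tools.items():
        if keyword in lowered:
            return name
    return None


def run_agent(question: str, tools: Dict[str, Tool],
              memory: List[Tuple[str, str]]) -> str:
    """Agent loop: record the question, pick a tool, run it, record the answer."""
    memory.append(("user", question))
    name = choose_tool(question, tools)
    if name is None:
        answer = "I don't have a tool for that question."
    else:
        answer = tools[name][1](question)
    memory.append(("assistant", answer))
    return answer


if __name__ == "__main__":
    history: List[Tuple[str, str]] = []
    print(run_agent("Show me all accounts", TOOLS, history))
```

In LangChain, the keyword match is replaced by an LLM that reads each tool's description, which is why clear tool descriptions matter in the steps that follow.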

Setting Up LangChain and Connecting to Dremio

Before we can build an AI-powered agent, we need to set up our environment and establish a connection to Dremio using the dremio-simple-query library.

Step 1: Install Dependencies

First, install the required Python packages:

    pip install langchain langchain-openai langchain-community dremio-simple-query pandas polars duckdb flask python-dotenv

Step 2: Configure Environment Variables

Create a .env file to store your API keys and connection details securely:

    DREMIO_TOKEN=your_dremio_PAT_token_here
    DREMIO_ARROW_ENDPOINT=grpc://your_dremio_instance:32010
    OPENAI_API_KEY=your_openai_api_key_here
    SECRET_KEY=your_flask_secret_key
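A missing or misspelled variable tends to surface later as a confusing connection error, so it can be worth checking the configuration up front. The following is a minimal sketch using only the standard library; `missing_settings` and `looks_like_flight_endpoint` are hypothetical helpers, and the accepted URL schemes (`grpc://` and `grpc+tls://`) are an assumption about how your endpoint is exposed.

```python
import os

# The four variables defined in the .env file above.
REQUIRED = ("DREMIO_TOKEN", "DREMIO_ARROW_ENDPOINT", "OPENAI_API_KEY", "SECRET_KEY")


def missing_settings(env=None):
    """Return the names of required variables that are unset or empty."""
    env = os.environ if env is None else env
    return [name for name in REQUIRED if not env.get(name)]


def looks_like_flight_endpoint(endpoint):
    """Check that the endpoint uses a gRPC scheme (assumed: grpc or grpc+tls)."""
    return endpoint.startswith(("grpc://", "grpc+tls://"))


if __name__ == "__main__":
    missing = missing_settings()
    if missing:
        print("Missing settings:", ", ".join(missing))
```

Running this check right after `load_dotenv()` gives a clear message before any network call is attempted.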

Step 3: Establish a Connection to Dremio

Use the dremio-simple-query library to authenticate and connect to Dremio’s Arrow Flight API:

    from dremio_simple_query.connect import DremioConnection
    from dotenv import load_dotenv
    import os

    # Load environment variables
    load_dotenv()

    # Get Dremio credentials
    TOKEN = os.getenv("DREMIO_TOKEN")
    ARROW_ENDPOINT = os.getenv("DREMIO_ARROW_ENDPOINT")

    # Establish connection
    dremio = DremioConnection(TOKEN, ARROW_ENDPOINT)

    # Test query
    df = dremio.toPandas("SELECT * FROM my_iceberg_table LIMIT 5;")
    print(df)

If your credentials are correct, this will return sample data from an Apache Iceberg table in Dremio.

Building AI Agents with LangChain

With our Dremio connection established, we can now build a LangChain-powered AI agent that:

✅ Queries structured data from Dremio

✅ Understands user questions and provides contextual answers

✅ Uses memory to track conversations

Step 4: Define LangChain Tools for Querying Dremio

LangChain Tools allow us to extend an AI agent’s functionality by enabling structured data retrieval from Dremio’s semantic layer.

Here’s an example of a tool that fetches a list of financial accounts from a dataset:

    from langchain.agents import Tool

    # Function to fetch financial account data
    def get_financial_accounts(_input=None):
        query = "SELECT account_id, account_name FROM finance.accounts;"
        df = dremio.toPandas(query)
        if not df.empty:
            return f"Financial Accounts:\n{df.to_string(index=False)}"
        return "No accounts found."

    # Define LangChain Tool
    get_financial_accounts_tool = Tool(
        name="get_financial_accounts",
        func=get_financial_accounts,
        description="Retrieves a list of financial accounts and their IDs."
    )
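If a query fails (for example, because a dataset path is wrong or the connection drops), the exception raised inside a tool function would typically propagate up through the agent call. One option is to wrap tool functions so that failures come back as a short message the agent can relay. The sketch below shows this under that assumption; `make_safe_tool` is a hypothetical helper, and `failing_query` only simulates a failure.

```python
import functools


def make_safe_tool(func):
    """Wrap a tool function so exceptions become a readable error string."""
    @functools.wraps(func)
    def wrapper(tool_input=None):
        try:
            return func(tool_input)
        except Exception as exc:  # broad on purpose: any failure becomes text
            return f"Query failed: {exc}"
    return wrapper


@make_safe_tool
def failing_query(_input=None):
    """Simulated tool that always fails, standing in for a broken query."""
    raise RuntimeError("dataset 'finance.accounts' not found")


@make_safe_tool
def working_query(_input=None):
    """Simulated tool that succeeds."""
    return "Financial Accounts: (sample output)"


if __name__ == "__main__":
    print(failing_query())
    print(working_query())
```

The wrapped function could be passed as `func=` when defining a tool, in place of the raw function. Whether to hide or surface the underlying error text is a design choice that depends on who the end users are.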

Step 5: Create an AI Agent with Memory

LangChain Agents allow AI models to reason dynamically and decide when to use tools.

    from langchain.memory import ConversationBufferMemory
    from langchain_openai import ChatOpenAI
    from langchain.agents import initialize_agent

    # Initialize memory for tracking conversations
    memory = ConversationBufferMemory(memory_key="chat_history", return_messages=True)

    # Create AI Model (using OpenAI GPT)
    chat_model = ChatOpenAI(model_name="gpt-4o", openai_api_key=os.getenv("OPENAI_API_KEY"))

    # Initialize AI Agent
    tools = [get_financial_accounts_tool]

    agent = initialize_agent(
        tools,
        chat_model,
        agent="chat-conversational-react-description",
        memory=memory,
        verbose=True
    )

Step 6: Interacting with the AI Agent

Now, we can interact with the AI-powered financial assistant:

    response = agent.run({"input": "Show me all available financial accounts."})
    print(response)

This will prompt the AI agent to:

1️⃣ Use the get_financial_accounts_tool to fetch structured data from Dremio.

2️⃣ Retrieve the financial account list from the Iceberg table.

3️⃣ Format and return the response to the user in natural language.

Now that we have the foundation for querying structured data from Dremio using LangChain, we will:

📌 Build an AI-driven finance assistant that retrieves portfolio performance and investment insights.

📌 Develop a healthcare AI tool that helps doctors query patient records and medical history using AI-powered natural language queries.

These practical examples will showcase how Dremio’s powerful data engine, combined with LangChain’s AI capabilities, enables businesses to build scalable, intelligent AI assistants.

Walkthrough: AI-Powered Finance Assistant

Financial analysts, wealth managers, and investors constantly analyze market trends, portfolio performance, and risk factors to make informed decisions. However, accessing and querying financial data across various data sources—data lakes, databases, and spreadsheets—can be complex and time-consuming.

With Dremio and LangChain, we can build an AI-powered finance assistant that enables users to:

✅ Query portfolio performance and risk assessments in real time.

✅ Retrieve historical financial data from structured sources.

✅ Interact with financial data using natural language.

This section will walk through the steps to build a finance AI assistant using Dremio’s semantic layer and LangChain’s agent-based framework.

Step 1: Define the Use Case

For this demo, our AI-powered assistant will be able to:

- Retrieve a list of portfolios managed by an investment firm.

- Fetch performance metrics for a given portfolio.

- Provide a risk assessment summary based on historical data.

To accomplish this, we will define three LangChain tools that fetch relevant data from Dremio.

Step 2: Connect to Dremio and Retrieve Financial Data

As demonstrated earlier, we first establish a Dremio connection using dremio-simple-query:

    from dremio_simple_query.connect import DremioConnection
    from dotenv import load_dotenv
    import os

    # Load environment variables
    load_dotenv()

    # Retrieve Dremio credentials
    TOKEN = os.getenv("DREMIO_TOKEN")
    ARROW_ENDPOINT = os.getenv("DREMIO_ARROW_ENDPOINT")

    # Establish connection
    dremio = DremioConnection(TOKEN, ARROW_ENDPOINT)

With this connection, we can query structured financial data from Dremio.

Step 3: Define LangChain Tools for Financial Queries

Tool 1: Retrieve a List of Investment Portfolios

We first define a LangChain Tool that fetches a list of available portfolios.

    from langchain.agents import Tool

    # Function to fetch investment portfolios
    def get_portfolio_list(_input=None):
        query = "SELECT portfolio_id, portfolio_name FROM finance.portfolios;"
        df = dremio.toPandas(query)
        if not df.empty:
            return f"Investment Portfolios:\n{df.to_string(index=False)}"
        return "No portfolios found."

    # Define LangChain Tool
    get_portfolio_list_tool = Tool(
        name="get_portfolio_list",
        func=get_portfolio_list,
        description="Retrieves a list of investment portfolios and their IDs."
    )

Tool 2: Retrieve Portfolio Performance Metrics

Next, we define a tool that fetches performance metrics for a specific portfolio.

    # Function to fetch portfolio performance
    def get_portfolio_performance(portfolio_id: str):
        query = f"""
        SELECT portfolio_name, annual_return, volatility, sharpe_ratio
        FROM finance.performance
        WHERE portfolio_id = '{portfolio_id}';
        """
        df = dremio.toPandas(query)
        if not df.empty:
            return df.to_string(index=False)
        return "No performance data found for this portfolio."

    # Define LangChain Tool
    get_portfolio_performance_tool = Tool(
        name="get_portfolio_performance",
        func=get_portfolio_performance,
        description="Retrieves financial performance metrics for a given portfolio."
    )

Tool 3: Retrieve Portfolio Risk Assessment

Finally, we create a tool that assesses portfolio risk based on historical data.

    # Function to fetch risk assessment
    def get_portfolio_risk(portfolio_id: str):
        query = f"""
        SELECT portfolio_name, risk_level, max_drawdown, beta, var_95
        FROM finance.risk_assessment
        WHERE portfolio_id = '{portfolio_id}';
        """
        df = dremio.toPandas(query)
        if not df.empty:
            return df.to_string(index=False)
        return "No risk assessment data found for this portfolio."

    # Define LangChain Tool
    get_portfolio_risk_tool = Tool(
        name="get_portfolio_risk",
        func=get_portfolio_risk,
        description="Retrieves the risk assessment for a given portfolio."
    )

Step 4: Create the AI Agent

Now that we have three financial tools, we define a LangChain-powered AI agent that dynamically queries this data.

    from langchain.memory import ConversationBufferMemory
    from langchain_openai import ChatOpenAI
    from langchain.agents import initialize_agent

    # Initialize memory for tracking conversations
    memory = ConversationBufferMemory(memory_key="chat_history", return_messages=True)

    # Define the AI model (using OpenAI GPT)
    chat_model = ChatOpenAI(model_name="gpt-4o", openai_api_key=os.getenv("OPENAI_API_KEY"))

    # Initialize AI Agent with financial tools
    tools = [get_portfolio_list_tool, get_portfolio_performance_tool, get_portfolio_risk_tool]

    agent = initialize_agent(
        tools,
        chat_model,
        agent="chat-conversational-react-description",
        memory=memory,
        verbose=True
    )

Step 5: Interacting with the AI-Powered Finance Assistant

Now, we can ask the AI assistant financial questions:

    response1 = agent.run({"input": "Show me a list of available investment portfolios."})
    print(response1)

    response2 = agent.run({"input": "What is the performance of portfolio ID 1001?"})
    print(response2)

    response3 = agent.run({"input": "Give me a risk assessment for portfolio ID 1001."})
    print(response3)

Example AI Responses

Query: "Show me a list of available investment portfolios."

    Investment Portfolios:
    portfolio_id  portfolio_name
    ------------  --------------------
    1001          Growth Fund
    1002          Dividend Income Fund
    1003          Global Equity Fund

Query: "What is the performance of portfolio ID 1001?"

    portfolio_name  annual_return  volatility  sharpe_ratio
    --------------  -------------  ----------  ------------
    Growth Fund     12.5%          8.3%        1.45

Query: "Give me a risk assessment for portfolio ID 1001."

    portfolio_name  risk_level  max_drawdown  beta  var_95
    --------------  ----------  ------------  ----  ------
    Growth Fund     Moderate    -15.2%        1.1   -6.5%
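One caveat about the performance and risk tools in Step 3: they place the portfolio ID directly into the SQL text with an f-string, and that ID is produced by the language model from free-form user input. A minimal sketch of validating the value before building the query is shown below. The allowed character set and length limit are assumptions; adjust them to match your real ID format. `clean_id` and `performance_query` are hypothetical helpers.

```python
import re

# Assumed ID format: letters, digits, underscore, or hyphen, up to 64 characters.
_ID_PATTERN = re.compile(r"[A-Za-z0-9_-]{1,64}")


def clean_id(raw):
    """Trim whitespace and surrounding quotes, then reject anything unexpected."""
    candidate = str(raw).strip().strip("'\"")
    if not _ID_PATTERN.fullmatch(candidate):
        raise ValueError(f"Invalid ID: {raw!r}")
    return candidate


def performance_query(portfolio_id):
    """Build the performance query only after the ID has been validated."""
    safe_id = clean_id(portfolio_id)
    return (
        "SELECT portfolio_name, annual_return, volatility, sharpe_ratio "
        "FROM finance.performance "
        f"WHERE portfolio_id = '{safe_id}'"
    )


if __name__ == "__main__":
    print(performance_query(" 1001 "))
```

With this in place, an input such as `1001' OR '1'='1` raises `ValueError` instead of reaching the database. Running the agent under a Dremio user with read-only access to just the needed datasets is a sensible second layer.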

Step 6: Deploying the AI Agent as a Flask Web App

For a user-friendly experience, we can deploy our AI-powered finance assistant as a Flask-based chatbot where financial analysts can interact with the AI via a web interface.
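The route in this step renders a template named index.html, which is not shown in this post. As a minimal sketch, the script below writes one possible version to a `templates` folder, which is where Flask looks for templates by default. The markup is an assumption; the only parts that must match the route are the `question` form field and the `response` variable. `write_template` is a hypothetical helper.

```python
from pathlib import Path

# Minimal Jinja template: a form that posts "question" and a block for "response".
INDEX_HTML = """<!doctype html>
<html>
  <head><title>AI Assistant</title></head>
  <body>
    <form method="post">
      <input type="text" name="question" placeholder="Ask a question" required>
      <button type="submit">Ask</button>
    </form>
    {% if response %}
      <pre>{{ response }}</pre>
    {% endif %}
  </body>
</html>
"""


def write_template(base_dir="."):
    """Create <base_dir>/templates/index.html and return its path."""
    template_dir = Path(base_dir) / "templates"
    template_dir.mkdir(parents=True, exist_ok=True)
    path = template_dir / "index.html"
    path.write_text(INDEX_HTML, encoding="utf-8")
    return path
```

Calling `write_template()` once from the folder that contains the Flask app creates the file. The `<pre>` element preserves the column alignment of the tabular output returned by the tools.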

    from flask import Flask, request, render_template, session

    app = Flask(__name__)
    app.secret_key = os.getenv("SECRET_KEY")

    @app.route("/", methods=["GET", "POST"])
    def index():
        response = None
        if request.method == "POST":
            user_question = request.form["question"]
            agent_inputs = {"input": user_question}
            response = agent.run(agent_inputs)
        return render_template("index.html", response=response)

    if __name__ == "__main__":
        app.run(debug=True)

Conclusion & Next Steps

✅ We successfully built an AI-powered finance assistant that integrates Dremio, LangChain, and Iceberg.

✅ We retrieved financial data dynamically using structured queries.

✅ We enabled natural language interactions with financial insights.

Next, we’ll apply the same principles to build an AI-powered healthcare assistant that queries patient records, diagnostics, and treatment history.

Walkthrough: AI-Powered Healthcare Assistant

In the healthcare industry, doctors, nurses, and administrators need quick access to patient records, diagnostics, and treatment histories to make informed medical decisions. However, data is often fragmented across electronic health records (EHR), insurance claims, and lab systems—making it difficult to consolidate and query efficiently.

By combining Dremio’s unified data access with LangChain’s AI capabilities, we can build an AI-powered healthcare assistant that enables:

✅ Quick retrieval of patient records across different data sources.

✅ Natural language queries for lab results and treatment histories.

✅ AI-powered insights into potential health risks based on historical data.

This section will walk through the steps to build a healthcare AI assistant using Dremio’s semantic layer and LangChain’s agent-based framework.

Step 1: Define the Use Case

Our AI-powered healthcare assistant will be able to:

- Retrieve patient details based on a patient ID.

- Fetch recent lab results for a given patient.

- Provide an overview of a patient’s medical history with diagnoses and treatments.

We will define three LangChain tools that fetch relevant healthcare data from Dremio to accomplish this.

Step 2: Connect to Dremio and Retrieve Healthcare Data

As before, we first establish a Dremio connection using dremio-simple-query:

    from dremio_simple_query.connect import DremioConnection
    from dotenv import load_dotenv
    import os

    # Load environment variables
    load_dotenv()

    # Retrieve Dremio credentials
    TOKEN = os.getenv("DREMIO_TOKEN")
    ARROW_ENDPOINT = os.getenv("DREMIO_ARROW_ENDPOINT")

    # Establish connection
    dremio = DremioConnection(TOKEN, ARROW_ENDPOINT)

This will allow our AI agent to query patient records from structured datasets in Dremio.

Step 3: Define LangChain Tools for Healthcare Queries

Tool 1: Retrieve Patient Details

We first define a LangChain Tool that fetches patient demographic details.

    from langchain.agents import Tool

    # Function to fetch patient details
    def get_patient_details(patient_id: str):
        query = f"""
        SELECT patient_id, full_name, date_of_birth, gender, primary_physician
        FROM healthcare.patients
        WHERE patient_id = '{patient_id}';
        """
        df = dremio.toPandas(query)
        if not df.empty:
            return df.to_string(index=False)
        return "No patient record found."

    # Define LangChain Tool
    get_patient_details_tool = Tool(
        name="get_patient_details",
        func=get_patient_details,
        description="Retrieves basic demographic details for a patient given their patient ID."
    )

Tool 2: Retrieve Lab Test Results

Next, we define a tool that fetches recent lab test results for a given patient.

    # Function to fetch lab test results
    def get_lab_results(patient_id: str):
        query = f"""
        SELECT test_name, result_value, result_unit, test_date
        FROM healthcare.lab_results
        WHERE patient_id = '{patient_id}'
        ORDER BY test_date DESC
        LIMIT 5;
        """
        df = dremio.toPandas(query)
        if not df.empty:
            return df.to_string(index=False)
        return "No lab results found for this patient."

    # Define LangChain Tool
    get_lab_results_tool = Tool(
        name="get_lab_results",
        func=get_lab_results,
        description="Retrieves the latest lab test results for a patient given their patient ID."
    )

Tool 3: Retrieve Medical History & Treatments

Finally, we create a tool that retrieves the patient’s medical history, including diagnoses and treatments.

    # Function to fetch medical history
    def get_medical_history(patient_id: str):
        query = f"""
        SELECT diagnosis, treatment, treatment_date, prescribing_doctor
        FROM healthcare.medical_history
        WHERE patient_id = '{patient_id}'
        ORDER BY treatment_date DESC;
        """
        df = dremio.toPandas(query)
        if not df.empty:
            return df.to_string(index=False)
        return "No medical history found for this patient."

    # Define LangChain Tool
    get_medical_history_tool = Tool(
        name="get_medical_history",
        func=get_medical_history,
        description="Retrieves a patient's medical history and treatments given their patient ID."
    )

Step 4: Create the AI Agent

Now that we have three healthcare tools, we define a LangChain-powered AI agent that dynamically queries this data.

    from langchain.memory import ConversationBufferMemory
    from langchain_openai import ChatOpenAI
    from langchain.agents import initialize_agent

    # Initialize memory for tracking conversations
    memory = ConversationBufferMemory(memory_key="chat_history", return_messages=True)

    # Define the AI model (using OpenAI GPT)
    chat_model = ChatOpenAI(model_name="gpt-4o", openai_api_key=os.getenv("OPENAI_API_KEY"))

    # Initialize AI Agent with healthcare tools
    tools = [get_patient_details_tool, get_lab_results_tool, get_medical_history_tool]

    agent = initialize_agent(
        tools,
        chat_model,
        agent="chat-conversational-react-description",
        memory=memory,
        verbose=True
    )

Step 5: Interacting with the AI-Powered Healthcare Assistant

Now, we can ask the AI assistant healthcare-related questions:

    response1 = agent.run({"input": "Show me the details for patient ID 5678."})
    print(response1)

    response2 = agent.run({"input": "What are the most recent lab results for patient ID 5678?"})
    print(response2)

    response3 = agent.run({"input": "Show me the medical history for patient ID 5678."})
    print(response3)

Example AI Responses

Query: "Show me the details for patient ID 5678."

    patient_id  full_name  date_of_birth  gender  primary_physician
    ----------  ---------  -------------  ------  -----------------
    5678        Jane Doe   1985-06-15     Female  Dr. Smith

Query: "What are the most recent lab results for patient ID 5678?"

    test_name       result_value  result_unit  test_date
    --------------  ------------  -----------  ----------
    Glucose Level   98            mg/dL        2024-01-10
    Cholesterol     180           mg/dL        2024-01-05
    Hemoglobin A1c  5.2           %            2023-12-20

Query: "Show me the medical history for patient ID 5678."

    diagnosis        treatment              treatment_date  prescribing_doctor
    ---------------  ---------------------  --------------  ------------------
    Hypertension     Lisinopril 10mg daily  2024-01-08      Dr. Smith
    Diabetes Type 2  Metformin 500mg daily  2023-12-15      Dr. Lee
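The medical history query in Step 3 has no LIMIT clause, so a patient with a long record could produce a very large tool response, which then has to fit into the model's context. One way to guard against that is to cap the text a tool returns. The sketch below is a minimal version; `truncate_for_llm` is a hypothetical helper and the default limits are arbitrary assumptions.

```python
def truncate_for_llm(text, max_lines=20, max_chars=2000):
    """Keep at most max_lines lines and max_chars characters of tool output."""
    lines = text.splitlines()
    head = "\n".join(lines[:max_lines])
    truncated = len(lines) > max_lines or len(head) > max_chars
    head = head[:max_chars]
    if truncated:
        # Tell the model (and the user) that rows were left out.
        head += "\n[output truncated]"
    return head


if __name__ == "__main__":
    sample = "\n".join(f"row {i}" for i in range(50))
    print(truncate_for_llm(sample, max_lines=5))
```

A tool function could pass its `df.to_string(index=False)` result through this helper before returning. Adding a LIMIT to the SQL itself is the other obvious option and avoids transferring rows that will be discarded.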

Step 6: Deploying the AI Assistant as a Flask Web App

For a user-friendly experience, we can deploy our AI-powered healthcare assistant as a Flask-based chatbot where healthcare professionals can query patient records using a web interface.
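One thing to be aware of in the app for this step: it imports `session` but never uses it, and the module-level agent shares a single conversation memory across every visitor. With patient data, that means one user's questions and answers could end up in another user's conversational context. The sketch below shows the general idea of keeping history per session using only the standard library. `SessionHistories` is a hypothetical class, and wiring it to Flask's `session` and to a per-user LangChain memory is left as an assumption.

```python
import uuid


class SessionHistories:
    """Keep a separate, bounded conversation history for each session ID."""

    def __init__(self, max_turns=20):
        if max_turns < 1:
            raise ValueError("max_turns must be at least 1")
        self._histories = {}
        self._max_turns = max_turns

    def new_session_id(self):
        """Generate a random ID, e.g. to store in a signed session cookie."""
        return uuid.uuid4().hex

    def append(self, session_id, role, text):
        """Add one message and drop the oldest ones beyond max_turns."""
        history = self._histories.setdefault(session_id, [])
        history.append((role, text))
        del history[:-self._max_turns]

    def get(self, session_id):
        """Return a copy of the history for this session (empty if unknown)."""
        return list(self._histories.get(session_id, []))


if __name__ == "__main__":
    store = SessionHistories(max_turns=2)
    sid = store.new_session_id()
    store.append(sid, "user", "Show me the details for patient ID 5678.")
    print(store.get(sid))
```

In a real deployment the same separation would apply to the agent's memory object, for example by creating one memory per session ID rather than one per process.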

    from flask import Flask, request, render_template, session

    app = Flask(__name__)
    app.secret_key = os.getenv("SECRET_KEY")

    @app.route("/", methods=["GET", "POST"])
    def index():
        response = None
        if request.method == "POST":
            user_question = request.form["question"]
            agent_inputs = {"input": user_question}
            response = agent.run(agent_inputs)
        return render_template("index.html", response=response)

    if __name__ == "__main__":
        app.run(debug=True)

Conclusion & Next Steps

✅ We successfully built an AI-powered healthcare assistant that integrates Dremio, LangChain, and Iceberg.

✅ We retrieved patient records dynamically using structured queries.

✅ We enabled natural language interactions with medical history and lab data.

Next, we’ll summarize the benefits of using Dremio for AI-driven applications and discuss why it’s the ideal data platform for AI workloads.

Conclusion: The Power of Dremio for AI-Driven Applications

We explored how Dremio, LangChain, and Apache Iceberg can be integrated to build AI-powered assistants that interact with structured enterprise data. By leveraging Dremio’s ability to unify data across sources, LangChain’s agent-based AI capabilities, and Iceberg’s scalable table format, organizations can create intelligent, data-driven applications with minimal overhead.

Key Takeaways

✅ Dremio provides a powerful data unification layer, allowing AI applications to access structured data across databases, data lakes, and warehouses—all via SQL.

✅ Reflections enable accelerated query performance, reducing response times and optimizing AI interactions with large datasets.

✅ Apache Iceberg ensures consistency and reliability, making AI models more accurate by providing a transactional, versioned dataset foundation.

✅ LangChain’s AI agents allow for dynamic decision-making, enabling users to query and analyze structured data using natural language.

✅ AI-powered assistants can be built for various industries, including:

- Finance: Automating portfolio analysis, risk assessment, and investment insights.

- Healthcare: Providing AI-driven access to patient records, lab results, and treatment histories.

Why Dremio is the Ideal Data Platform for AI Workloads

Dremio removes the barriers to integrating AI with enterprise data by offering:

🚀 A semantic layer that defines AI-ready datasets, simplifying structured data consumption.

🚀 Native Iceberg and data lake support, ensuring real-time, scalable analytics.

🚀 Federation across multiple data sources, reducing the need for complex ETL pipelines.

🚀 Fast query execution with Reflections, making AI responses more interactive and efficient.

By using Dremio as the backbone for AI applications, businesses can unlock new levels of efficiency, automation, and insight generation—without the high costs of traditional data warehouses.

Next Steps: Build Your Own AI-Powered Data Assistant

Ready to build your own AI-powered assistant? 🚀 Here’s how to get started:

1️⃣ Set up Dremio: Connect your databases, data lakes, and Iceberg tables.

2️⃣ Install LangChain & dremio-simple-query: Use Python to interact with structured data.

3️⃣ Define AI tools for your use case: Create LangChain agents for your industry.

4️⃣ Deploy as a chatbot or API: Use Flask, FastAPI, or Streamlit to bring your AI agent to life.
