48 minute read · March 20, 2018

Building an Analytics Stack on AWS with Dremio

Webinar Transcript

Justin Bock: Welcome to another session in Bock Corp.’s strategic partner series. My name is Justin Bock, I’m the CEO and founder of Bock Corp., and we’re really pleased to have attendees from all over the world today for our session with Dremio. Speaking of the session, we’re really excited that Dremio have come on board again as part of our series. Today’s topic is “Building an Analytics Stack on AWS, Without Going Crazy.” Both Bock Corp. and Dremio are start-ups going through rapid growth phases, so if you like what you see, please, please share it with your peers, and particularly with management.

Now the agenda. I’ve already mostly touched on what we’re going through today, and I’ve done the welcome. It’s great to have Kelly Stirman back with us from Dremio, being the CMO and VP of Strategy, and a particularly busy man, because I know it’s absolutely event time in the U.S. at this point in time.

Just before we hand over to Kelly, a little on Bock Corp. and who we are. We’re incorporated in Melbourne, and now cover the whole of the Australia and New Zealand region. We have a broad range of best-of-breed big data, advanced analytics, and BI software, and people with related skillsets. That’s why we’ve just set up a new contractor marketplace for all forms of staff augmentation around data-centric roles, for example, data scientists and data engineers. We’re keeping it really simple: we’re essentially matching customer requirements with industry experts in our region. We’ve also got a lab, which is proving to be really successful. What we’ve done is set up an agile lab so you can prove out new technologies, such as Dremio, or challenges within weeks, using our resources or your own. Product services aside, we pride ourselves on building relationships built on trust and professionalism.

I’m now going to hand you over to Kelly. Just briefly on Kelly.
Kelly works closely with customers, partners, and the open source community to articulate how Dremio is quickly becoming the world’s most popular BI tool. For over 15 years, he’s worked at the forefront of analytical technologies. Prior to Dremio, Kelly worked at MongoDB, Hadapt, and MarkLogic in executive and leadership roles. On Dremio, as I pass over to you, Kelly, congratulations on the Series B funding announced in January. A nice little $25 million boost, so well done.

Kelly Stirman: Thanks, Justin.

Justin Bock: Now I’m going to pass control over to you. Welcome, from California.

Kelly Stirman: Okay, thank you so much, and hello to everyone. I’m not sure you can see the screen here. All right, I have a couple of slides to talk through, and then we’ll move to look at the product itself and explore the idea of using Dremio on AWS, with a data lake and some other services. Let me sort of set the stage first. Well, this is a little big. Justin, are we able to make this fit the screen? If not, I can just jump over to my slides. It’s no big deal.

Kelly Stirman: Okay, so just a little bit about us. Our founders and the group of people in leadership positions at Dremio come from a mix of big data, distributed systems, and open source backgrounds, and include individuals who have been working with large Fortune 1000 companies to build next-generation infrastructure around data analytics. We have terrific investors in Lightspeed, Redpoint, and Norwest, with about $40 million in VC investment so far. There are a couple of things that are maybe worth following up on after the webinar, for those of you attending. One is a project called Apache Arrow. That’s a project that we started, and you can think of it as the in-memory counterpart to file formats like Parquet and ORC.
It’s a project we’ve worked on very closely with Wes McKinney, the inventor of Pandas, which is a foundational library in the world of Python that almost everyone uses to marshal data into Python environments from different sources. Arrow is a really interesting, important part of the overall story. It will not be the focus of this webinar, and it’s probably something we’ll talk about in depth in the future, but if you’re interested in cutting-edge in-memory analytics, Apache Arrow is a really interesting project that, like I said, we lead and have used extensively in our products.

Let’s talk about what’s going on with AWS. You know, the title of the webinar is “How to Build an Analytics Stack on AWS, Without Going Crazy,” the implication being that it’s easy to go crazy. Let me say a little bit about why I think that is.

I was a DBA in the nineties, and you know, we built data warehouses and data marts. We had many different sources that we brought together. We used ETL tools, and we used BI products like Cognos and MicroStrategy and Business Objects. That model of building an enterprise data warehouse is still something that virtually every company on the planet has done; they have one or more data warehouses that they manage. For about the past decade, as companies have built their businesses in the cloud, and as AWS has become popular, as well as Azure and Google and other cloud providers, the options have started to change. Companies have embraced, to varying degrees, new paradigms for managing their analytics. Most companies now are either in the midst of or considering a replatforming exercise. That replatforming is typically either into a data lake that’s maybe built on Hadoop, for example, or into one or more of the cloud services available from platform vendors like AWS.

This is a topic that has been unfolding for a number of years. I don’t think the story is complete or finished for anyone, but it is front and center and a very large initiative, right, because companies manage enormous amounts of data, and analytics and making sense of data is core to almost every company today.
This is a picture from one of Amazon’s annual user conferences, which is called re:Invent, where each year they announce new products. If you haven’t been to one of these things, it’s absolutely incredible how large it is now, how vibrant, how it sells out, standing room only in lots of the talks. But the point I want to make here is that when you go to this conference, you are overwhelmed with the number of new services and offerings available from AWS. If you think about what AWS is doing, it’s every layer of the technology stack. They are reinventing everything as a new cloud service, from storage to compute to networking, security, application servers, databases, et cetera, et cetera, et cetera.

I don’t know the exact number of services that Amazon has to offer right now, but I think they are either at or past 100 different services. If you are an executive at a large organization, or even a medium-sized organization, and you’re replatforming to AWS, it can be a little overwhelming, because you need to figure out how to navigate this incredible diversity of services that Amazon offers. By the way, they don’t offer just one service for each thing you do in IT, they offer multiple services. It’s not obvious in many cases what the right one is for the particular problem that you’re trying to solve. If you think about Amazon’s core philosophy, it’s one of choice. The core user they think about is a software engineer.

One of the philosophies of UNIX has always been, you know, multiple tools, each tool highly specific and optimized for a specific task, and then you glue tools together to build a solution. The same ideology exists in AWS: there are lots of parts, and you assemble the parts to solve the problem that you need to solve for your particular needs. While that’s incredibly flexible, it’s not always easy for companies to make sense of this new world on AWS.
That applies especially, I think, to analytics.

Here, if you go to AWS and look at their product menu, which I encourage everyone to do, I don’t know if I can even fit the whole thing on one screen, but this is a taste of what AWS has to offer across, like I said, basically every area of IT. If we think about analytics, let’s just sort of zero in on what matters. You’ve got products in storage, you’ve got products in databases, and you’ve got products in analytics. Of course you need to think about other areas of this menu to actually build a solution, but these are the three areas we’re going to focus on today. You can see that for each of these, there are many options. Let’s think about how to make sense of some of those options on AWS.

What I’m basically going to do is double-click on each of those areas and try to give you a little bit of a sense of how to navigate that. Back to the idea of replatforming from a traditional enterprise data warehouse, and whatever you’re doing in analytics, to the services that Amazon has to offer that are relevant to this space: here are the key ones that I think you need to be aware of. You know, I didn’t intend to make this an exercise in trying to make sense of the AWS icon family, but I will tell you, I don’t know that those icons really tell me much about what each service provides, because even the colors don’t always make sense.

In the top you’ve got the databases that you should be aware of. Then under that, you’ve got the file systems, different places you can store data, just sort of raw data. Then you have the ability to do analysis with EMR and Athena, and then some data movement and transformation, which are the two bottom icons. Let’s look at each of these areas in more detail.

First of all, let’s look at file systems, because for many people that are replatforming to AWS, that’s really the first step: how do I land my data in the cloud before I start to use it in any of the services? One of the reasons why that’s such a good and safe first step for companies is that the options, especially S3, are incredibly cost-effective.

The three to really be aware of are S3, EBS (the Amazon Elastic Block Store), and EFS. The way to think about this is, you know, S3 is for storing objects, essentially with unlimited capacity. None of us that are on this call are really ever going to exceed the capacity of S3. One of the things about S3 is it’s not just a place to store files, it’s also a place to access those files. There’s probably an enormous number of websites that you visit on the web every day whose HTML files and JavaScript files and CSS files are simply files sitting on S3, with a web server sitting in front of them.

S3 is good in terms of performance, it’s very cost-effective, as I said, and it’s also something you can access from multiple processes and multiple services on Amazon. You can put one copy of your files in one place, and then access it from many different types of processes. It’s the most fault-tolerant of the options on AWS, so you can tolerate up to two availability zone failures and not really impact the accessibility of the data.
One consequence of that is there are some eventual consistency issues with S3, but that’s not a topic for this webinar.

EBS is something that you use for database-style workloads, or latency-sensitive workloads, where you can attach capacity to a single EC2 instance. It’s something that you can access from one instance at a time. You couldn’t put your data in one EBS volume and have it accessed from multiple servers. It’s only for one at a time. It’s the best performance option on AWS, so for workloads where latency is a real concern, like I said, for database workloads, EBS is the best option. It’s also the most expensive. Of course, the performance and cost depend on the IOPS that you choose when you pick EBS. It doesn’t tolerate any availability zone failure. In that sense, it may feel similar to a high-performance SAN or NAS: if your data center goes down, that storage is down.

Then there’s the newest of these three; the first two have been around for many years. The newest is EFS, which is somewhere in between. It allows you to access files from many different environments in AWS. It can tolerate one availability zone failure. Its cost is fairly high compared to EBS, but you can access one copy of the data from multiple servers. Those are some of the ways to think about this. S3, like I said, you can access through HTTP from any computer on the internet, whereas with EBS you can only access that data from one of the EC2 instances. EFS has options for both.

That’s how to think about the file system options on AWS, in terms of your needs for performance, for availability by different protocols, and for the ability to tolerate availability zone failures. This sort of breakdown in a table should help you make some sense of your options and which are best to choose.
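As a rough illustration of the breakdown described above, here is a minimal Python sketch that encodes the three storage options and filters them by requirement. The values are transcribed from what is said in the talk, not from AWS documentation, and the names `StorageOption`, `OPTIONS`, and `candidates` are hypothetical, introduced only for this example.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class StorageOption:
    name: str
    az_failures_tolerated: int  # availability zone failures, as stated in the talk
    shared_access: bool         # can one copy be read from many servers?


# Figures as described by the speaker; treat them as assumptions, not AWS guarantees.
OPTIONS = [
    StorageOption("S3", az_failures_tolerated=2, shared_access=True),
    StorageOption("EBS", az_failures_tolerated=0, shared_access=False),
    StorageOption("EFS", az_failures_tolerated=1, shared_access=True),
]


def candidates(min_az_failures: int, need_shared_access: bool) -> List[str]:
    """Return the names of options that satisfy both requirements."""
    return [
        option.name
        for option in OPTIONS
        if option.az_failures_tolerated >= min_az_failures
        and (option.shared_access or not need_shared_access)
    ]


if __name__ == "__main__":
    # Which options survive one zone failure and can be shared across servers?
    print(candidates(min_az_failures=1, need_shared_access=True))
```

Performance and cost are left out on purpose, since the talk only ranks them qualitatively and they depend on choices such as provisioned IOPS.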
Whichever of the three you choose, and typically it’s going to be S3 for most people, it’s a great way to start the journey: just land your raw data before you begin to use some of the more expensive services in AWS to do your analytics.

Okay, so let’s take a look at databases, because sometimes you have very specific workloads that require a database. So for example, if you need to do SQL queries, S3 doesn’t give you that kind of an interface. S3 just lets you open up a file and close a file and add a file and delete a file, the kinds of things you have on a file system. In terms of databases, Amazon has the whole RDS family, so if you want to run Oracle or SQL Server or MySQL or Postgres on Amazon, that is part of their Relational Database Service. They also have a product called Aurora, which is a modified high-performance version of MySQL. They also have it available as Postgres. It is proprietary to Amazon, but it has a lot of great, nice features, and Amazon would love you to move off of RDS onto their Aurora family of products.

That’s for kind of traditional OLTP relational workloads. The main API is SQL, so it’s essentially the same thing as a relational database that you’ve lived with and loved for decades, but available on Amazon’s cloud. It is not a service that you pay for by the drink, like some of the Amazon services.
For RDS, you provision the server, you provision the capacity, and you decide when to scale. It’s easy to do when you decide to do that, but it’s really still infrastructure where you have some management overhead.

Redshift is Amazon’s relational product for data warehouses. This is a column-oriented, MPP data warehouse technology based on Postgres and a product called ParAccel that they licensed a number of years ago. It’s very, very efficient for analytical workloads. It is not tuned or optimized for transactional workloads. Just as you might have SQL Server and Oracle for your transactional workloads and something like Teradata or Vertica for your data warehouse, the same kind of duality exists on AWS.

DynamoDB is the AWS NoSQL product, so this is a key-value store that has a proprietary interface. This is a fully managed service. You pay for exactly how much of DynamoDB you use. You don’t worry about scaling it. It’s a very interesting product that has its role in sort of the tool belt of options you have.

Then Amazon recently, actually at the last re:Invent, announced a new graph database called Neptune. This is not generally available yet, but this is for analytics of a graph nature, so you can think of social networks, for example. If you’re unfamiliar with graph databases, it’s a specialized type of database, and it’s very interesting. The interface here is not SQL. It’s based on something called Gremlin. Another interface is SPARQL.

All of these have, you know, roughly equivalent costs at a very high level, especially relative to something like S3. Like I said, Aurora and Redshift are things you need to manage, whereas DynamoDB and Neptune are fully managed services. You can run your BI tools on Aurora and Redshift, but you can’t connect Tableau, for example, to DynamoDB and Neptune. These have proprietary interfaces.

Let’s look in terms of analytics. You’ve got all your data, and let’s say you land it in S3.
Now you need to take that raw data and transform it or enrich it or query it with SQL. Well, Amazon has their version of Hadoop, which is called Elastic MapReduce (EMR), which gives you all of the Hadoop tools at your disposal. Typically people use EMR with the data being in S3. You can use EBS if you like, but most people are using S3. You’re going to do the kind of ETL or batch processing, complex analytics, and a certain amount of machine learning using EMR. This does not give you, you know, the subsecond response times for your queries that are suitable for BI workloads, for example. It’s the lowest cost of the three options here, but you basically provision nodes for as much capacity as you need, and you pay for that in node seconds plus the underlying EC2 costs.
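The billing model described here (a per-node EMR charge on top of the underlying EC2 charge, metered by the second) can be sketched as simple arithmetic. This is only an illustration under stated assumptions: the function name `emr_cluster_cost` is hypothetical, and the hourly rates in the usage example are made-up placeholders, not real AWS prices.

```python
def emr_cluster_cost(
    nodes: int,
    seconds: float,
    emr_rate_per_node_hour: float,
    ec2_rate_per_node_hour: float,
) -> float:
    """Estimate cluster cost as node-time multiplied by the combined hourly rate.

    Both rates are inputs supplied by the caller; no real prices are built in.
    """
    hours = seconds / 3600
    return nodes * hours * (emr_rate_per_node_hour + ec2_rate_per_node_hour)


if __name__ == "__main__":
    # Placeholder rates for illustration only: 10 nodes running for 2 hours.
    cost = emr_cluster_cost(
        nodes=10,
        seconds=2 * 3600,
        emr_rate_per_node_hour=0.05,
        ec2_rate_per_node_hour=0.20,
    )
    print(f"Estimated cost: ${cost:.2f}")
```

The point of the sketch is that the cost scales with how many nodes you provision and how long they run, not with how much data a given query touches.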

If all your data is in S3, Amazon last year announced a product called Athena, which is based on Presto. That gives you SQL access to the raw data in S3. It could be JSON, it could be Parquet, it could be Avro. There are many different file formats that can exist on S3 that are all equally queryable via SQL, which is great, because that means you can use all your existing SQL-based tools. The latency on Athena is very much proportional to the amount of data that you’re querying. It’s not necessarily suitable for most BI workloads, but it is good for batch reporting and ad hoc analysis, especially of kind of more raw data.

I would categorize it as sort of medium latency overall. Then the cost of course is higher than just storing your data in S3, because not only do you pay for the data storage on S3, but every time you scan the data with a query, you pay.
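To make the pay-per-scan point concrete, here is a small Python sketch of how repeated queries accumulate cost. The per-terabyte price is an assumed illustrative figure, and `ASSUMED_PRICE_PER_TB_USD` and `scan_cost` are hypothetical names; check the current Athena pricing for your region before relying on any number.

```python
# Assumed illustrative price per terabyte scanned; not taken from the talk.
ASSUMED_PRICE_PER_TB_USD = 5.0


def scan_cost(
    tb_scanned_per_query: float,
    runs: int,
    price_per_tb: float = ASSUMED_PRICE_PER_TB_USD,
) -> float:
    """Total cost when every run of the query rescans the same amount of data."""
    return tb_scanned_per_query * runs * price_per_tb


if __name__ == "__main__":
    one_run = scan_cost(tb_scanned_per_query=10, runs=1)
    daily_for_a_month = scan_cost(tb_scanned_per_query=10, runs=30)
    print(f"One 10 TB scan: ${one_run:,.2f}")
    print(f"Same query run 30 times: ${daily_for_a_month:,.2f}")
```

Because nothing is cached between runs in this model, the cost grows linearly with the number of times the query is executed, which is why reducing the data scanned per query matters so much.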
If you do a scan of 10 terabytes of data to answer some question, you’re going to pay for that entire scan. If you run that query over and over again, you’re going to pay for scanning that 10 terabytes of data each time you do it. Those costs can really add up.

Redshift Spectrum is a relatively new offering that lets you basically go through the Redshift infrastructure that you’ve provisioned to query the data in S3. The idea here is, you start with your data in S3, and you use something like EMR to refine and transform and cleanse the data in the same way that you would think about doing that with ETL. That kind of curated, vetted data you would then put in Redshift for high-performance access from your BI tools. Once you’ve put that data in Redshift, what if you want to join it to some of the raw data that’s in S3, or maybe to some of the detailed data that’s in S3? Well, Redshift Spectrum lets you issue that query through your Redshift instances and reach over into S3 and perform your analysis on a mix of data in Redshift and S3.

I mean, that’s my best understanding of when you would use it and why you would use it instead of Athena. You get similar sorts of latency, because you’re scanning the S3 data. There are no indexes, and it’s not necessarily in a columnar or compressed format. That’s sort of a rundown of the different AWS analytics services. If you think about it, you’ve got Redshift on the last slide, and you’ve got Redshift Spectrum here. Well, as I said, Amazon wants you to put your refined, high-performance data in Redshift, and then they want you to think of your raw data in S3 as something you’re going to query with Athena on an ad hoc basis, or occasionally join to the Redshift data.

Last but not least, you’ve got to think about data movement, because while your data is in S3, there are potentially other systems you want to move the data to, and there are transformations you want to do in sort of typical ETL-type workloads.
Of course, Amazon has multiple choices here as well. Data Pipeline at a high level is more of an orchestration framework, where you can choose to write transformation in whatever language you like, and you actually, Data Pipeline spins up EC2 instances that you have low-level access to. Whereas AWS Glue is more of like a fully managed ETL service, where you can write your processing in Scala or Python. It runs on Spark in the background. You have a lot less control, but you have a lot less that you worry about, and it’s a more convenient option. Most of the time, you’re going to use Glue, but you might in some cases have some elaborate needs that require the use of Data Pipeline.To sort of summarize, I think about, you know, the file systems, database, and analytics services that relate to the world of analytics as being the things that we just covered. So files systems, S3, EFS, EBS. Databases are RDS, Redshift, and Dynamo DB. Analytics are EMR, Athena, and Redshift Spectrum. The costs vary, the interfaces vary, the latency expectations vary, and you should also think about your operational costs. What does it take you to manage each of these options on AWS? Because some of them are fully managed, and some of them still have things that you need to do. Depending on the workloads you’re trying to support, if it’s all just data science, then S3 may be all you need. But if you’re trying to support your traditional BI workloads with products like Tableau, then you have a more elaborate set of services that you need to put together to meet the needs of the business.Okay, so that is a lot of material, I realize. And again, I’m not a representative of AWS, I’m not an expert in these things. This is my sense based on taking to companies and based on my read of the offerings and use of some of these offerings on AWS. By the way, we’re focusing on AWS, but a very similar conversation with just different tools and services exist for Azure and for Google. 
They all have multiple options, specialized capabilities, and nontrivial exercise to make sense of them and pick the right tool for your needs as a company.One of the things you see as companies, you know, venture down this path of replatforming to the cloud is the BI users get left behind. Because the offerings are very enticing in their flexibility, in their costs, in their elasticity, but when it comes to actually meeting the needs of the traditional BI user, you sort of end up with a scenario where they’re very dependent on IT to make sense of these different services and to move the data into an environment where they can access it with the kind of latency expectations that they expect. What you want to avoid, I think what every company wants to avoid is that loss of self-service, because the idea of business users doing things for themselves is a great idea, and I think every company wants their data consumers to be more independent and self-directed and the real challenge of replatforming is that you lose that optionality in the process.

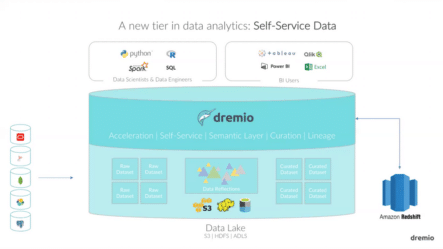

If all your data is in S3, Amazon last year announced a product called Athena, which is based on Presto. That gives you SQL access to the raw data in S3. It could be JSON, it could be Parquet, it could be Avro. There are many different file formats that can exist on S3 that are all equally queryable via SQL, which is great, because that means you can use all your existing SQL-based tools. The latency on Athena is very much proportional to the amount of data that you’re querying. It’s not necessarily suitable for most BI workloads, but it is good for batch reporting and ad hoc analysis, especially of more raw data.

I would categorize it as sort of medium latency overall. Then the cost of course is higher than just storing your data in S3, because not only do you pay for the data storage on S3, but every time you scan the data with a query, you pay. If you do a scan of 10 terabytes of data to answer some question, you’re going to pay for that entire scan. If you run that query over and over again, you’re going to pay for scanning that 10 terabytes of data each time you do it. Those costs can really add up.

Redshift Spectrum is a relatively new offering that lets you go through the Redshift infrastructure that you’ve provisioned to query the data in S3. The idea here is, you start with your data in S3, and you use something like EMR to refine, transform, and cleanse the data in the same way that you would think about doing that with ETL. That curated, vetted data you would then put in Redshift for high-performance access from your BI tools. Once you’ve put that data in Redshift, what if you want to join it to some of the raw data that’s in S3, or maybe some of the detailed data that’s in S3?
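As a brief aside on the Athena pay-per-scan point above, here is a minimal sketch of how repeated scans add up. The price of $5 per terabyte scanned is an assumption used for illustration (it is not stated in the talk and varies by region and over time), and the function name is hypothetical.

```python
# Assumed list price per terabyte scanned; check current pricing for your region.
PRICE_PER_TB_SCANNED_USD = 5.00


def athena_scan_cost(tb_scanned, runs=1, price_per_tb=PRICE_PER_TB_SCANNED_USD):
    """Return the total query charge for scanning `tb_scanned` terabytes `runs` times."""
    return tb_scanned * runs * price_per_tb


# One 10 TB scan, then the same query run once a day for 30 days.
single_scan = athena_scan_cost(10)
thirty_scans = athena_scan_cost(10, runs=30)

print(f"one scan: ${single_scan:,.2f}")    # one scan: $50.00
print(f"30 scans: ${thirty_scans:,.2f}")   # 30 scans: $1,500.00
```

Under that assumed price, the charge grows linearly with both the data scanned and the number of times the query is rerun, and storage is billed separately on top of it.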
Well, Redshift Spectrum lets you issue that query through your Redshift instances and reach over into S3 and perform your analysis on a mix of data in Redshift and S3.

That’s my best understanding of when you would use it and why you would use it instead of Athena. Similar sorts of latency, because you’re scanning the S3 data. There are no indexes, and it’s not necessarily in a columnar or compressed format. That’s sort of a rundown of the different AWS analytics services. If you think about it, you’ve got Redshift on the last slide, and you’ve got Redshift Spectrum here. Well, as I said, Amazon wants you to put your refined, high-performance data in Redshift, and then they want you to think of your raw data in S3 as something you’re going to query with Athena on an ad hoc basis, or occasionally join with your Redshift data.

Last but not least, you’ve got to think about data movement, because while your data is in S3, there are potentially other systems you want to move the data to, and there are transformations you want to do in typical ETL-type workloads. Of course, Amazon has multiple choices here as well. Data Pipeline at a high level is more of an orchestration framework, where you can choose to write transformations in whatever language you like, and Data Pipeline spins up EC2 instances that you have low-level access to. AWS Glue, by contrast, is more of a fully managed ETL service, where you can write your processing in Scala or Python. It runs on Spark in the background. You have a lot less control, but you have a lot less to worry about, and it’s a more convenient option. Most of the time, you’re going to use Glue, but you might in some cases have some elaborate needs that require the use of Data Pipeline.

To summarize, I think about the file systems, databases, and analytics services that relate to the world of analytics as being the things that we just covered.
So file systems: S3, EFS, EBS. Databases are RDS, Redshift, and DynamoDB. Analytics are EMR, Athena, and Redshift Spectrum. The costs vary, the interfaces vary, the latency expectations vary, and you should also think about your operational costs. What does it take you to manage each of these options on AWS? Because some of them are fully managed, and some of them still have things that you need to do. Depending on the workloads you’re trying to support, if it’s all just data science, then S3 may be all you need. But if you’re trying to support your traditional BI workloads with products like Tableau, then you have a more elaborate set of services that you need to put together to meet the needs of the business.

Okay, so that is a lot of material, I realize. And again, I’m not a representative of AWS, I’m not an expert in these things. This is my sense based on talking to companies and based on my read of the offerings and use of some of these offerings on AWS. By the way, we’re focusing on AWS, but a very similar conversation, just with different tools and services, exists for Azure and for Google. They all have multiple options and specialized capabilities, and it’s a nontrivial exercise to make sense of them and pick the right tool for your needs as a company.

One of the things you see as companies venture down this path of replatforming to the cloud is that the BI users get left behind. The offerings are very enticing in their flexibility, in their costs, in their elasticity, but when it comes to actually meeting the needs of the traditional BI user, you end up with a scenario where they’re very dependent on IT to make sense of these different services and to move the data into an environment where they can access it with the kind of latency that they expect.
What you want to avoid, I think what every company wants to avoid, is that loss of self-service, because the idea of business users doing things for themselves is a great idea, and I think every company wants their data consumers to be more independent and self-directed. The real challenge of replatforming is that you lose that optionality in the process. I think that’s part of why we put this talk together, and why Dremio is so compelling for companies: it gives you a way to take advantage of all that AWS has to offer, or any of these cloud vendors, without losing that self-service vision. Let me talk about the next step as you start to rationalize these different services in AWS and build a strategy for yourselves. This is the pattern I see over and over again: companies move their data from their different operational systems, whether those are in the cloud or on prem, into this data lake. Here I’m basically stepping back to say, this idea applies not just to S3, but it also applies to Hadoop and it applies to ADLS, which is the Azure Data Lake Store available now. Same story, whichever of these three journeys you’re going on.

Data gets moved in its raw form into the data lake. At this point, your data engineers and data scientists can still access the data, but your BI users cannot. What companies start to do is they say, “Hey, I’ve got data in this raw form. I need to curate it, I need to transform it, cleanse it, summarize it. I need to make sense of it and get it ready for analysis.” They start to look at a data prep tool for that. Then as they start to get the data into a better shape from its raw form, they say, “You know what? We don’t even know what we have.
We need to build an inventory of the different data assets that we have available and make sense of them and define and rationalize the semantics around that data in a way that is easy for the business user to make sense of.”

They look at data catalog tools. Then they’ve got data prep and data catalog and they’re figuring out how to use those tools together with their data lake, but their BI users are unable to access the data because it’s too slow. So they say, “You know what? We need a way to accelerate the data for our BI workloads.” Depending on the tool, you might say, “Well, I could do something like a Tableau extract.” That’s one approach, but that really only works for Tableau, and it’s something that creates its own set of challenges. There are specialized products that help you with BI workloads in your data lake, that follow the traditional paradigm of cubing that I remember building in the nineties.

Once you start to figure out a strategy for those aggregation queries that are so fundamental to BI workloads, you still have a need for the ad hoc queries, where a user wants to get all 10,000 records for a particular customer, or get down to the low-level detail that’s not in the cube. You find yourself saying, “You know what? I still need a data warehouse for that. I guess I’m going to move data from my data lake into Redshift, or I’m going to move it into an RDS instance.”

It’s at that point that your BI users start to have access at the speed that they need, but if you step back, you’ve created an environment where they have to decide, “Okay, am I going to connect to this BI acceleration technology, or to the ad hoc acceleration technology? Well, first of all, I need to go connect to that data catalog to find what I even have to work with, and then the data engineers and data scientists are connecting to the data prep tools and some of these other things.” I didn’t even finish drawing all the arrows, but it starts to be pretty complicated.
My point here is that it’s not self-service, and it’s not the experience that the BI user really wants, and it puts a lot of pressure on IT to keep all of this working and working together as one system.

This is really the background of how we started Dremio: there’s got to be a better way to do this. We said, “What would that be? What would the table stakes be for that?” Well, it would need to work with any data lake, because some companies are on Hadoop on prem, some are on Azure, some are on S3, and nobody wants to be locked into one proprietary option. Let’s build something that works for everybody and gives them flexibility to change the data lake down the road. We wanted something that would work with any BI or data science tool, because companies have those tools. They have their Tableau, they have their Python and R, and that’s not the issue. They like those tools. We want to support those and make those tools better.

This solution would need to handle the data acceleration, because interactive access to the data is so foundational to any data consumer’s workflow. If they have to wait minutes or hours to run a query, they can’t actually do their jobs. Dremio needs to solve that really hard data acceleration challenge. We think a product like this has to be self-service. It has to be something that the data consumers, the business users, can use themselves and can start to define and organize and manage their data assets on their own terms, without being dependent on IT. We think every user has their own sense of the data that they need for a particular job at hand. Traditionally, what that’s meant is IT has to make a copy for each person’s needs, and then you end up with thousands of copies of the data, and nobody wants that. We want Dremio to give end users the ability to get the data any way they want, exactly the way they want it for their particular job, but without making copies in the background.

It has to scale, right?
Companies have massive amounts of data, so this needs to scale to thousands of nodes. We think something like this has to be open source in 2018. At a really high level, one way to think about Dremio is as a new tier in data analytics that we call “self-service data,” that sits on your data lake and gives you access to all the raw data that’s in your data lake, and accelerates that data for interactive speed, no matter what the underlying format is, and no matter how big the datasets are. It lets end users have a self-service experience for discovering data through easy-to-use, Google-like search, and for organizing the data through their own semantic layer, where they can describe the datasets and share the datasets with one another, all through something that’s really easy to use in their browser. It gives them the ability to curate, transform, and blend different datasets without making copies. And then of course it tracks the lineage of these datasets as they’re used by any tool: whether that’s a data science environment like Python, R, or Spark, or any BI tool, Dremio tracks the use and lineage of the data through all those different workloads.

To the extent that you have some of your data in the data lake and some of it in a system like Amazon Redshift, Dremio gives you equal access to a source like Redshift, or even things like MongoDB and Elasticsearch, so you can join across those sources with data in your data lake as well. That’s at a really high level what Dremio is all about and what is so exciting to me and what brought me to the company. What I’d like to do now is jump over into the demonstration. By the way, if you have questions along the way, I hope that you will ask them in the Q&A feature in the webinar, and I will do my best to get to those at the end of the talk.

Let’s take a look. I’m logged in through my browser here to Dremio. One of the things you’ll notice here is that I’m connected as an administrator, so I can see the whole world, but this, just to give you a sense, is a four-node cluster running on AWS, where I’ve already connected up a number of different sources.
Maybe the only source that you connect Dremio to is your raw data in S3, but like I said earlier, you could connect to data in Redshift. Here I’ve also got some RDS in Oracle and Postgres. I’ve got a MongoDB cluster, I’ve got HDFS, and I’ve got Elasticsearch. The point is, you can connect to all these different things. These are the raw physical connections to these sources.

What I have above are what we call spaces. If you were a Tableau user and you logged in, you wouldn’t see these sources. You would see the spaces you have access to based on your LDAP permissions. You can think of a space as a project area for a group of users to share and work on things together. How they organize data and describe data and make it usable for their particular jobs happens in these spaces. Then finally, every individual has what we call a home space. That lets you do things like upload a spreadsheet and then join that to your enterprise sources without talking to IT. That’s a hugely powerful feature, because I think almost everyone is proficient in Excel, and sometimes you already have data in Excel, or you want to use Excel to do things that are very easy for you to do as an individual and you don’t want to have to involve IT. That’s a very nice feature.

Let’s take an example. Let’s say I’m a Tableau user, and I’ve been assigned to do some work on analyzing taxi rides in New York City. I’m going to work through a couple of different scenarios, the first one being a Tableau user. I don’t know where that data is on my data lake, I just know that this is a job I need to do, and if I have to ask IT where the data is, it may take several days to get that back by email, or I might be pointed at some outdated wiki, or who knows what will happen.
One of the nice things about Dremio is when you connect to these sources, we collect all the metadata from those sources, and we index it and make it searchable through a Google-like search interface.

I remember about this data that there’s a column for the Metropolitan Transportation Authority, MTA. So I can just go in here and do a search on MTA and get back a set of search results, and jump into a sample of this data by just clicking on one of those results. Here the idea is not that I’m going to do all my analysis in Dremio, but it’s how I’m going to begin that journey. Very quickly, I was able to find a candidate dataset and see if this is what I’m looking for. If I look here, each row corresponds to a taxi ride in New York City. You can see the pick-up and drop-off date times, the number of people that were in the taxi ride, and how long the taxi ride was in terms of miles. Actually, if I scroll over to the right, I have a breakdown of the fees. I have the total amount, the tolls, the tip, and the MTA tax. This is why this particular search matched on this dataset: MTA is in the name of one of the columns.

From here, there are two journeys that I could take. One is I could say, “Hey, this is exactly the data that I was looking for. I want to analyze it with Tableau.” Or I could say, “You know what? This isn’t quite the data I was looking for. I need to transform it or blend it or join it with another data source.” We’ll look at both of those scenarios, but let’s just start by saying, “Yeah, this is exactly what I want. I want to analyze it with my favorite tool.” That could be Tableau, it could be Power BI, it could be Qlik, it could be any tool that supports SQL. That could be Spotfire, Business Objects, MicroStrategy, Cognos. For a couple of these tools, we have the ability to launch the tool directly from Dremio.
So by clicking on this little file that gets downloaded, that sets up the ODBC connection from Tableau to Dremio.

Here I’m going to do a couple of quick things. First of all, let’s just see how much data I have to work with. You can see that this is a little over a billion rows of data. This is a billion rows of CSV files in my data lake. That query came back in about a second. Even though the data is nontrivial in size, I’m able to work with it at the speed of thought. It’s the kind of latency that I expect, and that I would get in something like a Tableau extract, but without going through all the hassle of building that extract. This performance that I get is the same whether I’m using Tableau or any of the other tools that support standard SQL. Every time I do one of these clicks, I’m issuing a new SQL query into Dremio. Dremio is running that query and returning results back very quickly. I could just do a couple of things here, just edit this color to be orange and blue, so it’s a little bit easier to see, and then I’ll change this from a sum to an average.

Very quickly I can see that the number of taxi rides, year over year, is roughly flat, somewhere between 150 and 175 million. But the amount people are paying per taxi ride is increasing, and the tip amount is going up as well. So part of what’s causing people to spend more on average per ride appears to be tipping. I can see tipping is increasing by a factor of almost 3X over this four or five year period. There are different reasons why that could be. Maybe people feel better about the economy after 2008. I can quickly look at seasonal variations by just looking at the months and see that in terms of tipping, it looks like it’s better to be a taxi driver in the winter months as long as it’s before January.
Because it looks like after the holidays, people stop tipping.

I can easily go into very low-level detail to look across all the week numbers across all five years and look for patterns. The point is, there are really no limitations here in terms of the features and functionality that I love in Tableau. The benefit is just that Dremio is making the data faster and easier to use for the Tableau user. If I go back over into Dremio, I’ve basically looked at one scenario, which is: I’m a Tableau user, and I was able to easily go in through my browser and search the catalog of different datasets I have to work with. I could find one and quickly visually inspect it, determine, “Hey, this is the data I’m looking for,” click a button to launch my favorite tool connected to that dataset, and then get really fast access to the data. I have a great experience in terms of my favorite tool and how I’m analyzing a billion rows of data.

Without Dremio, if I was using something like, I don’t know, Athena or Hive, each of those clicks would have taken five to 10 minutes per click in this environment that I’m working in. With Dremio, I’m able to get that down to subsecond. That’s the first scenario. The second scenario I want to take a look at is how I work with the data if it’s not quite in the shape that I need for the task at hand. I just want to very quickly go into my datasets and look at one of my spaces, which is this AWS webinar. In here, I’ve got a sandbox environment and a production environment. Here I have an HR dataset that is sitting as CSV files in S3. I could pretty easily say, “You know, let’s go look at employees.” I get a quick view of those employees and see I’ve got first name, last name, hire date, job ID, et cetera. Maybe I want to blend this with data about the departments that the employees work in.

I could join this to another dataset.
If I go in my webinar space and I go into the sandbox, I have the departments dataset. I can click “next” to build this join between these two datasets, so I can pick the join key, meaning department ID. That’s an inner join, and there are different join options here. Click “apply.” So now I’ve got this new, what we call a virtual dataset. I didn’t move any data, I’ve simply blended these two datasets together. I can save this in my production space and call this “employee salary two,” for example. Now I can go into my production folder in my AWS webinar space, look at that “employee salary two,” and start to work with that with Tableau.

Again, when I click on that file, it just launches Tableau connected over ODBC to this dataset. I didn’t build an extract, I didn’t move any data. I’m now going to look at the list of departments and see who gets paid the most. I can quickly see that it looks like sales is paid the most, no surprise to many of you on the webinar, I expect. Now if I go back and say, “You know what? I want to make better sense of the geographic breakdown, not just the departments, but where people are being paid the most.” I can go into here, and I could join this to another dataset. If I go down into S3 and I go down into my tutorials bucket and go into HR, I have data about the locations in JSON. I can click that, click “next” and join this based on location ID to the location ID in my JSON dataset. Click “apply,” and save this as “employee location” and put that in my production folder in my AWS webinar space.

If I go into here now, I see “employee location,” and I can connect to this with Tableau. Right? So again, writing no code, not moving any data around, with just a couple of clicks, I’m able to blend these different datasets together, log in with my LDAP credentials, and look at the state or province by salary. I very quickly see that people in Oxford are getting paid the most as a sum.
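The join-and-save workflow described above can be loosely illustrated with an ordinary SQL view. This is an analogy only, not Dremio's implementation: the sketch uses Python's built-in `sqlite3` module, and the table names, column names, view name, and sample rows are all made up for illustration.

```python
import sqlite3

# In-memory database standing in for the raw files; all rows are made up.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE employees (
    employee_id INTEGER, first_name TEXT, last_name TEXT,
    salary INTEGER, department_id INTEGER
);
CREATE TABLE departments (department_id INTEGER, department_name TEXT);

INSERT INTO employees VALUES
    (1, 'Ana', 'Lopez', 9000, 10),
    (2, 'Ben', 'Kim',   7000, 10),
    (3, 'Cy',  'Osei',  6000, 20);
INSERT INTO departments VALUES (10, 'Sales'), (20, 'IT');

-- The saved join is only a query definition; no rows are copied.
CREATE VIEW employee_salary AS
SELECT e.first_name, e.last_name, e.salary, d.department_name
FROM employees AS e
INNER JOIN departments AS d ON e.department_id = d.department_id;
""")


def salary_by_department(connection):
    """Total salary per department, highest first, read through the view."""
    return connection.execute(
        "SELECT department_name, SUM(salary) AS total_salary "
        "FROM employee_salary GROUP BY department_name "
        "ORDER BY total_salary DESC"
    ).fetchall()


before = salary_by_department(conn)

# A new row in the underlying table shows up through the view immediately,
# because the view is re-evaluated on every query.
conn.execute("INSERT INTO employees VALUES (4, 'Di', 'Roy', 5000, 20)")
after = salary_by_department(conn)

print(before)  # [('Sales', 16000), ('IT', 6000)]
print(after)   # [('Sales', 16000), ('IT', 11000)]
```

The point of the analogy is that the blended dataset is a stored definition over the source tables, so a BI tool querying it always sees current data and nothing has to be extracted or copied.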
If I change this to average, it looks like California is the lowest. This data makes no sense: people on average can’t be the least paid in California, especially compared to Bavaria. But this data is what it is.

That’s just a quick example of data that wasn’t already in a refined state. I was basically able to take the raw data in CSV or JSON, or it could be Parquet or Avro or ORC or even Excel spreadsheets, and quickly make it accessible to a business user to connect to with their favorite tool. It could be data in Redshift, it could be data in MongoDB. Like I said, it doesn’t really matter. We have a few minutes left in the webinar, and I wanted to stop there and go take a look at some questions.

While I do that, let me bring up this last slide. For those of you who are interested in taking a closer look at Dremio, we have a community edition that’s easy to download. You can try it out on your laptop, you can try it in your Hadoop cluster, you can try it on AWS or Azure. We also have an enterprise edition. You can fill out a form to ask us for more information about the enterprise edition. Documentation is all out there, along with a number of tutorials. We have a very vibrant community at community.dremio.com, where you can ask questions as you start to use the product.