Agentic AI refers to artificial intelligence systems that are designed to operate autonomously. With minimal human supervision, such a system can be expected to make decisions and perform tasks using specifically trained agents. This is thanks in large part to Large Language Models (LLMs), which provide agentic AI with enhanced reasoning and the ability to understand context.

In terms of Data Analytics, the typical ask of an AI agent is to review your organisation's data to identify patterns and generate insights, which it does by writing and optimising queries to explore, study, and refine your data. However, there are three key factors that can limit an agent's performance. No matter how powerful your AI model, or how smart your human analyst, to truly deliver powerful data insights they need:

- Data Access,

- Fast Query Execution, and

- Comprehensible Data Structures.

All three of these factors can be traced back to how your data is being stored and managed. The single solution to all three is the Data Lakehouse. Read on to hear how the Dremio Data Lakehouse can provide the centralised, performant, and governed data foundation for your Agentic AI systems.

Access

Dremio’s query federation allows your AI agents to connect to and query data from multiple sources across your entire organisation - including data lakes, databases, data warehouses, and even other lakehouse catalogs. By centralising data access through Dremio, AI systems can easily work with whatever data the user wants, from historical data for training and pattern recognition to operational data for business-critical decision making.

Data access remains secure and governed through Dremio’s Enterprise Data Catalog. Building on top of the powerful features provided by Apache Polaris (incubating), Dremio provides robust data security through Role-Based Access Control (RBAC) privileges as well as table-level data masking of rows and columns. Combined with a variety of authentication methods and comprehensive auditing capabilities, this lets you control with confidence what data your AI agent can access.
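
To make the idea of role-based masking concrete, here is a minimal Python sketch. It does not use Dremio's API or policy syntax; the role names, column names, and the `apply_masking` helper are all hypothetical and only illustrate how an agent's role might determine which values it sees in the clear.

```python
# Hypothetical policy: the columns each role may read unmasked.
# Role and column names are assumptions for illustration only.
UNMASKED_COLUMNS = {
    "analyst_agent": {"order_id", "order_total"},
    "finance_admin": {"order_id", "order_total", "card_number"},
}

MASK = "****"


def apply_masking(row, role):
    """Return a copy of the row with every column the role may not see masked."""
    allowed = UNMASKED_COLUMNS.get(role, set())  # unknown roles see nothing
    return {col: (val if col in allowed else MASK) for col, val in row.items()}


row = {"order_id": 1001, "order_total": 60, "card_number": "4111111111111111"}

agent_view = apply_masking(row, "analyst_agent")
admin_view = apply_masking(row, "finance_admin")
print(agent_view)
print(admin_view)
```

In this sketch the AI agent's role never receives the card number, while a privileged role does. In a real deployment the policy would be enforced by the catalog, not by the agent's own code.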

Speed

Providing an AI agent with all the data from across the organisation enables it to produce powerful insights and suggestions to users. However, all the data is a lot of data, and if your data infrastructure isn’t performant, your agent won’t be either. The quality of the insights won’t matter if they take hours or days to generate, as the data will have stagnated and your organisation will have moved on.

Dremio utilises several techniques to reduce query times and compute costs, to ensure that data queries remain highly performant and your data insights fresh:

- Apache Iceberg collects and stores a variety of statistics that enable the query engine to prune files and entire data partitions that do not match the query predicates - leading to more efficient data access and reduced I/O operations.

- With Iceberg Clustering, Dremio automatically reorganises data within partitions, sorts files for faster queries, compacts small files, and optimises metadata, all to ensure optimal query performance without compromising flexibility or fault tolerance.

- Dremio’s Reflections act as an Iceberg-based relational cache, transparently substituting optimised datasets for queries that would benefit from them. This is now a fully autonomous process: Dremio intelligently reviews your query history to define, create, and refresh these optimised datasets.

These features all combine to enable Dremio’s query engine to deliver the fastest performance on Iceberg tables available on the market - without manual tuning or specialised configuration.
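
The statistics-based pruning described in the first bullet can be illustrated with a small Python sketch. This is not Iceberg's or Dremio's implementation; the `DataFile` class, file names, and date ranges are hypothetical. It only shows the principle that per-file minimum and maximum values let an engine skip files that cannot match a predicate.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataFile:
    """Hypothetical stand-in for a data file entry with column statistics."""
    path: str
    min_order_date: str  # smallest order_date in the file (ISO format)
    max_order_date: str  # largest order_date in the file (ISO format)


def prune_files(files, lower, upper):
    """Keep only files whose [min, max] range overlaps the query range."""
    return [
        f for f in files
        if f.max_order_date >= lower and f.min_order_date <= upper
    ]


files = [
    DataFile("orders-2023-q4.parquet", "2023-10-01", "2023-12-31"),
    DataFile("orders-2024-q1.parquet", "2024-01-01", "2024-03-31"),
    DataFile("orders-2024-q2.parquet", "2024-04-01", "2024-06-30"),
]

# Predicate: order_date BETWEEN '2024-02-01' AND '2024-02-29'
to_scan = prune_files(files, "2024-02-01", "2024-02-29")
print([f.path for f in to_scan])  # only the Q1 file overlaps the range
```

Two of the three files are never opened, which is where the reduction in I/O comes from. ISO-formatted date strings are used so that plain string comparison orders them correctly.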

Understanding

Acronyms and abbreviations in attribute names are the bane of any data analyst. Context usually helps to decipher a column name, something LLMs are particularly adept at, but the clear identity of some columns can be lost to time. Is it a mixture of specific industry terminology? Or was this database created back when Fortran was in vogue? Or perhaps the abbreviations aren't English words at all? (These are all real-world scenarios I’ve had to puzzle through in my career…)

The Dremio semantic layer puts an end to these types of scenarios by providing a business-friendly interface for your enterprise data, translating complex technical data structures into understandable business terms. This standardised view of your data ensures that all users, both human and AI, are aligned on consistent definitions with no ambiguity in data comprehension.
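
As a rough illustration of what such a translation involves, the Python sketch below generates a generic SQL view definition that renames cryptic physical columns to business terms. The table, view, and column names are hypothetical, and the generated statement is plain SQL, not a claim about how Dremio's semantic layer is defined internally.

```python
# Hypothetical glossary mapping cryptic physical columns to business terms.
COLUMN_GLOSSARY = {
    "cust_no": "customer_id",
    "ord_dt": "order_date",
    "tot_amt_gbp": "order_total_gbp",
}


def build_view_sql(view_name, source_table, glossary):
    """Build a CREATE VIEW statement that exposes business-friendly names."""
    select_list = ",\n  ".join(
        f"{physical} AS {business}" for physical, business in glossary.items()
    )
    return f"CREATE VIEW {view_name} AS\nSELECT\n  {select_list}\nFROM {source_table}"


sql = build_view_sql("sales.orders", "raw.ord_hdr", COLUMN_GLOSSARY)
print(sql)
```

An agent querying the view sees `order_date` and never needs to guess what `ord_dt` means, which is the kind of ambiguity a semantic layer is meant to remove.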

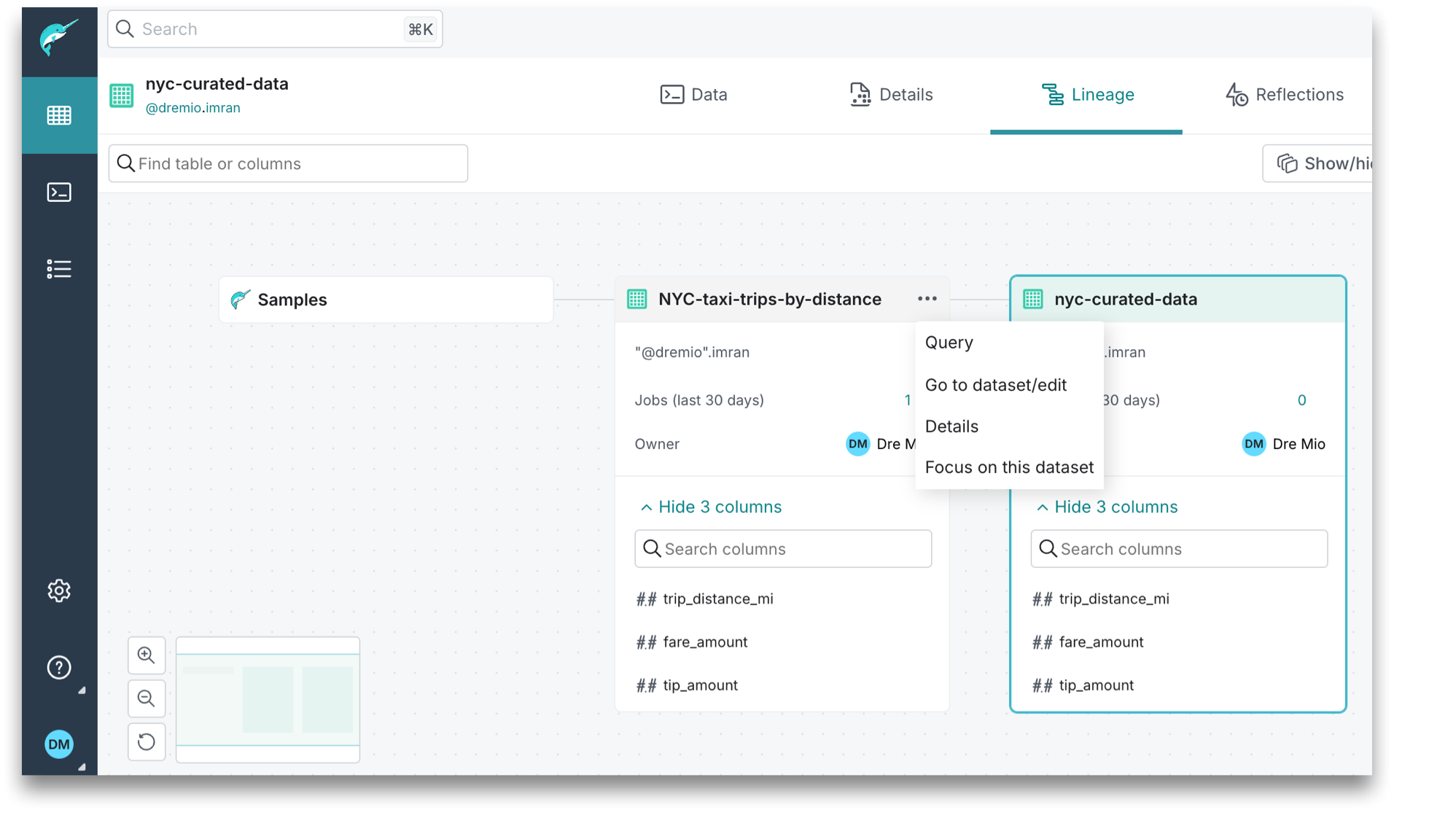

Dremio also tracks and displays the lineage of your datasets (Figure 1.0), making clear their relationships, hierarchies, and dependencies. This gives your AI agent confidence in the origin and usage of datasets and provides context for aggregations and transformations.

On the AI side of things, an agentic system needs to understand what functionality it has available and what each action is for. Dremio’s MCP server acts as a communication hub between Dremio's data platform and the AI agent's LLM, exposing data capabilities as discoverable “tools” that the LLM can understand and invoke. To aid this understanding and encourage efficient use, the tools are defined with clear names and an informative schema for the LLM to review, for example RunSqlQuery and GetSchemaOfTable.
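
A minimal Python sketch of what "clear names and an informative schema" can look like is shown below. The tool names come from the text above; the descriptions, the parameter names (`query`, `table_name`), and the `validate_call` helper are assumptions for illustration and are not taken from Dremio's MCP server. The descriptor shape (`name`, `description`, `inputSchema`) follows the general form of MCP tool listings.

```python
# Hypothetical tool descriptors. Only the tool names come from the article;
# descriptions and parameter names are assumed for illustration.
TOOLS = [
    {
        "name": "RunSqlQuery",
        "description": "Run a SQL query and return the result rows.",
        "inputSchema": {
            "type": "object",
            "properties": {"query": {"type": "string"}},
            "required": ["query"],
        },
    },
    {
        "name": "GetSchemaOfTable",
        "description": "Return the column names and types of a table.",
        "inputSchema": {
            "type": "object",
            "properties": {"table_name": {"type": "string"}},
            "required": ["table_name"],
        },
    },
]


def validate_call(tool_name, arguments):
    """Return a list of problems with a proposed tool call (empty if none)."""
    tool = next((t for t in TOOLS if t["name"] == tool_name), None)
    if tool is None:
        return [f"unknown tool: {tool_name}"]
    schema = tool["inputSchema"]
    problems = [
        f"missing argument: {name}"
        for name in schema["required"]
        if name not in arguments
    ]
    problems += [
        f"unexpected argument: {name}"
        for name in arguments
        if name not in schema["properties"]
    ]
    return problems


print([t["name"] for t in TOOLS])
print(validate_call("GetSchemaOfTable", {"table_name": "sales.orders"}))
print(validate_call("RunSqlQuery", {}))
```

Because each tool advertises its inputs up front, a malformed call can be caught before it reaches the data platform, and the LLM has enough information to choose the right tool for a given step.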

Summary

As agentic AI is becoming widely adopted across numerous industries, the infrastructure you choose today will determine whether your AI systems become transformative assets or expensive experiments. The Data Lakehouse provides definite solutions to three key blockers that can restrict the performance of even the most powerful AI models.

- Federated data access ensures that AI agents can traverse your entire organisational knowledge base, safely and securely, to provide holistic insights into your business, without being blinkered by partial data access or rigid data silos.

- The industry-leading performance of Apache Iceberg and Dremio's query engine transforms what's possible, enabling AI agents to process massive datasets in seconds rather than hours.

- The Semantic Layer acts as the cognitive bridge, turning your AI agent into a domain expert with a clear understanding of the business logic and relationships of your data. Backed by the Dremio MCP server, it empowers your AI agent to deliver on what you want to achieve.

With a properly architected data lakehouse you can deploy AI agents without the worries of fragmented data, sluggish queries, and AI hallucinations born from misunderstood metrics.