23 minute read · September 25, 2025

Using Dremio’s MCP Server with Agentic AI Frameworks

Head of DevRel, Dremio

The emergence of agentic AI, intelligent systems that can reason, plan, and act through connected tools, has fundamentally changed how engineers and organizations think about integrating data into intelligent workflows. These agents aren’t limited to answering questions. They can coordinate tasks, trigger business actions, and collaborate with other agents to execute complex processes.

Yet one major challenge remains: how do we give these agents secure, governed, and high-performance access to enterprise data?

This is where the Model Context Protocol (MCP) steps in. MCP is an open standard that provides a consistent way for AI models and agents to connect with external systems through structured, discoverable interfaces. By standardizing the conversation between AI and data platforms, MCP makes integrations portable, reusable, and secure across environments.

Dremio’s MCP Server extends this capability into the data lakehouse. Acting as a bridge between LLM-powered agents and enterprise datasets, it exposes governed data, semantic business definitions, and high-performance queries as agent-ready tools. Whether the goal is to run natural-language analytics against your lakehouse or to build multi-step workflows that use Dremio data for downstream automation, Dremio’s MCP Server enables a new level of agility and intelligence.

Understanding MCP and Dremio’s MCP Server

What is the Model Context Protocol (MCP)?

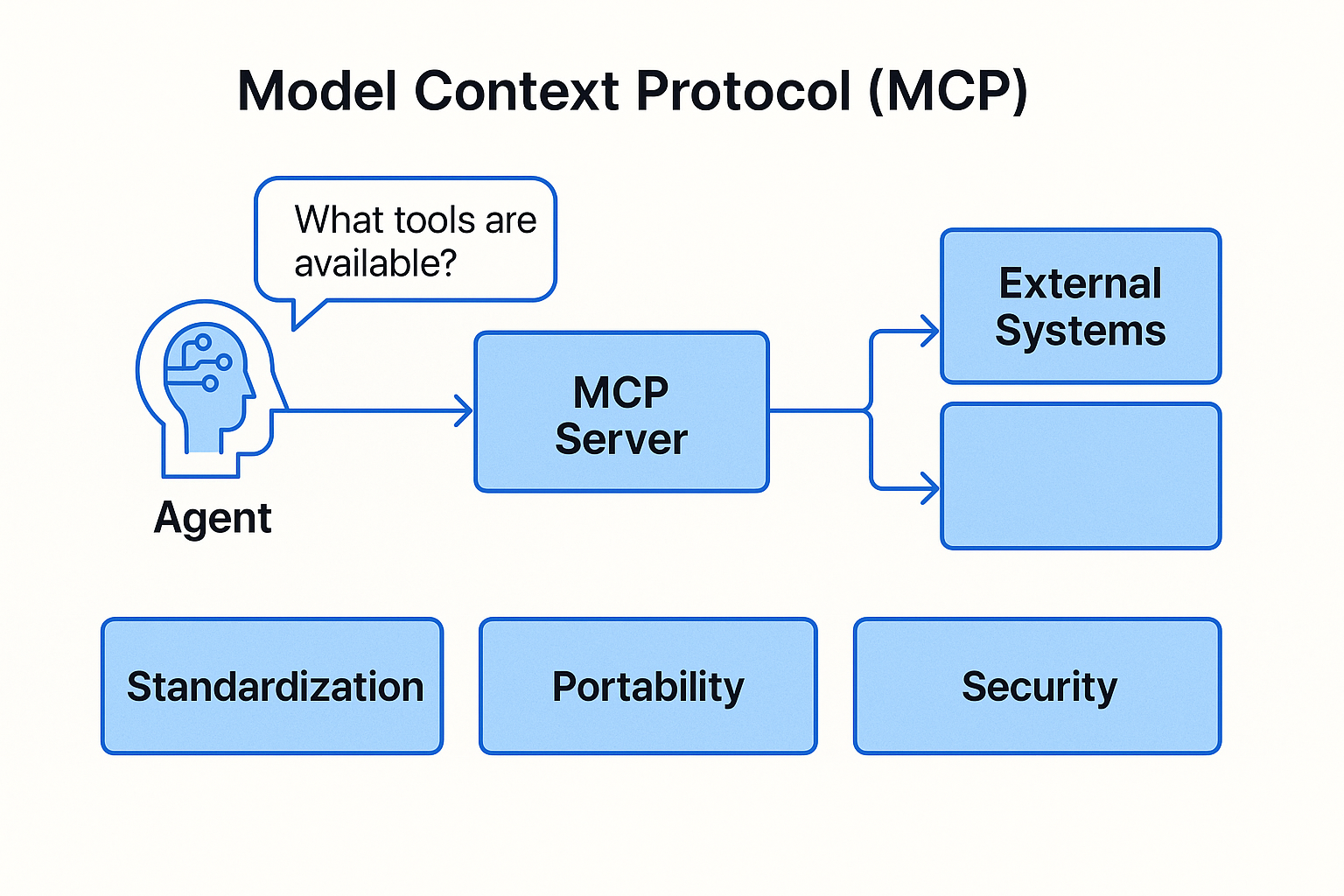

The Model Context Protocol (MCP) is an open standard that defines how AI models and agents connect with external systems. Traditionally, every new integration required building a custom connector. MCP replaces that with a standardized contract, so any compliant client can discover and invoke a server's tools in the same way.

With MCP, an agent can ask, “What tools are available?” and immediately receive a structured list of options. From there, it can call those tools, pass in arguments, and process the results, without needing custom glue code for each service.
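To make the discover-then-call exchange concrete, here is a minimal sketch of the two JSON-RPC 2.0 requests involved. The method names `tools/list` and `tools/call` come from the MCP specification; the tool name `run_sql_query` and its `query` argument are hypothetical placeholders, not confirmed Dremio tool names.

```python
import json
from itertools import count

_request_ids = count(1)  # each JSON-RPC request carries its own id


def jsonrpc_request(method, params=None):
    """Build a JSON-RPC 2.0 request envelope as a plain dict."""
    message = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


# Step 1: ask the server which tools it exposes.
list_request = jsonrpc_request("tools/list")

# Step 2: call one of the discovered tools with structured arguments.
# "run_sql_query" and "query" are illustrative names only.
call_request = jsonrpc_request(
    "tools/call",
    {"name": "run_sql_query", "arguments": {"query": "SELECT 1"}},
)

if __name__ == "__main__":
    print(json.dumps(list_request, indent=2))
    print(json.dumps(call_request, indent=2))
```

In a real client, an MCP SDK builds and sends these envelopes for you; the point here is only that every server speaks the same two-message pattern.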

At a high level, MCP delivers three main benefits:

- Standardization: Every integration follows the same JSON-RPC-based pattern, reducing complexity.

- Portability: An agent built to work with one MCP server can connect to another without rewriting its integration code.

- Security: Sensitive operations remain inside your infrastructure, while only approved functions are exposed to the agent.

Dremio’s MCP Server

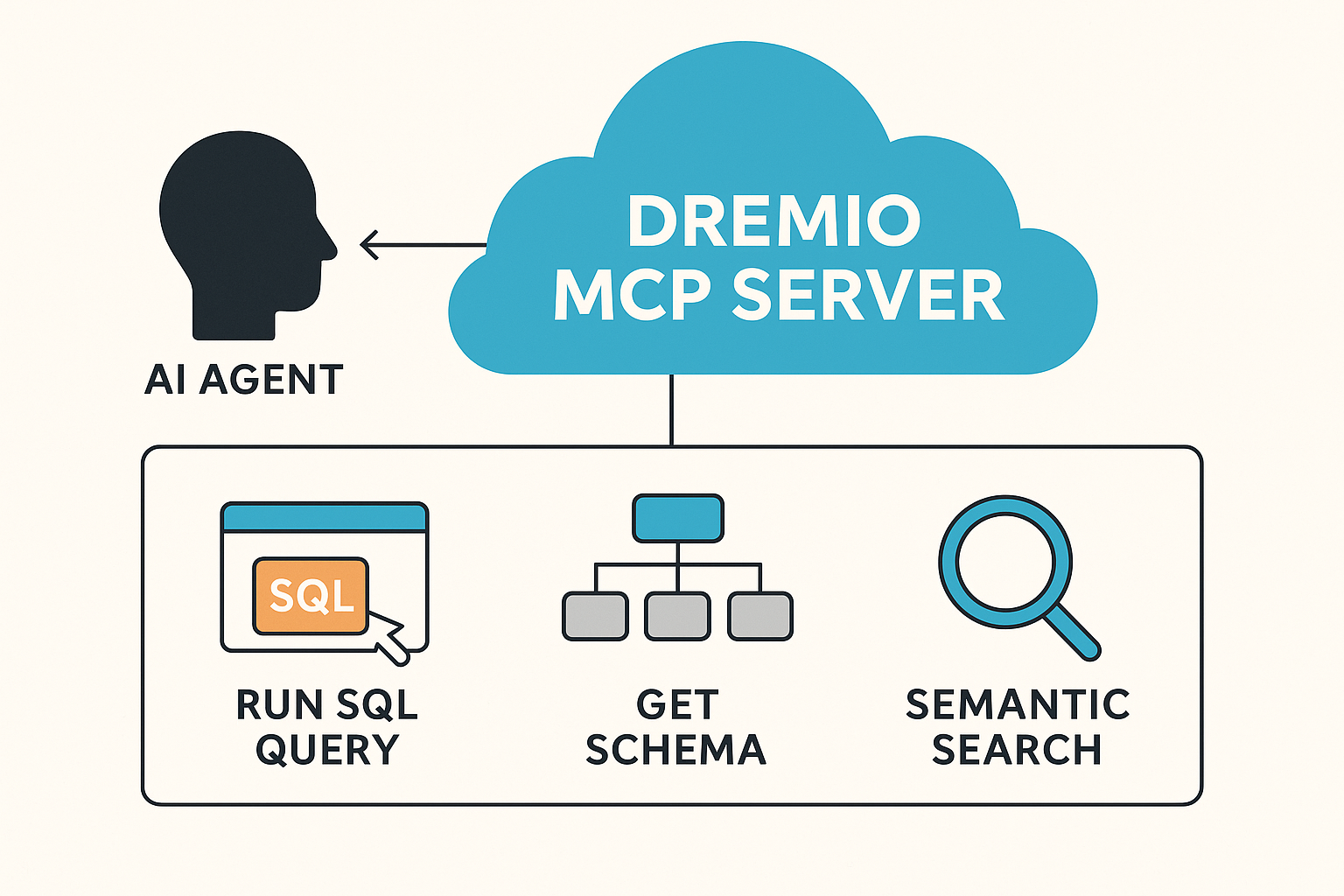

Dremio takes this open standard into the world of the data lakehouse. The Dremio MCP Server allows AI agents to query and understand enterprise data without requiring deep knowledge of SQL or internal schemas.

It provides a set of intuitive, analytics-ready tools, including:

- Run SQL Query – Execute governed queries against Dremio.

- Get Schema – Explore available tables, views, and columns.

- Semantic Search – Interact with data through business-friendly terms such as “monthly revenue” or “active customers,” rather than raw table names.

While these tools call Dremio’s APIs under the hood, the agent only sees them as discoverable, callable actions. This abstraction makes Dremio’s data platform feel like a natural extension of the agent’s capabilities.
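As a rough illustration of what "discoverable, callable actions" means, the sketch below models tool descriptors in the general shape MCP uses (a name, a description, and a JSON Schema under `inputSchema`) and checks a call's arguments against the required fields. The tool names and parameters are assumptions made for this example, not the Dremio MCP Server's actual catalog.

```python
# Hypothetical descriptors, shaped like entries in an MCP tools/list result.
TOOLS = [
    {
        "name": "run_sql_query",
        "description": "Execute a governed SQL query against Dremio.",
        "inputSchema": {
            "type": "object",
            "properties": {"query": {"type": "string"}},
            "required": ["query"],
        },
    },
    {
        "name": "get_schema",
        "description": "Describe the columns of a table or view.",
        "inputSchema": {
            "type": "object",
            "properties": {"table": {"type": "string"}},
            "required": ["table"],
        },
    },
]


def find_tool(tools, name):
    """Return the descriptor with the given name, or None if it is absent."""
    return next((tool for tool in tools if tool["name"] == name), None)


def missing_arguments(tool, arguments):
    """List required argument names that the caller did not supply."""
    required = tool["inputSchema"].get("required", [])
    return [key for key in required if key not in arguments]
```

An agent framework performs this kind of check (usually with full JSON Schema validation) before sending a call, which is how the model learns what each tool expects without any Dremio-specific code.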

Why This Matters

By combining MCP’s open standard with Dremio’s governed semantic layer, organizations gain a powerful new way to operationalize AI:

- Trustworthy data access: Agents rely on governed definitions instead of ad hoc queries.

- High performance: Dremio’s Reflections accelerate queries so that responses stay near real-time, even on very large datasets.

- Lower barriers to entry: Business users and AI systems alike can ask data questions without writing SQL.

In practice, Dremio’s MCP Server transforms enterprise data into a first-class citizen in the agentic AI ecosystem, enabling both conversational analytics and multi-step automated workflows.

Integrating LangChain with Dremio’s MCP Server

Why LangChain?

LangChain has become one of the most widely adopted frameworks for building agentic AI systems. Its flexible agent architecture and tool integration ecosystem make it a natural fit for connecting with MCP servers. With LangChain, you can create agents that reason about user prompts, decide which tool to use, and then call those tools automatically, all without hardcoding the logic.

Pairing LangChain with Dremio’s MCP Server brings governed enterprise data directly into these workflows. Instead of treating analytics as a silo, LangChain agents can access Dremio through standard MCP tools such as Run SQL Query or Semantic Search, turning natural-language questions into governed analytics.

Getting Started Conceptually

Setting up this integration involves a few straightforward steps:

- Install prerequisites: Python environment, LLM API keys, and (optionally) Docker if you’re running Dremio locally.

- Clone and configure: Download the Dremio MCP Server and set your Dremio connection details, including authentication.

- Run the server: Start the MCP server so that it registers available tools like Run SQL Query or Get Schema.

- Connect LangChain: Update your MCP client configuration in LangChain to recognize Dremio as a tool server.

- Chat with your data: Launch a LangChain agent (e.g., a ReAct agent) and begin issuing natural language prompts that get translated into MCP tool calls.
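The last two steps can be sketched in Python as follows. This assumes the `langchain-mcp-adapters` package (its `MultiServerMCPClient` class) and LangGraph's prebuilt `create_react_agent`, as found in recent releases; check the versions you have installed, since these APIs have changed over time. The server name `dremio`, the launch command, and the model identifier are placeholders, not values taken from Dremio's documentation.

```python
def build_connections(command, args, env=None):
    """Return a connection map with one stdio-launched MCP server named 'dremio'."""
    connection = {"transport": "stdio", "command": command, "args": list(args)}
    if env:
        connection["env"] = dict(env)
    return {"dremio": connection}


async def ask(connections, model, question):
    """Load the server's tools into a ReAct-style agent and ask one question."""
    # Imported lazily so build_connections works without these packages installed.
    from langchain_mcp_adapters.client import MultiServerMCPClient
    from langgraph.prebuilt import create_react_agent

    client = MultiServerMCPClient(connections)
    tools = await client.get_tools()          # MCP tools wrapped as LangChain tools
    agent = create_react_agent(model, tools)  # the agent decides when to call them
    result = await agent.ainvoke(
        {"messages": [{"role": "user", "content": question}]}
    )
    return result["messages"][-1].content


# Example usage (placeholders; requires an LLM API key and a runnable server):
#   import asyncio
#   connections = build_connections("<launch-command>", ["<launch-args>"])
#   print(asyncio.run(ask(connections, "<provider:model>", "List the tables I can query.")))
```

Keeping the connection map in its own function makes it easy to add more servers later without touching the agent code.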

How It Works in Practice

When you type a prompt like “Show me last quarter’s sales by region,” the LangChain agent evaluates your request, recognizes that it needs data, and selects the Run SQL Query tool exposed by the Dremio MCP Server. It then constructs a structured call, Dremio executes the query, and the results are returned to the agent for final presentation.

From the user’s perspective, there’s no SQL and no schema navigation, just conversational analytics. From the engineer’s perspective, the workflow is reliable, secure, and reusable thanks to MCP’s standardized protocol.
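The round trip described above can be simulated without a live server. In this sketch, a stand-in handler plays the role of Dremio's query engine and returns canned rows; the response uses the MCP result shape (a `content` list of typed items plus an `isError` flag). The tool name, the rows, and the SQL text are invented for illustration.

```python
import json


def fake_run_sql(arguments):
    """Stand-in for the real query engine; ignores the SQL and returns canned rows."""
    return [
        {"region": "EMEA", "sales": 1200},
        {"region": "APAC", "sales": 950},
    ]


HANDLERS = {"run_sql_query": fake_run_sql}  # hypothetical tool name


def handle_tool_call(request):
    """Answer a JSON-RPC tools/call request with an MCP-style response."""
    params = request["params"]
    handler = HANDLERS.get(params["name"])
    if handler is None:
        return {
            "jsonrpc": "2.0",
            "id": request["id"],
            "error": {"code": -32602, "message": f"Unknown tool: {params['name']}"},
        }
    rows = handler(params.get("arguments", {}))
    return {
        "jsonrpc": "2.0",
        "id": request["id"],
        "result": {
            "content": [{"type": "text", "text": json.dumps(rows)}],
            "isError": False,
        },
    }


request = {
    "jsonrpc": "2.0",
    "id": 7,
    "method": "tools/call",
    "params": {
        "name": "run_sql_query",
        "arguments": {"query": "SELECT region, SUM(amount) FROM sales GROUP BY region"},
    },
}
response = handle_tool_call(request)
```

The agent receives the text content, parses it, and turns the rows into the answer the user sees.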

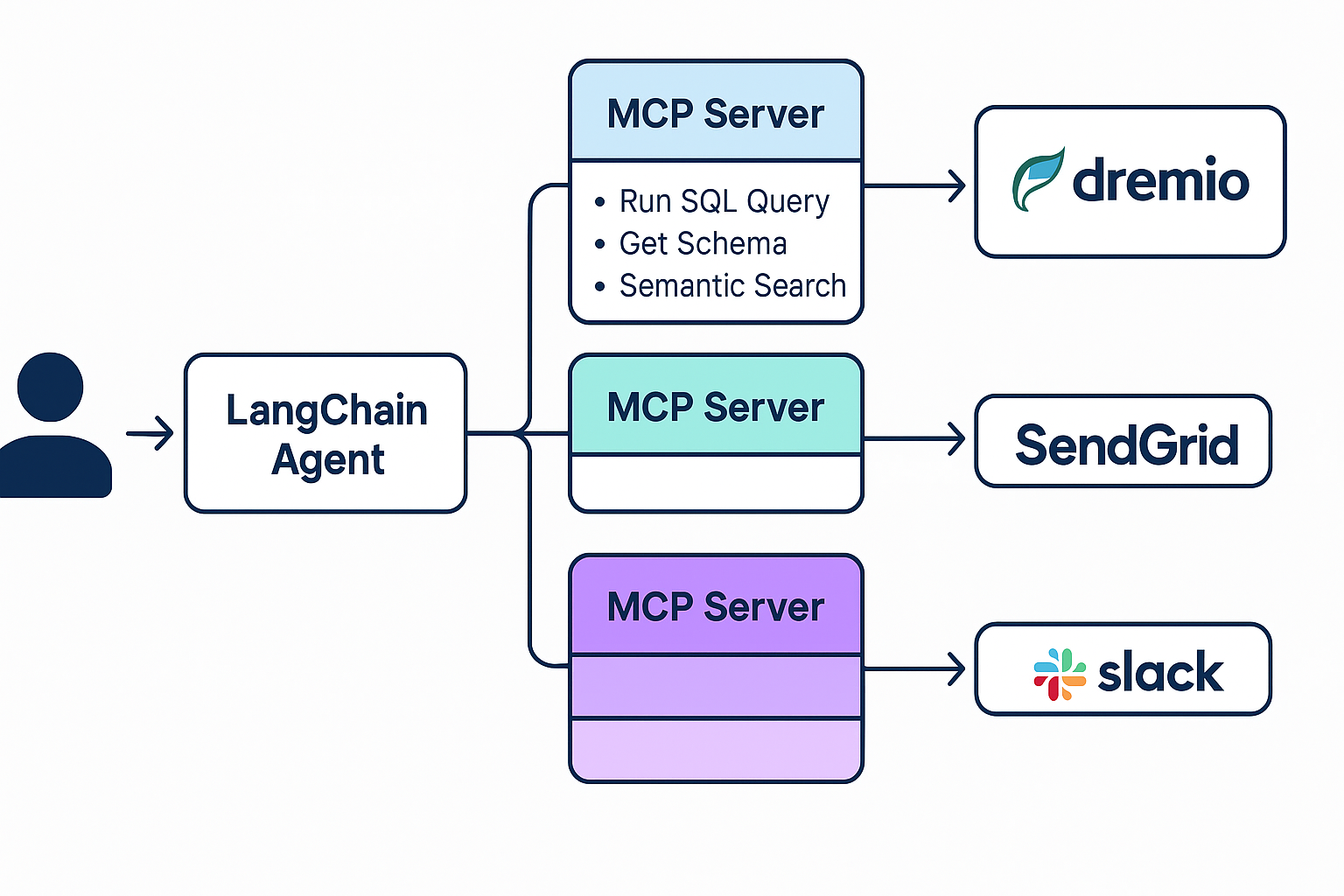

Extending the Setup

One of the strengths of MCP is its composability. You’re not limited to Dremio. The same LangChain agent can be configured to talk to multiple MCP servers, such as one for Dremio, one for email (e.g., SendGrid), and another for messaging (e.g., Slack). This allows you to create workflows like:

- Query customer churn from Dremio.

- Generate a list of at-risk customers.

- Trigger an email campaign through another MCP server.

The agent orchestrates the entire process, while MCP ensures each tool integration remains portable and standardized.
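One way to reason about a multi-server setup is to treat each server's tool list as a catalog and check that every step of a planned workflow resolves to a tool that actually exists. The sketch below does this with plain data structures; the server names and tool names are hypothetical.

```python
# Hypothetical catalogs: server name -> names of the tools it exposes.
CATALOGS = {
    "dremio": {"run_sql_query", "get_schema"},
    "email": {"send_campaign"},
    "slack": {"post_message"},
}

# The churn workflow from the text, written as (server, tool) steps.
CHURN_WORKFLOW = [
    ("dremio", "run_sql_query"),  # query churn and list at-risk customers
    ("email", "send_campaign"),   # trigger the email campaign
]


def unresolved_steps(plan, catalogs):
    """Return the steps whose tool is not offered by the named server."""
    return [
        (server, tool)
        for server, tool in plan
        if tool not in catalogs.get(server, set())
    ]
```

Because every server is described the same way, adding a new capability means adding a catalog entry rather than writing a new connector.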

Agent-to-Agent (A2A) Protocol and Multi-Agent Workflows

What is A2A?

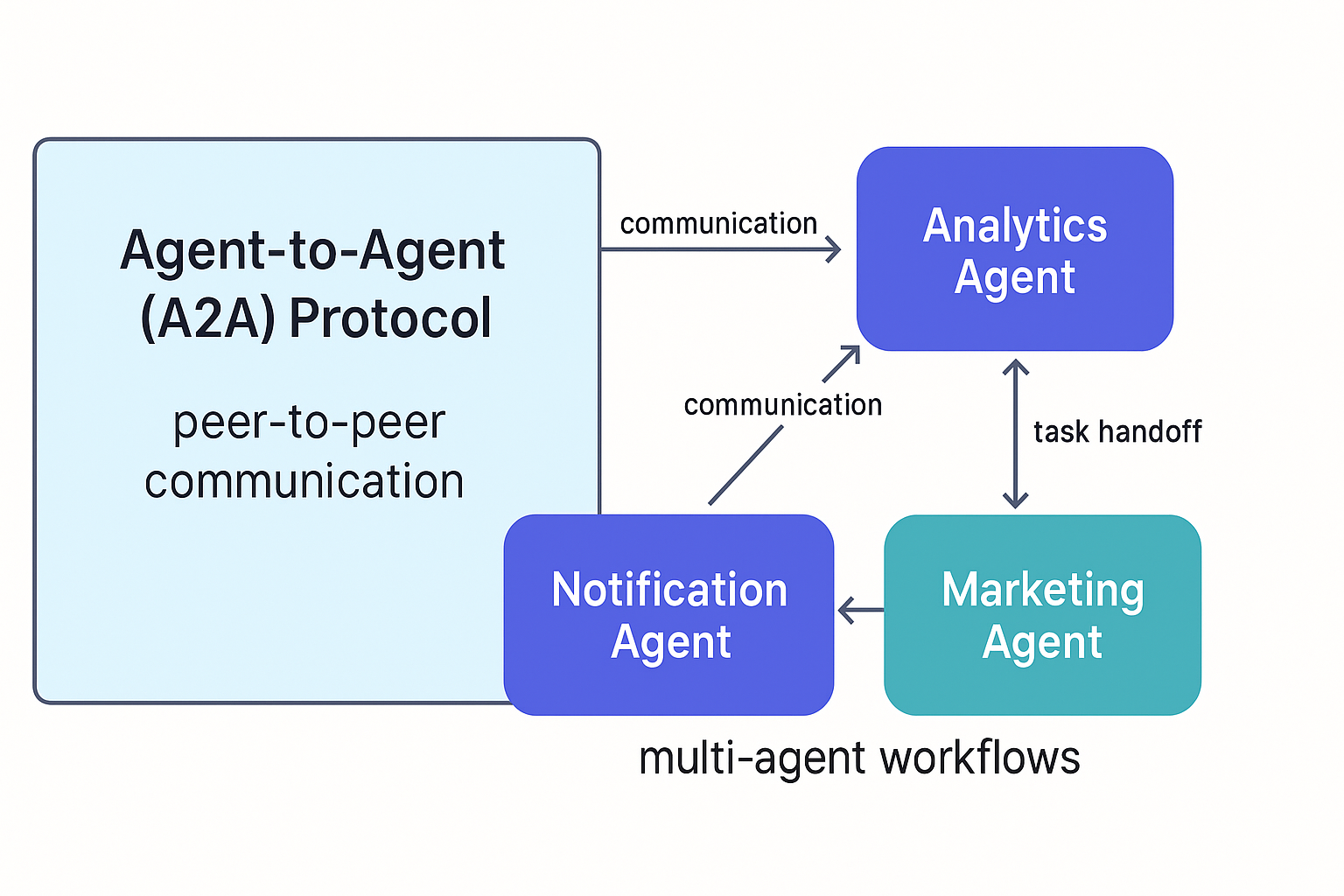

The Agent-to-Agent (A2A) Protocol, officially styled Agent2Agent, is a newer open standard designed to let autonomous AI agents communicate directly with one another. Instead of a single agent trying to do everything, A2A makes it possible for multiple specialized agents, built by different teams or running on different platforms, to collaborate, share context, and coordinate tasks.

At its core, A2A is about peer-to-peer communication. Agents can introduce themselves, discover capabilities in other agents, exchange structured messages, and negotiate responsibilities in real time. It provides the connective tissue for multi-agent ecosystems where one agent’s output becomes another agent’s input.
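In A2A, agents describe themselves with "Agent Cards" that list their skills, which is what makes capability discovery possible. The sketch below uses a simplified card loosely modeled on that idea (the real card has more fields) and looks up agents by skill tag. The agent names, URLs, and tags are made up for the example.

```python
# Simplified, hypothetical agent cards; real A2A Agent Cards carry more fields.
AGENT_CARDS = [
    {
        "name": "analytics-agent",
        "url": "https://agents.example.com/analytics",
        "skills": [{"id": "query-lakehouse", "tags": ["analytics", "sql"]}],
    },
    {
        "name": "messaging-agent",
        "url": "https://agents.example.com/messaging",
        "skills": [{"id": "send-email", "tags": ["email", "notification"]}],
    },
]


def agents_with_tag(cards, tag):
    """Return the names of agents that advertise at least one skill with this tag."""
    return [
        card["name"]
        for card in cards
        if any(tag in skill["tags"] for skill in card["skills"])
    ]
```

An agent that needs a notification sent can search the cards for a matching skill instead of being hardwired to a specific peer.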

How A2A Differs from MCP

While MCP and A2A may sound similar, they serve very different purposes:

- MCP is about tool integration. It standardizes how agents access external services (like Dremio or an email provider) through clearly defined tools.

- A2A is about agent collaboration. It defines how agents talk to one another, hand off tasks, and share progress.

In practice, you would use MCP when an agent needs to query Dremio, and A2A when that same agent needs to ask another agent, perhaps a notification agent or marketing agent, to act on the results.

Why Multi-Agent Workflows Matter

In real-world scenarios, no single agent can (or should) do everything. Separating responsibilities across agents has several benefits:

- Specialization: Each agent can focus on a narrow set of skills, making them easier to design, test, and maintain.

- Scalability: Multiple agents can work in parallel, reducing bottlenecks.

- Flexibility: Agents can be swapped out or upgraded without disrupting the larger ecosystem.

For example, imagine a system with:

- An analytics agent that queries customer data from Dremio using MCP.

- A messaging agent that handles emails or SMS campaigns.

- A CRM agent that updates lead statuses.

Using A2A, the analytics agent doesn’t have to know how to send an email; it simply passes the task to the messaging agent, which executes it using its own tools. This division of labor mirrors real-world teams and workflows, making agentic AI systems easier to scale in enterprise environments.
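The handoff can be pictured with a small in-process simulation. This is not the A2A wire format, only a sketch of the delegation pattern: the analytics agent knows which skill it needs, finds a peer that offers it, and hands over a task. All class names, skill names, and status strings here are illustrative assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    """A unit of work passed between agents, with a status and an audit trail."""
    skill: str
    payload: dict
    status: str = "submitted"
    history: list = field(default_factory=list)


class MessagingAgent:
    skills = {"send-email"}

    def handle(self, task):
        # A real agent would call its own email tool here.
        recipients = task.payload["recipients"]
        task.history.append(f"queued {len(recipients)} emails")
        task.status = "completed"
        return task


class AnalyticsAgent:
    def __init__(self, peers):
        self.peers = peers

    def delegate(self, skill, payload):
        """Hand the task to the first peer that advertises the needed skill."""
        for peer in self.peers:
            if skill in peer.skills:
                return peer.handle(Task(skill, payload))
        raise LookupError(f"no peer offers skill {skill!r}")
```

The analytics agent never touches email logic; swapping in a different messaging agent only requires that it advertise the same skill.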

Best Practices for Using A2A

- Use MCP for tools and A2A for agents; keep their roles distinct to avoid complexity.

- Design agents with clear responsibilities so they collaborate rather than overlap.

- Establish trust and governance for inter-agent communication, just as you would for human teams.

By combining MCP’s structured tool access with A2A’s communication layer, organizations can create agent ecosystems that are both modular and powerful, capable of handling analytics, automation, and orchestration at scale.

Combining MCP and A2A in Practice

Why Use Both Together?

While MCP and A2A serve different purposes, their real power emerges when they are used side by side. MCP ensures that every agent has reliable, standardized access to the tools it needs, such as querying data in Dremio, while A2A provides the communication fabric for agents to collaborate with each other. Together, they form the foundation for modular, interoperable agent ecosystems.

A Practical Example

Consider a sales automation workflow:

- Analytics agent (MCP-enabled): Queries Dremio for a list of customers whose engagement has dropped in the last 90 days.

- Messaging agent (A2A-enabled): Receives that customer list from the analytics agent and sends a targeted email campaign.

- CRM agent (A2A-enabled): Updates customer records in Salesforce or HubSpot to reflect the outreach.

In this flow, MCP ensures the analytics agent can securely and efficiently query governed data, while A2A ensures the results are passed along to the right downstream agents. The system behaves like a well-orchestrated team, each agent focused on its specialty, yet collaborating to achieve a larger business outcome.
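A compact sketch of this flow follows. It interprets "engagement has dropped in the last 90 days" as "fewer events in the most recent 90 days than in the 90 days before", which is an assumption about the metric. In a real deployment that comparison would be a SQL query run in Dremio through MCP; here it is computed in Python over sample events so the example stays self-contained, and the messaging and CRM steps are stubs that only record what they would do.

```python
from datetime import date, timedelta


def declining_customers(events, today, window_days=90):
    """Return ids with fewer events in the last window than in the window before it."""
    recent_start = today - timedelta(days=window_days)
    prior_start = recent_start - timedelta(days=window_days)
    recent, prior = {}, {}
    for customer_id, day in events:
        if recent_start <= day <= today:
            recent[customer_id] = recent.get(customer_id, 0) + 1
        elif prior_start <= day < recent_start:
            prior[customer_id] = prior.get(customer_id, 0) + 1
    return sorted(c for c, n in prior.items() if recent.get(c, 0) < n)


def run_workflow(events, today):
    """Chain the three roles: analytics, then messaging, then CRM."""
    log = []
    at_risk = declining_customers(events, today)                     # analytics agent
    log.append(("messaging", f"emailed {len(at_risk)} customers"))   # messaging agent (stub)
    log.append(("crm", [f"{c}: outreach sent" for c in at_risk]))    # CRM agent (stub)
    return at_risk, log
```

Each stage only needs the previous stage's output, which is what lets the roles live in separate agents.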

When to Combine MCP and A2A

- Use MCP alone when a single agent can handle a self-contained task (e.g., running analytics queries against Dremio).

- Use A2A alongside MCP when tasks span multiple domains or require specialized agents to hand off work.

By clearly separating tool integrations (MCP) from agent collaboration (A2A), you avoid overlap and complexity while enabling greater scalability and flexibility.

The Enterprise Advantage

For organizations, this approach provides:

- Governed intelligence: Data access always flows through Dremio’s semantic layer.

- Composable automation: Agents can be swapped in or out without breaking workflows.

- Future readiness: As new MCP servers or A2A-compatible agents emerge, they can be added without redesigning the system.

Together, MCP and A2A allow enterprises to move beyond single-purpose AI assistants toward collaborative, analytics-driven agent networks that can automate entire business processes.

Agentic Analytics & Workflow Examples

Conversational Analytics

One of the most immediate benefits of pairing Dremio’s MCP Server with agentic AI frameworks is the ability to perform natural-language analytics. Instead of writing SQL, a user can simply ask, “What were our total sales by region last quarter?” The agent interprets the request, calls Dremio’s Run SQL Query tool via MCP, and returns results in seconds.
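A phrase like "last quarter" has to be resolved into concrete dates before any SQL can run. The sketch below shows one way to do that and the kind of query an agent might then generate. The table name `sales.orders` and the columns `region`, `amount`, and `order_date` are hypothetical, and "last quarter" is assumed to mean the previous calendar quarter.

```python
from datetime import date, timedelta


def previous_quarter(today):
    """Return (first_day, last_day) of the calendar quarter before today's quarter."""
    current_start_month = 3 * ((today.month - 1) // 3) + 1
    current_start = date(today.year, current_start_month, 1)
    last_day = current_start - timedelta(days=1)   # last day of the previous quarter
    first_day = date(last_day.year, last_day.month - 2, 1)
    return first_day, last_day


def sales_by_region_sql(today, table="sales.orders"):
    """Build the SQL text for total sales by region over the previous quarter."""
    start, end = previous_quarter(today)
    return (
        "SELECT region, SUM(amount) AS total_sales\n"
        f"FROM {table}\n"
        f"WHERE order_date BETWEEN DATE '{start.isoformat()}' AND DATE '{end.isoformat()}'\n"
        "GROUP BY region\n"
        "ORDER BY total_sales DESC"
    )
```

With a governed semantic layer, the agent would ideally query a curated view that already encodes definitions like fiscal quarters, rather than rebuilding them in every prompt.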

The semantic layer in Dremio ensures that the response is not just technically correct but also aligned with business definitions. Queries like “active customers” or “churn rate” map directly to governed datasets, giving stakeholders confidence that answers are consistent across the organization.

From Analytics to Action

The real power of agentic systems comes from chaining analytics to downstream automation. For example:

- An agent queries Dremio for a list of customers with declining engagement.

- The results are passed to a messaging agent via A2A.

- That agent uses its own MCP server (e.g., SendGrid or Twilio) to launch an email or SMS campaign.

This workflow demonstrates how analytics can serve as the trigger for intelligent, automated business actions. The data remains governed, the process is standardized, and the entire flow runs with minimal human intervention.

Multi-Step Orchestration

Beyond simple reporting, agents can execute multi-step reasoning with data. Consider a pipeline where:

- An analytics agent queries Dremio for regional sales performance.

- A visualization agent generates charts for an executive dashboard.

- A scheduling agent distributes those dashboards via email every Monday morning.

Here, MCP provides structured access to data, and A2A enables smooth collaboration among agents with specialized roles. The outcome is a dynamic, fully automated analytics pipeline that adapts to changing business needs.
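For the scheduling agent, the "every Monday morning" cadence reduces to computing the next run time. A minimal sketch, assuming "morning" means 08:00 in the scheduler's local time (the hour is a parameter, not something the workflow above specifies):

```python
from datetime import datetime, timedelta


def next_monday_run(now, hour=8):
    """Return the next Monday at the given hour that is strictly after now."""
    days_ahead = (0 - now.weekday()) % 7  # Monday is weekday 0
    candidate = (now + timedelta(days=days_ahead)).replace(
        hour=hour, minute=0, second=0, microsecond=0
    )
    if candidate <= now:
        # It is already Monday and the run time has passed; use next week.
        candidate += timedelta(days=7)
    return candidate
```

A production scheduler would also need time zone handling and retry logic, which are left out here.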

Business Value

These examples highlight the dual advantage of Dremio’s MCP Server:

- Empowerment for business teams, who can interact with data conversationally without writing code.

- Acceleration for engineering teams, who can build scalable, modular automations by combining MCP-based tools with A2A-based collaboration.

The result is a new class of agentic analytics workflows: smarter than dashboards, more flexible than static pipelines, and fully governed by the data lakehouse.

Future Directions

Expanding Agent Ecosystems

The combination of MCP and A2A is still in its early stages, but momentum is building quickly. We are beginning to see registries, such as the emerging MCP Market, where developers can discover, share, and install MCP servers just as easily as installing npm packages or Python libraries. This makes it possible to compose agent ecosystems rapidly, connecting data platforms, communication tools, CRMs, and more. In parallel, A2A initiatives are pushing toward standardized directories where agents can publish their capabilities and discover each other dynamically.

Enterprise-Grade Scalability

For organizations, scaling these agentic workflows requires more than just protocols. Governance, monitoring, and orchestration become critical. Dremio’s role here is especially valuable: its semantic layer ensures that as more agents tap into the lakehouse, they always rely on the same trusted definitions. Paired with features like Reflections for acceleration and security controls for access management, Dremio ensures that MCP-based agents can scale across the enterprise without sacrificing performance or compliance.

New Use Cases on the Horizon

As these standards mature, expect to see richer applications:

- Agent-powered BI: Instead of static dashboards, AI agents that continuously monitor KPIs and proactively alert teams.

- Closed-loop automations: Agents that not only identify trends in Dremio but also trigger workflows in sales, finance, or supply chain systems, without human intervention.

- AI-driven governance: Multi-agent systems that watch for data quality issues, lineage gaps, or policy violations and automatically remediate them.

Getting Started Today

While the vision is expansive, you don’t need to wait for the future to benefit. With Dremio’s MCP Server, LangChain integrations, and A2A-compatible frameworks, you can start building agentic analytics workflows right now. A simple proof of concept, like querying data in Dremio through a LangChain agent or chaining an analytics agent with a notification agent, can demonstrate immediate value and lay the foundation for more advanced deployments.

Conclusion

The evolution of agentic AI is reshaping how enterprises think about analytics and automation. Agents are no longer isolated assistants; they are becoming collaborative systems capable of reasoning, planning, and acting across multiple domains. To unlock their full potential, these agents need both governed access to data and the ability to coordinate with one another.

This is exactly where MCP and A2A come together. MCP ensures that agents can securely interact with enterprise tools like Dremio, accessing trusted data through well-defined interfaces. A2A, in turn, provides the framework for those agents to collaborate, delegating tasks, exchanging results, and orchestrating end-to-end workflows.

Dremio’s MCP Server sits at the heart of this ecosystem. By exposing the semantic layer and query engine of the lakehouse through MCP, it makes analytics conversational, governed, and accessible to both humans and AI agents. When paired with frameworks like LangChain or extended into multi-agent systems via A2A, Dremio enables a new generation of agentic analytics workflows, where data discovery takes seconds instead of days, and insights translate seamlessly into business action.

For AI and data engineers, the opportunity is clear: start small, experiment with Dremio’s MCP Server, and build proof-of-concept agents that connect data with action. From there, scale into multi-agent workflows that automate and optimize entire business processes. In this future, your data lakehouse isn’t just a platform for analytics; it becomes the foundation for intelligent, autonomous systems that drive real enterprise value.