12 minute read · November 29, 2022

Time Travel with Dremio and Apache Iceberg

· System Engineer, Dremio

Introduction

Dremio now offers the capability to both query and manipulate data stored in Apache Iceberg table format.

Apache Iceberg is rapidly becoming the industry-standard open table format, allowing data management within the data lake — see here for more details on Apache Iceberg.

In this blog, we’ll show how Dremio can be used to create and amend Iceberg datasets. Then we’ll take a look at how we can step back in time in order to examine the state of the table at earlier points in time.

To get started with Dremio, we recommend you launch our Dremio Cloud offering, see here for details of getting started with Dremio Cloud.

Step 1: Create an Iceberg Dataset Using Dremio

Let’s assume that you don’t already have a dataset in Iceberg format (if you do have one, then you can work with that, and proceed to the next step).

We will use the Samples dataset as a source for populating our Iceberg table.

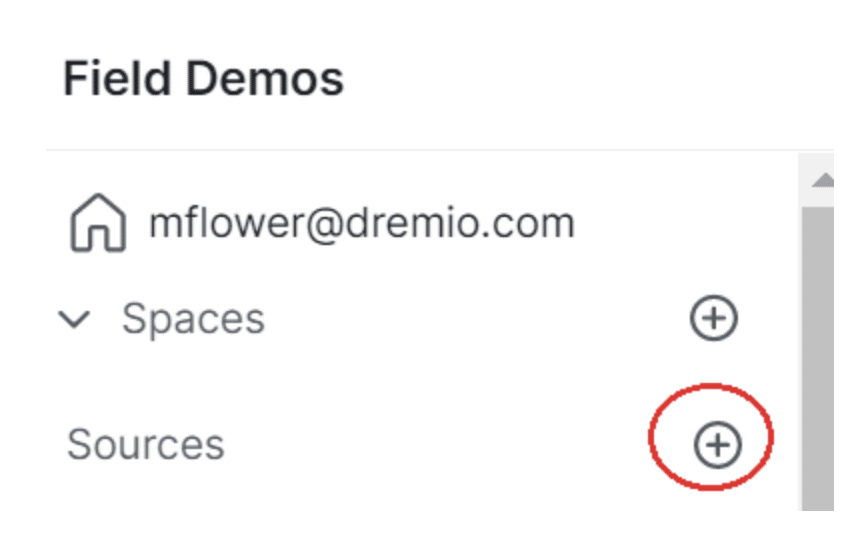

To connect to the Samples source:

1. Click Add Source

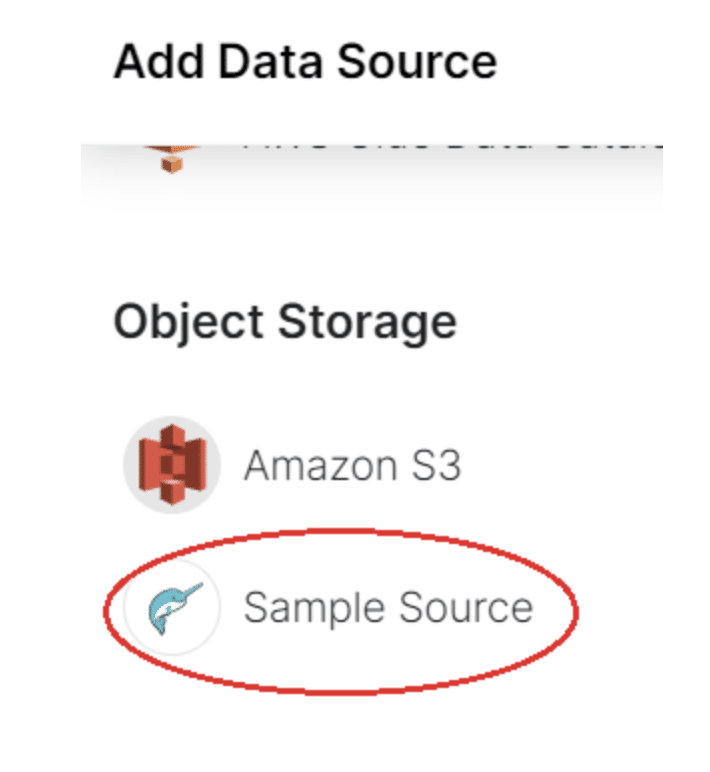

2. Select the Sample Source

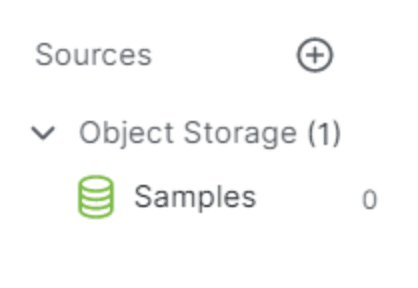

3. It will then be listed in your Sources under “Object Storage” or “Data Lakes”

Open Your Dremio UI

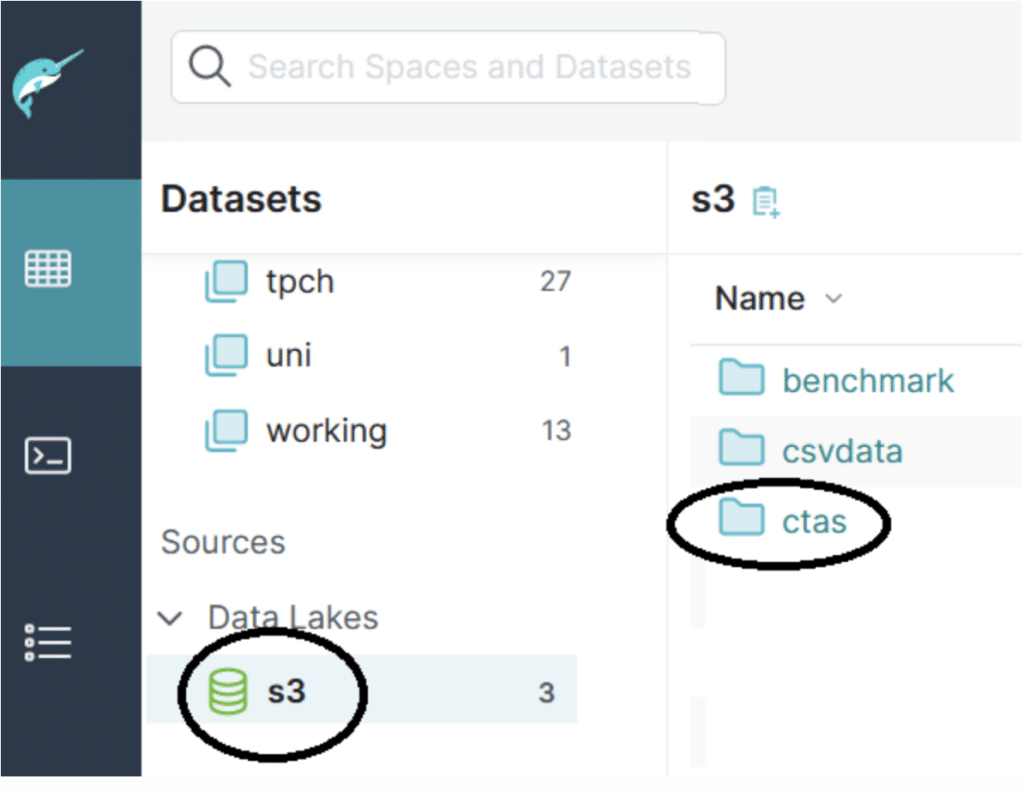

We can create an Iceberg format dataset in a S3 data lake. Here we will use a data lake named “s3,” under which we have an existing subfolder named “ctas.” If you don't already have a data lake connected to your Dremio instance, you can find instructions on how to do that here.

Open a SQL window to run a command to generate an Iceberg dataset.

Here, we will use one of the existing “samples” datasets to populate the new table with 200 rows, but you can use any available data source to generate the new dataset.

CREATE TABLE s3.ctas.iceberg_blog STORE AS (type=>'iceberg') AS SELECT * FROM Samples."samples.dremio.com"."tpcds_sf1000"."catalog_sales" limit 200;

Step 2: Examine an Existing Iceberg Table

Let’s see what’s in this new Iceberg table. Run this SQL command:

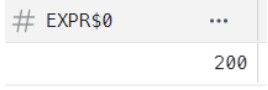

select count(*) from s3.ctas.iceberg_blog;

This shows that there are 200 rows in the table:

Let's add 500 more rows:

INSERT INTO s3.ctas.iceberg_blog SELECT * from Samples."samples.dremio.com"."tpcds_sf1000"."catalog_sales" limit 500;

Recheck the row count:

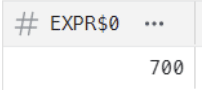

select count(*) from s3.ctas.iceberg_blog;

Now we see 700 rows (200 + the 500 rows we have just added):

Step 3: Review the Changes in the Iceberg Table

We will identify different snapshots for our dataset using Dremio’s table_history() function.

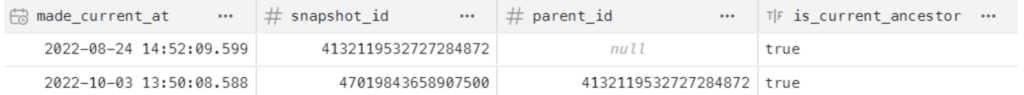

Run this query to examine the available snapshots:

SELECT *

FROM TABLE( table_history('s3.ctas.iceberg_blog' ));This will give output showing two snapshots, e.g.,

N.B.:

- The first entry (

snapshot_id=4132119532727284872) is the snapshot created after we created the table. - The second entry (

snapshot_id=47019843658907500) is the snapshot created after we inserted more rows. Note that this has a parent_id (4132119532727284872) of the first snapshot.

Step 4: Time Travel in an Iceberg Table

We can time travel using the available snapshot id information to view our Iceberg data from a different point in time, see Time Travel Queries.

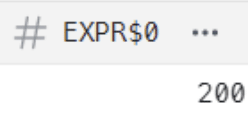

So let's trawl back through these snapshots, looking at the first snapshot id:

select count(*) from s3.ctas.iceberg_blog AT SNAPSHOT '4132119532727284872';

The result is 200 rows — i.e., before we just added the extra rows.

Step 5: Create a VDS, Make it Seamless to Travel Back in Time

We’ve seen that we can use the AT method of stepping back through different table snapshots.

Let's retrieve data from our first snapshot.

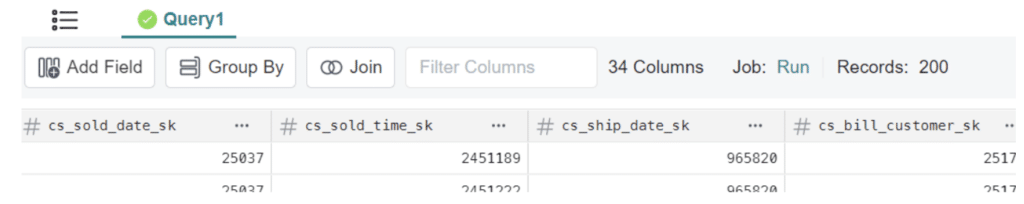

select * from s3.ctas.iceberg_blog AT SNAPSHOT '4132119532727284872';

This shows the 200 records from our original snapshot.

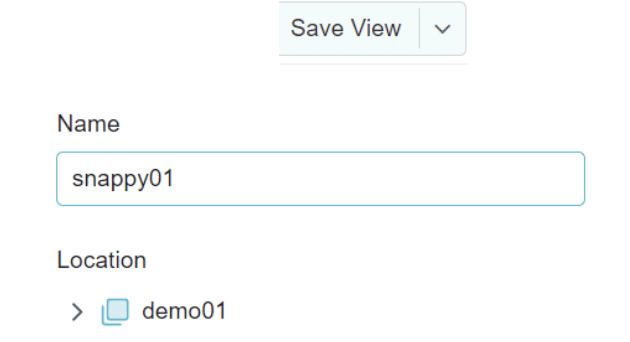

Now let's create a new virtual data set (VDS) from our query.

You have two options:

1. Click Save View, and save the VDS in a named space:

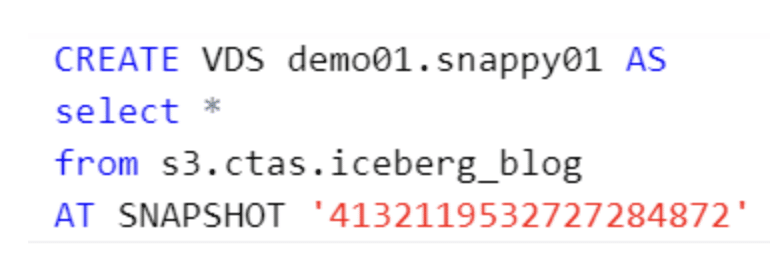

2. Use the SQL CREATE VDS statement:

CREATE VDS demo01.snappy01 AS select * from s3.ctas.iceberg_blog AT SNAPSHOT '4132119532727284872'

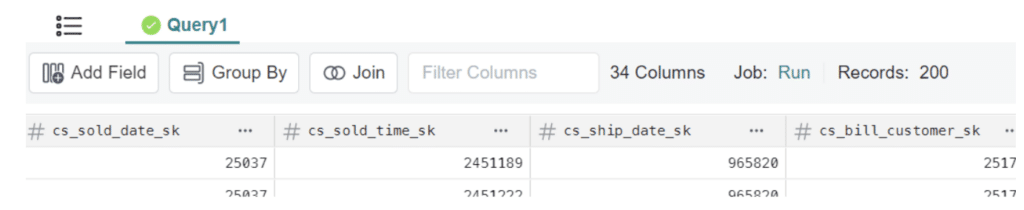

Now let's query the VDS.

select * from demo01.snappy01

This snapshot and VDS will remain static, even if more rows are added into the source “iceberg_blog” table.

Conclusion

Apache Iceberg is a major step toward a true data lakehouse, offering full DML capabilities on open data formats. Furthermore, it offers point-in-time capabilities, allowing us to ask questions such as:

- What data has changed between transactions? What was added, updated or deleted?

- What was our stock level at the beginning of the month?

Get a Free Early Release Copy of "Apache Iceberg: The Definitive Guide".

Sign up for AI Ready Data content