40 minute read · November 29, 2022

What Is a Semantic Layer?

· Senior Product Marketing Manager, Dremio

The semantic layer is a business representation of corporate data for end users. In most data architectures, it sits between your data store (such as a data warehouse or data lake) and the consumption tools your end users work with. Because the semantic layer represents data in a business-friendly format, data analysts can create meaningful dashboards and derive actionable insights without needing to understand the underlying physical data structure.

This guide explores what semantic layers are, their benefits and how they’re implemented within your enterprise data stack.

Key highlights:

- A semantic layer is a business representation of data that translates complex technical structures into accessible, analytics-ready insights for end users.

- Semantic layers sit between data storage and consumption layers, providing consistent logic, governance and simplified access across the enterprise data stack.

- Implementing a semantic layer improves collaboration, data consistency and self-service analytics through a unified semantic data model.

- Dremio delivers a unified semantic layer within its open data lakehouse, enabling faster queries, stronger governance and true self-service analytics without data duplication.

Why use a semantic layer?

Companies use data warehouses or data lakes to store data from multiple sources. End users need to access this data in terms that are meaningful to them. The problem is that the data is usually organized in ways that only make sense to data engineers. Many teams try to solve this challenge with existing tools, but those solutions only go so far.

A semantic layer is not:

- A replacement for a data lakehouse or data warehouse

- An alternative to a data transformation or ETL tool

- A BI or visualization platform

- An OLAP cube or aggregation engine

Each of these plays a role in the modern data stack, but none are designed to translate technical data into business-ready insights.

Data engineers create ETL pipelines from source datasets into data lakes and data warehouses. They physically organize the data into schemas and tables whose names are often cryptic, because they reflect the physical data model rather than business concepts.

This is where semantic layers are needed.

As the logical layer for data access, the semantic layer provides a way for teams to collaborate and share data products. It brings consistency and simplicity to data across different domains. The semantic layer standardizes business logic and makes data more useful to everyone. A well-architected semantic layer empowers end users to become decision-makers with self-service analytics.
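To make this concrete, here is a minimal sketch using Python's built-in `sqlite3` module, assuming a tiny star schema. The physical names (`d_cust`, `f_ord_ln`, `cust_sk`, `ln_amt_usd` and so on) are hypothetical stand-ins for what data engineers typically produce, and the view `customer_sales` stands in for a semantic-layer object that exposes the same data as Customer, Region, Sales and Last Purchase. It illustrates the idea only; it is not how any particular semantic-layer product stores its definitions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

conn.executescript("""
-- Physical model (hypothetical): names only data engineers understand.
CREATE TABLE d_cust (cust_sk INTEGER PRIMARY KEY, cust_nm TEXT, rgn_cd TEXT);
CREATE TABLE f_ord_ln (ord_id INTEGER, cust_sk INTEGER, ln_amt_usd REAL, ord_dt TEXT);

INSERT INTO d_cust VALUES (1, 'Acme Corp', 'NA'), (2, 'Globex', 'EU');
INSERT INTO f_ord_ln VALUES
  (100, 1, 250.0, '2022-11-01'),
  (101, 1, 125.5, '2022-11-15'),
  (102, 2, 980.0, '2022-11-20');

-- Semantic layer (hypothetical): a view that joins and renames into business terms.
CREATE VIEW customer_sales AS
SELECT c.cust_nm         AS "Customer",
       c.rgn_cd          AS "Region",
       SUM(f.ln_amt_usd) AS "Sales",
       MAX(f.ord_dt)     AS "Last Purchase"
FROM f_ord_ln AS f
JOIN d_cust   AS c ON c.cust_sk = f.cust_sk
GROUP BY c.cust_sk, c.cust_nm, c.rgn_cd;
""")

# Analysts query business terms without knowing the physical schema.
query = 'SELECT "Customer", "Sales", "Last Purchase" FROM customer_sales ORDER BY "Customer"'
for row in conn.execute(query):
    print(row)
```

Because the view is only a logical definition, no data is copied: analysts query `customer_sales`, and the engine resolves it against the physical tables at query time.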

Key semantic layer benefits

A semantic data model provides a unified, business-friendly abstraction layer over complex data environments, unlocking significant value for organizations by making data more accessible, consistent, and actionable. By bridging the gap between technical data platforms and business users, it enables teams to collaborate effectively, trust their data, and independently generate insights.

Collaboration on data

A semantic layer fosters cross-team collaboration by providing a shared understanding of metrics, definitions, and relationships across domains. Teams can work together on analysis, reporting, and AI initiatives without confusion or duplication.

- Standardize business definitions across departments.

- Enable shared dashboards and reports with trusted metrics.

- Facilitate cross-domain projects by providing a common data vocabulary.

Data consistency

By centralizing definitions and metrics, a semantic layer ensures data consistency across all tools, platforms, and business processes. This reduces errors, improves trust in analytics, and supports better decision-making.

- Maintain a single source of truth for metrics and dimensions.

- Reduce reconciliation work between disparate data sources.

- Ensure consistent reporting and analysis across all domains.

Self-service analytics

A semantic layer empowers business users to explore and analyze data independently, enabling ad hoc insights without relying on IT or complex technical knowledge. This accelerates decision-making and increases agility.

- Provide business-friendly interfaces for querying and reporting.

- Allow users to generate insights using standardized metrics.

- Reduce reliance on technical teams for routine analytics tasks.

How a semantic layer architecture fits into the modern data stack

| Aspect | How it works |
|---|---|
| Position in the stack | A semantic layer sits between data storage and analytics tools, providing a business-friendly abstraction that translates complex, heterogeneous data into consistent, accessible metrics and dimensions for end users. |
| Core functions | It standardizes metrics, enforces governance, and enables self-service analytics, transforming disparate data sources into a unified, trusted layer that supports ad hoc exploration, reporting, and AI/ML workflows. |
| Integration with other layers | The semantic layer connects data platforms, ETL pipelines, BI tools, and AI systems, ensuring consistent definitions, streamlined queries, and seamless interoperability across the modern data stack ecosystem. |
| Enterprise impact | By providing trusted, consistent data, the semantic layer accelerates insights, enhances collaboration, reduces errors, and supports scalable, governed analytics and AI initiatives across the organization. |

Methods of implementing semantic layers

Now that we’ve set a baseline for what a semantic layer is, we’ll review common ways organizations implement a semantic layer.

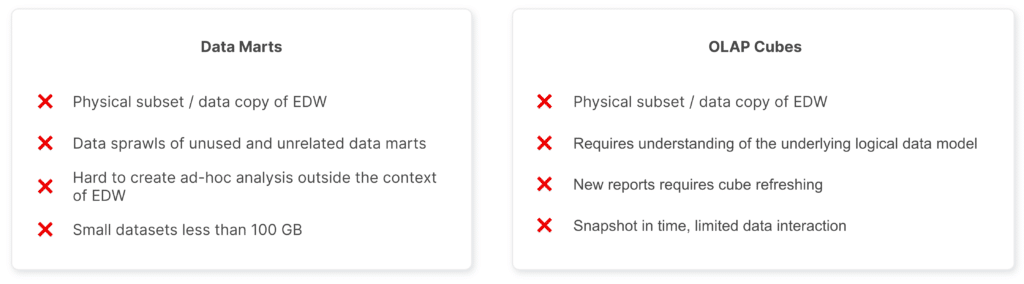

Data Marts

Data warehouses often aggregate data from many sources, some of which may be irrelevant to business users.

To avoid redundancy and to give data analysts access to just the datasets they need, data engineers create data marts: curated subsets of the data warehouse that provide domain-specific views of data for individual departments. When building data marts, data engineers often represent the data in business-friendly language for end users.
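A data mart can be sketched the same way. In this minimal example (Python with `sqlite3`; the table `wh_txn`, its columns and the department codes are all hypothetical), the Finance mart is a physical copy that contains only Finance rows, with columns renamed into business terms:

```python
import sqlite3

conn = sqlite3.connect(":memory:")

conn.executescript("""
-- Hypothetical enterprise warehouse table mixing data from many departments.
CREATE TABLE wh_txn (txn_id INTEGER, dept_cd TEXT, acct_no TEXT, amt REAL, sys_load_ts TEXT);
INSERT INTO wh_txn VALUES
  (1, 'FIN', 'GL-4000', 1200.0, '2022-11-01T02:00'),
  (2, 'MKT', 'CMP-17',   300.0, '2022-11-01T02:00'),
  (3, 'FIN', 'GL-5000',  450.0, '2022-11-02T02:00');

-- Finance data mart: only the rows and columns Finance needs,
-- renamed into business terms and physically copied out of the warehouse.
CREATE TABLE finance_mart AS
SELECT txn_id  AS transaction_id,
       acct_no AS gl_account,
       amt     AS amount_usd
FROM wh_txn
WHERE dept_cd = 'FIN';
""")

print(conn.execute("SELECT * FROM finance_mart ORDER BY transaction_id").fetchall())
# [(1, 'GL-4000', 1200.0), (3, 'GL-5000', 450.0)]
```

Because `finance_mart` is a copy, it does not change when `wh_txn` changes; an ETL job has to rebuild it, which is the source of the refresh burden that comes with data marts.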

Data marts are one way to implement a semantic layer, but they come with their own set of challenges.

Challenges with Data Marts:

A limitation of data marts is their dependency on the data warehouse. Slow, overloaded data warehouses are often the reason for creating data marts in the first place. Data warehouses are typically larger than 100 GB and often exceed a terabyte, whereas data marts are usually kept under 100 GB for optimal query performance.

If a line of business requires frequent refreshes of large data marts, that introduces another layer of complexity: data engineers need additional ETL pipelines to keep the data marts fresh and performant.

Even if your data mart stays under 100 GB, what happens when end users request data that lives outside the data warehouse?

Many organizations have data sources that must stay on-premises. Others store data in a different proprietary data warehouse, sometimes across different cloud providers. This makes it hard for end users to do ad hoc analysis outside the context of their data warehouse. Business units then create their own data marts, resulting in data sprawl across the enterprise, which is a data governance nightmare.

Learn more: how to create a no-code data mart with a unified semantic layer

OLAP Cubes

In addition to planned queries and data maintenance activities, data warehouses also support ad hoc queries and online analytical processing (OLAP). An OLAP cube is a multidimensional data structure for analytical workloads: it pre-aggregates business data across dimensions such as time, region and product, providing fast aggregation and data modeling for efficient reporting.
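The core idea of a cube (pre-computing a measure for every combination of dimensions so that reports become lookups) can be sketched in plain Python. The dimensions, sample rows and the `"ALL"` roll-up marker below are hypothetical, and real OLAP engines add storage formats, hierarchies and incremental refresh that this sketch leaves out:

```python
from collections import defaultdict
from itertools import combinations

# Hypothetical fact rows: (year, region, product, sales).
facts = [
    (2022, "NA", "Widgets", 100.0),
    (2022, "EU", "Widgets", 80.0),
    (2022, "NA", "Gadgets", 50.0),
    (2021, "NA", "Widgets", 70.0),
]
DIMENSIONS = ("year", "region", "product")


def build_cube(rows):
    """Pre-aggregate SUM(sales) for every subset of dimensions.

    Dimensions left out of a subset are rolled up and shown as "ALL".
    """
    cube = defaultdict(float)
    for *dims, sales in rows:
        for k in range(len(DIMENSIONS) + 1):
            for subset in combinations(range(len(DIMENSIONS)), k):
                key = tuple(dims[i] if i in subset else "ALL" for i in range(len(DIMENSIONS)))
                cube[key] += sales
    return dict(cube)


cube = build_cube(facts)
print(cube[("ALL", "NA", "ALL")])      # 220.0: NA sales across all years and products
print(cube[(2022, "ALL", "Widgets")])  # 180.0: 2022 widget sales across all regions
print(cube[("ALL", "ALL", "ALL")])     # 300.0: grand total
```

With three dimensions, each fact row feeds 2^3 = 8 groupings; the number of groupings doubles with every added dimension, which is one reason cubes are expensive to build and refresh.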

Challenges with OLAP Cubes:

Self-service analytics workloads on OLAP cubes are unpredictable because business queries are not known in advance. Organizations cannot afford to have analysts running queries that interfere with business-critical reporting and data maintenance activities. Because of this, the datasets required to support OLAP workloads are extracted from the data warehouse, and analysts run queries against these extracts.

OLAP cubes’ dependency on the data warehouse poses many challenges. Because cubes are built from datasets extracted from the data warehouse, they require an understanding of the underlying logical data model. In many cases, large volumes of data are loaded into memory for analytical queries, driving up compute costs.

Because the data extracts are point-in-time snapshots of the data warehouse, users work with stale data until the OLAP cubes are refreshed. Depending on the workload, it’s not uncommon for a cube refresh to take hours.
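The staleness problem is easy to demonstrate. In this minimal `sqlite3` sketch (the table and view names are hypothetical), `orders_extract` is a point-in-time copy, like the data behind a cube, while `orders_live` is a logical view evaluated at query time:

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE orders (order_id INTEGER, amount REAL);
INSERT INTO orders VALUES (1, 100.0), (2, 50.0);

-- Extract: a point-in-time copy, like the data behind a cube.
CREATE TABLE orders_extract AS SELECT * FROM orders;

-- Semantic view: a logical definition evaluated at query time.
CREATE VIEW orders_live AS SELECT * FROM orders;
""")

# New data lands in the warehouse after the extract was taken.
conn.execute("INSERT INTO orders VALUES (3, 25.0)")

extract_total = conn.execute("SELECT SUM(amount) FROM orders_extract").fetchone()[0]
live_total = conn.execute("SELECT SUM(amount) FROM orders_live").fetchone()[0]
print(extract_total, live_total)  # 150.0 175.0
```

The trade-off is that a live view does its work on every query, which is why semantic-layer platforms commonly pair live definitions with caching or query acceleration.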

Why enterprises prefer a unified semantic layer

Most organizations prefer to have a single source of enterprise data rather than replicating data across data marts, OLAP cubes or BI extracts. Data lakehouses solve some of the problems with a monolithic data warehouse, but they’re only part of the equation. A unified semantic layer is just as important.

A unified semantic layer is essential for any data management solution, including the data lakehouse. Benefits include:

- A universal abstraction layer: Technical fields from fact and dimension tables are mapped to business-friendly terms like Last Purchase or Sales.

- Prioritizing data governance: A unified semantic layer makes it easy for teams to share views of datasets consistently and accurately, while ensuring that only users with provisioned access can see the data.

- All your data: Your end users need self-service access to new data. You don’t want to spend more time creating ETL pipelines with dependencies on proprietary systems. Consume data where it lives.
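The governance benefit can be sketched as a semantic layer that injects an access filter into every query. SQLite has no built-in row-level security, so this minimal Python example enforces it in a wrapper function; the `GRANTS` mapping, the role names and the `query_sales` helper are hypothetical, and production platforms enforce such policies inside the engine rather than in client code:

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE sales (region TEXT, amount REAL);
INSERT INTO sales VALUES ('NA', 100.0), ('EU', 80.0), ('APAC', 40.0);
""")

# Hypothetical grants: which regions each role is provisioned to see.
GRANTS = {
    "na_analyst": ["NA"],
    "emea_analyst": ["EU"],
    "global_finance": ["NA", "EU", "APAC"],
}


def query_sales(role):
    """Return only the sales rows `role` may see; unknown roles see nothing."""
    regions = GRANTS.get(role, [])
    if not regions:
        return []
    # Only "?" placeholders are interpolated; the values are bound as parameters.
    placeholders = ", ".join("?" for _ in regions)
    sql = f"SELECT region, amount FROM sales WHERE region IN ({placeholders}) ORDER BY region"
    return conn.execute(sql, regions).fetchall()


print(query_sales("na_analyst"))      # [('NA', 100.0)]
print(query_sales("global_finance"))  # [('APAC', 40.0), ('EU', 80.0), ('NA', 100.0)]
print(query_sales("intern"))          # []
```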

Top platforms for business-friendly data access semantic layers

A semantic layer simplifies access to complex enterprise data by providing a business-friendly abstraction that standardizes metrics, definitions, and relationships across tools and platforms. The following table highlights some of the leading platforms that enable consistent, governed, and self-service analytics, making data accessible for BI, AI, and cross-team collaboration.

| Platform | Key features |
|---|---|
| Dremio | Enables a data lake semantic layer by defining business views and virtual datasets without data movement, offering role‑based access and performance optimizations for unified, governed analytics. |
| AtScale | Provides a Universal Semantic Layer that defines metrics once, supports both BI and AI/agent consumption, and connects live to cloud warehouses while enforcing governance and maximizing performance. |
| Looker | Built‑in semantic modeling layer (LookML) defines dimensions, aggregates, and business logic once for use across dashboards, AI tools and embedded apps, ensuring consistency and trust. |
| Snowflake Semantic Views | Enables semantic views within the warehouse that abstract physical schemas into business‑friendly entities, simplifying data access and enforcing consistent logic across tools. |
| dbt Semantic Layer | Integrates metric definitions and semantic models directly into the dbt workflow, centralizing logic, enabling version control, and serving consistent metrics to BI, apps and AI. |
| Cube Cloud | An API‑first semantic layer that offers REST, GraphQL, SQL access, pre‑built integrations and centralized modelling to deliver consistent metrics and secure access across apps and BI. |
| Graphwise | Combines knowledge‑graph and semantic‑layer capabilities for structured and unstructured data, enabling AI‑ready context, semantic reasoning and unified data for enterprise use. |
| Google Cloud Data Catalog with Vertex AI | Offers metadata and feature‑store layers in Google Cloud, cataloguing datasets/models for discovery, and supporting semantic feature access for AI/ML workflows via Vertex AI. |
| PoolParty | Semantic platform focused on ontology, taxonomy and knowledge‑graph management, enabling enterprise semantic middleware and aligning data semantics across silos, search and AI. |

1. Dremio

Dremio is a leading data lake engine and semantic layer platform designed to simplify, accelerate, and unify data access across your modern data stack. It eliminates the need for complex ETL pipelines by allowing users to query data directly where it lives, whether in a lakehouse, warehouse, or cloud storage. With Dremio’s Universal Semantic Layer, organizations can define metrics, relationships, and hierarchies once and make them available across BI, AI, and data science tools, ensuring data consistency, governance, and trust. This capability empowers both technical and non-technical users to perform self-service analytics with confidence, without sacrificing control or performance.

At the core of Dremio’s value is its ability to deliver high-performance analytics at scale while maintaining flexibility and openness. Features like Dremio Reflections automatically accelerate queries for sub-second response times, while its self-service data access model gives users the ability to explore and analyze data without IT bottlenecks. Role-based access control, data lineage tracking, and integration with major BI tools (like Tableau, Power BI, and Looker) make it a complete platform for governed, enterprise-grade analytics. In essence, Dremio’s semantic layer transforms your data lake into a high-speed, business-ready environment, bridging the gap between raw data and actionable insight.

Key features of Dremio:

- Universal Semantic Layer for consistent business logic and metrics

- Dremio Reflections for automated query acceleration

- Self-Service Analytics for business and data teams

- No Data Movement with direct querying across data sources

- Advanced Governance with role-based access and lineage tracking

- Native Integration with BI, AI, and ML tools across the data ecosystem

2. AtScale

AtScale provides a “Universal Semantic Layer” designed to bridge business logic with cloud‑data platforms and BI/AI tools, offering a consistent metric layer consumed by humans and agents alike. It supports multi‑platform connectivity (Snowflake, Databricks, BigQuery) and emphasizes semantic models that serve dashboards, notebooks, and even AI agents.

AtScale pros:

- Cross‑platform connectivity: one semantic layer for multiple clouds/data platforms.

- AI‑agent readiness: models built for both human BI consumption and autonomous workflows.

- Centralized metric governance: business definitions declared once and reused everywhere.

Cons of AtScale:

- Higher cost or licensing complexity given its enterprise orientation.

- Complexity in setup and modelling might require experienced analytics engineers.

- Dependency on integration maturity for all consuming platforms; some tools may have less mature connectors.

3. Looker (Google Cloud)

Looker uses its LookML modeling language to create a semantic layer within the BI tool, allowing organizations to define dimensions, metrics, and relationships once and reuse them across dashboards, “Looker Agents” and newer AI‑enabled interfaces. It emphasizes centralized definitions and trustworthy AI-driven analytics.

Looker pros:

- Strong semantic modeling in LookML, with reusable definitions and business logic.

- Tight integration with BI workflows and visualizations, enabling self‑service for business users.

- Greater trust in AI/LLM output, because the governed semantic layer reduces errors in generative analytics.

Cons of Looker:

- Tied to the Looker ecosystem, so the semantic layer may be less portable if using multiple BI tools.

- Visualization and customization capabilities have received criticism for being limited.

- Complexity in scaling large semantic models or using them outside the Looker tool stack.

4. Snowflake Semantic Views

Snowflake’s Semantic Views allow organizations to create semantic modeling objects natively inside the Snowflake platform, defining business metrics and dimensions that downstream BI and AI systems can reference. These views sit within the data platform itself.

Snowflake Semantic Views pros:

- Native integration in Snowflake: simpler architecture if your data platform is already Snowflake.

- Direct support for business definitions (metrics, dimensions, relationships) inside the warehouse.

- Reduces fragmentation between BI models and data platform models.

Cons of Snowflake Semantic Views:

- As a newer feature, third‑party tool support and ecosystem maturity may be less developed.

- Lock‑in risk: semantic definitions tied to Snowflake may limit portability across platforms.

- Semantic modeling flexibility may be less comprehensive than that of specialized semantic‑layer platforms.

5. dbt Semantic Layer

dbt’s Semantic Layer (built on MetricFlow) enables data teams to define business metrics and semantic definitions centrally in the dbt project, then expose those metrics to downstream tools (BI, spreadsheets, notebooks). It focuses on metric consistency and tool‑agnostic consumption.

dbt Semantic Layer pros:

- Central metric definitions: define once, use everywhere, avoid drift.

- Tool‑agnostic consumption: supports analytics tools beyond one vendor.

- Governance and version control built into analytics engineering workflows.

Cons of dbt Semantic Layer:

- Still maturing compared to some fully packaged semantic‑layer platforms, so fewer features may be available initially.

- BI tool integrations vary, so connecting downstream tools may require extra effort.

- Focus is more on metric definition than on query performance optimization or virtualization features.

6. Cube Cloud

Cube Cloud offers a universal semantic layer for modern data stacks, supporting BI, spreadsheets, embedded apps and AI. It emphasizes performance optimization (caching, pre‑aggregation), broad integration (Power BI, Excel, custom APIs) and a reusable single source of truth for metrics.

Cube Cloud pros:

- Strong API and multi‑tool support: SQL, GraphQL, REST endpoints for analytics and apps.

- Performance focus: caching and pre‑aggregation to improve query speeds and reduce compute load.

- Broad ecosystem compatibility (including Power BI/Excel) and central governance.

Cons of Cube Cloud:

- A learning curve and a still-evolving product may present onboarding challenges.

- Cost and complexity may increase as models and use‑cases grow across domains.

- Some users report occasional performance unpredictability or complexity in model setup.

7. Graphwise

Graphwise combines knowledge graph and semantic layer technologies to unify structured and unstructured data into an AI-ready, context-rich foundation. By mapping relationships and meanings across data sources, Graphwise helps organizations create intelligent, queryable data models that support reasoning, explainability, and generative AI applications. It bridges the gap between human and machine understanding, making it ideal for enterprises looking to operationalize semantic data for advanced analytics and AI-driven insights.

Graphwise pros:

- Integrates knowledge graph and semantic modeling for contextual data understanding.

- Enables AI and LLMs to query enterprise data semantically for more accurate insights.

- Supports reasoning, explainability, and relationship mapping across diverse datasets.

Cons of Graphwise:

- Requires specialized expertise in ontology and knowledge graph modeling.

- Implementation can be complex for organizations without existing graph infrastructure.

- Smaller ecosystem and community compared to mainstream BI-oriented semantic tools.

8. Google Cloud Data Catalog with Vertex AI

Google Cloud Data Catalog paired with Vertex AI creates a powerful foundation for managing semantic metadata, data discovery, and AI feature sharing. The Data Catalog provides centralized metadata governance and lineage tracking, while the Vertex AI Feature Store enables semantic organization and reuse of features for machine learning models. Together, they act as a semantic backbone for enterprise AI, making data discoverable, interpretable, and ready for advanced AI and ML workflows.

Google Cloud Data Catalog pros:

- Native integration with Google Cloud services for unified metadata and AI feature management.

- Strong lineage tracking, data discovery, and governance capabilities.

- Seamless bridge between semantic metadata and machine learning workflows via Vertex AI.

Cons of Google Cloud Data Catalog:

- Better suited for organizations already committed to the Google Cloud ecosystem.

- Limited out-of-the-box support for non-Google data platforms.

- Requires technical expertise to configure semantic metadata models effectively.

9. PoolParty

PoolParty is a semantic middleware and knowledge graph management platform that enables organizations to align enterprise data semantics across silos, search systems, and AI applications. It provides powerful ontology management, taxonomy creation, and text-mining capabilities that enhance data integration, metadata enrichment, and semantic interoperability. PoolParty is particularly well-suited for organizations in information-heavy industries, such as publishing, government, and healthcare, that require rich, contextual understanding of data assets.

PoolParty pros:

- Robust ontology and taxonomy management for semantic enrichment and interoperability.

- Strong support for text mining, metadata tagging, and semantic search.

- Integrates easily with content management and enterprise knowledge systems.

Cons of PoolParty:

- Steeper learning curve for non-semantic specialists or BI-focused teams.

- Primarily focused on semantic web and content management rather than BI metrics.

- May require additional tools for advanced analytics or visualization capabilities.

Common use cases and semantic layer examples

A semantic layer in data analytics provides a business-friendly abstraction over complex data environments, making it easier for organizations to extract insights, maintain consistency, and accelerate decision-making. By mapping data into meaningful metrics and dimensions, it enables analysts, BI tools, and AI agents to interact with data in terms they understand, rather than struggling with multiple schemas or fragmented platforms. Here are some common use cases and examples of how a semantic layer is applied in practice:

Cross-Departmental Reporting

Organizations often struggle to reconcile data from sales, marketing, finance, and operations due to inconsistent definitions and siloed systems. A semantic layer standardizes key business metrics and dimensions, enabling teams to generate consistent, accurate reports without manually reconciling data from multiple sources.

For example, a company can define “monthly active users” or “revenue per customer” centrally in the semantic layer, ensuring that every department uses the same definition, which reduces errors and improves confidence in shared dashboards. This accelerates reporting cycles and enhances strategic decision-making across the organization.
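Centrally defined metrics can be sketched as a small registry that compiles each metric into SQL. In this minimal Python/`sqlite3` example, the `METRICS` registry, the `DIMENSIONS` allowlist, the `metric_query` helper and the `orders` table are all hypothetical; tools such as the dbt Semantic Layer or LookML express the same define-once idea in their own formats:

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE orders (customer_id INTEGER, region TEXT, month TEXT, amount REAL);
INSERT INTO orders VALUES
  (1, 'NA', '2022-10', 100.0),
  (1, 'NA', '2022-11', 200.0),
  (2, 'EU', '2022-11',  60.0),
  (3, 'NA', '2022-11',  40.0);
""")

# Hypothetical central registry: each metric is defined exactly once.
METRICS = {
    "monthly_active_users": "COUNT(DISTINCT customer_id)",
    # Multiply by 1.0 so the division is never integer division.
    "revenue_per_customer": "SUM(amount) * 1.0 / COUNT(DISTINCT customer_id)",
}
# Only approved dimensions may be used for grouping.
DIMENSIONS = {"region", "month"}


def metric_query(metric, group_by):
    """Compile a centrally defined metric into SQL for an approved dimension."""
    if group_by not in DIMENSIONS:
        raise ValueError(f"unknown dimension: {group_by}")
    expr = METRICS[metric]
    return (f"SELECT {group_by}, {expr} AS {metric} "
            f"FROM orders GROUP BY {group_by} ORDER BY {group_by}")


# Every department gets the same formula, whichever dimension it slices by.
print(conn.execute(metric_query("revenue_per_customer", "region")).fetchall())
# [('EU', 60.0), ('NA', 170.0)]
print(conn.execute(metric_query("monthly_active_users", "month")).fetchall())
# [('2022-10', 1), ('2022-11', 3)]
```

Because every team calls `metric_query` instead of writing its own formula, changing a definition in one place changes it everywhere. The dimension allowlist also keeps arbitrary user input out of the generated SQL.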

Self-Service Analytics

Analysts and business users frequently need to perform ad hoc analysis but may lack deep technical skills to query raw data directly. A semantic layer provides a business-friendly interface, allowing users to explore and analyze data without writing complex SQL or understanding underlying data models.

For instance, marketing teams can quickly examine campaign performance or segment customers based on behavior using BI tools connected to the semantic layer. This empowers teams to generate insights independently while maintaining consistency and governance across the organization.
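Self-service access can be sketched as a catalog of business-named measures and dimensions that users pick from, point-and-click style, while the layer writes the SQL. The catalog entries, the `campaign_events` table and the `explore` function below are hypothetical; the derived "Customer Segment" dimension shows how a behavioral segmentation rule can be defined once by the data team and reused by everyone:

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE campaign_events (
  customer_id INTEGER, campaign TEXT, orders_last_year INTEGER, converted INTEGER);
INSERT INTO campaign_events VALUES
  (1, 'Spring Promo', 12, 1),
  (2, 'Spring Promo',  1, 0),
  (3, 'Spring Promo',  0, 1),
  (4, 'Holiday',       8, 1),
  (5, 'Holiday',       2, 0);
""")

# Hypothetical business-friendly catalog maintained by the data team.
CATALOG = {
    "dimensions": {
        "Campaign": "campaign",
        # Segmentation rule defined once, reused by every user.
        "Customer Segment": (
            "CASE WHEN orders_last_year >= 5 THEN 'Loyal' "
            "WHEN orders_last_year >= 1 THEN 'Occasional' ELSE 'New' END"
        ),
    },
    "measures": {
        "Customers Reached": "COUNT(DISTINCT customer_id)",
        "Conversion Rate": "AVG(converted)",
    },
}


def explore(measure, by):
    """Answer a request phrased in business terms; the user never writes SQL."""
    dim_sql = CATALOG["dimensions"][by]
    measure_sql = CATALOG["measures"][measure]
    sql = (f"SELECT {dim_sql} AS grp, {measure_sql} FROM campaign_events "
           f"GROUP BY grp ORDER BY grp")
    return conn.execute(sql).fetchall()


print(explore("Conversion Rate", "Customer Segment"))
# [('Loyal', 1.0), ('New', 1.0), ('Occasional', 0.0)]
print(explore("Customers Reached", "Campaign"))
# [('Holiday', 2), ('Spring Promo', 3)]
```

Because `explore` only accepts names that exist in the catalog (an unknown name raises `KeyError`), users cannot send arbitrary SQL to the engine.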

AI and Machine Learning Enablement

AI and ML initiatives require clean, consistent, and semantically enriched data to ensure accurate predictions and actionable outcomes. A semantic layer standardizes metrics and relationships across multiple data platforms, providing a reliable foundation for model training and feature engineering.

For example, a financial services firm can leverage a semantic layer to create consistent customer risk scores or transaction patterns that feed directly into AI models. This reduces data preparation time, improves model accuracy, and enables AI agents to make intelligent, context-aware recommendations.

Data Governance and Compliance

Ensuring proper data governance and compliance is critical for regulated industries, but disparate systems and inconsistent definitions make enforcement difficult. A semantic layer centralizes data definitions, access controls, and lineage tracking, allowing organizations to enforce policies consistently.

For example, healthcare organizations can use a semantic layer to control who can access sensitive patient data while maintaining a consistent view of clinical metrics across reporting and analytics tools. This simplifies audits, ensures compliance, and builds trust in the data being used across the enterprise.
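Role-dependent masking can be sketched in the same style. In this minimal example, the `patients` table, the `UNMASKED_ROLES` policy and the `patient_view` and `readmission_rate` functions are hypothetical. Every role gets the same rows and clinical columns, so metrics agree across tools, but only clinicians see patient names. For brevity only the name column is masked; a real policy would cover other identifiers as well:

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE patients (patient_id TEXT, patient_name TEXT, diagnosis TEXT, readmitted INTEGER);
INSERT INTO patients VALUES
  ('P-001', 'Jane Doe',   'Diabetes', 1),
  ('P-002', 'John Smith', 'Asthma',   0);
""")

# Hypothetical policy: only clinicians may see patient names.
UNMASKED_ROLES = {"clinician"}


def patient_view(role):
    """Same rows and clinical columns for every role; names masked unless permitted."""
    name_expr = "patient_name" if role in UNMASKED_ROLES else "'***'"
    sql = (f"SELECT patient_id, {name_expr} AS patient_name, diagnosis, readmitted "
           f"FROM patients ORDER BY patient_id")
    return conn.execute(sql).fetchall()


def readmission_rate(role):
    """A clinical metric computed from the governed view; identical for every role."""
    rows = patient_view(role)
    return sum(r[3] for r in rows) / len(rows)


print(patient_view("analyst"))
# [('P-001', '***', 'Diabetes', 1), ('P-002', '***', 'Asthma', 0)]
print(readmission_rate("analyst"), readmission_rate("clinician"))  # 0.5 0.5
```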

Best practices for managing a semantic data layer

Effectively managing a semantic data layer is critical for turning raw data into trusted, actionable insights across the enterprise. By following proven best practices, organizations can ensure consistent metrics, enforce governance, accelerate analytics, and create a scalable foundation for AI and self-service initiatives. The following practices highlight key strategies to maximize the value and impact of your semantic layer while maintaining agility and reliability.

Start with high-value business domains

Focusing on high-value business domains first ensures that your semantic data layer delivers immediate impact and demonstrates tangible ROI. By prioritizing domains like sales, finance, or customer analytics, teams can quickly establish trust in the semantic layer and show how standardized definitions and accessible metrics improve decision-making.

- Identify domains with the greatest business impact.

- Map key stakeholders and data sources for each domain.

- Pilot the semantic layer with a small, high-value dataset before scaling.

Starting with high-value domains also allows teams to uncover potential challenges early, such as complex data transformations or inconsistent metrics, and address them in a controlled environment. Lessons learned from these initial domains provide a blueprint for scaling the semantic layer across other business areas efficiently.

Standardize metrics and definitions early

Defining metrics and business terms early in the semantic layer prevents misalignment and ensures that all teams interpret data consistently. Standardization creates a single source of truth, reducing confusion, reconciliation work, and errors across reports and analytics dashboards.

- Define key business metrics (e.g., revenue, churn, active users).

- Establish consistent dimensions and hierarchies (e.g., region, product category).

- Document metric definitions and calculation logic centrally.
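Hierarchies are worth defining once for the same reason as metrics: every tool then rolls up the same way. This minimal Python sketch assumes a hypothetical city, country and region hierarchy (`GEOGRAPHY`), sample figures and a `roll_up` helper:

```python
from collections import defaultdict

# Hypothetical geography hierarchy, defined once: city -> (country, region).
GEOGRAPHY = {
    "Berlin": ("Germany", "EMEA"),
    "Munich": ("Germany", "EMEA"),
    "Paris": ("France", "EMEA"),
    "Toronto": ("Canada", "Americas"),
}
# Position of each level in the GEOGRAPHY tuples (None means the city itself).
LEVELS = {"city": None, "country": 0, "region": 1}

sales_by_city = {"Berlin": 120.0, "Munich": 80.0, "Paris": 50.0, "Toronto": 90.0}


def roll_up(values, level):
    """Aggregate city-level values to the requested hierarchy level."""
    idx = LEVELS[level]
    totals = defaultdict(float)
    for city, amount in values.items():
        key = city if idx is None else GEOGRAPHY[city][idx]
        totals[key] += amount
    return dict(totals)


print(roll_up(sales_by_city, "country"))  # {'Germany': 200.0, 'France': 50.0, 'Canada': 90.0}
print(roll_up(sales_by_city, "region"))   # {'EMEA': 250.0, 'Americas': 90.0}
```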

Early standardization also accelerates self-service analytics by giving business users confidence that their insights are based on accurate, trusted definitions. This practice helps maintain governance while supporting agile analytics across multiple teams and platforms.

Adopt open standards to avoid lock-in

Using open standards in your semantic layer prevents dependency on a single vendor and ensures interoperability across tools and platforms. This flexibility allows your organization to adapt to new technologies, integrate diverse data sources, and switch tools without losing semantic consistency.

- Ensure compatibility with multiple BI, AI, and analytics platforms.

- Maintain portability of semantic definitions across data platforms.

- Use open data modeling standards (e.g., RDF, SQL-based semantic models).

Open standards also facilitate collaboration with external partners or across domains, as the definitions and relationships in the semantic layer can be easily shared and understood. This ensures long-term agility and protects investments in the semantic architecture.

Automate governance and access control

Automating governance ensures that policies, permissions, and compliance rules are consistently applied across all users and platforms. It reduces manual errors, accelerates onboarding, and provides visibility into who can access which data.

- Implement role-based access controls (RBAC).

- Enforce data masking or anonymization rules automatically.

- Track data lineage and usage to support audits and compliance.
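Lineage tracking can be modeled as a dependency graph. This minimal Python sketch assumes a hypothetical `LINEAGE` mapping from each dataset to the datasets it reads; `upstream` answers the auditor's question "where does this number come from?", and `downstream` supports impact analysis before a source changes:

```python
# Hypothetical lineage graph: each dataset maps to the datasets it reads from.
LINEAGE = {
    "exec_dashboard": ["revenue_by_region"],
    "revenue_by_region": ["orders_clean", "customers_clean"],
    "orders_clean": ["raw.orders"],
    "customers_clean": ["raw.customers"],
    "raw.orders": [],
    "raw.customers": [],
}


def upstream(dataset):
    """Return every dataset that `dataset` depends on, directly or indirectly."""
    seen = set()
    stack = list(LINEAGE.get(dataset, []))
    while stack:
        current = stack.pop()
        if current not in seen:
            seen.add(current)
            stack.extend(LINEAGE.get(current, []))
    return seen


def downstream(dataset):
    """Return every dataset affected if `dataset` changes."""
    # Recomputes upstream() per dataset, which is fine at sketch scale.
    return {name for name in LINEAGE if dataset in upstream(name)}


print(sorted(upstream("exec_dashboard")))
# ['customers_clean', 'orders_clean', 'raw.customers', 'raw.orders', 'revenue_by_region']
print(sorted(downstream("raw.customers")))
# ['customers_clean', 'exec_dashboard', 'revenue_by_region']
```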

Automation also allows your semantic layer to scale without increasing administrative overhead. By embedding governance into the layer itself, organizations can maintain trust in data while empowering self-service analytics and AI initiatives.

Continuously monitor performance and iterate

A semantic data layer is not a “set it and forget it” solution; it requires ongoing monitoring and optimization. Tracking performance ensures that queries remain fast, models stay accurate, and business users can reliably access the data they need.

- Monitor query performance and optimize transformations.

- Track usage patterns to identify high-demand metrics and domains.

- Collect feedback from users and iterate on semantic models regularly.
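Usage and latency monitoring can start as simply as wrapping metric execution. This minimal sketch uses Python's `time.perf_counter` and `collections.Counter`; the `METRICS` definitions and the `run_metric` wrapper are hypothetical, and a real deployment would more likely rely on the platform's own query history than on instrumenting client code:

```python
import sqlite3
import time
from collections import Counter, defaultdict

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE orders (region TEXT, amount REAL);
INSERT INTO orders VALUES ('NA', 100.0), ('EU', 80.0), ('NA', 20.0);
""")

usage = Counter()            # how often each metric is requested
latency = defaultdict(list)  # elapsed seconds per request, by metric

# Hypothetical metric definitions exposed by the semantic layer.
METRICS = {
    "total_sales": "SELECT SUM(amount) FROM orders",
    "sales_by_region": "SELECT region, SUM(amount) FROM orders GROUP BY region",
}


def run_metric(name):
    """Execute a metric query while recording usage and latency."""
    start = time.perf_counter()
    rows = conn.execute(METRICS[name]).fetchall()
    latency[name].append(time.perf_counter() - start)
    usage[name] += 1
    return rows


for _ in range(3):
    run_metric("total_sales")
run_metric("sales_by_region")

print(usage.most_common(1))  # [('total_sales', 3)]
for name, samples in latency.items():
    print(f"{name}: {len(samples)} runs, worst {max(samples) * 1000:.3f} ms")
```

High-demand metrics surfaced this way are natural candidates for acceleration, and consistently slow ones point to models that need rework.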

Continuous monitoring and iteration also help the organization adapt to changing business needs, incorporate new data sources, and improve the usability and reliability of the semantic layer over time. This ensures that the layer remains a valuable, evolving asset for analytics, AI, and decision-making.

Get started with a data lake semantic layer from Dremio

Dremio offers a modern, high-performance approach to building a data lake semantic layer, enabling organizations to unlock insights directly from their data lakes without the complexity of moving or reshaping data. By providing a business-friendly abstraction over datasets, Dremio ensures consistent metrics, trusted definitions, and seamless access for analysts, BI tools, and AI agents. Dremio’s architecture supports scalability, high concurrency, and optimized query performance, making it an ideal choice for enterprises looking to unify analytics across multiple domains.

With Dremio, business users can explore data independently while IT maintains governance and control, empowering self-service analytics at scale. Autonomous performance features like Dremio Reflections accelerate queries automatically, reducing wait times and improving productivity. By combining semantic modeling with performance optimization and user-friendly access, Dremio positions itself as a superior solution for organizations seeking a reliable, scalable, and fully governed semantic layer.

Key Features:

- Dremio Reflections: Automatic query acceleration for faster analytics.

- Self-Service Data Access: Business users can explore and analyze data without complex SQL.

- Unified Semantic Layer: Standardized metrics and definitions across all data sources.

- Role-Based Governance: Secure, compliant access controls at scale.

- Seamless BI and AI Integration: Works with popular analytics and machine learning tools.

Ready to enable self-service analytics across all your data? Check out how organizations are using Dremio today for their open data lakehouse experience.

Other Great Articles Involving Semantic Layers

- Blog: Bringing the Semantic Layer to Life

- Blog: Using dbt to Manage Your Dremio Semantic Layer

- Blog: Overcoming Data Silos: How Dremio Unifies Disparate Data Sources for Seamless Analytics

- Blog: BI Dashboard Acceleration: Cubes, Extracts, and Dremio’s Reflections

- Webinar: Enable No-Copy Data Marts with a Unified Semantic Layer

- Webinar: Unified Access for Your Data Mesh: Self-Service Data with Dremio’s Semantic Layer

- Whitepaper: Best Practices to Deliver Self-Service Analytics

- Whitepaper: Dremio Semantic Layer Best Practices for Cost-Efficient Analytics

- Whitepaper: Dremio Architecture Guide
