If your organization depends on data, you need a place to store it. Not only that — you need the right kind of data storage and management solution for the data you use and produce. Most organizations find that a data warehouse or data lake meets their needs. Many even use both.

What Is a Data Lake?

A data lake is a centralized storage solution for structured, semi-structured, and unstructured data. Virtually any type of data can reside within a data lake, and the lake can scale indefinitely to meet the needs of an enterprise. Because of data lakes’ ability to scale, they often contain enormous quantities — think petabytes — of data. Organizations can store everything from relational data to images to clickstream data inside a data lake. There really aren’t any limits.

In the big data era, data lakes play an increasingly large role in accumulating and managing vast quantities of data. The use of cloud data lakes, in particular, is growing because cloud infrastructure easily fulfills organizations’ need for scale, flexibility, and low-cost data storage. Virtually all sectors of the corporate world, from marketing departments to financial institutions to enterprise tech companies and beyond, now collect large amounts of data from users, customers, and employees. Thanks to the open standards of most data lake environments, data analysts also have access to various tools to run against data stored in the data lake.

The downsides? Since it’s so easy to both accumulate and store data of virtually any type, many data lakes have become “data swamps.” No data lake has to end up this way, but it’s a consequence of the fact that the lake doesn’t have any built-in data governance standards. When storing data in a lake, organizations must take great care to maintain their data in a way that allows data analysts, data scientists, and other users to access and extract value from the data. On their own, data lakes also offer no guarantees for data quality. Data lakes need data management so that organizations can maximize the value of the data stored in the lake.

What Is a Data Warehouse?

A data warehouse is another type of data storage option. In contrast to a data lake, a data warehouse stores structured data. And by structured, we’re talking about “traditional” types of data found in SQL relational databases. Data warehouses have existed for decades and have long been a mainstay for enterprises storing vast quantities of data related to transactions, records, and operational histories. Banks, insurance carriers, brokerage firms, and utilities were storing data in on-premises data warehouses long before the advent of cloud-based data storage.

Since data warehouses support operational reporting, database administrators create schemas that enable the efficient processing of SQL data queries. When your data lives in a data warehouse, you know you’re dealing with predefined schemas with built-in data management. Analysts are used to querying data in data warehouses to find the business insights they’re looking for.

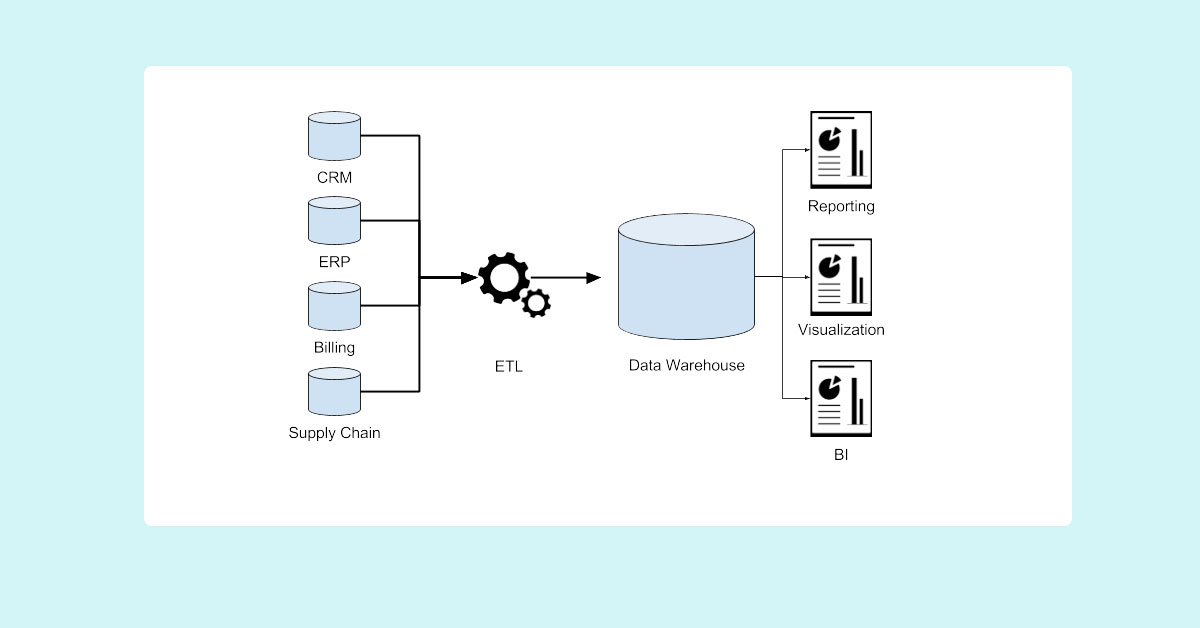

Unfortunately, data warehouses have several drawbacks. For starters, organizations must move and copy data into the warehouse running constant ETL (extract, transform, and load) processes, which is a time-consuming and resource-intensive exercise. Since data sources don’t always match the schema of the data in the warehouse, the ETL process (E) extracts the data from the source system, transforms (T) it into the desired format, and loads (L) it into the warehouse tables.

This practice might have been sustainable in the past. But today, the vast amount of data that enterprises collect makes it practically impossible to copy enough data into data warehouses to satisfy business needs. As a result, organizations often must choose subsets of their data to copy into a data warehouse. This approach can limit data accessibility and create long backlogs when data consumers request new data. In addition, creating copies of data and moving those copies creates additional data pipelines (adding complexity) and increases costs.

Most data lakes utilize low-cost commodity storage or cloud-based object storage, which is far less expensive than most data warehouse infrastructure while offering the benefit of virtually limitless scale.

Data Lake vs. Data Warehouse

Data lakes and data warehouses have some similarities, but organizations have good reasons for choosing one over the other. The largest differences have to do with the types of data your organization needs to manage, how much of it you’re managing, and why.

Data Types

Data warehouses store structured data. Typically, the structured data stored in a data warehouse has already been processed, lives in a relational database, and is accessed via SQL queries. In traditional environments, the structured data found in a data warehouse is typically used for periodic, standardized reports.

An example would be transaction histories for brokerage customers. This type of data will exist in a standard format, display data in rows and columns, and be used for a laundry list of specific reasons: calculating service fees, determining tax obligations, etc. Analysts can query this information to build monthly or quarterly reports and inform business decision-makers.

Data lakes, on the other hand, can store structured, semi-structured, and unstructured data. In addition to relational data, such as transaction histories, a data lake might contain images from a claims adjuster’s site visit, web server logs, or raw text. Nowadays, user log files from Internet of Things (IoT) devices, social media, and websites also reside in data lakes. Basically, if an organization wants to store it for any reason, into the data lake it goes.

Data is stored in a data warehouse in proprietary formats that can only be read by that data warehouse. . To feed new source data into the warehouse, organizations must deploy ETL tools. These tools often run constantly, processing new data such that it’s properly formatted for the relational tables.

The raw data stored in a data lake, by contrast, doesn’t have to follow a predefined structure. In a data lake, the formats are highly diverse, composed of various software frameworks, sources, and file formats. Many organizations choose open source formats like Apache Parquet for files and Apache Iceberg for tables in their data lakes so that they have greater flexibility and control over their data. Unlike data in a data warehouse, data in a data lake can be queried by multiple engines.

Data Volume

Data warehouses tend to be smaller in size than data lakes due in part to the types of data being stored. Typically, a data warehouse will store a smaller quantity of less storage-intensive data — figures inside relational tables don’t take up as much space as clickstreams, high-resolution media, and sensor telemetry. In addition, a data warehouse stores a curated subset of data, while a data lake stores essentially all enterprise data.

While data warehouses often store terabytes (TBs) of data, data lakes commonly house petabytes (PBs) of data. Many may even be home to hundreds of PBs data.

Vendor Lock-In

Enterprises with long-term commitments to data warehouses often have nowhere to go. A significant number of business operations depend on their continued use of the warehouse, their data formats, and the availability of the warehoused data. To migrate to something new would be exorbitant, not to mention extremely disruptive to business.

Data lakes (and cloud data lakes, in particular) enable open architectures. You can run tools against the data and extract insights from various analytics services. As such, the lake doesn’t lock you into a specific vendor, nor does it lock you out of using your data with newer, more powerful tools that come onto the market.

Potential Uses

In most cases, data in a data warehouse is used for generating regular, standardized sets of reports. Earlier, we considered how a data analyst might query transaction histories for clients or groups of clients at a bank or brokerage. Another example might be a water or electric utility that generates quarterly revenue reports vs. expenditures on infrastructure repairs.

The data is carefully curated and is often formatted to comply with regulatory or business mandates for data security and audit purposes.

The potential uses for data in a data lake are far more varied. They include the creation of models for predictive analytics and machine learning that depend on raw data sets. An IoT device manufacturer, for instance, might need to automate device behavior based on the specific actions of users that were tracked by the device. The log of user actions could be sent straight to the data lake, where the device manufacturer could later run queries upon the data to derive insights that inform future improvements to its products.

With a data lake, the relationships between data elements may not be understood before the data is stored. Afterward, however, organizations can deploy any number of tools upon the data to extract value from it.

Types of Users

Business analysts and operational users are most likely to access data stored in a data warehouse. That’s because they’re the users who are most likely to pull reports on specific business questions. When management needs to review a revenue report, for instance, these are the individuals they’ll task with generating the requested data.

Data lakes, on the other hand, are accessible to a wider variety of users. These include data architects, data scientists, analysts, and operational users. In addition to routine operational reports, data analysts will want to (and have the ability to) access source data to gain deeper insights into certain metrics and KPIs beyond the obvious ones that appear in summary reports.

While a data warehouse will have both limited data and limited possibilities for using that data, the data in a data lake lends itself to other types of analysis — predictive modeling, for example. Various types of users will have reasons to access this data. It isn’t the sole domain of those in traditional business analysis roles.

Does a Data Lake Replace a Data Warehouse?

Many enterprises use both data warehouses and data lakes. Data warehouses have been around for decades, and many organizations have made significant investments in them. For that reason, data warehouses are unlikely to disappear soon.

Today, most organizations initially store data in a cloud data lake environment. In some cases, data in the data lake can be queried directly, but in others, data needs to be loaded into a data warehouse. Because the process of transforming and loading data from the data lake into a data warehouse results in data copies, added complexity and increased total cost of ownership (TCO), many organizations are looking to query data lake storage directly to avoid the cost and overhead of managing data warehouses.

Should You Use a Data Lake or a Data Warehouse?

The choice of a data lake or data warehouse often depends on what kind of data you’re storing and how it’s being used. Let’s consider when you should use a data lake vs. a data warehouse.

When to Use a Data Lake

Data lakes should be considered when:

- The types of data that need to be stored are not known in advance

- Data types do not easily fit a tabular or relational model

- Datasets are either very large or are growing fast enough that cost of storage is a concern

- Relationships between data elements are not understood in advance

- Applications include data exploration, predictive analytics and machine learning where it is valuable to have complete, raw datasets

Use Cases for Data Lakes

Data lakes are great for answering questions that involve vast amounts of data from various sources. Here are some examples:

- Exploring customer order data together with raw chat, email, reviews, and customer support transcriptions to identify customer experience issues impeding sales.

- For a mobile communication company, developing a new “opt-in” mobile service where businesses can receive anonymized notifications when a customer is in physical proximity to their location.

- Compiling a dataset of web and OS-level logs, order details, and support interactions to train a machine learning model to quantify the probability of fraud before a transaction completes.

Data lakes are necessary for any organization engaged in data exploration, machine learning, and artificial intelligence initiatives. These projects require huge volumes of data and aren’t suited for data warehouses.

Traditionally, data lakes have required specialized skills and specific programming languages in order to work with the data stored in them. But today, companies like Dremio are upending those traditional limitations, making it possible for data analysts to run familiar SQL queries directly against data stored in the data lake.

When to Use a Data Warehouse

Data warehouses still serve a purpose for industries that are heavily regulated and need to store their data in a warehouse or enterprises that only make decisions based on standardized reports. When your primary objective is to gain business insights from structured data — data that lives within the parameters of proprietary organizational schema — the warehouse may make the most sense.

Organizations have historically used a data warehouse when:

- The data that needs to be stored is known in advance, and organizations are comfortable discarding additional data or creating duplicates

- Data formats are relatively static, and not expected to change with time

- Organizations run standard sets of reports requiring fast, efficient queries

- Results need to be drawn from accurate, carefully curated data

- Regulatory or business requirements dictate special handling of data for security or audit purposes

Use Cases for Data Warehouses

Pretend you’re a database administrator at a financial institution. You’re periodically asked to pull transactional histories for use in quarterly meetings. These transactions might relate to revenue within a given time period; they might have to do with expenditures; they could even relate to customer service performance (think the number of cases marked “resolved” within a quarter). For these types of reports — standardized, periodic, formulaic — submitting an SQL query to a relational database is standard practice across many enterprises.

Other examples of data warehouse use cases include:

- Running a consolidated quarterly report on e-commerce return rates by store and sales item to rank top customer satisfaction issues

- Presenting an accounting dashboard that shows days sales outstanding (DSO) by branch office to assess accounts receivable for the quarter

- For all sales regions, ranking SKUs based on average sales margin and inventory turnover to identify poorly performing sales items

Is a Data Lake Cheaper Than a Data Warehouse?

Generally speaking, a data lake is less expensive than a data warehouse. The cost of storing data in a cloud data lake has decreased to the point where an enterprise can essentially store an infinite amount of data.

On-premises data warehouses can be expensive to set up and maintain. Compared to on-premises, cloud data warehouses may be less expensive to set up. But organizations can quickly find themselves spending more than they intended when the costs of moving and copying data into and out of the cloud data warehouse for analysis are factored in.

Conclusion

From cybersecurity to life sciences to marketing departments to IoT and beyond, there’s an ever-growing need to access vast quantities of data for BI purposes. With more, higher quality data and more sophisticated tools for processing and using it, organizations can innovate, increase their competitive advantage, and grow. For this reason, data lakes have become essential in so many business environments. Organizations continue to use data warehouses for BI and analytics. However, advances in data lake query technologies can help enterprises offload expensive analytic processes from data warehouses at their own pace.