Browse All Blog Articles

-

Dremio Blog: Partnerships Unveiled

PuppyGraph Sponsors the Subsurface Lakehouse Conference

PuppyGraph's sponsorship underlines our dedication to empowering individuals and organizations with knowledge and tools to navigate and excel in the evolving data landscape. Through its innovative platform and active participation in the community, PuppyGraph continues to lead the way in advancing graph analytics and data lakehouse technologies, making the complexities of big data more accessible and manageable.

-

Dremio Blog: Partnerships Unveiled

Upsolver Sponsors the Subsurface Lakehouse Conference

By sponsoring the Subsurface Conference, we aim to connect with the community, share insights, and explore the future of data lakehouses together. Join us at the conference to witness the evolution of data management and take your first step towards an optimized data future with Apache Iceberg and Upsolver.

-

Dremio Blog: Product Insights

Deep Dive into Better Stability with the new Memory Arbiter

Tim Hurski, Prashanth Badari, Sonal Chavan, Dexin Zhu and Dmitry Chirkov

-

Dremio Blog: Product Insights

What’s new in Dremio, Delivering Market Leading Performance for Apache Iceberg Data Lakehouses

Dremio's version 25 is not just an update; it's a transformative upgrade that redefines the standards for SQL query performance in lakehouse environments. By intelligently optimizing query processing and introducing user-friendly features for data management, Dremio empowers organizations to harness the full potential of their data, driving insightful business decisions and achieving faster time-to-value. With these advancements, Dremio continues to solidify its position as a leader in the field of data analytics, offering solutions that are not only powerful but also practical and cost-effective.

-

Dremio Blog: Product Insights

What’s New in Dremio, Improved Administration and Monitoring with Integrated Observability

Dremio version 25 represents a significant leap forward in making lakehouse analytics more accessible and manageable. With its enhanced monitoring capabilities, seamless third-party integrations, and a suite of additional features, Dremio is setting a new industry standard for ease of administration. These improvements streamline the monitoring process and empower administrators to proactively manage their environments, ensuring that Dremio continues to be an optimal choice for companies seeking advanced, user-friendly analytics solutions.

-

Dremio Blog: Product Insights

What’s New in Dremio, Setting New Standards in Query Stability and Durability

Dremio's version 25 is not just an incremental update; it's a transformative release that redefines stability and durability in SQL analytics. By introducing sophisticated memory management, spillable hash joins, and a proactive memory arbiter, Dremio ensures businesses can rely on its engine for their most critical and complex data workloads.

-

Dremio Blog: Product Insights

What’s New in Dremio, Improved Data Ingestion and Migration into Apache Iceberg

With version 25, Dremio redefines the analytics landscape for Apache Iceberg, offering a robust, efficient, and user-friendly platform. By enhancing its native support for Iceberg and integrating features like real-time data ingestion and simplified migration, Dremio empowers organizations to harness the full potential of their data, enabling insightful analytics and informed decision-making in a modern data environment.

-

Dremio Blog: Partnerships Unveiled

Streaming and Batch Data Lakehouses with Apache Iceberg, Dremio and Upsolver

Dremio serves as a bridge, connecting data from diverse sources and making it accessible across various platforms. In tandem with Upsolver, we can establish continuous data pipelines that ingest streaming data from sources like Apache Kafka or Amazon Kinesis, depositing this data into Iceberg tables within our AWS Glue catalog. This setup empowers Dremio to perform real-time analytics, creating a potent synergy that yields impactful outcomes.

-

Dremio Blog: Various Insights

Advancing the Capabilities of the Premier Data Lakehouse Platform for Apache Iceberg

With the latest release of Dremio, 25.0, we are helping accelerate the adoption and benefits of Apache Iceberg, while bringing your users closer to the data with lakehouse flexibility, scalability and performance at a fraction of the cost. We are excited to announce some of the new features that improve scalability, manageability, ease of use […]

-

Dremio Blog: Open Data Insights

Dremio’s Commitment to being the Ideal Platform for Apache Iceberg Data Lakehouses

Dremio's unwavering commitment to Apache Iceberg is not merely a strategic choice but a reflection of our vision to create an open, flexible, and high-performing data ecosystem. Our deep integration with Apache Iceberg throughout the entire stack complements Dremio's extensive functionality, empowering users to document, organize, and govern their data across diverse sources, including data lakes, data warehouses, relational databases and NoSQL tables. This synergy forms the bedrock of our open platform philosophy, facilitating seamless data accessibility and distribution across the organization.

-

Dremio Blog: News Highlights

Stay alert for fraudulent recruitment attempts that falsely claim association with Dremio

At Dremio, we uphold trust and transparency as paramount values in all our interactions with customers, partners, employees, and the general public. With these principles in mind, we feel it's crucial to alert you to a concerning trend involving fraudulent job offers falsely attributed to Dremio and its affiliated entities. Scammers are exploiting our name, […]

-

Dremio Blog: Various Insights

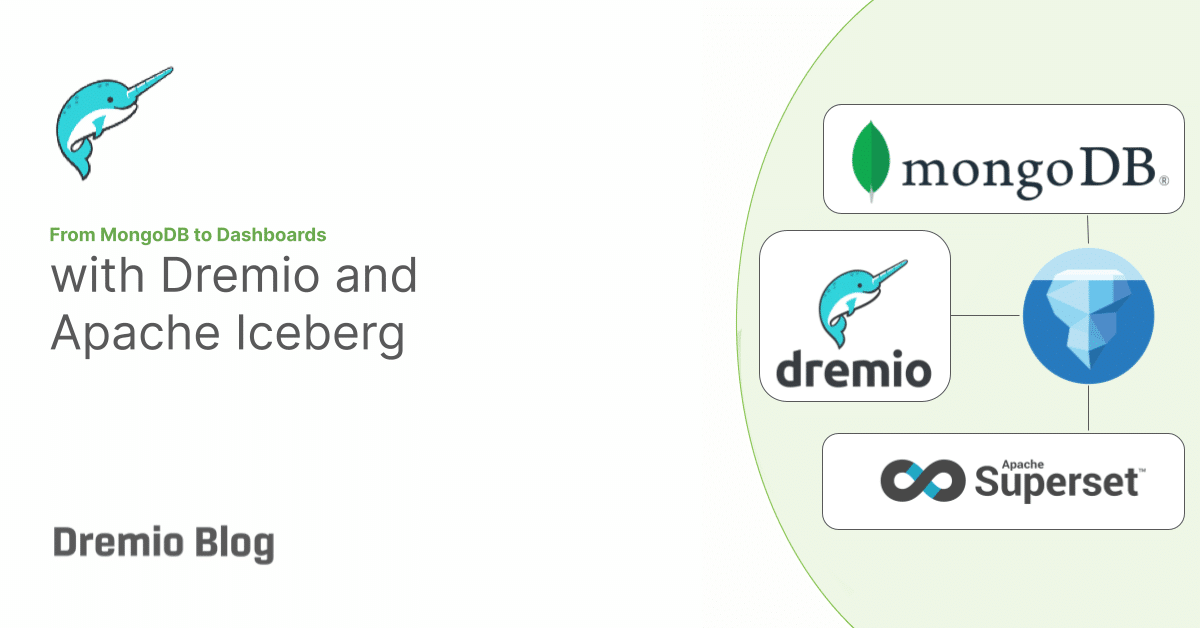

From MongoDB to Dashboards with Dremio and Apache Iceberg

Dremio enables serving BI dashboards directly from MongoDB or leveraging Apache Iceberg tables in your data lake. This post will explore how Dremio's data lakehouse platform simplifies your data delivery for business intelligence by building a prototype that can run on your laptop.

-

Dremio Blog: Product Insights

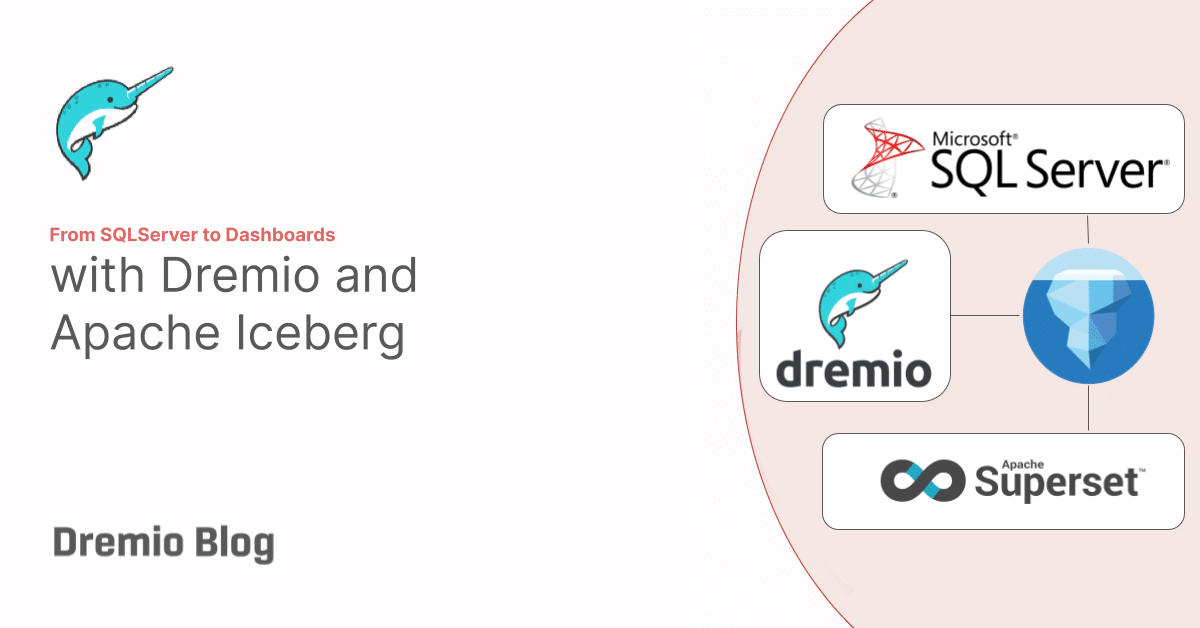

From SQLServer to Dashboards with Dremio and Apache Iceberg

Dremio enables serving BI dashboards directly from SQL Server or leveraging Apache Iceberg tables in your data lake. This post will explore how Dremio's data lakehouse platform simplifies your data delivery for business intelligence by building a prototype that can run on your laptop.

-

Dremio Blog: Product Insights

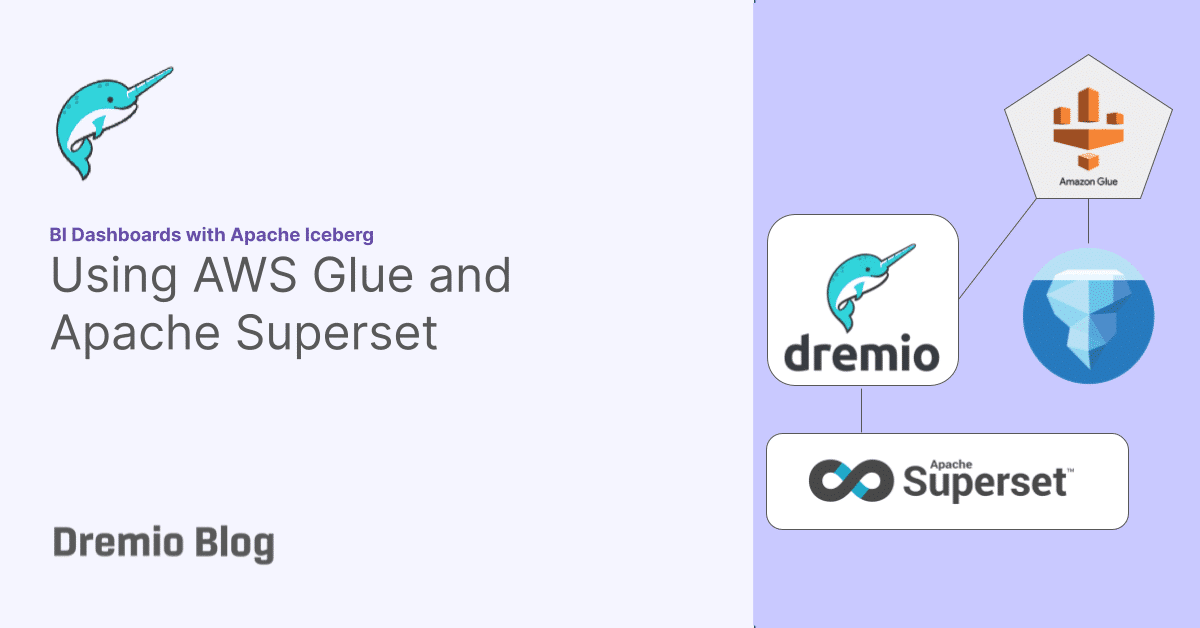

BI Dashboards with Apache Iceberg Using AWS Glue and Apache Superset

Business Intelligence (BI) dashboards are invaluable tools that aggregate, visualize, and analyze data to provide actionable insights and support data-driven decision-making. Serving these dashboards directly from the data lake, especially with technologies like Apache Iceberg, offers immense benefits, including real-time data access, cost-efficiency, and the elimination of data silos. Dremio as a data lakehouse platform, […]

-

Dremio Blog: Product Insights

From Postgres to Dashboards with Dremio and Apache Iceberg

Moving data from source systems like Postgres to a dashboard traditionally involves a multi-step process: transferring data to a data lake, moving it into a data warehouse, and then building BI extracts and cubes for acceleration. This process can be tedious and costly. However, this entire workflow is simplified with Dremio, the Data Lakehouse Platform.
