Tutorials

Learn how to get started using dbt (data build tool) on Dremio’s Open Data Lakehouse by following along with this tutorial. In this video, you will learn how to install dbt Core along with a preview of the latest dbt on Dremio connector plugin, initialize a new project, run an example dbt model, and then publish your […]

To sign up for your free account, go to the Get Started page. Next, choose whether you want to create an account using the North American or European Dremio control plane. Enter your email address, click “Sign Up for a Dremio Organization”, and create a new account with your email, or use Google, Microsoft, or GitHub […]

Note: AWS Glue now natively supports Apache Iceberg when you add the --datalake-formats job parameter to the Glue job. Read more here. For more on implementing a data lakehouse with Dremio + AWS, check out this joint post on the AWS Partner Network blog. Adding the Dremio and Iceberg Advantage to Data Lakes Accept the […]

There are several issues with this approach, and they are exacerbated by the tens, hundreds, or even thousands of dashboards used in an organization. With all of these downsides, why is it such a common architecture for dashboards? The primary reason is that we were limited by the capabilities of the tools […]

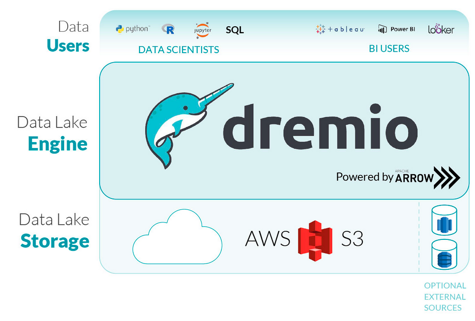

Dremio AWS Edition, a production-grade, high-scale data lake engine highly optimized for AWS, delivers cost savings and makes it easy to deploy a Dremio cluster in AWS. Learn more about Dremio AWS Edition and check out the onboarding videos to get started. Whether you are a current user of Dremio AWS Edition, […]

Dremio 4.6 adds a new level of versatility and power to your cloud data lake by integrating directly with AWS Glue as a data source. AWS Glue is a combination of capabilities similar to an Apache Spark serverless ETL environment and an Apache Hive external metastore. Many users find it easy to cleanse and load […]

Now take a look at the function generate_metrics(), which generates random data. You want to generate information about the following metrics and send it to Kinesis: requests, newly registered users, new orders, and user churn over some period of time. The metrics are generated randomly, but they are all sent to Kinesis as comma-separated […]
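The excerpt does not show the body of generate_metrics(). As a minimal sketch only, assuming one record per second and arbitrary value ranges (both are assumptions, not the tutorial's code), a function of that name could build the comma-separated lines like this:

```python
import random
import time
from typing import List, Optional


def generate_metrics(n_records: int,
                     seed: Optional[int] = None,
                     start_ts: Optional[int] = None) -> List[str]:
    """Hypothetical sketch: return n_records CSV lines of the form
    timestamp,requests,new_users,new_orders,churned_users.

    The value ranges below are illustrative assumptions.
    """
    rng = random.Random(seed)  # seeded RNG so runs can be reproduced
    start = int(time.time()) if start_ts is None else start_ts
    records = []
    for i in range(n_records):
        values = [
            start + i,               # one record per second (assumption)
            rng.randint(100, 1000),  # requests
            rng.randint(0, 50),      # newly registered users
            rng.randint(0, 100),     # new orders
            rng.randint(0, 10),      # churned users
        ]
        records.append(",".join(str(v) for v in values))
    return records


if __name__ == "__main__":
    for line in generate_metrics(3, seed=42):
        print(line)
```

Each line could then be sent to a stream, for example with boto3's Kinesis client `put_record(StreamName=..., Data=line.encode(), PartitionKey=...)`; the stream name and partition key are left to your setup.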

Parallel projects are multi-tenant instances of Dremio where you get a service-like cluster experience with end-to-end lifecycle automation across deployment, configuration with best practices, and upgrades, all running in your own AWS account. Every time you launch a new project, it comes with all the best practices already set up for you. In this […]

Introducing Elastic Engines. In this article, we walk you through the steps to provision and manage Elastic Engines, and we also show you how to manage workloads using queues and rules. Step 2. You will see a default engine already deployed. Now click Add New. Step 3. The Set Up Engine popup […]

In my previous blog post, Next-Gen Data Analytics – Open Data Architecture, I discussed how the data architecture landscape has changed lately. The vast amount of data and the speed at which it is being generated make it almost impossible to handle using traditional data storage and processing approaches. For a successful data lake to […]

Now we have a query prepared and ready for a parameter to be added.

Enterprises often need to work with data stored in different places, and because of the variety of data being produced and stored, it is almost impossible to use SQL to query all these data sources. These two things represent a great challenge for the data science and BI community. Prior to working on the […]

Amazon Web Services (AWS) is a cloud services platform with extensive functionality. AWS provides solutions for databases, storage, data management and analytics, computing, security, AI, and more. Among its database and storage offerings are Amazon Redshift and Amazon S3. Amazon Redshift is one of the leading data warehouses. It is designed […]

Modern businesses generate, store, and use huge amounts of data. Often, the data is stored in different data sources. Moreover, many data users are comfortable interacting with data using SQL, while many data sources don’t support SQL. For example, you may have data inside a data lake or NoSQL database like MongoDB, or even […]
